A Modular Approach to the Analysis and Evaluation of Particle Filters for Figure Tracking


Project description

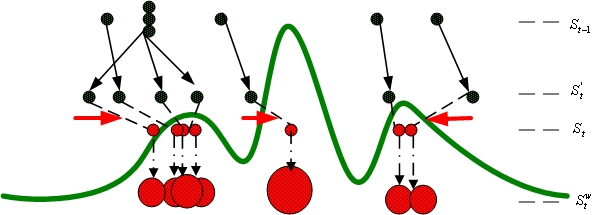

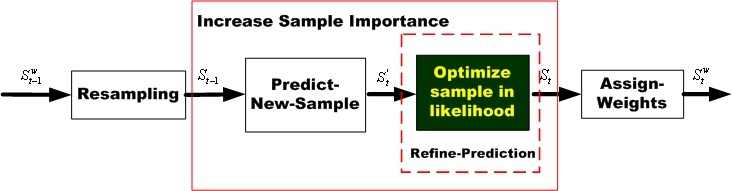

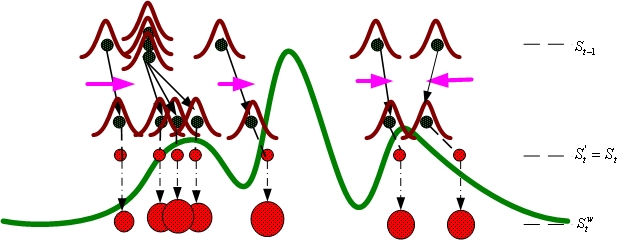

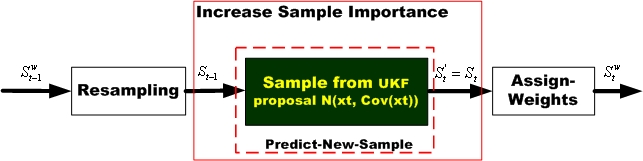

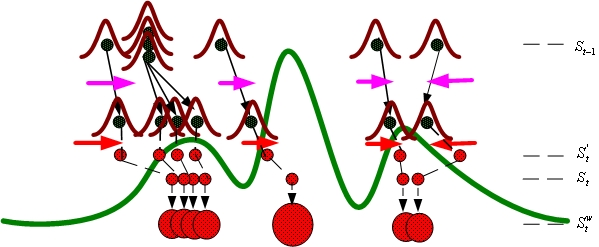

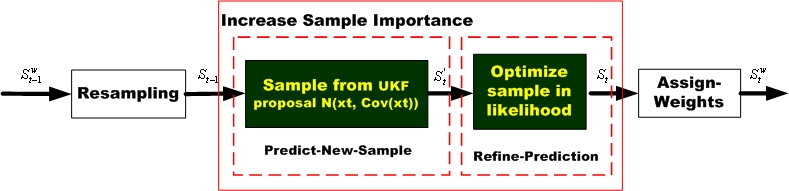

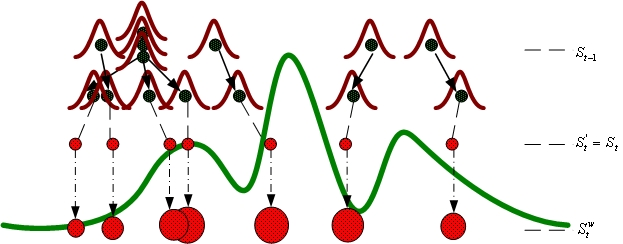

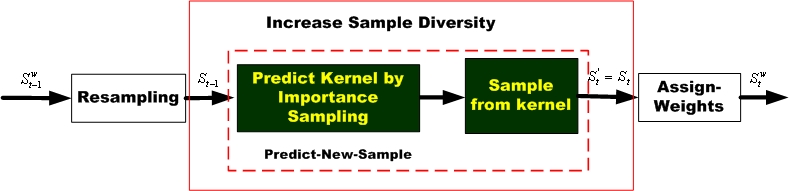

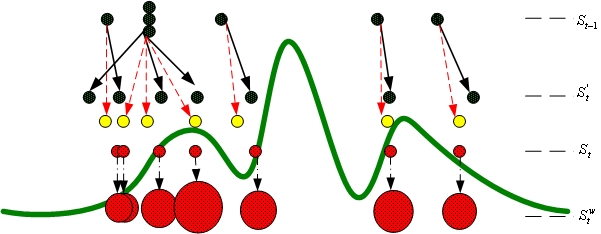

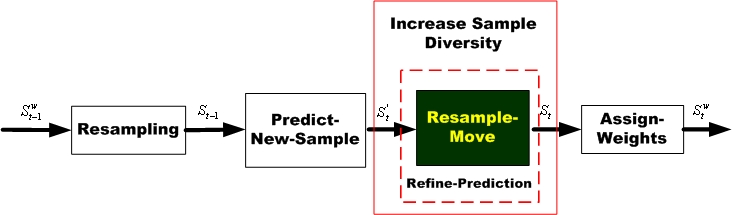

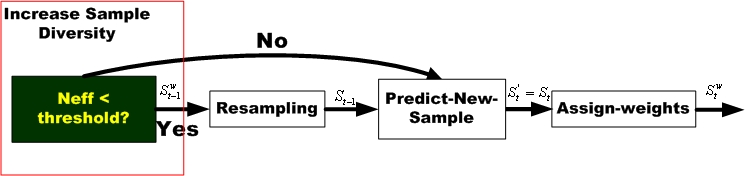

This work presents the first systematic empirical study of particle filter (PF) algorithms for human figure tracking in video. Our analysis and evaluation follow a modular approach based on the underlying statistical principles and computational concerns that govern the performance of PF algorithms. Based on this analysis, we propose a novel PF algorithm for figure tracking, the Optimized Unscented PF, which achieves superior performance. We examine the role of edge and template features, introduce computationally equivalent sample sets, and describe a method for the automatic acquisition of reference data using standard motion capture hardware. The software and test data are made publicly available on our project website.
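For readers new to particle filters, the predict/weight/resample cycle that all PF variants share can be sketched as follows. This is a minimal, generic bootstrap filter on a one-dimensional state, not the Optimized Unscented PF and not the project's figure tracking code. The function name `bootstrap_pf_step`, the random-walk dynamics, the Gaussian likelihood, and the parameters `process_std` and `obs_std` are all assumptions chosen for illustration.

```python
import math
import random


def bootstrap_pf_step(particles, observation, rng, process_std=0.5, obs_std=1.0):
    """One cycle of a generic bootstrap particle filter on a 1D state.

    Illustrative assumptions: random-walk dynamics and a Gaussian
    observation likelihood. A figure tracker would instead use a
    high-dimensional pose state and an image-based likelihood.
    """
    # Predict: propagate each particle through the (assumed) dynamics.
    predicted = [x + rng.gauss(0.0, process_std) for x in particles]

    # Weight: score each particle against the observation.
    weights = [
        math.exp(-0.5 * ((observation - x) / obs_std) ** 2) for x in predicted
    ]
    total = sum(weights)
    if total == 0.0:
        # All likelihoods underflowed; fall back to uniform weights.
        weights = [1.0 / len(predicted)] * len(predicted)
    else:
        weights = [w / total for w in weights]

    # Estimate: weighted mean of the predicted particles.
    estimate = sum(w * x for w, x in zip(weights, predicted))

    # Resample: draw a new equally weighted set in proportion to the weights.
    resampled = rng.choices(predicted, weights=weights, k=len(predicted))
    return resampled, estimate


if __name__ == "__main__":
    rng = random.Random(0)
    particles = [rng.uniform(-10.0, 10.0) for _ in range(500)]
    for _ in range(20):
        particles, estimate = bootstrap_pf_step(particles, 3.0, rng)
    print(round(estimate, 2))
```

The modular view taken in this work corresponds to varying the individual stages of such a loop (for example, how samples are proposed and how they are weighted) while holding the rest fixed.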

Modular analysis of selected Particle Filters


Video data and associated properties


Dancing clips 0, 1, 2 -- Foreshortening, self-occlusion, rotation, fast movement, cluttered/moving background, lighting change

Walking (viewing angle=45) -- Foreshortening, self-occlusion

Jumping jacks -- Small foreshortening

Ground truth

Joint centers -- Joint center positions of the 2D figure in every frame. Available for dancing clips 1 and 2, walking, and jumping jacks.

States -- State inferred from the joint center ground truth. Available for dancing clips 1 and 2, walking, and jumping jacks.
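As a rough illustration of how state values might be inferred from joint centers, the sketch below recovers the image-plane angle and projected length of a single link from its two joint centers. The state parameterization used in this project is not described on this page, so the function `link_state` and the angle/length representation are assumptions made purely for illustration.

```python
import math


def link_state(proximal, distal):
    """Return (angle, length) of a 2D link from its two joint centers.

    Hypothetical parameterization: angle is measured in radians from the
    image x-axis, and length is the projected link length in pixels.
    """
    dx = distal[0] - proximal[0]
    dy = distal[1] - proximal[1]
    return math.atan2(dy, dx), math.hypot(dx, dy)


if __name__ == "__main__":
    # Example with made-up joint centers for a single link.
    angle, length = link_state((0.0, 0.0), (3.0, 4.0))
    print(angle, length)
```

Under foreshortening, the projected length shrinks as the link rotates out of the image plane, which is one reason a length term can be informative in a 2D figure model.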

Software architecture and Code

Figure tracking code: patch-based, edge-based

Documentation for single-hypothesis figure tracking, by Alton Patrick

Space-Time acquisition system

Link to Matt Flagg's work!

Publication


A Modular Approach to the Analysis and Evaluation of Particle Filters for Figure Tracking, Ping Wang and James M. Rehg, IEEE Computer Society Conference on Computer Vision and Pattern Recognition - CVPR'06, Volume 1, pp. 790-797, New York, June 2006.

Acknowledgements

The authors thank Matthew Flagg and Young Ki Ryu for building the mocap system, and Alton Patrick for the figure tracking code. This work was supported by NSF Award IIS-0133779.

Contact

pingwang at gatech.edu

Copyright

The downloadable publications, data and software on this web site are presented to ensure timely dissemination of scholarly and technical work. Copyright and all rights therein are retained by authors or by other copyright holders. All persons copying this information are expected to adhere to the terms and constraints invoked by each author's copyright. These works may not be reposted without the explicit permission of the copyright holder.

Last updated on 10/05/06