Perceptual User Interfaces using Vision-based Eye Trackers

Ravikrishna Ruddarraju, Antonio Haro, Irfan A. Essa

Publications

1. R. Ruddarraju, A. Haro, I. Essa, Fast Multiple Camera Head Pose Tracking, In Proceedings, Vision Interface 2003, Halifax, Canada.

2. R. Ruddarraju, A. Haro, K. Nagel, Q. Tran, I. Essa, G. Abowd, E. Mynatt, "Perceptual User Interfaces using Vision-Based Eye Tracking," In Proceedings of the Fifth International Conference on Multimodal Interfaces (ICMI-PUI'03), Nov. 5-7, 2003 (in conjunction with ACM UIST 2003), ACM Press, Vancouver, B.C., Canada. (To Appear)

1. Introduction

Head pose and eye gaze are valuable cues in face-to-face interaction between people. Such information is also important for computer systems that a person intends to interact with: awareness of eye gaze tells the system that the user is looking at it, and this context supports more effective interaction with the user.

In this paper, we present a real-time vision-based eye tracking system that robustly tracks a user's eyes and head movements. Our system uses robust eye tracking data from multiple cameras to estimate 3D head orientation via triangulation. Multiple cameras also afford a larger tracking volume than is possible with an individual sensor, which is valuable in an attentive environment. Each individual eye tracker exploits the red-eye effect, using an infrared lighting source, to track eyes robustly. Although our system relies on infrared light, it can still track reliably in environments saturated with infrared light, such as a residential living room that receives a lot of sunlight.
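To illustrate the red-eye cue, the following is a minimal sketch of bright-pupil/dark-pupil differencing in the spirit of the multiple-light-source approach of [14]. It assumes the camera delivers one frame lit by an on-axis infrared source (pupils appear bright) and one lit by an off-axis source (pupils appear dark); the function `pupil_candidates`, the difference threshold, and the minimum blob area are hypothetical. It is not the authors' tracker, which also uses appearance and dynamics [10].

```python
from collections import deque

import numpy as np


def pupil_candidates(bright, dark, threshold=40, min_area=4):
    """Return (row, col) centroids of candidate pupils.

    bright: frame lit by the on-axis IR source (pupils appear bright)
    dark:   frame lit by the off-axis IR source (pupils appear dark)
    threshold, min_area: hypothetical tuning parameters
    """
    # Most of the scene is lit similarly in both frames, so it largely cancels;
    # pupils are much brighter in `bright` than in `dark`.
    diff = bright.astype(np.int32) - dark.astype(np.int32)
    mask = diff > threshold
    visited = np.zeros(mask.shape, dtype=bool)
    rows, cols = mask.shape
    centroids = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r, c] or visited[r, c]:
                continue
            # Flood-fill one 4-connected blob of above-threshold pixels.
            queue = deque([(r, c)])
            visited[r, c] = True
            pixels = []
            while queue:
                y, x = queue.popleft()
                pixels.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny, nx] and not visited[ny, nx]:
                        visited[ny, nx] = True
                        queue.append((ny, nx))
            # Discard tiny blobs, which are usually noise.
            if len(pixels) >= min_area:
                ys, xs = zip(*pixels)
                centroids.append((float(np.mean(ys)), float(np.mean(xs))))
    return centroids


# Synthetic example: two 3x3 "pupils" that are bright only under on-axis light.
dark = np.full((20, 30), 50, dtype=np.uint8)
bright = dark.copy()
bright[4:7, 5:8] = 200
bright[4:7, 20:23] = 200
print(pupil_candidates(bright, dark))  # [(5.0, 6.0), (5.0, 21.0)]
```

In a scene flooded with ambient infrared light, regions other than the pupils can also survive this difference, which is why candidates still need to be verified by appearance.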

We also present experiments and quantitative results that demonstrate the robustness of our eye tracking in two application prototypes: the Family Intercom [16] and the Cook's Collage [19]. In the Family Intercom application, eye gaze estimation is used to infer whether an elderly parent wishes to communicate with remote family members. In the Cook's Collage application, eye gaze estimates are used to assist a user while cooking in a kitchen and to evaluate the effectiveness of the Collage's user interface. In both cases, our tracking system works well in real-world environments with varying lighting conditions, while keeping the user interaction unobtrusive yet engaging.

2. Our Vision System

Figure 1. Program Flow

Our system uses IBM BlueEyes infrared lighting cameras (courtesy of IBM Research). These cameras serve as sensors for our eye tracking algorithm, and the eyes tracked in each camera are used together to estimate the user's head pose and eye gaze direction. The estimated head pose is used to approximate the user's eye gaze and thus determine whether the user is looking at a previously defined region of interest, which the prototype applications use to interact further with the user.
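The paper does not state how a region of interest is represented. As a hedged sketch, assume each region is an axis-aligned box in the calibrated world frame and that gaze is a ray starting between the eyes; the standard slab test then reports which region, if any, the ray hits first. The function name, box representation, and example coordinates are hypothetical.

```python
import numpy as np


def looked_at_region(origin, direction, regions):
    """Return the name of the nearest region hit by the gaze ray, or None.

    origin:    3D point between the eyes (same units as the region boxes)
    direction: 3D gaze or head direction (need not be unit length)
    regions:   dict name -> (min_corner, max_corner) of an axis-aligned box
    """
    o = np.asarray(origin, dtype=float)
    d = np.asarray(direction, dtype=float)
    best_name, best_t = None, np.inf
    for name, (box_min, box_max) in regions.items():
        lo = np.asarray(box_min, dtype=float)
        hi = np.asarray(box_max, dtype=float)
        t_near, t_far = 0.0, np.inf  # only points in front of the user count
        hit = True
        for axis in range(3):
            if abs(d[axis]) < 1e-12:
                # Ray parallel to this pair of planes: origin must lie between them.
                if not (lo[axis] <= o[axis] <= hi[axis]):
                    hit = False
                    break
                continue
            t1 = (lo[axis] - o[axis]) / d[axis]
            t2 = (hi[axis] - o[axis]) / d[axis]
            t_near = max(t_near, min(t1, t2))
            t_far = min(t_far, max(t1, t2))
            if t_near > t_far:
                hit = False
                break
        # Keep the region whose entry point is closest to the user.
        if hit and t_near < best_t:
            best_name, best_t = name, t_near
    return best_name


regions = {
    "photos": ((1.0, -0.5, -0.5), (1.2, 0.5, 0.5)),  # hypothetical coordinates
    "tv": ((-1.2, -0.5, -0.5), (-1.0, 0.5, 0.5)),
}
print(looked_at_region((0, 0, 0), (1, 0, 0), regions))  # photos
```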

3. Multi-camera IR-based eye tracking

Figure 2. Feature tracking: two eyes and two mouth corners.

We use several pre-calibrated cameras to estimate a user's head pose. For each camera, we use the tracked eye locations to estimate the positions of the mouth corners. The two eyes and two mouth corners are then used as low-level features shared across all cameras to estimate the user's 3D head pose. We combine stereo triangulation, noise reduction via interpolation, and a camera-switching metric that selects the best subset of cameras, so that tracking remains accurate as the user moves their head within the tracking volume. Further details of our head pose estimation can be found in [5].
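The triangulation step can be illustrated with standard linear (DLT) triangulation, which recovers one 3D point from its image positions in two or more calibrated cameras. This is a generic sketch, not the method of [5]: it assumes each camera's 3x4 projection matrix is known from calibration, and the helper `head_axes`, which turns the four triangulated features into a head-centered coordinate frame, is a hypothetical illustration of how orientation could follow from them.

```python
import numpy as np


def triangulate(projections, points_2d):
    """Linear (DLT) triangulation of one 3D point from two or more views.

    projections: list of 3x4 camera projection matrices P_i
    points_2d:   list of matching (u, v) image coordinates, one per camera
    """
    rows = []
    for P, (u, v) in zip(projections, points_2d):
        P = np.asarray(P, dtype=float)
        # Each view gives two linear constraints on the homogeneous point X:
        # u * (P[2] . X) - P[0] . X = 0  and  v * (P[2] . X) - P[1] . X = 0
        rows.append(u * P[2] - P[0])
        rows.append(v * P[2] - P[1])
    A = np.vstack(rows)
    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]


def head_axes(left_eye, right_eye, left_mouth, right_mouth):
    """Hypothetical head frame from four triangulated features (rows = axes)."""
    l_eye, r_eye, l_mouth, r_mouth = (
        np.asarray(p, dtype=float) for p in (left_eye, right_eye, left_mouth, right_mouth)
    )
    x = r_eye - l_eye                                  # across the face
    x /= np.linalg.norm(x)
    up = (l_eye + r_eye) / 2 - (l_mouth + r_mouth) / 2  # mouth center to eye center
    up -= up.dot(x) * x                                # make it orthogonal to x
    up /= np.linalg.norm(up)
    normal = np.cross(x, up)  # perpendicular to the face plane; sign depends on conventions
    return np.vstack([x, up, normal])
```

With noisy detections the SVD yields a least-squares estimate, so each extra well-placed view constrains the point further, while a view with bad detections pulls it off.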

Figure 3. Multiple camera setup

Multiple cameras provide both a large tracking volume and 3D head pose information. However, as a user moves within the tracking volume, their eyes may no longer be visible from some cameras. Our system detects when a user's head is moving away from a camera and estimates the 3D pose more accurately from a different subset of cameras that excludes it. Cameras with only a partial view of the face have larger eye-tracking errors, because the tracked regions no longer look (or possibly move) like eyes. Removing these cameras from the 3D pose calculation is important because, with only a small number of cameras, the pose estimate is sensitive to noise. In practice, camera subsets are switched infrequently, but switching is necessary at certain head angles to avoid incorrect pose estimates.
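The camera-switching metric itself is described in [5] and is not reproduced here. The sketch below uses a hypothetical stand-in rule: a camera is kept if the angle between the face normal and the direction from the head to that camera is small, with a fallback to the two most frontal cameras so that triangulation remains possible. The angle threshold and function name are assumptions.

```python
import numpy as np


def select_cameras(head_position, face_normal, camera_centers,
                   max_angle_deg=45.0, min_cameras=2):
    """Hypothetical switching rule: keep cameras that see the face roughly frontally.

    Returns camera indices, most frontal first.
    """
    p = np.asarray(head_position, dtype=float)
    n = np.asarray(face_normal, dtype=float)
    n = n / np.linalg.norm(n)
    angles = []
    for i, c in enumerate(camera_centers):
        to_cam = np.asarray(c, dtype=float) - p
        to_cam /= np.linalg.norm(to_cam)
        # Angle between the face normal and the head-to-camera direction.
        cos_a = np.clip(n.dot(to_cam), -1.0, 1.0)
        angles.append((float(np.degrees(np.arccos(cos_a))), i))
    angles.sort()
    chosen = [i for angle, i in angles if angle <= max_angle_deg]
    # Triangulation needs at least two views; fall back to the most frontal ones.
    if len(chosen) < min_cameras:
        chosen = [i for _, i in angles[:min_cameras]]
    return chosen
```

Using a fallback rather than an empty set reflects the point above: dropping a poor view helps, but the pose still has to be computed from something.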

4. Dealing with Infrared Saturation

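Fisher's linear discriminant has previously been used to compensate for varying lighting conditions to improve face recognition [7]. Strong ambient infrared light, such as sunlight, can make regions other than the pupils appear bright, so eye candidates must also be verified by their appearance. We use Fisher's linear discriminant to compute a classification score for candidate eyes versus non-eyes and have found that it classifies our data better than PCA.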
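A two-class Fisher linear discriminant projects each candidate onto the direction that best separates eye and non-eye samples relative to their within-class spread. The following minimal sketch assumes candidates are flattened infrared image patches; the function names, the ridge term, and the midpoint threshold are assumptions, not the authors' settings.

```python
import numpy as np


def fit_fisher(eyes, non_eyes, reg=1e-6):
    """Two-class Fisher linear discriminant.

    eyes, non_eyes: arrays of shape (n_samples, n_features), e.g. flattened patches.
    Returns (w, threshold) so that w @ x > threshold classifies x as an eye.
    """
    eyes = np.asarray(eyes, dtype=float)
    non_eyes = np.asarray(non_eyes, dtype=float)
    mu1, mu0 = eyes.mean(axis=0), non_eyes.mean(axis=0)
    # Within-class scatter: sum of the two classes' scatter matrices.
    s_w = (eyes - mu1).T @ (eyes - mu1) + (non_eyes - mu0).T @ (non_eyes - mu0)
    s_w += reg * np.eye(s_w.shape[0])  # small ridge keeps S_w invertible
    # Fisher direction: w proportional to S_w^{-1} (mu1 - mu0).
    w = np.linalg.solve(s_w, mu1 - mu0)
    # Decision threshold midway between the projected class means.
    threshold = 0.5 * (w @ mu1 + w @ mu0)
    return w, threshold


def fisher_score(w, threshold, patch):
    """Signed score: positive means 'eye', negative means 'non-eye'."""
    return float(w @ np.ravel(patch) - threshold)
```

Unlike PCA, which keeps the directions of largest overall variance regardless of class, this projection is chosen using the eye/non-eye labels.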

Figure 4. Tracking using Fisher's linear discriminant versus classification using PCA.

5. Application: Family Intercom

The Family Intercom project investigates context-aware family communication between homes [16]. The intent is to provide interfaces that help a person decide whether or not to initiate a proposed conversation. The user-gaze interface shown in Figure 6 gives the caller feedback to help them determine whether it would be appropriate to initiate a voice conversation.

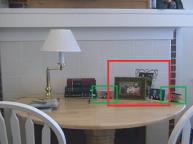

Figure 5. Family intercom setup

In one home, the vision-based eye tracking system tracks user gaze towards a collection of framed family photographs. Figure 5 shows the eye trackers set up on a common household table in an elderly parent's home. In the second home, the remote panel is based on the Digital Family Portrait [15] and displays a portrait of the family member in the first home together with a qualitative estimate of their activity. Figure 6b shows the interface at a remote family member's house. When a family member notices the digital portrait, they simply touch it to open a connection.

Figure 6. Family Intercom interface with eye tracking data from the vision system

The visual gaze tracker conveys statistics of the callee's gaze towards the family pictures to the caller, which supports the appropriate social protocol for initiating a conversation between the users.
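The paper does not specify which gaze statistics are sent to the caller. As a hedged sketch, assume the tracker produces one boolean per frame (gaze on the photographs or not); a sliding window can then summarize how often, and how recently, the callee has been looking. The class name, window length, and the two statistics chosen are hypothetical.

```python
from collections import deque


class GazeStatistics:
    """Hypothetical running summary of how often the callee looks at the photos.

    Keeps the last `window` per-frame booleans (True = gaze on the photographs).
    """

    def __init__(self, window):
        self.frames = deque(maxlen=window)  # oldest frames drop out automatically

    def update(self, looking_at_photos):
        self.frames.append(bool(looking_at_photos))

    def fraction_looking(self):
        """Share of frames in the window with gaze on the photos."""
        return sum(self.frames) / len(self.frames) if self.frames else 0.0

    def current_streak(self):
        """Number of most recent consecutive frames with gaze on the photos."""
        streak = 0
        for looking in reversed(self.frames):
            if not looking:
                break
            streak += 1
        return streak
```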

6. Application: Cook's Collage

The Cook's Collage application explores how to support retrospective memory, such as keeping a record of past events, using a household cooking scenario [19]. Our system estimates user attention in three regions: the Collage's display, the recipe, and the cooking area.
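One simple way to map a head direction to one of these three regions is nearest-angle classification with a rejection threshold. This sketch assumes the user's head stays roughly in place, so each region can be summarized by a fixed direction from the head to its center; the region directions, the threshold, and the "other" label are hypothetical.

```python
import numpy as np


def classify_attention(head_direction, region_directions, max_angle_deg=20.0):
    """Assign a head direction to the angularly closest region, or 'other'.

    region_directions: dict name -> direction from the user's head to the region center.
    """
    d = np.asarray(head_direction, dtype=float)
    d = d / np.linalg.norm(d)
    best, best_angle = "other", max_angle_deg  # reject anything beyond the threshold
    for name, r in region_directions.items():
        r = np.asarray(r, dtype=float)
        r = r / np.linalg.norm(r)
        angle = float(np.degrees(np.arccos(np.clip(d.dot(r), -1.0, 1.0))))
        if angle <= best_angle:
            best, best_angle = name, angle
    return best


regions = {"display": (1, 0, 0), "recipe": (0, 1, 0), "cooking area": (0, 0, 1)}
print(classify_attention((1, 0.1, 0), regions))  # display
```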

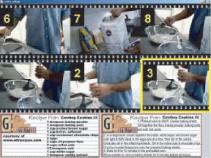

Figure 7. Cook's Collage setup

Currently, we use the head pose data to evaluate the usability of the Collage's display. The Cook's Collage expects the cook to refer to its display for additional cooking prompts every time they return to cooking after an interruption. If the cook ignores the display after an interruption, the user interface may need further improvement or the display may need to be placed better.
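The usability check described above can be phrased as a query over per-frame attention labels. In this hedged sketch, an interruption is assumed to be a run of at least `min_away` frames labelled "away", and the display counts as consulted if it is looked at within `window` frames after the cook resumes; both thresholds, the function name, and the "away" label are hypothetical.

```python
def checked_display_after_interruptions(labels, min_away=30, window=90):
    """For each interruption, report whether the cook looked at the display soon after.

    labels: per-frame attention labels, e.g. "display", "recipe", "cooking area", "away".
    Returns a list of (resume_frame, checked) pairs.
    """
    results = []
    i, n = 0, len(labels)
    while i < n:
        if labels[i] != "away":
            i += 1
            continue
        start = i
        while i < n and labels[i] == "away":
            i += 1
        # i is now the first frame after the run of "away" frames (or n).
        if i - start >= min_away and i < n:
            checked = "display" in labels[i:i + window]
            results.append((i, checked))
    return results
```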

Figure 8. Collage display

7. Results

Our subject pool consists of 4 subjects for the Family Intercom and 4 for the Cook's Collage.

For the Family Intercom experiment, a subject performs a regular daily activity, such as reading or eating, while sitting near a table holding the subject's family pictures. A separate 15-minute sequence consisting of 225 frames is recorded throughout the experiment to capture ground truth for verification. The video is hand labeled with the region viewed by the user, and these hand-labeled frames are compared with the regions estimated by the head pose tracking system. Accuracy is the percentage of frames for which the system's estimate matches the hand label.
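The accuracy measure is a per-frame comparison between hand labels and estimated regions. The sketch below computes it and averages per-subject percentages; the function names are hypothetical. The averages reported in Tables 1 and 2 (87.25% and 81.25%) equal the unweighted means of the per-subject values.

```python
def frame_accuracy(ground_truth, estimated):
    """Percentage of frames whose estimated region matches the hand label."""
    if not ground_truth or len(ground_truth) != len(estimated):
        raise ValueError("label sequences must be non-empty and of equal length")
    correct = sum(g == e for g, e in zip(ground_truth, estimated))
    return 100.0 * correct / len(ground_truth)


def mean_accuracy(per_subject):
    """Unweighted mean of per-subject accuracies."""
    return sum(per_subject) / len(per_subject)


print(mean_accuracy([87, 88, 90, 84]))  # 87.25 (Family Intercom)
print(mean_accuracy([81, 84, 78, 82]))  # 81.25 (Cook's Collage)
```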

For the Cook's Collage experiment, subjects were asked to cook a recipe provided to them, and a video was again recorded to capture ground truth. Because this experiment is shorter than the Family Intercom experiment, a 10-minute sequence was used for comparison instead. This choice is also motivated by the user being more actively involved in the cooking experiment, which provides more head poses for comparison.

| Subject | Correct estimate |
|---|---|
| Subject 1 | 87% |
| Subject 2 | 88% |
| Subject 3 | 90% |
| Subject 4 | 84% |
| Average | 87.25% |

Table 1: Statistics of estimated eye contact for the Family Intercom.

| Subject | Correct estimate |
|---|---|
| Subject 1 | 81% |
| Subject 2 | 84% |
| Subject 3 | 78% |
| Subject 4 | 82% |
| Average | 81.25% |

Table 2: Statistics of estimated eye contact for the Cook's Collage.

References

[1] A. Aaltonen, A. Hyrskykari, and K. Räihä. 101 spots, or how do users read menus? In Human Factors in Computing Systems: CHI 98, pages 132-139, New York, 1998. ACM Press.

[2] A. Allport. Visual Attention. MIT Press, 1993.

[3] M. Argyle. Social Interaction. Methuen & Co., London, England, 1969.

[4] M. Argyle and M. Cook. Gaze and Mutual Gaze. Cambridge University Press, Cambridge, UK, 1976.

[5] R. Ruddarraju, A. Haro, and I. Essa. Fast multiple camera head pose tracking. In Proceedings of Vision Interface 2003, Halifax, Canada, 2003.

[6] P. Barber and D. Legge. Perception and Information. Methuen & Co., London, England, 1976.

[7] P. Belhumeur, J. Hespanha, and D. Kriegman. Eigenfaces vs. Fisherfaces: Recognition using class specific linear projection. IEEE Transactions on Pattern Analysis and Machine Intelligence, volume 19, July 1997.

[8] M. La Cascia, S. Sclaroff, and V. Athitsos. Fast reliable head tracking under varying illumination: An approach based on robust registration of texture mapped 3-D models. IEEE Transactions on Pattern Analysis and Machine Intelligence, 2000.

[9] J. H. Goldberg and J. C. Schryver. Eye-gaze determination of user intent at computer interface. Elsevier Science Publishing, New York, New York, 1995.

[10] A. Haro, M. Flickner, and I. Essa. Detecting and tracking eyes by using their physiological properties, dynamics, and appearance. In IEEE Computer Vision and Pattern Recognition, pages 163-168, 2000.

[11] M. Harville, A. Rahimi, T. Darrell, G. Gordon, and J. Woodfill. 3-D pose tracking with linear depth and brightness constraints. In International Conference on Computer Vision, 1999.

[12] B. Jabarin, J. Wu, R. Vertegaal, and L. Grigorov. Establishing remote conversations through eye contact with physical awareness proxies. In Extended Abstracts of ACM CHI, 2003.

[13] Y. Matsumoto, T. Ogasawara, and A. Zelinsky. Behavior recognition based on head pose and gaze direction measurement. In IEEE International Conference on Intelligent Robots and Systems, 2000.

[14] C. H. Morimoto, D. Koons, A. Amir, and M. Flickner. Pupil detection and tracking using multiple light sources. Technical Report RJ-10117, IBM Almaden Research Center, 1998.

[15] E. Mynatt, J. Rowan, and A. Jacobs. Digital family portraits: Providing peace of mind for extended family members. In ACM CHI, 2001.

[16] K. Nagel, C. Kidd, T. O'Connell, S. Patil, and G. Abowd. The family intercom: Developing a context-aware audio communication system. In Ubicomp, 2001.

[17] A. Schoedl, A. Haro, and I. Essa. Head tracking using a textured polygonal model. In Proceedings of the Workshop on Perceptual User Interfaces, 1998.

[18] R. Stiefelhagen. Tracking focus of attention in meetings. In International Conference on Multi-Modal Interfaces, 2002.

[19] Q. Tran and E. Mynatt. Cook's collage: Two exploratory designs. In CHI 2002, Conference Proceedings, 2002.

[20] A. L. Yarbus. Eye Movements during Perception of Complex Objects. Plenum Press, New York, New York, 1967.