CS 7476 Advanced Computer Vision

Spring 2024, TR 12:30 to 1:45, Molecular Sciences and Engineering G011

Instructor: James Hays

TA: Akshay Krishnan

Course Description

This course covers advanced research topics in computer vision. Building on the introductory material in CS 4476/6476 (Computer Vision), this class will ground graduate students in both the theoretical foundations of computer vision and the practical approaches to building real computer vision systems. We will examine data sources and learning methods useful for understanding and manipulating visual data. This year, special emphasis will be placed on research at the intersection of computer vision and robotics. Class topics will be pursued through independent reading, class discussion and presentations, and research projects. The goal of this course is to give students the background and skills necessary to perform research in computer vision and its application domains, such as robotics, VR/AR, healthcare, and graphics. Students should understand the strengths and weaknesses of current approaches to research problems and be able to identify interesting open questions and future research directions. Students will hopefully also improve their critical reading and communication skills.

Course Requirements

Reading and Discussion Topics

Students will be expected to read one paper for each class. For each assigned paper, students must identify at least one question or topic of interest for class discussion. Interesting topics for discussion could relate to the strengths and weaknesses of the paper, possible future directions, connections to other research, uncertainty about the conclusions of the experiments, etc. Questions and discussion topics must be posted to the Discussions tab of the course Canvas page by 11:59pm the day before each class. Feel free to reply to other comments and help each other understand confusing aspects of the papers. The Canvas discussion will be the starting point for the class discussion. If you are presenting, you don't need to post a question to Canvas.

Class participation

Attendance is required for this course. All students are expected to take part in class discussions. If you do not fully understand a paper, that is OK; we can work through the unclear aspects of a paper together in class. If you are unable to attend a specific class, please let me know ahead of time. Don't come to class if you are sick.

Presentation(s)

Each student will lead the presentation of one paper during the semester (possibly as part of a pair of students). Ideally, presenters will implement some aspect of the presented material and perform experiments that help us understand the algorithms. Presentations and all supplemental material should be ready one week before the presentation date so that presenters can meet with the instructor, go over the presentation, and possibly iterate before the in-class discussion. For the presentations, it is fine to use slides and code from outside sources (for example, the paper authors), but be sure to give credit.

Semester group projects

Students will work alone or in pairs to complete a state-of-the-art research project on a topic relevant to the course. Students will propose a research topic early in the semester. After a project topic is finalized, students will meet occasionally with the instructor or TA to discuss progress. Students will report their progress on their semester project twice during the course, and the course will end with final project presentations. Students will also produce a conference-formatted write-up of their project. Projects will be published on this web page. The ideal project has a clear enough direction to be completed in a couple of months and enough novelty that it could be published in a peer-reviewed venue with some refinement and extension.

Prerequisites

Strong mathematical skills (linear algebra, calculus, probability, and statistics) are needed. It is strongly recommended that students have taken one of the following courses (or equivalent courses at other institutions):

- Computer Vision CS 4476 / 6476

- Machine Learning

- Deep Learning

- Computational Photography

Textbook

We will not rely on a textbook, although the free online textbook "Computer Vision: Algorithms and Applications, 2nd edition" by Richard Szeliski is a helpful resource.

Grading

Your final grade will be made up of:

- 20% Reading summaries and questions posted to Canvas

- 10% Classroom participation and attendance

- 15% Leading discussion for a particular research paper

- 20% Semester project updates

- 35% Final semester project presentation and writeup

Office Hours

James Hays: immediately after lecture on Tuesdays

Akshay Krishnan: Mondays, 2:30 to 4:30 pm at Coda

Tentative Schedule

| Date | Paper | Link | Presenter |
| --- | --- | --- | --- |
| Tue, Jan 9 | Course overview, paper scheduling | | James |
| Thu, Jan 11 | [ViT] An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. Alexey Dosovitskiy, Lucas Beyer, Alexander Kolesnikov, Dirk Weissenborn, Xiaohua Zhai, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer, Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, Neil Houlsby | arXiv | James |
| Tue, Jan 16 | [CLIP] Learning Transferable Visual Models From Natural Language Supervision. Alec Radford, Jong Wook Kim, Chris Hallacy, Aditya Ramesh, Gabriel Goh, Sandhini Agarwal, Girish Sastry, Amanda Askell, Pamela Mishkin, Jack Clark, Gretchen Krueger, Ilya Sutskever | arXiv | James |
| Thu, Jan 18 | [MAE] Masked Autoencoders Are Scalable Vision Learners. Kaiming He, Xinlei Chen, Saining Xie, Yanghao Li, Piotr Dollar, Ross Girshick | arXiv | James |
| Tue, Jan 23 | [SimCLR] A Simple Framework for Contrastive Learning of Visual Representations. Ting Chen, Simon Kornblith, Mohammad Norouzi, Geoffrey Hinton | arXiv | Vivek |
| Thu, Jan 25 | The Curious Robot: Learning Visual Representations via Physical Interactions. Lerrel Pinto, Dhiraj Gandhi, Yuanfeng Han, Yong-Lae Park, Abhinav Gupta | arXiv | Ethan |
| Tue, Jan 30 | Making Sense of Vision and Touch: Self-Supervised Learning of Multimodal Representations for Contact-Rich Tasks. Michelle A. Lee, Yuke Zhu, Krishnan Srinivasan, Parth Shah, Silvio Savarese, Li Fei-Fei, Animesh Garg, Jeannette Bohg | arXiv | Bowen |
| Thu, Feb 1 | [ConvNeXt] A ConvNet for the 2020s. Zhuang Liu, Hanzi Mao, Chao-Yuan Wu, Christoph Feichtenhofer, Trevor Darrell, Saining Xie | arXiv | Kevin and Saba |
| | ConvNeXt V2: Co-designing and Scaling ConvNets with Masked Autoencoders. Sanghyun Woo, Shoubhik Debnath, Ronghang Hu, Xinlei Chen, Zhuang Liu, In So Kweon, Saining Xie | arXiv | Kevin and Saba |
| | ConvNets Match Vision Transformers at Scale. Samuel L. Smith, Andrew Brock, Leonard Berrada, Soham De | arXiv | Kevin and Saba |
| Tue, Feb 6 | SENTRY: Selective Entropy Optimization via Committee Consistency for Unsupervised Domain Adaptation. Viraj Prabhu, Shivam Khare, Deeksha Kartik, Judy Hoffman | arXiv | Ziyan |
| Thu, Feb 8 | Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. Ze Liu, Yutong Lin, Yue Cao, Han Hu, Yixuan Wei, Zheng Zhang, Stephen Lin, Baining Guo | arXiv | Katherine |
| Tue, Feb 13 | [DINO] Emerging Properties in Self-Supervised Vision Transformers. Mathilde Caron, Hugo Touvron, Ishan Misra, Herve Jegou, Julien Mairal, Piotr Bojanowski, Armand Joulin | arXiv | Abhijith and Huili |
| | DINOv2: Learning Robust Visual Features without Supervision. Oquab et al. | arXiv | Abhijith and Huili |
| Thu, Feb 15 | The Unsurprising Effectiveness of Pre-Trained Vision Models for Control. Simone Parisi, Aravind Rajeswaran, Senthil Purushwalkam, Abhinav Gupta | arXiv | Manthan |
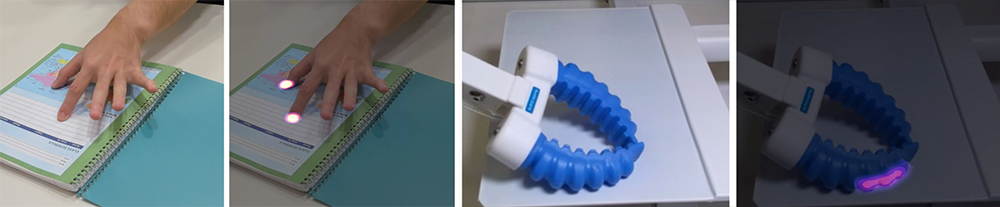

| Tue, Feb 20 | PressureVision: Estimating Hand Pressure from a Single RGB Image. Patrick Grady, Chengcheng Tang, Samarth Brahmbhatt, Christopher D. Twigg, Chengde Wan, James Hays, Charles C. Kemp | arXiv | Gabriela |
| Thu, Feb 22 | Omni3D: A Large Benchmark and Model for 3D Object Detection in the Wild. Garrick Brazil, Abhinav Kumar, Julian Straub, Nikhila Ravi, Justin Johnson, Georgia Gkioxari | arXiv | Haotian |
| Tue, Feb 27 | High-Resolution Image Synthesis with Latent Diffusion Models. Robin Rombach, Andreas Blattmann, Dominik Lorenz, Patrick Esser, Bjorn Ommer | arXiv | Dipanwita |
| Thu, Feb 29 | RT-1: Robotics Transformer for Real-World Control at Scale. Brohan et al. | arXiv | Dhruv |
| | RT-2: Vision-Language-Action Models Transfer Web Knowledge to Robotic Control. Brohan et al. | arXiv | Dhruv |
| Tue, Mar 5 | [ControlNet] Adding Conditional Control to Text-to-Image Diffusion Models. Lvmin Zhang, Anyi Rao, Maneesh Agrawala | arXiv | Avinash |
| Thu, Mar 7 | TerrainNet: Visual Modeling of Complex Terrain for High-speed, Off-road Navigation. Xiangyun Meng, Nathan Hatch, Alexander Lambert, Anqi Li, Nolan Wagener, Matthew Schmittle, JoonHo Lee, Wentao Yuan, Zoey Chen, Samuel Deng, Greg Okopal, Dieter Fox, Byron Boots, Amirreza Shaban | arXiv | Changxuan |
| Tue, Mar 12 | OneFormer: One Transformer to Rule Universal Image Segmentation. Jitesh Jain, Jiachen Li, MangTik Chiu, Ali Hassani, Nikita Orlov, Humphrey Shi | arXiv | Kartik |
| Thu, Mar 14 | Segment Anything. Alexander Kirillov, Eric Mintun, Nikhila Ravi, Hanzi Mao, Chloe Rolland, Laura Gustafson, Tete Xiao, Spencer Whitehead, Alexander C. Berg, Wan-Yen Lo, Piotr Dollar, Ross Girshick | arXiv | Prateek |
| Tue, Mar 19 | No class, Institute holiday | | |
| Thu, Mar 21 | No class, Institute holiday | | |
| Tue, Mar 26 | Robot Learning with Sensorimotor Pre-training. Ilija Radosavovic, Baifeng Shi, Letian Fu, Ken Goldberg, Trevor Darrell, Jitendra Malik | arXiv | TBD |
| Thu, Mar 28 | NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, Ren Ng | arXiv | Zulfiqar and Kshitij |
| Tue, Apr 2 | 3D Gaussian Splatting for Real-Time Radiance Field Rendering. Bernhard Kerbl, Georgios Kopanas, Thomas Leimkuhler, George Drettakis | arXiv | Pranay |
| Thu, Apr 4 | Distilled Feature Fields Enable Few-Shot Language-Guided Manipulation. William Shen, Ge Yang, Alan Yu, Jansen Wong, Leslie Pack Kaelbling, Phillip Isola | arXiv | Jesse |
| Tue, Apr 9 | Lifelong Robot Learning with Human Assisted Language Planners. Meenal Parakh, Alisha Fong, Anthony Simeonov, Tao Chen, Abhishek Gupta, Pulkit Agrawal | arXiv | Marcus and Mohamed |
| Thu, Apr 11 | The Un-Kidnappable Robot: Acoustic Localization of Sneaking People. Mengyu Yang, Patrick Grady, Samarth Brahmbhatt, Arun Balajee Vasudevan, Charles C. Kemp, James Hays | arXiv | Yilong |
| Tue, Apr 16 | Learning to See Physical Properties with Active Sensing Motor Policies. Gabriel B. Margolis, Xiang Fu, Yandong Ji, Pulkit Agrawal | arXiv | Desiree |
| Thu, Apr 18 | Sequential Modeling Enables Scalable Learning for Large Vision Models. Yutong Bai, Xinyang Geng, Karttikeya Mangalam, Amir Bar, Alan Yuille, Trevor Darrell, Jitendra Malik, Alexei A Efros | arXiv | Manushree and Mehrdad |
| Tue, Apr 23 | AutoRT: Embodied Foundation Models for Large Scale Orchestration of Robotic Agents. Ahn et al. | Project page | Navya and Isha |
| Thu, May 2, 11:20 to 2:10 (final exam slot) | Final project presentations | | Everyone |
| Sat, May 4 | Final report due | | Everyone |