- What is the sequence of transformations that must be composed to create the simple perspective viewing transformation matrix? Write out the sequence of transformations symbolically (don’t write out the matrices, but rather define each matrix using words). For example, one of the transformations is “Mper”, the perspective matrix. Make sure you clearly define what each matrix does.

- How would you derive the required rotation matrix in your sequence from the inputs P1, P2, and P3?

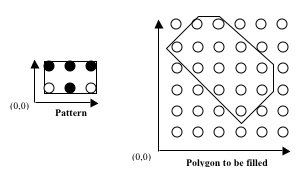

- As discussed in class and in the text, it is useful to think of the last of the steps required to transform the visible viewing volume into the canonical parallel volume (referred to as Mper above) as two separate steps (Mper1 and Mper2). Write out these two matrices, labelling any constants you use on the supplied diagram.

Mper = Mper2 * Mper1

Mper1 =

Mper2 =