Project 1: Image Filtering and Hybrid Images

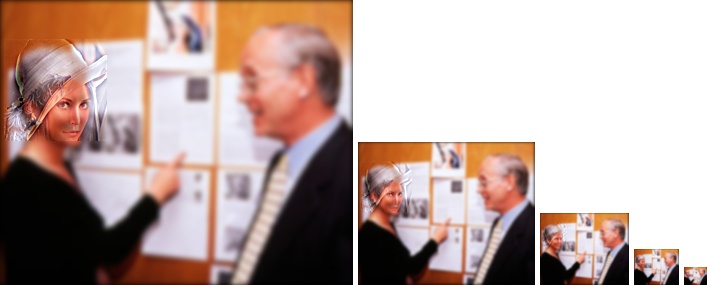

Hybrid image of two images of Lena Soderberg.

A hybrid image is a picture that combines the low spatial frequencies of one picture with the high spatial frequencies of another, producing an image whose interpretation changes with viewing distance (A. Oliva et al., 2006). It relies on the observation that, when they are visible, high-frequency components tend to dominate human visual perception.

We create a hybrid image by filtering image 1 with a lowpass filter (LPF) and image 2 with a highpass filter (HPF), then adding the low-frequency component of image 1 to the high-frequency component of image 2. When the hybrid image is viewed from a moderate (not too far) distance, people perceive it as the object in image 2, because the high frequencies from image 2 are visually dominant. When it is viewed from far away, the image covers a smaller visual angle: its high frequencies become too fine to perceive, and the low frequencies of image 1 shift to higher spatial frequencies on the retina, so image 1 dominates.
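In symbols, with $I_1$ and $I_2$ the two input images, $G_\sigma$ a normalized Gaussian kernel (the lowpass filter used later in this project), and $*$ denoting filtering, the construction is the first line below. The second line is standard viewing geometry rather than something measured here: a pattern with $k$ cycles across an image of width $w$ seen from distance $d$ subtends roughly $w/d$ radians, so its frequency in cycles per degree grows in proportion to $d$.

$$
H = I_1 * G_\sigma + \left(I_2 - I_2 * G_\sigma\right)
$$

$$
f_{\text{retina}} \approx \frac{k}{(w/d)\,(180/\pi)} \ \text{cycles per degree} \;\propto\; d
$$

Doubling the viewing distance therefore doubles every frequency in the image, which pushes the details of $I_2$ past what the eye resolves while moving the content of $I_1$ up into the visible range.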

Implementation

We use a MATLAB script, proj1.m, to filter and combine two images. We also define a MATLAB function, my_imfilter(), that imitates the behavior of imfilter(): given an image and a filter, it computes the filtered image. (imfilter() computes correlation by default, which is identical to convolution for symmetric filters.)

Image filtering

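To apply an h_filter-by-w_filter filter to a single- or multi-channel image, we filter the image channel by channel. For each channel, every output pixel is the sum of the element-wise product of the filter and the filter-sized window of the input centered at that pixel position. (Strictly speaking, this is correlation; convolution would first rotate the filter by 180 degrees, and the two coincide for symmetric filters such as the box and Gaussian filters.) Specifically, in my function, r and c are the row and column coordinates of the output pixel, and image_gray_pad(r:r+h_filter-1, c:c+w_filter-1) is the window of the zero-padded channel centered on the original pixel (r, c):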

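% In my_imfilter.m:
% For each image channel, image_gray is an h_image-by-w_image array of pixel intensities.
output_gray = zeros(h_image, w_image);  % preallocate the filtered channel
for r = 1:h_image
    for c = 1:w_image
        % filter-sized window of the padded channel, centered on original pixel (r, c)
        output_pixel = sum(sum(filter.*image_gray_pad(r:r+h_filter-1, c:c+w_filter-1)));
        output_gray(r, c) = output_pixel;
    end
end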
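Written out, with $F$ the filter and $P$ the zero-padded channel image_gray_pad, the double loop computes the following sum. True convolution would instead index the filter as $F(h_{\text{filter}} - i + 1,\, w_{\text{filter}} - j + 1)$, i.e. use the filter rotated by 180 degrees.

$$
O(r, c) = \sum_{i=1}^{h_{\text{filter}}} \sum_{j=1}^{w_{\text{filter}}} F(i, j)\, P(r + i - 1,\; c + j - 1)
$$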

For pixels at the edges and corners of the original image, the filter window extends beyond the image, so I simply zero-pad the image; this lets the same computation be applied to every pixel. The input channel is therefore padded on the top and bottom with a margin of (h_filter-1)/2 rows and on the left and right with a margin of (w_filter-1)/2 columns, which assumes both filter dimensions are odd:

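% In my_imfilter.m:
% Before the two for loops shown above, zero-pad each image channel.
% h_filter and w_filter are assumed odd, so both margins are integers.
h_pad = (h_filter-1)/2;
w_pad = (w_filter-1)/2;
image_gray_pad = padarray(image_gray, [h_pad, w_pad]);  % pads with zeros on all four sides by default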
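Putting the padding and the loops together, here is a toolbox-free sketch of the whole per-channel procedure. It is not the author's my_imfilter.m: the test data and the names img, kernel, and neighborhood are hypothetical, and manual zero padding stands in for padarray (which needs the Image Processing Toolbox). It cross-checks the loop against conv2 with the kernel rotated by 180 degrees, which is how correlation relates to convolution; an asymmetric Sobel kernel is used so that the check would catch a missing or extra flip.

```matlab
% Sketch: zero-padded, channel-by-channel correlation (hypothetical test data).
img = rand(6, 5, 3);                        % hypothetical 6x5 RGB test image
kernel = [1 2 1; 0 0 0; -1 -2 -1];          % any odd-sized filter; Sobel is asymmetric
[h_filter, w_filter] = size(kernel);
assert(mod(h_filter, 2) == 1 && mod(w_filter, 2) == 1, 'filter sides must be odd');
[h_image, w_image, n_channels] = size(img);
h_pad = (h_filter - 1) / 2;
w_pad = (w_filter - 1) / 2;

output = zeros(h_image, w_image, n_channels);
for ch = 1:n_channels
    % zero-pad this channel by hand instead of calling padarray
    image_gray_pad = zeros(h_image + 2*h_pad, w_image + 2*w_pad);
    image_gray_pad(h_pad+1:h_pad+h_image, w_pad+1:w_pad+w_image) = img(:, :, ch);
    for r = 1:h_image
        for c = 1:w_image
            % window of the padded channel centered on original pixel (r, c)
            neighborhood = image_gray_pad(r:r+h_filter-1, c:c+w_filter-1);
            output(r, c, ch) = sum(sum(kernel .* neighborhood));
        end
    end
end

% Cross-check: correlation equals convolution with the kernel rotated 180 degrees.
reference = zeros(size(img));
for ch = 1:n_channels
    reference(:, :, ch) = conv2(img(:, :, ch), rot90(kernel, 2), 'same');
end
fprintf('max difference from conv2: %g\n', max(abs(output(:) - reference(:))));
```

Replacing kernel with any other odd-sized filter exercises the same code path; the printed difference should stay at floating-point rounding level.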

Example filter results

|

|

|

|

Original image/filtering with identity filter |

Image blurred with a box filter |

Image blurred with a Gaussian filter |

|

|

|

|

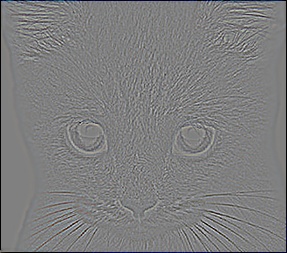

Horizontal edges detected with a Sobel filter |

Highpass filtering with a Laplacian filter |

Highpass filtering by subtracting blurred |
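The six panels correspond to six small kernels. A sketch of typical choices follows; the box size, Gaussian sigma, and kernel radius are assumptions, not the values used for the figure. The Sobel kernel has the same values as fspecial('sobel'), which responds to horizontal edges, and the helper corr2d is a hypothetical name for zero-padded correlation built from base-MATLAB conv2, so no toolbox is needed.

```matlab
% Hypothetical kernels matching the six panels (sizes and sigma are assumptions).
identity_filter  = [0 0 0; 0 1 0; 0 0 0];
box_filter       = ones(3) / 9;                  % 3x3 mean filter
sigma = 2;
half = ceil(2 * sigma);                          % assumed radius: 9x9 Gaussian kernel
[x, y] = meshgrid(-half:half);
gaussian_filter  = exp(-(x.^2 + y.^2) / (2 * sigma^2));
gaussian_filter  = gaussian_filter / sum(gaussian_filter(:));   % normalize to sum 1
sobel_filter     = [1 2 1; 0 0 0; -1 -2 -1];     % vertical gradient, i.e. horizontal edges
laplacian_filter = [0 1 0; 1 -4 1; 0 1 0];       % 4-neighbour Laplacian

% Zero-padded correlation, like imfilter's default behavior.
corr2d = @(k, a) conv2(a, rot90(k, 2), 'same');

img = rand(32, 32);                              % hypothetical grayscale test image
identity_out  = corr2d(identity_filter, img);
box_out       = corr2d(box_filter, img);
gaussian_out  = corr2d(gaussian_filter, img);
sobel_out     = corr2d(sobel_filter, img);
laplacian_out = corr2d(laplacian_filter, img);
highpass_out  = img - gaussian_out;              % "original minus blurred" highpass
```

The lowpass kernels sum to 1 so flat regions keep their brightness, while the Sobel and Laplacian kernels sum to 0 so flat regions map to zero.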

Hybridizing two images

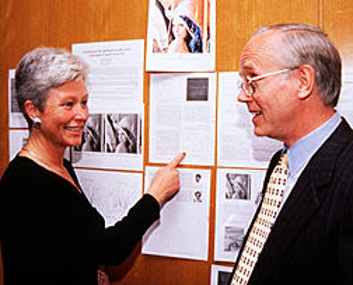

In my project, I combine two pictures of Lena Soderberg: I use the high-frequency component of the famous Lena picture, which is frequently used as a test image in the computer vision community, and the low-frequency component of a more recent picture of her, taken when she was invited to the 1997 conference of the Society for Imaging Science and Technology (IS&T).

The code below creates a Gaussian filter, uses it to remove the high frequencies from the recent picture (image1), and applies a highpass filter to the old picture (image2) by subtracting its blurred version from it.

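Note that when cutoff_frequency is 2.8, ceil(cutoff_frequency*4+1) = ceil(12.2) = 13, an odd side length, so the filter can be centered on a single pixel. The formula does not guarantee an odd size in general; for example, a value of 2.75 gives 12. Despite its name, cutoff_frequency is used here as the standard deviation of the Gaussian in pixels, so a larger value removes more of the high frequencies.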

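% Assumes image1 and image2 are floating-point images (for example, converted with im2single);
% with uint8 images, the subtraction below would clip negative values to zero.
cutoff_frequency = 2.8;  % used as the Gaussian's standard deviation, in pixels
filter = fspecial('gaussian', ceil(cutoff_frequency*4+1), cutoff_frequency);
low_frequencies = imfilter(image1, filter);
high_frequencies = image2 - imfilter(image2, filter);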

The code above produces a blurred version of image1 and a version of image2 that keeps mainly its edges (its high-frequency content).
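fspecial and imfilter both come from the Image Processing Toolbox. As a hedged, toolbox-free sketch, assuming (as the fspecial documentation describes) that its Gaussian is a sampled exp(-(x^2+y^2)/(2*sigma^2)) normalized to sum to 1, the same low/high split can be written with conv2, which also zero-pads. The odd-size guard is an addition that is not in the original script, and random arrays are hypothetical stand-ins for the two photos.

```matlab
% Toolbox-free sketch of the low/high split (image1 and image2 are stand-ins).
cutoff_frequency = 2.8;                        % Gaussian standard deviation, in pixels
hsize = ceil(cutoff_frequency * 4 + 1);        % 13 for 2.8
if mod(hsize, 2) == 0
    hsize = hsize + 1;                         % force an odd side length (added guard)
end
half = (hsize - 1) / 2;
[x, y] = meshgrid(-half:half);
gaussian_filter = exp(-(x.^2 + y.^2) / (2 * cutoff_frequency^2));
gaussian_filter = gaussian_filter / sum(gaussian_filter(:));   % normalize to sum 1

image1 = rand(64, 64, 3);                      % hypothetical floating-point images
image2 = rand(64, 64, 3);
low_frequencies  = zeros(size(image1));
high_frequencies = zeros(size(image2));
for ch = 1:size(image1, 3)
    % the Gaussian is symmetric, so convolution and correlation agree
    low_frequencies(:, :, ch)  = conv2(image1(:, :, ch), gaussian_filter, 'same');
    high_frequencies(:, :, ch) = image2(:, :, ch) - conv2(image2(:, :, ch), gaussian_filter, 'same');
end
hybrid_image = low_frequencies + high_frequencies;   % same-size images: plain sum
```

Because conv2 zero-pads, low_frequencies darkens toward its borders in the same way imfilter's default output does.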

Adding the high-frequency image onto the face region of the low-frequency image gives the hybrid image. Because the original image was zero-padded before filtering, the high-frequency version of image2 has faded edges; to avoid them, I crop 5 pixels from each edge of the high-frequency Lena face before adding it.

hybrid_image = low_frequencies;
% Top-left position (row, column) in the low-frequency image where the face is placed;
% these offsets are hard-coded for this pair of photos.
padwidth_start = 5;
padheight_start = 40;
crop = 5;  % pixels removed from each border of the high-frequency face
high_frequencies_cropped = high_frequencies(crop+1:end-crop, crop+1:end-crop, :);
[height_highfreq, width_highfreq, ~] = size(high_frequencies_cropped);
rows = padheight_start:padheight_start+height_highfreq-1;
cols = padwidth_start:padwidth_start+width_highfreq-1;
hybrid_image(rows, cols, :) = hybrid_image(rows, cols, :) + high_frequencies_cropped;
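Cropping works around the border artifact that zero padding introduces. A different technique, not used in this project, is to pad by replicating the border pixels; imfilter offers this through its 'replicate' option. The toolbox-free sketch below shows the effect on a hypothetical constant image, with an assumed kernel radius of ceil(2*sigma): with zero padding the highpass result is far from zero at the corners, while with replicate padding it stays at zero everywhere.

```matlab
% Zero vs. replicate padding for "image minus blurred" (hypothetical data).
img = 0.5 * ones(20, 20);                      % constant test image: ideal highpass is 0
sigma = 2.8;
half = ceil(2 * sigma);                        % assumed kernel radius
[x, y] = meshgrid(-half:half);
g = exp(-(x.^2 + y.^2) / (2 * sigma^2));
g = g / sum(g(:));                             % normalized Gaussian

% Zero padding: conv2 'same' treats pixels outside the image as 0.
high_zero = img - conv2(img, g, 'same');

% Replicate padding: repeat the first/last row and column 'half' times.
rows = [ones(1, half), 1:size(img, 1), size(img, 1) * ones(1, half)];
cols = [ones(1, half), 1:size(img, 2), size(img, 2) * ones(1, half)];
img_rep = img(rows, cols);
high_rep = img - conv2(img_rep, g, 'valid');   % 'valid' returns the original 20x20 size
```

With real photos, replicate padding does not remove every border effect, but it avoids the strong bright rim that comes from blurring against zeros.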

Here comes the hybrid Lena image! When we view the hybrid image up close (or displayed at a large scale), the high frequencies dominate our vision, so we see the face from the famous Lena picture combined with a background from her recent picture. When we view it from far away (or at a small scale), the high frequencies become too fine to perceive, while the low frequencies shift up into a range the visual system still resolves well and dominate. In this case, the hybrid image simply looks like the recent Lena picture.

Hybrid Lena image at multiple scales.
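Shrinking the displayed image has roughly the same effect as stepping back from it. A minimal sketch of a multi-scale strip like the one above follows, assuming each halving step averages 2x2 blocks and that intensities lie in [0, 1] so ones() pads with white; the original figure may have been made differently (for example with imresize), and the random array is a hypothetical stand-in for hybrid_image.

```matlab
% Build a strip of progressively halved copies, bottom-aligned on white.
hybrid_image = rand(128, 128, 3);              % hypothetical stand-in, values in [0, 1]
num_scales = 4;                                % assumed number of copies
[h, ~, n_channels] = size(hybrid_image);
current = hybrid_image;
multiscale = current;
for s = 2:num_scales
    % trim to even size, then halve by averaging each 2x2 block
    hc = 2 * floor(size(current, 1) / 2);
    wc = 2 * floor(size(current, 2) / 2);
    current = current(1:hc, 1:wc, :);
    current = (current(1:2:end, 1:2:end, :) + current(2:2:end, 1:2:end, :) + ...
               current(1:2:end, 2:2:end, :) + current(2:2:end, 2:2:end, :)) / 4;
    % white padding above the smaller copy so all copies share the full height
    padding = ones(h - size(current, 1), size(current, 2), n_channels);
    multiscale = [multiscale, [padding; current]];
end
```

For a 128x128 input and four scales, the strip is 128 pixels tall and 128 + 64 + 32 + 16 = 240 pixels wide.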

More examples

Some other interesting experiments include a hybrid image that looks like a cat up close (at high resolution) and a dog from a distance, and a hybrid image that looks like Albert Einstein up close and Marilyn Monroe from far away.