Project 1: Image Filtering and Hybrid Images

Images of naturally occurring objects or scenes contain information at different spatial scales. Features associated with a particular spatial scale can be isolated using the concept of spatial frequency. Figure 1 shows an image decomposed into low, mid, and high frequencies. The decomposition shows that low-frequency features include continuous blobs of color, whereas high-frequency features include textures and finer details.

Fig. 1: Frequency decomposition of an image of a cat.
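
A decomposition of this kind can be sketched with Gaussian blurs of two different widths. This is only an illustration, not necessarily how Figure 1 was produced: the synthetic input, the names `sigma_fine` and `sigma_coarse`, and their values are assumptions, and `imgaussfilt` requires the Image Processing Toolbox.

```matlab
% Synthetic grayscale test image in [0, 1] (hypothetical stand-in for a photo)
rng(0);
img = rand(64, 64);

% Assumed Gaussian widths in pixels; a larger sigma keeps only coarser structure
sigma_fine   = 1;
sigma_coarse = 4;

blur_fine   = imgaussfilt(img, sigma_fine);
blur_coarse = imgaussfilt(img, sigma_coarse);

low_band  = blur_coarse;              % smooth blobs of color
mid_band  = blur_fine - blur_coarse;  % intermediate structure
high_band = img - blur_fine;          % textures and fine detail

% The three bands sum back to the input, up to floating-point rounding
recon_err = max(abs(low_band(:) + mid_band(:) + high_band(:) - img(:)));
```

Because each band is a difference of successive blurs, no information is discarded; the bands only separate it by scale.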

The human visual system processes scenes by generating neural representations at multiple scales simultaneously. This ability lets humans maintain awareness of events at multiple scales while focusing on a specific scale. This project examines a method for linear filtering based on convolution and how it can be used to extract pertinent visual information and generate hybrid images.

Image Filtering

Linear filters for image processing redefine each pixel as a linear combination of the pixels in its surrounding neighborhood. The weighting coefficients for the neighboring pixels compose a mask, which is applied at every pixel of the image. Convolution provides an efficient method for applying filters, especially when processed in parallel. An algorithm that applies filters of arbitrary size (with an odd number of cells in each dimension) to color images in MATLAB is presented below. The input image is padded with zeros so that the mask can be applied to boundary pixels.

%% Processing Input
% Determine size of image and filter
size_img = size(img);
size_filt = size(filt);

% Check if color or BW image
if size(img,3) == 3
    num_layers = 3;
else
    num_layers = 1;
end

%% Padding Input Image
filt_center = floor((size_filt+1)/2); % Find center of filter
pad = size_filt - filt_center;        % Calculate pad size
pad_img = padarray(img, pad);         % Pad image with zeros

%% Performing 2D Convolution
filt = rot90(filt, 2);      % Rotate filter by 180 deg
out_img = zeros(size_img);  % Initialize output image
for k = 1:num_layers
    for i = 1:size_img(1)
        for j = 1:size_img(2)
            % Spatial convolution: weighted sum over the neighborhood
            out_img(i,j,k) = sum(sum(pad_img(i:i+size_filt(1)-1, j:j+size_filt(2)-1, k) .* filt));
        end
    end
end
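
One way to sanity-check a padded, flipped-filter loop of this kind is to compare it with MATLAB's built-in `conv2` using the `'same'` option, which also assumes zeros outside the image. The sketch below is self-contained; the random test image, the asymmetric test filter, and the names `ref` and `max_err` are assumptions made for illustration.

```matlab
% Hypothetical test inputs: small random color image and an asymmetric odd-sized filter
rng(0);
img  = rand(6, 7, 3);
filt = [1 2 1; 0 0 0; -1 -2 -1] / 8;

% Zero-pad, flip the filter by 180 degrees, and accumulate weighted sums
pad     = floor(size(filt) / 2);
pad_img = padarray(img, pad);
flipped = rot90(filt, 2);
out_img = zeros(size(img));
for k = 1:size(img, 3)
    for i = 1:size(img, 1)
        for j = 1:size(img, 2)
            patch = pad_img(i:i+size(filt,1)-1, j:j+size(filt,2)-1, k);
            out_img(i, j, k) = sum(sum(patch .* flipped));
        end
    end
end

% Reference result: built-in 2D convolution applied to each channel
ref = zeros(size(img));
for k = 1:size(img, 3)
    ref(:, :, k) = conv2(img(:, :, k), filt, 'same');
end

% Largest absolute difference between the two results
max_err = max(abs(out_img(:) - ref(:)));
```

An asymmetric filter is used on purpose: with a symmetric one, forgetting the 180-degree rotation would go unnoticed.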

The selection of weights and shape for the mask determines which features are filtered. Figure 2 shows a few examples of global linear filters: blurring, edge detection (Sobel), and high pass. Blurring can be achieved by weighting the neighborhood uniformly, and edge detection by taking directional pixel differences.

Fig. 2: Linear filters applied to an image of a dog
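
The three filter types can be written as small masks. The 3x3 kernels below are common textbook choices and are assumptions here, not necessarily the ones used to produce Figure 2; the step-edge test image is likewise hypothetical.

```matlab
% Example 3x3 masks (illustrative choices)
box_blur  = ones(3) / 9;                 % uniform neighborhood average
sobel_x   = [-1 0 1; -2 0 2; -1 0 1];    % horizontal pixel differences
identity  = [0 0 0; 0 1 0; 0 0 0];       % leaves the image unchanged
high_pass = identity - box_blur;         % image minus its blurred copy

% Synthetic test image: dark left half, bright right half (one vertical edge)
img = [zeros(8, 4), ones(8, 4)];

blurred = conv2(img, box_blur,  'same'); % the edge is smeared across columns
edges   = conv2(img, sobel_x,   'same'); % nonzero only near the edge and borders
detail  = conv2(img, high_pass, 'same'); % flat interior regions go to zero
```

The blur weights sum to one, so flat regions keep their brightness, while the Sobel and high-pass weights sum to zero, so flat regions are suppressed and only changes in intensity remain.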

Hybrid Images

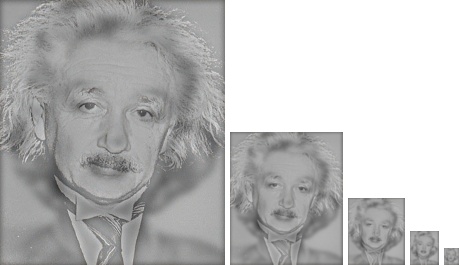

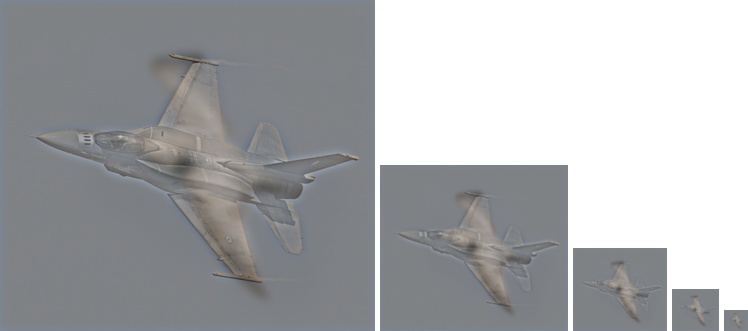

Hybrid images are composed of two different images with complementary frequency spectra, and their interpretation changes with viewing distance. Low-frequency information is more readily perceived at larger distances, while high-frequency information is more readily perceived at smaller distances. The input images must be selected carefully to produce the effect of two interpretations: they should contain objects of similar shape, scale, and alignment. A few examples of hybrid images are presented in Figure 3. The choice of which image supplies the low frequencies and which supplies the high frequencies depends on the characteristics of the features within the images. Images that are highly textured or contain hard edges are better represented at high frequencies, whereas images with softer textures and edges are better represented at low frequencies.

Fig. 3: Examples of hybrid images.
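
The construction described above can be sketched as the sum of a low-pass filtered image and a high-pass filtered image. This is a minimal illustration under stated assumptions: the two random arrays stand in for a pair of aligned photographs, the cutoff `sigma` is an arbitrary value that would normally be tuned per image pair, and `imgaussfilt` requires the Image Processing Toolbox.

```matlab
% Hypothetical stand-ins for two aligned color images with values in [0, 1]
rng(1);
im_low_src  = rand(32, 32, 3);  % image that supplies the low frequencies
im_high_src = rand(32, 32, 3);  % image that supplies the high frequencies

sigma = 3;  % assumed Gaussian cutoff in pixels

% Low frequencies: Gaussian blur of the first image
low_part = imgaussfilt(im_low_src, sigma);

% High frequencies: second image minus its own blurred copy
high_part = im_high_src - imgaussfilt(im_high_src, sigma);

% Combine the two bands and clamp to the displayable range
hybrid = low_part + high_part;
hybrid = min(max(hybrid, 0), 1);
```

Viewing `hybrid` at a reduced size, for example with `imresize(hybrid, 0.25)`, is a convenient way to approximate a larger viewing distance on screen.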