Project 1: Image Filtering and Hybrid Images

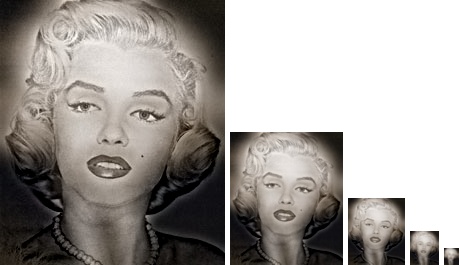

An example hybrid image.

Images, both digital and real, are composed of a number of different frequencies that our eyes and brains interpret on sight. These frequencies can be split into "high" and "low" frequencies. Up close, higher frequencies tend to dominate our vision, while from farther away we tend to interpret only the lower frequencies. By taking advantage of this property of our vision, we can create "hybrid" images that are composed of the high frequencies of one image and the low frequencies of another. From up close (or enlarged), we mostly see the high frequency part. However, as we move farther away (or continuously shrink the image), it starts to look more and more like the low frequency image. The image above is an example hybrid image. The mechanism that allows us to separate the high and low frequencies is known as image filtering, or convolution.

Image filtering is a technique in which each pixel of an image is transformed as a function of the specific filter used and the neighborhood of the pixel. For this project, several different Gaussian filters were used to smoothly blur the image (keeping the low frequencies). The high frequencies of an image were obtained by subtracting these low frequencies from the original image.
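As a minimal sketch of this split, the snippet below blurs a synthetic grayscale image with a Gaussian kernel and subtracts the blur to get the high frequencies. The kernel width, the `sigma` value, and the use of `conv2` with its default zero padding are assumptions made for illustration, not the settings used in this project.

```matlab
% Synthetic grayscale image in [0, 1]; a real photo would be loaded with imread.
img = rand(64, 64);

sigma = 3;                            % hypothetical blur width in pixels
half  = 2 * sigma;                    % kernel radius, giving a (4*sigma+1)-wide kernel
x     = -half:half;
g     = exp(-x.^2 / (2 * sigma^2));   % unnormalized 1-D Gaussian
g     = g / sum(g);                   % normalize so the weights sum to 1
kernel = g' * g;                      % outer product gives the 2-D Gaussian

low  = conv2(img, kernel, 'same');    % low frequencies (conv2 pads with zeros)
high = img - low;                     % high frequencies: whatever the blur removed
```

Because `high` is defined as `img - low`, adding the two parts back together recovers the original image up to floating point error.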

my_imfilter.m

Original Marilyn Monroe Image

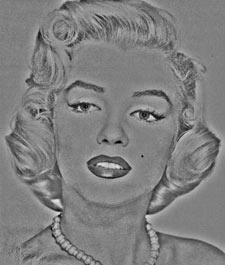

High Frequency Marilyn Monroe Image

Original Albert Einstein Image

Low Frequency Albert Einstein Image

The first thing that needed to be done for the project was creating a general image filtering function in the spirit of MATLAB's imfilter. To implement the filter, a few things had to be kept in mind:

- Images need to be padded. Without padding, pixels near the edges of the image do not have all of the neighbors the filter needs.

- Code should be flexible enough to handle greyscale and color images (potentially of multiple dimensions). Code should also work with any filter of odd dimensions (so that the center pixel is unambiguous).

- For loops in MATLAB are slow. An effort should be made to use built-in vectorized functions rather than manual looping.

To pad the image, I used MATLAB's very handy padarray function. Specifically, I padded the array with symmetric (mirrored) values at the edges rather than with zeros. Zero padding resulted in small dark lines around the edges of the image, while symmetric padding gave a more organic feel to the image. The code also filters each color channel individually, which keeps it flexible with respect to the total number of color channels.
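The difference between the two padding modes can be seen on a toy example. The sketch below blurs a constant image, which an ideal blur should leave unchanged. The 3x3 box filter and the image size are assumptions chosen to keep the arithmetic easy to follow, and `padarray` requires the Image Processing Toolbox.

```matlab
img    = ones(6, 6);          % constant image: blurring should not change it
kernel = ones(3, 3) / 9;      % 3x3 box filter, so one pixel of padding per side

zeroPadded   = padarray(img, [1 1], 0);             % border filled with zeros
mirrorPadded = padarray(img, [1 1], 'symmetric');   % border mirrors the edge pixels

% 'valid' keeps only positions where the kernel fits entirely inside the
% padded image, so both results are the same size as the original.
blurZero   = conv2(zeroPadded,   kernel, 'valid');
blurMirror = conv2(mirrorPadded, kernel, 'valid');

cornerZero   = blurZero(1, 1);     % 4 of the 9 neighbors are real pixels: 4/9
cornerMirror = blurMirror(1, 1);   % all 9 neighbors are 1, so the corner stays 1
```

With zero padding the corner drops to 4/9 and the other border pixels to 6/9, which is the kind of darkening that shows up as dark lines around the edge. With symmetric padding every pixel stays at 1.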

Performance

In my initial version of the code, I looped over each color channel, then over every x and y pixel, running all the calculations for that pixel's convolution. However, this initial version was too slow for my liking. I realized that a couple of simple refactors would avoid calculating redundant information inside each loop, but this saved only a little time (around 1-2 seconds on each call to my_imfilter, out of around 11 total seconds per call). If I were to continue optimizing along this route, I could probably precompute all the indices in each image and then loop through those faster. However, according to MATLAB's profiler, the indexing was never the bulk of the work being done, so the time savings would be minimal.
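A loop-based filter of the kind described above might look roughly like the following. This is a hypothetical sketch, not the project's actual my_imfilter.m: the function name `my_imfilter_sketch` and its variable names are made up for illustration, and like MATLAB's imfilter default it computes a correlation (the kernel is not flipped).

```matlab
function output = my_imfilter_sketch(img, kernel)
% MY_IMFILTER_SKETCH  Hypothetical loop-based filter (illustration only).
%   Correlates every channel of IMG with an odd-sized KERNEL, using
%   symmetric (mirror) padding so border pixels have full neighborhoods.

    [fh, fw] = size(kernel);
    assert(mod(fh, 2) == 1 && mod(fw, 2) == 1, 'Kernel dimensions must be odd.');

    % Everything that does not depend on the pixel is computed once, up front.
    padSize  = [(fh - 1) / 2, (fw - 1) / 2];
    origSize = size(img);
    [h, w, numChannels] = size(img);   % trailing dimensions collapse into numChannels
    img      = double(img);
    weights  = kernel(:);
    output   = zeros(h, w, numChannels);

    for c = 1:numChannels
        padded = padarray(img(:, :, c), padSize, 'symmetric');
        for col = 1:w
            for row = 1:h
                % Neighborhood centered on (row, col) of the unpadded image.
                window = padded(row:row + fh - 1, col:col + fw - 1);
                output(row, col, c) = sum(window(:) .* weights);
            end
        end
    end

    output = reshape(output, origSize);   % restore any extra dimensions
end
```

Saved as my_imfilter_sketch.m, it could be called as `blurred = my_imfilter_sketch(img, ones(5, 5) / 25);` on either a greyscale or a color image.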

The next approach I looked at was MATLAB's im2col function, which turns each neighborhood into a column so that much faster vectorized operations can be used to calculate the convolutions. These calculations were indeed much faster: after using im2col, the rest of my code took only around 2 seconds per call. The only problem was that im2col itself took around 58 seconds (and 10GB of memory). While the code here was much cleaner and I had eliminated the nested for loops from above, I unfortunately had to trash this approach in favor of the previous one. If I had more time to devote to this project, the next step would likely be to implement the convolutions in the Fourier domain.
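For a single channel, the im2col idea can be sketched as below. The image size and the box filter are assumptions picked so the example runs quickly, and the comparison against imfilter is only a sanity check. Both `im2col` and `padarray` come from the Image Processing Toolbox.

```matlab
img    = rand(40, 50);          % synthetic single-channel image
kernel = ones(5, 5) / 25;       % hypothetical 5x5 box filter
[fh, fw] = size(kernel);

padded = padarray(img, [(fh - 1) / 2, (fw - 1) / 2], 'symmetric');

% Each column of cols is one fh-by-fw neighborhood, unrolled column-major.
cols = im2col(padded, [fh, fw], 'sliding');

% One matrix product replaces the nested per-pixel loops.
filtered = reshape(kernel(:)' * cols, size(img));

% Sanity check against the built-in filter with the same padding.
reference = imfilter(img, kernel, 'symmetric');
maxError  = max(abs(filtered(:) - reference(:)));

% Memory used by the unrolled matrix, in bytes (8 bytes per double).
bytesNeeded = numel(cols) * 8;
```

The unrolled matrix has one row per kernel element and one column per pixel, so its memory use grows with the product of the kernel area and the image area. With large Gaussian kernels this gets expensive quickly, which may be why the author saw such high memory use.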

Results

After implementing my_imfilter plus some convenience functions for display, the final part was to use the filter to create hybrid images. At the recommendation of the project description and the original paper on hybrid images, I used two different cutoffs for the high and low frequency images. First, I tried swapping which image supplied the high frequencies and which supplied the low frequencies, since some pairs (in particular the bicycle-motorcycle pair) worked much better one way than the other. To get a good feel for the effect each cutoff had on the resulting images, I ran a grid search over both cutoffs to find approximate values that looked best to me. After arriving at some general boundaries, I then tested each pair of images provided in the data folder to find the subjectively best thresholds for each pair.
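The two-cutoff combination and the grid search described above could be sketched as follows. Several things here are assumptions for illustration: the built-in `imfilter` and `fspecial` stand in for my_imfilter, each cutoff is treated as the Gaussian standard deviation in pixels, the kernel is `4 * cutoff + 1` wide, and the grid values are made up. Random arrays stand in for an aligned image pair.

```matlab
% Synthetic stand-ins for an aligned image pair; real inputs would come from imread.
imLow  = rand(64, 64, 3);   % image that supplies the low frequencies
imHigh = rand(64, 64, 3);   % image that supplies the high frequencies

% Assumption: a cutoff is used directly as the Gaussian sigma in pixels.
gauss = @(cutoff) fspecial('gaussian', 4 * cutoff + 1, cutoff);
blur  = @(img, cutoff) imfilter(img, gauss(cutoff), 'symmetric');

lowCutoffs  = [7 10];       % hypothetical grid values
highCutoffs = [5 7];

hybrids = cell(numel(lowCutoffs), numel(highCutoffs));
for i = 1:numel(lowCutoffs)
    lowPart = blur(imLow, lowCutoffs(i));                  % keep low frequencies
    for j = 1:numel(highCutoffs)
        highPart = imHigh - blur(imHigh, highCutoffs(j));  % keep high frequencies
        hybrids{i, j} = lowPart + highPart;                % one candidate hybrid
    end
end
```

Each cell of `hybrids` can then be inspected by eye, for example with `imshow`. Values may fall slightly outside [0, 1] after the sum, so clipping before display may be needed. Swapping `imLow` and `imHigh` gives the reversed pairing.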

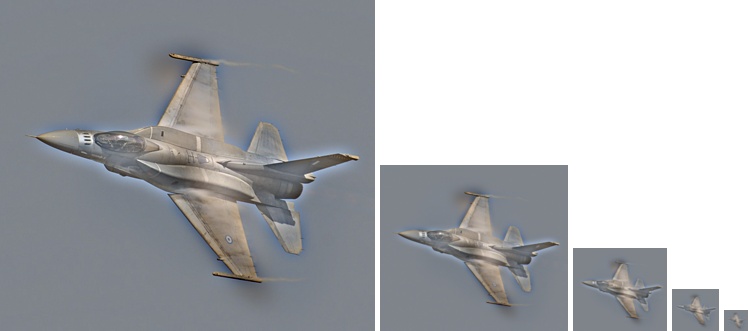

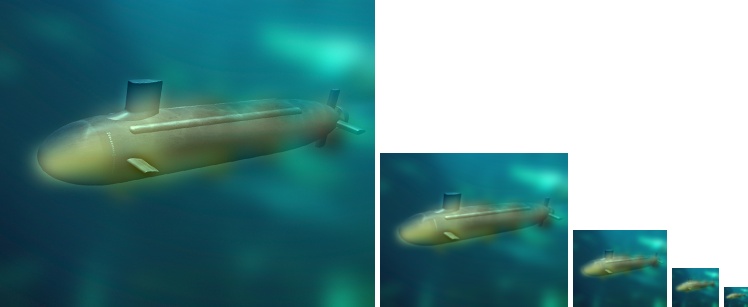

To simulate the effect that distance has on viewing hybrid images, each image is shown at different scales. The high frequency image should be most obvious at the largest two scales, while the low frequency image should appear in the smallest two images.
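One way to build such a multi-scale strip is sketched below. The number of scales, the halving factor, the gap width, and the white filler are all assumptions, and a random array stands in for a real hybrid image. `imresize` is part of the Image Processing Toolbox.

```matlab
hybrid = rand(64, 64, 3);   % stand-in for a real hybrid image

numScales   = 4;            % hypothetical number of copies to show
scaleFactor = 0.5;          % each copy is half the size of the previous one
gap         = 5;            % white columns between neighboring copies

fullHeight  = size(hybrid, 1);
numChannels = size(hybrid, 3);

output  = hybrid;           % the strip starts with the full-size image
current = hybrid;
for s = 2:numScales
    current = imresize(current, scaleFactor, 'bilinear');
    spacer  = ones(fullHeight, gap, numChannels);
    % White filler on top so the smaller copy sits on the bottom edge.
    filler  = ones(fullHeight - size(current, 1), size(current, 2), numChannels);
    output  = cat(2, output, spacer, cat(1, filler, current));
end
```

Displaying `output` with `imshow` shows the copies side by side, from largest on the left to smallest on the right.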

Various sizes of a hybrid Einstein and Monroe image. High Cutoff: 5 Low Cutoff: 10

Various sizes of a hybrid dog and cat image. High Cutoff: 15 Low Cutoff: 7

Various sizes of a hybrid bird and plane image. High Cutoff: 7 Low Cutoff: 11

Various sizes of a hybrid Einstein and Monroe image. High Cutoff: 6 Low Cutoff: 10

Various sizes of a hybrid bicycle and motorcycle image. High Cutoff: 7 Low Cutoff: 7

As the above images demonstrate, not all pairs of images need the same cutoffs, and not all pairs provide an equally good effect. In particular, the last image is interesting because the original image of the bicycle is set against a white backdrop. This means that it cannot contribute any high frequencies for the background, so almost all of the coloration comes from the motorcycle image (despite the bike itself looking quite sharp against it). Generally speaking, pairs where the main focal points of both images are aligned correctly and the general color scheme is similar tend to produce the best results, as seen in the fish-submarine and bird-plane images. Of particular note is the Albert Einstein and Marilyn Monroe hybrid image. Humans are particularly adept at recognizing faces, even at very low resolutions and sizes. Because of this, the Albert Einstein face is very evident in the smallest picture, even though it is the smallest image on this entire page.