Project 2: Local Feature Matching

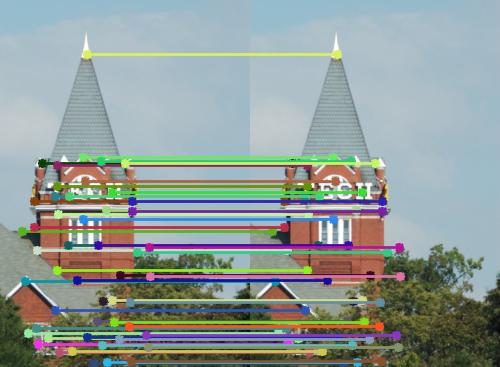

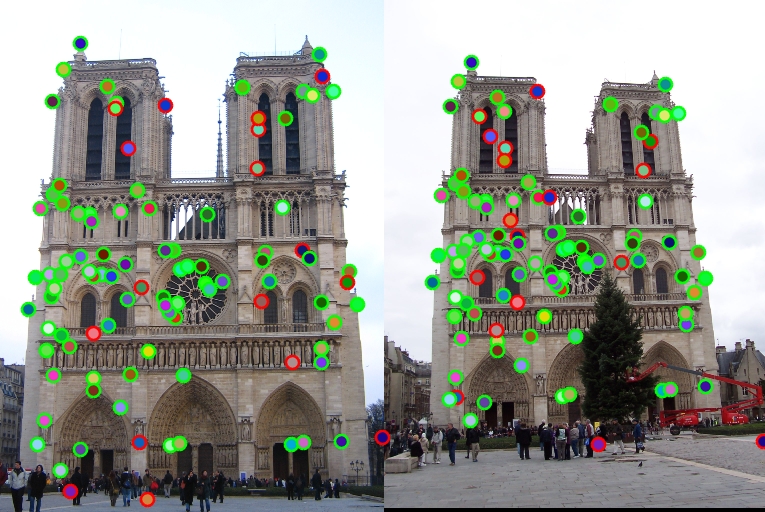

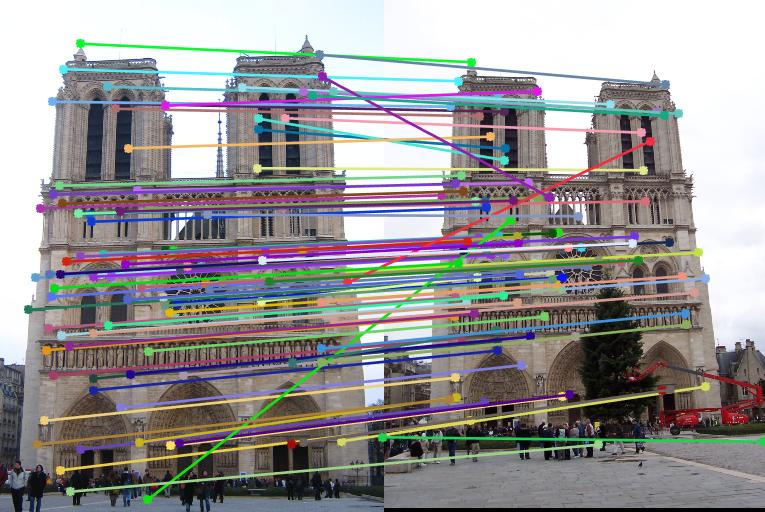

Baseline Sift Pipeline on Notre Dame Image with accuracy 83% over 100 points. Over 50 points the accuracy is 94%

The goal of this assignment is to create a local feature matching pipeline using techniques described in the Class Slides and Szeliski. The matching pipeline is intended to work for instance level matching -- multiple views of the same physical scene. We implement the project in three parts

- Interest point localisation using Harris Corner detection.

- Local Feature Description using SIFT-like features.

- Feature matching using nearest neighborhood.

We implement the pipeline in Matlab without using the inbuilt SiftDetector and similar functions.

Harris Corner Detection

Harris Corner Detection is useful for shape detection and matching. In layman's term the Harris Corner Detector is just a mathematical way of determining which windows produce large variations when moved in any direction. With each window, a score R is associated. Based on this score, you can figure out which ones are corners and which ones are not.

Example of code with highlighting

The javascript in thehighlighting folder is configured to do syntax highlighting in code blocks such as the one below.

%example code

filter = fspecial('Gaussian', 16, 1);

image=imfilter(image,filter);

[GX,GY]=gradient(filter);

[M N]=size(image);

R=zeros(M,N);

IX=imfilter(image,GX);%computing X gradient of image

IY=imfilter(image,GY);%computing Y gradient of image

IXX=IX.*IX;

IYY=IY.*IY;

IXY=IX.*IY;

filter = fspecial('Gaussian', 8, 2); %designing a filter

IXX=imfilter(IXX, filter); %computing IXX, IXY and IYY

IYY=imfilter(IYY,filter);

IXY=imfilter(IXY,filter);

R=(IXX.*IYY-IXY.^2)-0.01*((IXX+IYY).^2);%computing Scalar interest measure

SIFT-like Feature Detection

We extract 128 sized feature vectors for each keypoint descriptor that are eventually used in the matching function. Gradient orientations are calcucated for each keypoint and appropriate histogram binning is performed.

Image gradient directions spread out across -180 to 180 degrees.

Matching Features

Nearest Neighborhood method is used to determine the 100 most confident keypoints.

Example of code with highlighting

The javascript in thehighlighting folder is configured to do syntax highlighting in code blocks such as the one below.

%example code

for i=1:num_features

NNDR = mat(1,i)/mat(2,i);

if(NNDR>threshold)

continue;

end

confidences(k) = NNDR;

matches(k,2) = i;

matches(k,1) = I(1,i);

k = k+1;

end

Extra Graduate Credits: Part1

Here, I implement scale invariance

|

|

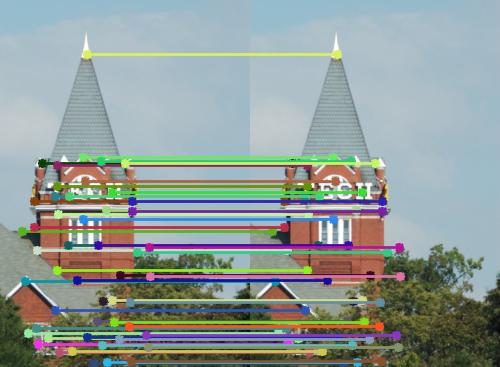

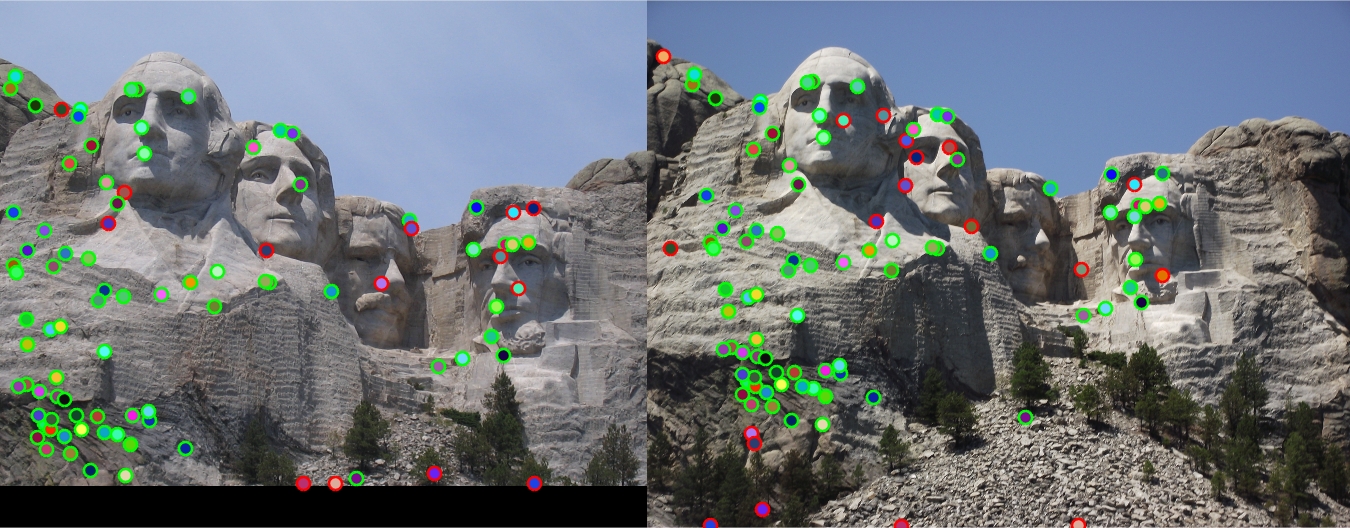

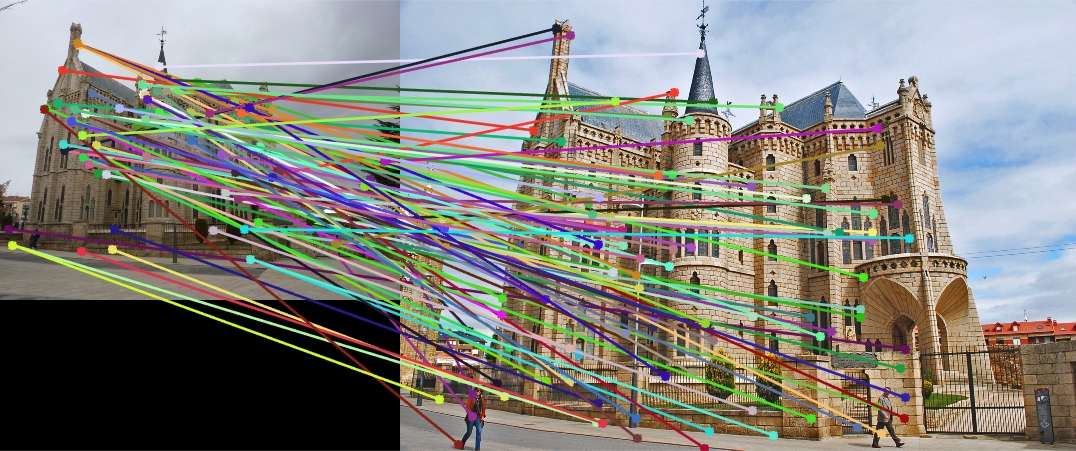

Here we implement Scale Space Invariance by considering four sized Image Pyramid. I I(0.9) I(0.9)^2 I(0.9)^3 The accuracy for Notre Dame falls to 71% from 83%. The accuracy for Mount Rushmore falls to 80% from 81%. The accuracy for Gaudi increases to 15% from 2%> This can be explained because in the case of Notre Dame image scaled image pyramid features do not provide any useful information. However, in case of Gaudi, the Response Function Value of each scaled image is quite close showing useful information being contributed by the scaled dominant image

Extra Graduate Credits: Part 2

Here, I implement KDTREE MATCHING

|

Here we implement KD-Tree which are essentially a special case of binary space partitioning trees. Elapsed time is 29.708755 seconds with an accuracy of 78%. FOR PDIST2 Elapsed time is 94.131788 seconds with an accuracy of 83%. KD-Trees are significantly faster in case of multidimensional search queries. The decrease in accuracy can be because of approximations during the KDTREE search algorithm implmentation. On decreasing the size of match data points the accuracy increases for both KDTREE and pdist2.

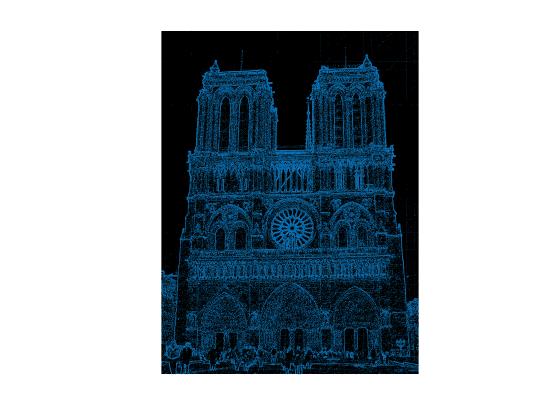

TEST IMAGE SHOWING 100 percent Matching