Project 2: Local Feature Matching

Notre Dame

Local feature matching is a method used heavily in computer vision applications which identifies distinct features in multiple photos and then compares features between photos to try and find common ground. This is used for things like image stitching to create panoramas, or even object modelling. The most common method for implementation of feature matching is:

- Finding points of interest

- Extracting features

- Matching features between images

I will explore these steps in more detail below.

Interest Points

I implemented the Harris Corner Detection algorithm to find interest points in the image. In this case, the interest points I was looking for were parts with sharp contrasts, like corners. The first step was to employ image filtering with two Sobel filters, a horizontal one and a vertical one. This gave me two convolutions, one sharpening changes in horizontal nuances and the other sharpening the vertical. I then ran the following operations in order to obtain the Harris Corner Matrix.

%G is a Gaussian filter

dx2 = dx.^2;

dxy = dx.*dy;

dy2 = dy.^2;

gx2 = imfilter(dx2,G);

gxy = imfilter(dxy,G);

gy2 = imfilter(dy2,G);

har = gx2.*gy2 - gxy.^2 - 0.01 * (gx2+gy2).^2;

A Gaussian was used above to give the gradients enough of a blur that it would hide any minor gradients. This helped to weed out lots of non-corners. The last step was detecting all the local maxima across the image and store their locations as interest points.

Extracting Features

Now that the important locations were defined, I was able to move on to extracting the features from the original image. In order to achieve this, I implemented the SIFT pipeline. I started off by calculating the x and y gradients of the image. In order to match features, I needed to give them some kind of quantifiable identity. This was easier done in polar coordinates, so I converted the gradients to polar. At each point of interest, I arranged a box around it split into a 4x4 set of cells. At each cell, I used the gradient at each pixel to create a histogram. This was done by dropping the magnitude of the gradient into one of 8 buckets depending on its orientation. For my implantation, I didn't bother with creating a properly oriented set of buckets. The specific orientation is irrelevant as long as I remain consistent with it. This helped to simplify the math as I didn't have to worry about things like placing all magnitudes with angles -22.5 to 22.5 degrees into the 0 bucket. With this, each feature now had a solid and likely unique descriptor.

Matching Features

With all the features located and defined for both images, it was time to play matchmaker and determine a feature's most compatible partner in the other image. I did this using a simple distance formula to determine each feature's two closest matches. I then took the ratio between these to score each pair with a particular confidence. If the feature's top two only had a small change in distance, I gave that particular pair a lower priority. In this way, I picked the top set of feature pairs which were much more indisputable.

Results

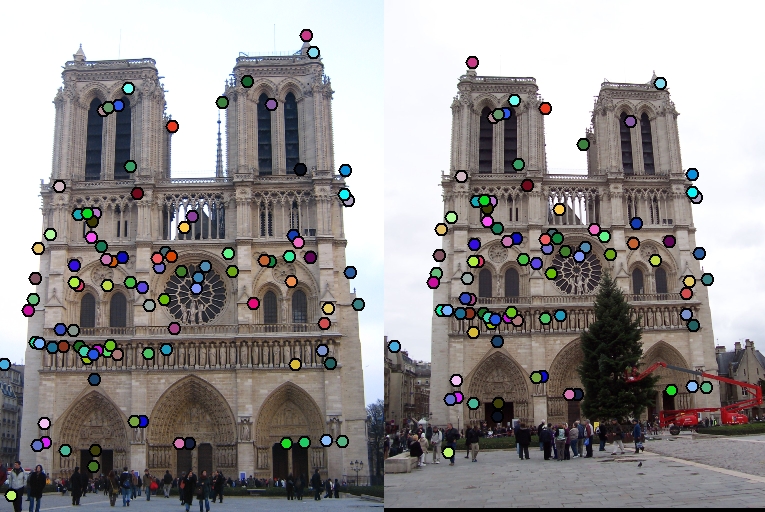

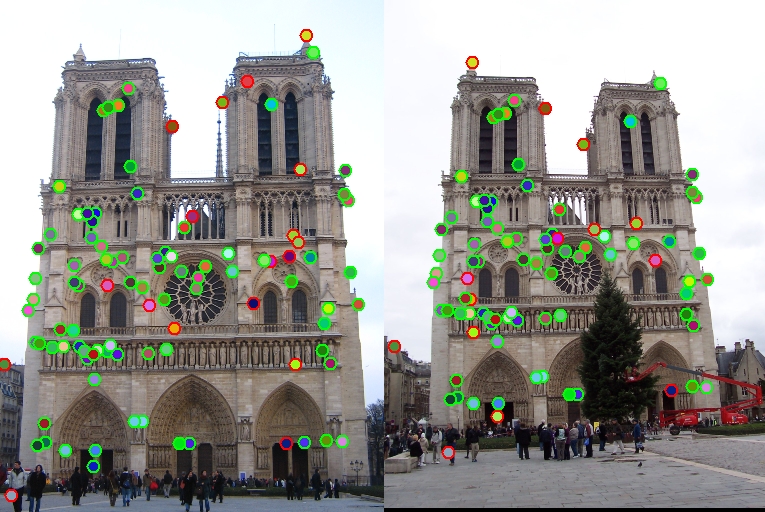

|

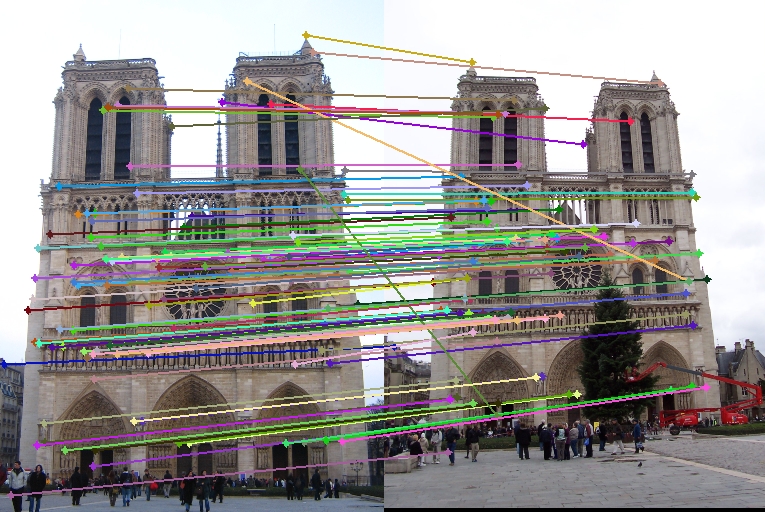

|

The final results using the top 100 matches gave me 82 true positives and 18 false positives. This resulted with an accuracy of 82%.

Possible Improvements

While my algorithm gets the job done, it is incredibly slow and still has room for lots of improvement in accuracy. For the most part, it is exhaustively searching everything, checking each possible pair of features for matches in one spot, and picking up multiple false positives in a another. One way good way to improve speed would be in the matching features step. Rather than comparing each feature to every other one in the opposite image, it would be much more efficient to simply pick a small number to compare it to based on a heuristic. This could reduce accuracy slightly, but the vast improvement in speed will be well worth. We can take a step back and improve accuracy in other regards without sacrificing speed. There are other filters we could apply in the first step to sharpen edges and provide more accurate interest points. This would result in more distinct features and therefore better matches.