Project 2: Local Feature Matching

Forming Features

In order to create the features, I first used normalized patches as my local feature. This gave me an accuracy of 65% when using the predefined interest points. Next I implemented a SIFT-like implementation For each interest point, I divide into 16x16 slices centered around the given x, y coordinate. Then I create a 4x4 cell from each slice and use imgradient() on each cell to find the magnitudes and directions. Next, I divide the directions from each cell into bins from -180 to 180 in 45 degree slices creating masks. I use these masks to know which direction contributed to which bin, and for each bin, I find the histogram bin value by summing this mask .* magnitude. Appending all these bins together to creates each feature row. Lastly I normalize each row of feature using normr(). By doing this, I increase the accuracy of the matching of the predefined interest points to 92%. This is because the dimensionality data created by the SIFT-like algorithm is much more likely to match across the two images for the same feature than the data created by flattening the normalized patch.

Choosing Interest Points

Choosing the interest points is where I ran into the most trouble on this project. I first found the horizontal and vertical derivatives of the Image, Ix and Iy. Then I found the three images Ix2, Iy2, IxIy corresponding to the products of the derivatives. I then filtered these with a larger Gaussian filter. I computed the scalar interest measure harris (har) in order to determine cornerness score for each point and used a threshold value of .05*maximum to create a thresholded score and used colfilter() with a sliding 2x2 window to find local maxima. This threshold value multiplier and window size were found through testing of different value to see what combination yeilded the best results. Then I sorted the non-zero points by their confidence, the thresholded harris score, and returned the x and y coordinates of the most confident points first. I found that blurring the image slightly at the beginning with a sigma value of 1 helped increase the likelihood that the interest points corresponded in the two images. Unfortunately, my resultant points still didn't achieve the level of accuracy as the test points but did reach a value of 68%.

Confidence Values and Sorting

points = find(harThresh > 0);

confidences = harThresh(points);

[confidences , idx] = sort(confidences(:,1),'descend');

ind = points(idx);

points = harThresh(ind);

Feature Matching

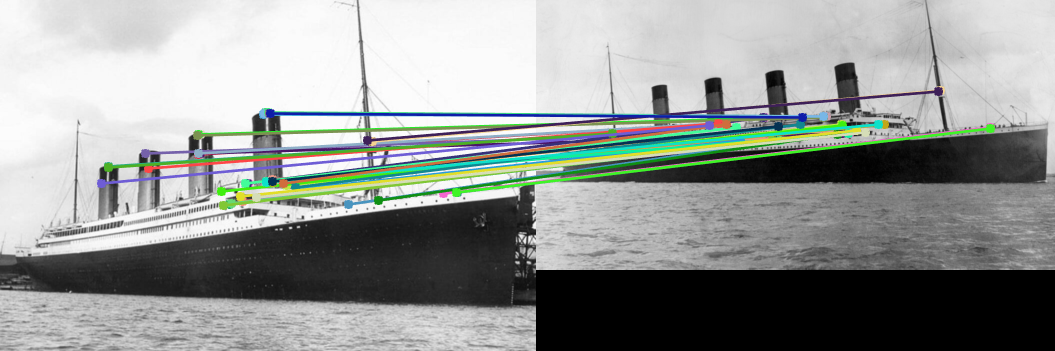

For feature matching, I found the 2 closest distances between each feature pair and used the ratio test to determine the confidence of the match. I also added in some geometric verification logic by assuming that the coordinate pairs of each of the two features in a match would be within a mean distance of each other, and if not, demoted the confidence of the match.

Geometric Verification Logic

yOffsets = abs(y1(1:num_features)- y2(I(:,1)));

avgOffset = mean(yOffsets);

offsetThreshold = avgOffset;

yThresholded = yOffsets > offsetThreshold;

confidences(yThresholded) = confidences(yThresholded)*.3;

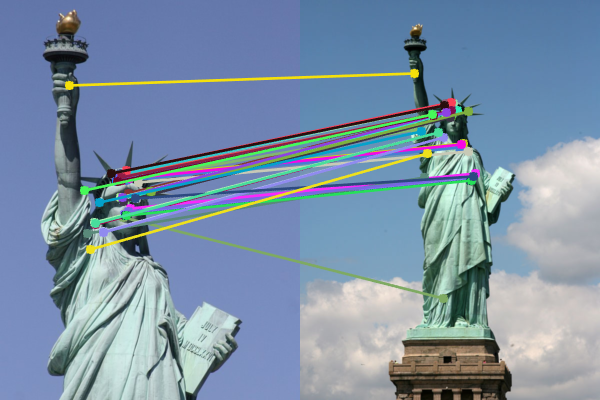

You can see in the image below, feature matching works well when there is little difference in scale between the two images,little rotation, and little difference in saturation. One of the reasons this image works so well is there is only a slight difference in angle from which the image is taken.

As you can see below, with such a large difference in scale, the algorithm has a hard time matching points on the face of the statue. However, it does do a good job in selecting similar interest points. The algorithm is able to do this because the images are taken from almost the same horizontal and vertical angle, and it is easier to identify similar corner points.