Proj. 2: Local Feature Matching

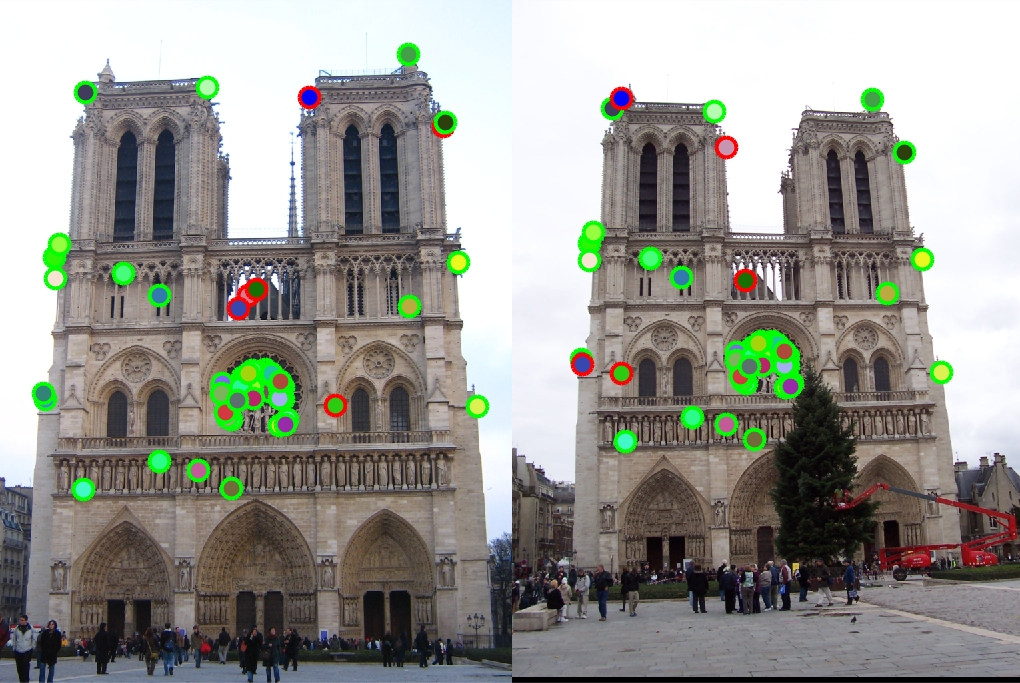

Sample result

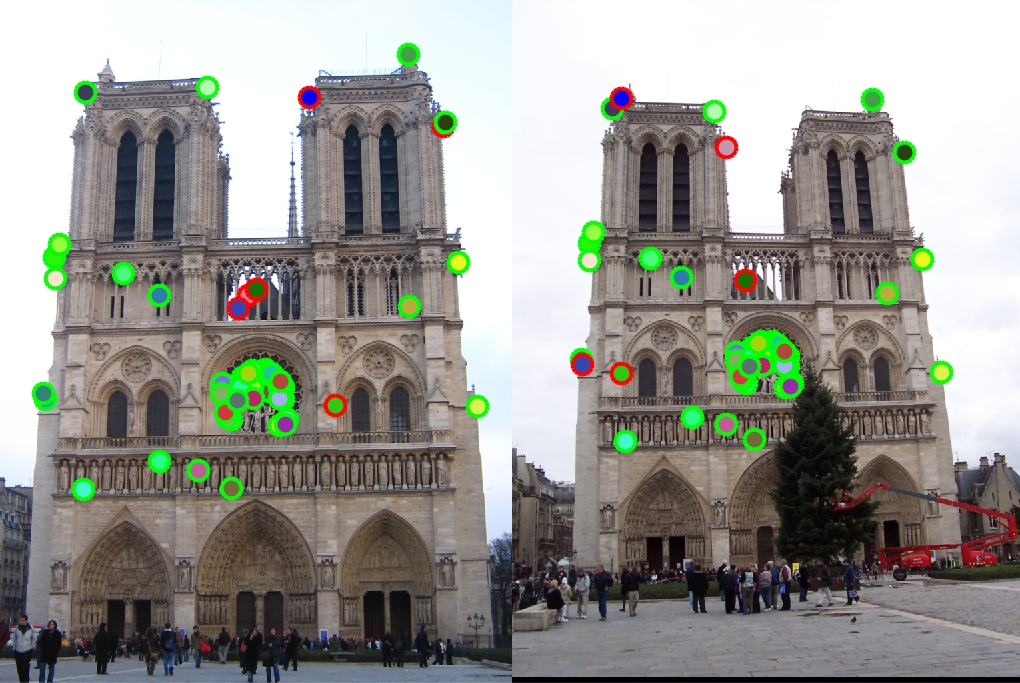

The aim of this project is to perform local feature matching, ie. identify feature points that are the same on two different images of the same object/scene. Typically, corner points are chosen as the features to match as they are distinct and are not affected by the scale, illumination or other properties of the images. A sample of the matched features corresponding to the Note Dame picture set is shown beside.

There are three main steps in feature matching:

- Detecting Interest Points

- Local feature generation

- Matching the features

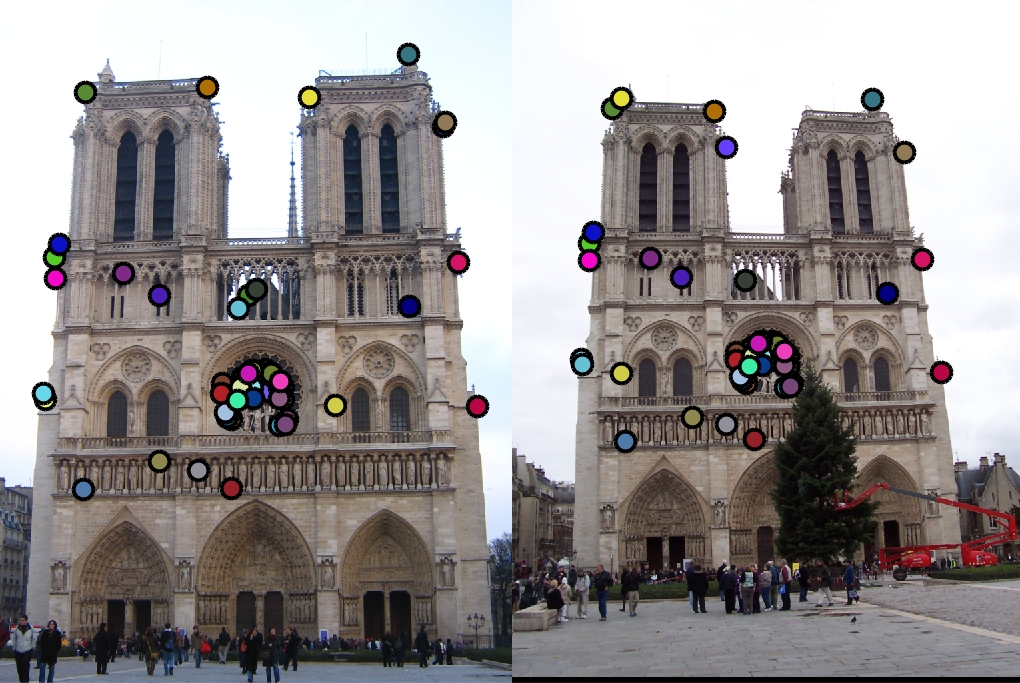

Detecting Interest Points

In this part, Harris Corner detection algorithm was implemented which is given as follows: the gradients in x and y direction are computed at each pixel of the image and a corenerness score is computed from the gradients. All points having score greater than a particular threshold(which is tuned for performance) are slected as interest points for feature matching.

[Gaussx, Gaussy] = gradient(Gaussian);

Gaussian2 = fspecial('Gaussian', feature_width, 2);

Ix = imfilter(image, Gaussx);

Iy = imfilter(image, Gaussy);

Ixx = Ix .* Ix;

Ixy = Ix .* Iy;

Iyy = Iy .* Iy;

Ixx = imfilter(Ixx, Gaussian2);

Ixy = imfilter(Ixy, Gaussian2);

Iyy = imfilter(Iyy, Gaussian2);

a = 0.06;

R = (Ixx.*Iyy - Ixy.^2) - a*((Ixx + Iyy).^2);

% Retaining values greater than cutoff

R = R.*(R>0.025);

% Max val in 5x5 window

Temp = colfilt(R,[5,5],'sliding',@max);

% Retaining only max values

R = R .* (R == Temp);

% returns non zero indeces from R

[Y, X] = find(R);

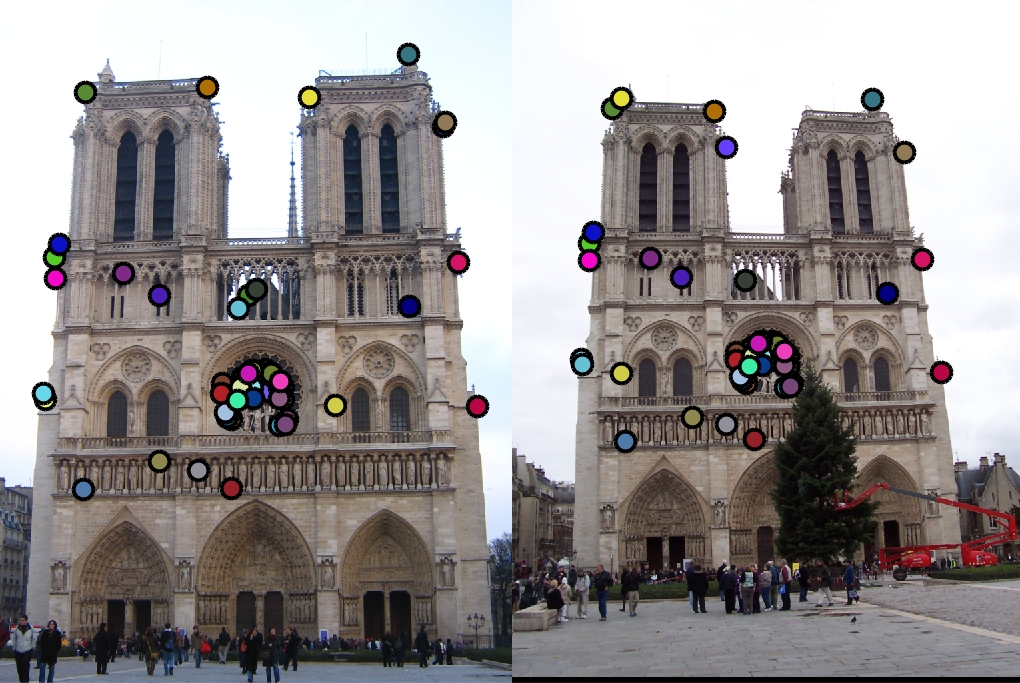

Local Feature Generation

A local descriptor for each point detected in the previous stage is claculated over a 16 pixel block arount the interest point, which is a histogram of the gradient values of dimension 128.

for i = 1: size(x,1)

% Angle and magnitude patch

apatch = angle(y(i)-7:y(i)+8, x(i)-7:x(i)+8);

mpatch = magnitude(y(i)-7:y(i)+8, x(i)-7:x(i)+8);

feature = [];

for p = 1: 4 : 16

for q = 1: 4: 16

vec = zeros(1,8);

for m = p: p+3

for n = q: q+3

tmp = ceil((apatch(m, n) + pi) / (pi/4));

vec(tmp) = vec(tmp) + mpatch(m, n);

end

end

vec = vec / sum(vec);

feature = [feature vec];

end

end

features(i,:) = feature;

end

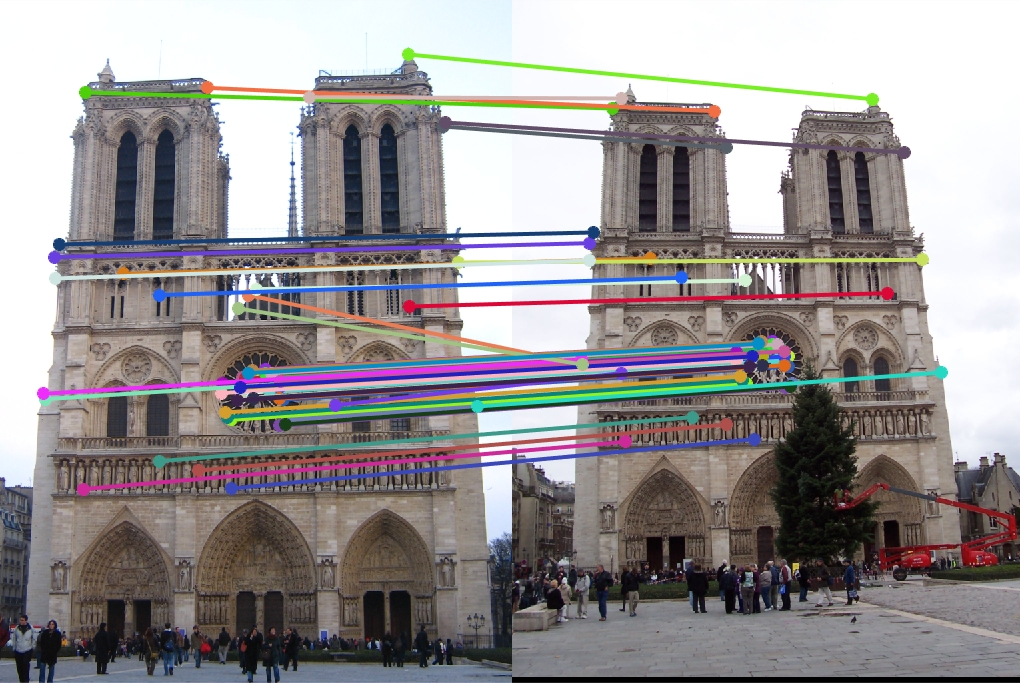

Matching the features

The nearest neighbor distance ratios(NNDR) is calculated between pairs of interest points of the two images. The inverse of the NNDR is the confidence. Pairs having confidence greater than a particular threshold are matched.

dist = pdist2(features1, features2); % pairwise dist bet each feature

[B, I] = sort(dist,2);

nearest_dist_ratio = B(:,1) ./ B(:,2);

matches = [];

matches(:,1) = find(nearest_dist_ratio <= 0.82); %tuned threshold

matches(:,2) = I(matches(:,1));

confidences = nearest_dist_ratio(matches(:,1)).^-1;

|

|

Notre Dame : 48 total good matches, 6 total bad matches. 89% accuracy

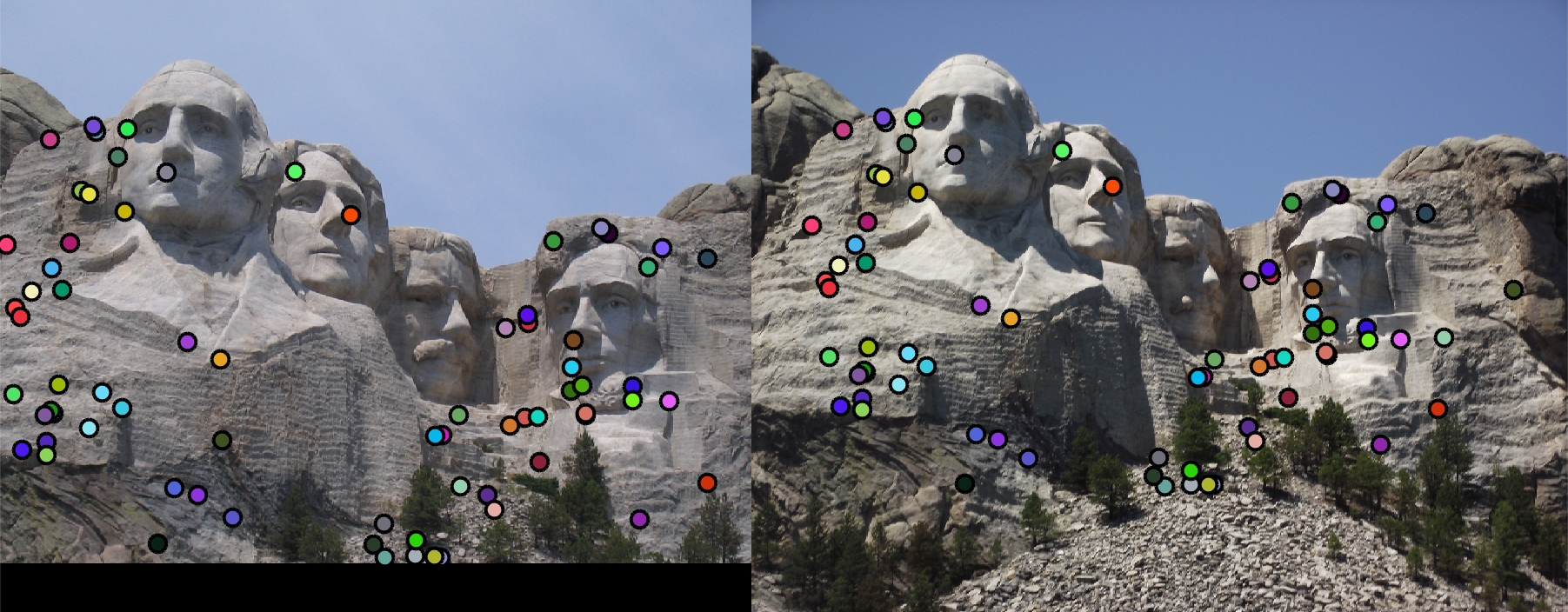

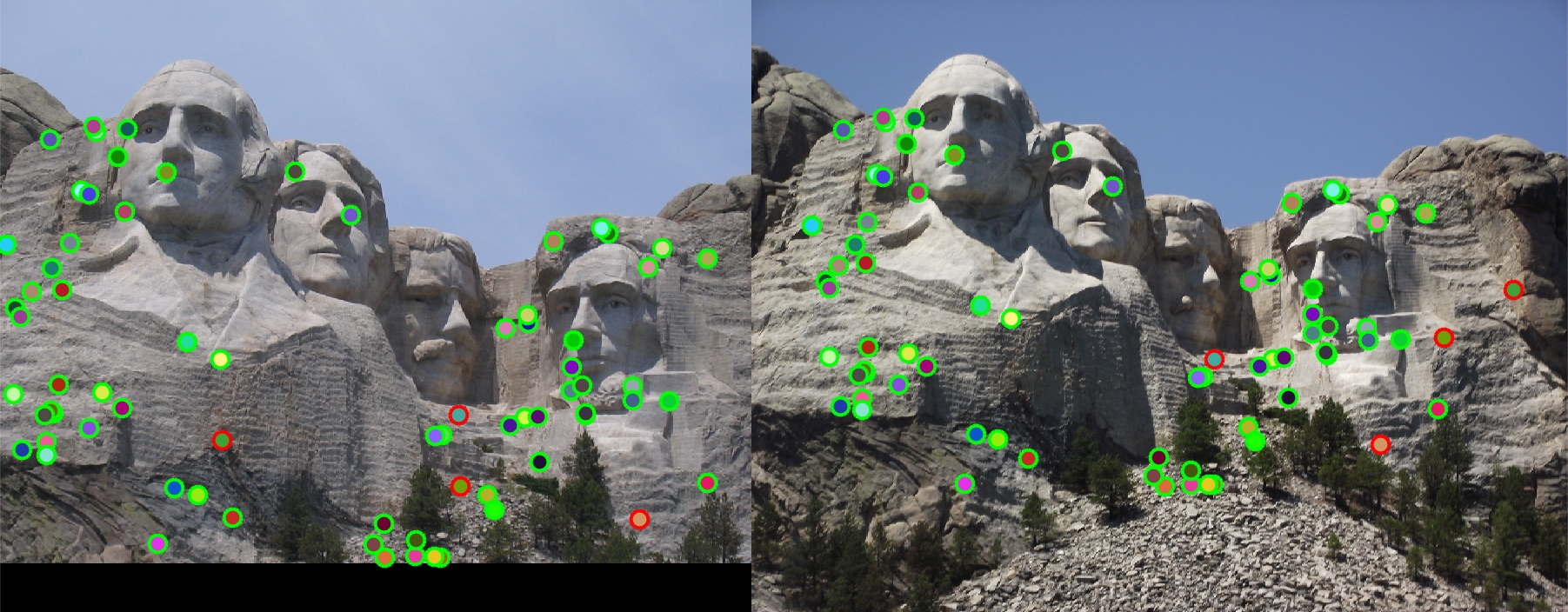

Mount Rushmore : 69 total good matches, 4 total bad matches. 95% accuracy