Project 2: Local Feature Matching

In project 2 feature matching is implemented in the following steps:

- Implement functions for matching features.

- Implement functions to get images patches as features for testing match_features().

- Implement functions to get SIFT like features.

- Implement functions for getting interest points using Harris corners detection.

match_features()

This is accomplished by exhaustively trying to compare feature of one interest point with another. The differences between 2 interest points are stored and sorted. A threshold of 0.8 is applied towards the ratio of the top match and the second best match. If the ratio is smaller than the threshold, the match is stored as a valid match. The confidence value corresponding to the match is simply 1 over the ratio as in if the best match's difference is significantly smaller than the second best match, the top match is more likely to be a true match. The code for the function is shown below:

function [matches, confidences] = match_features(features1, features2)

num_features = min(size(features1, 1), size(features2,1));

confidences = zeros(num_features,1);

matches = zeros(num_features, 2);

threshold = 0.8;

for i=1:num_features

distances = zeros(num_features);

for j=1:num_features

distances(j) = norm(features1(i, :) - features2(j, :));

end

[distances, ind] = sort(distances, 'ascend');

ratio = distances(1) / distances(2);

if ratio < threshold

matches(i, :) = [i, ind(1)];

confidences(i) = 1/ratio;

end

end

ind = find(confidences > 0);

matches = matches(ind, :);

confidences = confidences(ind);

% Sort the matches so that the most confident onces are at the top of the

% list. You should probably not delete this, so that the evaluation

% functions can be run on the top matches easily.

[confidences, ind] = sort(confidences, 'descend');

matches = matches(ind,:);

end

get_features() using image patches

For each interest points, a box with dimension feature_width by feature_width around the interest point is cut out is used as the feature. The features are padded with 1s if applicable.

function [features] = get_features(image, x, y, feature_width)

x = round(x);

y = round(y);

features = zeros(length(x), feature_width.^2);

for i=1:length(x)

arr = image(max(1, y(i)-feature_width/2+1):min(length(image(1, :)), y(i)+feature_width/2),...

max(1, x(i)-feature_width/2+1):min(length(image(:, 1)), x(i)+feature_width/2));

arr = reshape(arr, [], 1);

arr = padarray(arr, [feature_width .^2 - length(arr), 0], 1, 'post');

features(i, :) = arr';

end

end

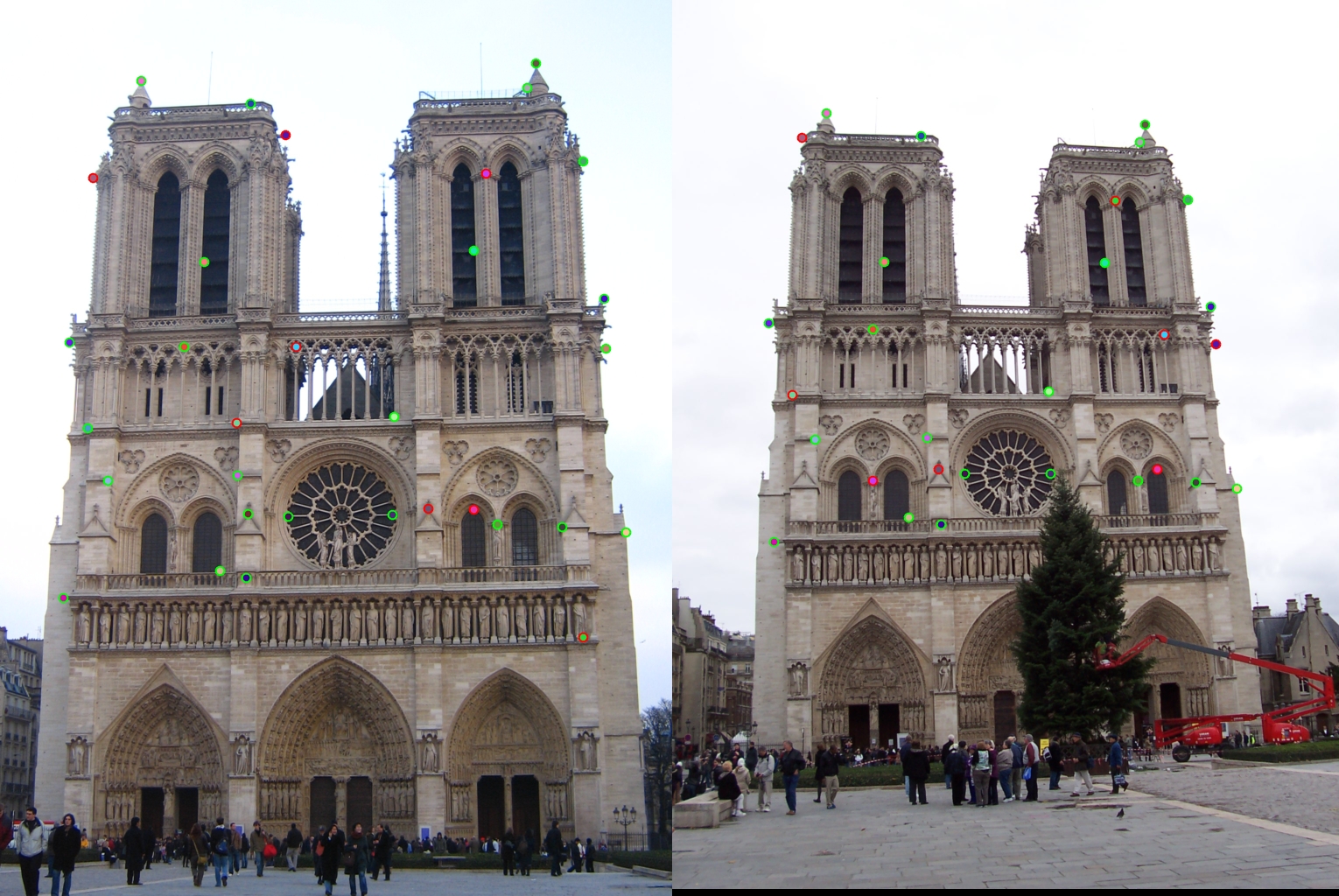

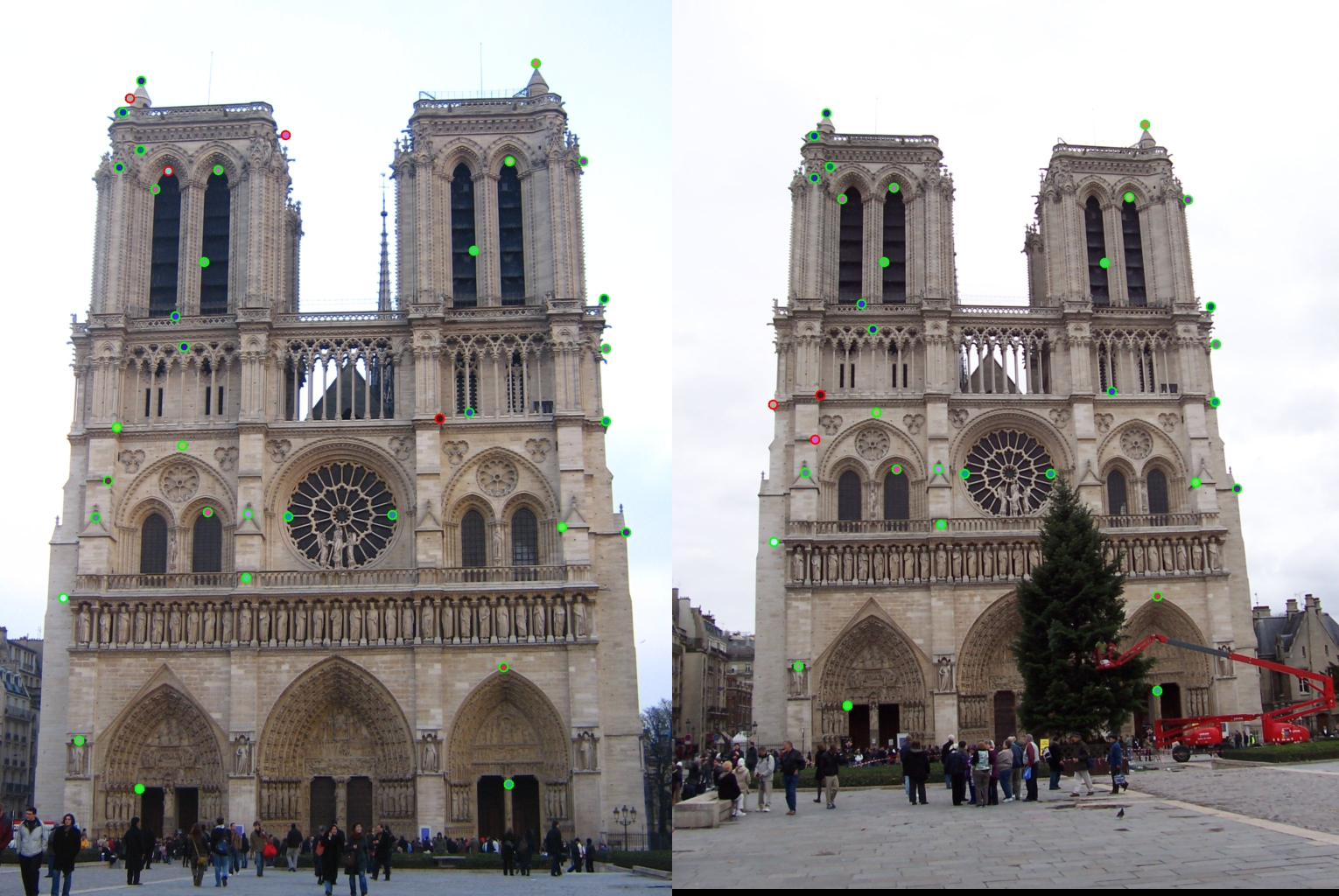

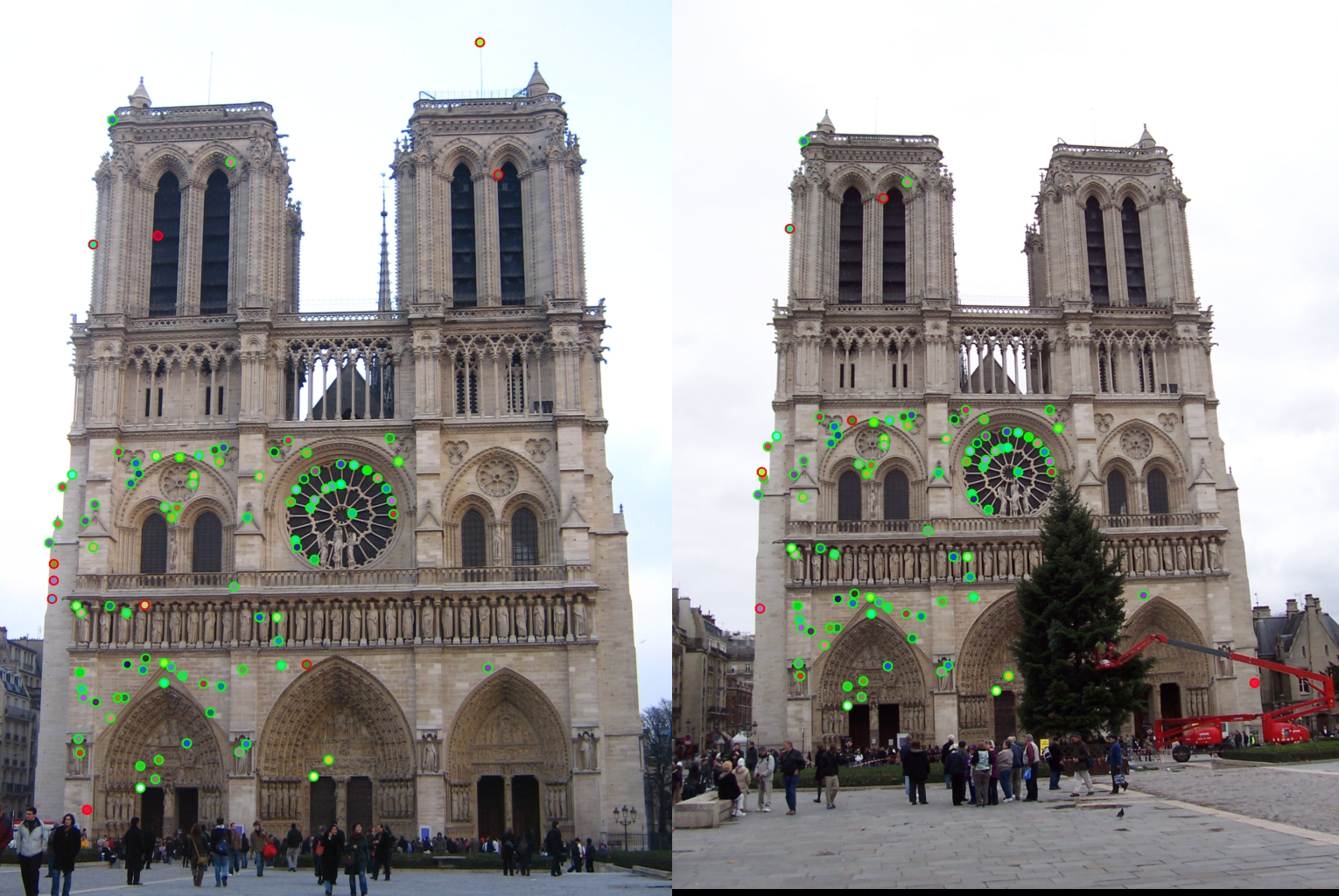

The accuracy is 75% for the Notre dame image pair.

get_features() using SIFT

For each interest points, a box with dimension feature_width by feature_width around the interest point is cut out. The patch of image is padded with 1s if applicable. The patch is then divided into a grid of 16 cells. Imgradient function is used to calculate the gradients and directions for each cell. The graidents are arranged into 8 directions and the sum of gradients for each direction are summed as feature.

function [features] = get_features(image, x, y, feature_width)

x = round(x);

y = round(y);

angles = -180:45:180;

features = zeros(length(x), 128);

for i = 1:length(x)

%Get image patch and pad 1s if applicable.

arr = image(max(1, y(i)-feature_width/2+1):min(length(image(:, 1)), y(i)+feature_width/2),...

max(1, x(i)-feature_width/2+1):min(length(image(1, :)), x(i)+feature_width/2));

arr = reshape(arr, [], 1);

arr = padarray(arr, [feature_width .^2 - length(arr), 0], 1, 'post');

patch = reshape(arr, [feature_width, feature_width]);

[mag, dir] = imgradient(patch);

descriptor = zeros(1,128);

width = feature_width / 4;

for j = 0 : 15

r = floor(j/4);

c = mod(j, 4);

cellMag = reshape(mag(r*width + 1:r*width +width, c*width + 1:c*width +width), [], 1);

cellDir = reshape(dir(r*width + 1:r*width +width, c*width + 1:c*width +width), [], 1);

[~, inds] = histc(cellDir, angles);

orientations = zeros(1,8);

for k = 1:length(inds)

orientations(inds(k)) = orientations(inds(k)) + cellMag(k);

end

descriptor(j*8+1:j*8+8) = orientations;

end

features(i, :) = descriptor./norm(descriptor);

end

features = features .^ .8;

end

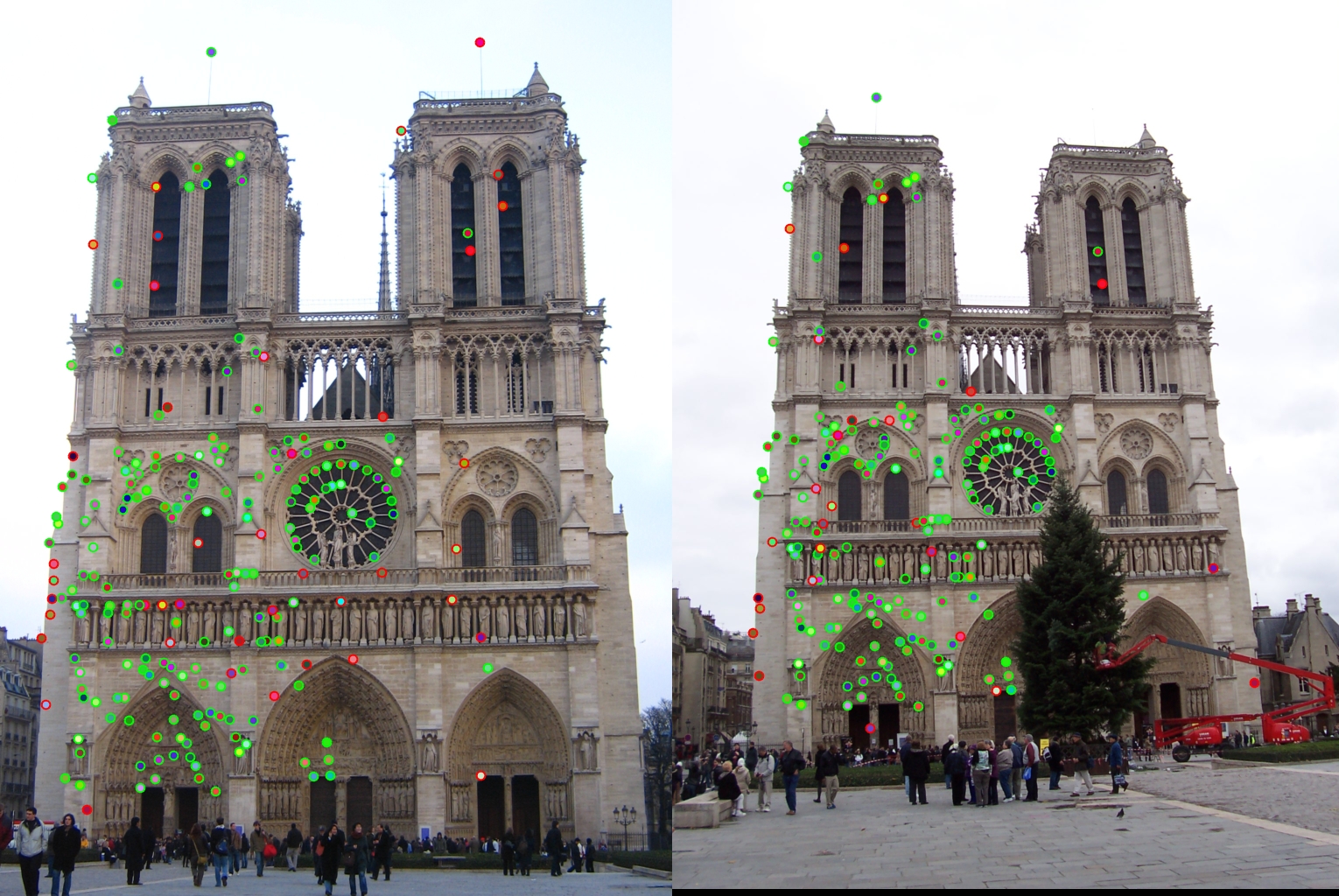

The accuracy is 89% now for the Notre dame image pair. 33 out of 37 points are correctly matched.

get_interest_points()

This function implements Harris corner detection by getting the image gradient first. The image gradients are used to calculate harris score. A sliding window with size feature_width / 2 by feature_width / 2 is applied to find max score inside the window. The max scores are then thresholded using 5e-6.

function [x, y, confidence, scale, orientation] = get_interest_points(image, feature_width)

[Gx, Gy] = imgradientxy(image);

f = fspecial('gaussian', 10, 1.5);

Ixx = imfilter(Gx.^2, f);

Iyy = imfilter(Gy.^2, f);

Ixy = imfilter(Gx.*Gy, f);

har = ((Ixx.*Iyy) - Ixy.^2) - 0.05.*(Ixx + Iyy).^ 2;

har = imfilter(har, f);

har(1:feature_width*2, :) = 0;

har(end - feature_width*2:end, :) = 0;

har(:, 1:feature_width*2) = 0;

har(:, end - feature_width*2:end) = 0;

har_max = colfilt(har, [feature_width/2 feature_width/2], 'sliding', @max);

har = har.*(har == har_max).* (har > 5e-6);

[y, x] = find(har > 0);

end

Out of the best 100 points, 90 points are true matches for the Notre dame image pair.

Out of all 214 matches, 173 are true matches for the Notre dame image pair.

Mount Rushmore

Out of best 100 matches, 94 are true matches.

Episcopal Gaudi

Out of 22 matches, 4 are true matches.

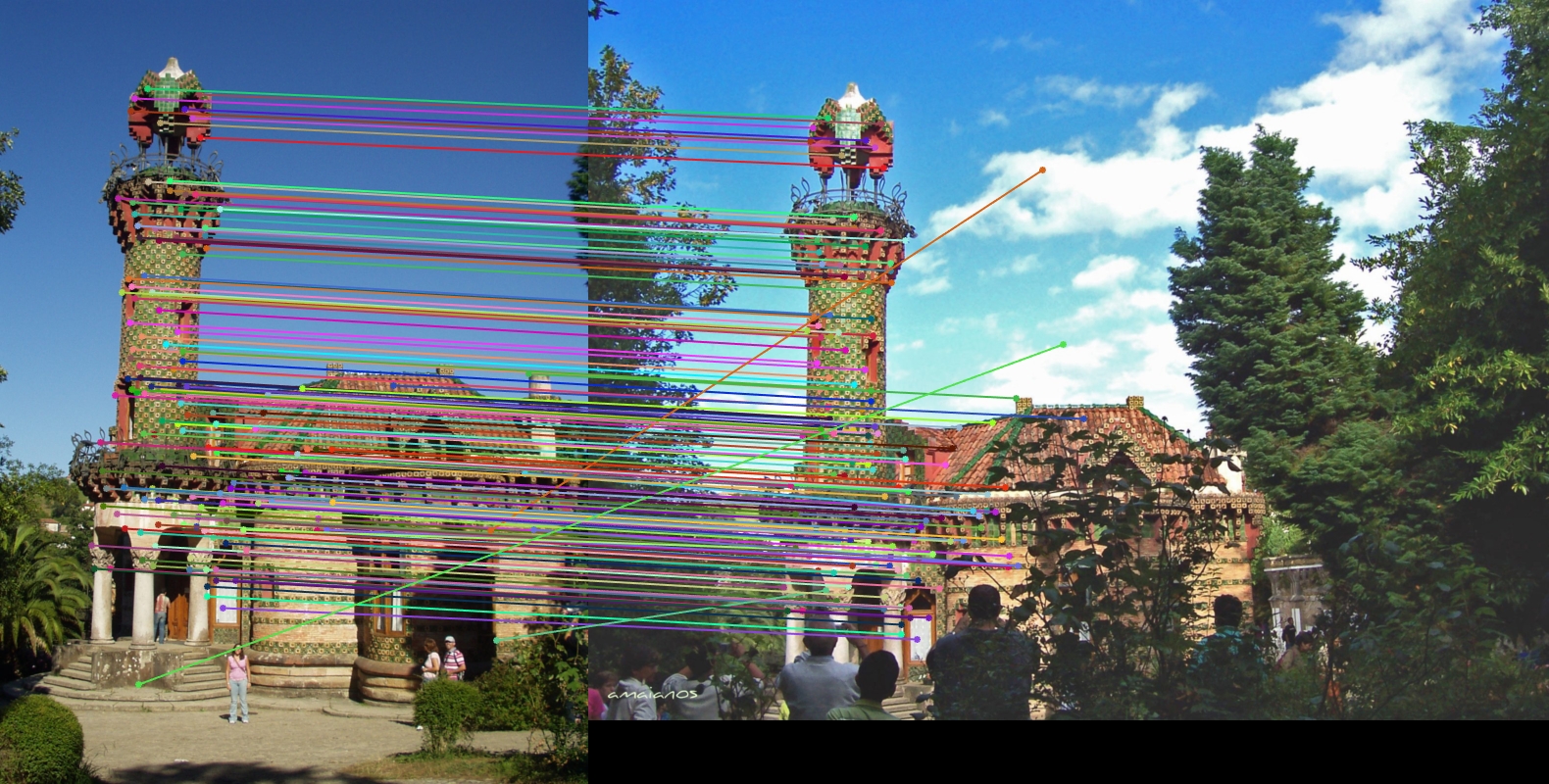

Capricho Gaudi

This pair of Capricho Gaudi works well. The matches are indicated by arrows.