Project 2: Local Feature Matching

In this project, features were matched between two images of the same object taken from slightly different perspectives. The basic algorithm has three steps:

- Find interest points in both images

- Describe each interest point with a local feature

- Match the local features

Interest Points

Interest points were found using an implementation of the Harris corner detector. The image was first blurred, and the gradients in the x and y directions were then computed. The Harris corner response was evaluated with an alpha value of 0.06, and non-maximum suppression was applied to the result so that only local maxima were kept as corners. Upwards of 2000 interest points were detected on some images, which improved overall accuracy compared with using hand-picked interest points.
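The detector described above can be sketched roughly as follows. This is a minimal NumPy illustration, not the project's actual code: the function names, the Gaussian `sigma`, the suppression `radius`, and the relative threshold are all assumptions; only the alpha value of 0.06 comes from the description above.

```python
import numpy as np

def gaussian_blur(image, sigma=1.0):
    """Separable Gaussian blur (zero padded). sigma is an assumed value."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    kernel /= kernel.sum()
    smooth = lambda v: np.convolve(v, kernel, mode="same")
    rows = np.apply_along_axis(smooth, 1, image)   # filter along x
    return np.apply_along_axis(smooth, 0, rows)    # filter along y

def harris_response(image, alpha=0.06, sigma=1.0):
    """Harris response R = det(M) - alpha * trace(M)^2 at every pixel."""
    smoothed = gaussian_blur(image.astype(float), sigma)
    iy, ix = np.gradient(smoothed)                 # gradients in y and x
    # Entries of the structure tensor M, averaged over a Gaussian window.
    sxx = gaussian_blur(ix * ix, sigma)
    syy = gaussian_blur(iy * iy, sigma)
    sxy = gaussian_blur(ix * iy, sigma)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    return det - alpha * trace ** 2

def non_max_suppression(response, radius=1, rel_threshold=0.01):
    """Keep pixels that are the maximum of their neighborhood and exceed
    an assumed fraction of the strongest response."""
    h, w = response.shape
    padded = np.pad(response, radius, mode="constant",
                    constant_values=-np.inf)
    local_max = np.full((h, w), -np.inf)
    for dy in range(2 * radius + 1):
        for dx in range(2 * radius + 1):
            local_max = np.maximum(local_max, padded[dy:dy + h, dx:dx + w])
    keep = (response == local_max) & (response > rel_threshold * response.max())
    ys, xs = np.nonzero(keep)
    return xs, ys
```

With alpha = 0.06, edges (one dominant gradient direction) produce a negative response and flat regions produce a response near zero, so only corner-like regions survive the threshold.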

Local Features

SIFT-like features were computed at each interest point. The image was first blurred and its gradients computed. A patch around each interest point was divided into a 4x4 grid of cells, and for each cell an 8-bin histogram was filled with the gradient directions of its pixels. Concatenating the 16 histograms gives a 128x1 feature vector. At first, normalized patches were used as features, but switching to SIFT-like features improved matching accuracy by upwards of 20%.
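As a rough illustration of the 4x4 grid of 8-bin histograms, the sketch below builds one descriptor from precomputed gradients. Several details are assumptions rather than the project's stated choices: the 16-pixel patch width, weighting each histogram vote by gradient magnitude, the final unit-length normalization, and the function name itself. It also assumes the interest point lies at least half a patch away from the image border.

```python
import numpy as np

def sift_like_descriptor(image, x, y, patch_width=16):
    """Return a 128-element descriptor for the interest point (x, y).

    Assumes `image` has already been blurred and that (x, y) is at least
    patch_width // 2 pixels from every border.
    """
    gy, gx = np.gradient(image.astype(float))
    magnitude = np.hypot(gx, gy)
    orientation = np.arctan2(gy, gx)               # radians in [-pi, pi]

    half = patch_width // 2
    cell = patch_width // 4
    mag = magnitude[y - half:y + half, x - half:x + half]
    ori = orientation[y - half:y + half, x - half:x + half]

    # Map each angle to one of 8 orientation bins (0..7).
    bins = np.floor((ori + np.pi) / (2 * np.pi) * 8).astype(int) % 8

    histograms = np.zeros((4, 4, 8))
    for row in range(4):
        for col in range(4):
            rs = slice(row * cell, (row + 1) * cell)
            cs = slice(col * cell, (col + 1) * cell)
            # Magnitude-weighted vote per bin (the weighting is an assumption).
            histograms[row, col] = np.bincount(
                bins[rs, cs].ravel(),
                weights=mag[rs, cs].ravel(),
                minlength=8,
            )

    descriptor = histograms.ravel()                # 4 * 4 * 8 = 128 values
    norm = np.linalg.norm(descriptor)
    return descriptor / norm if norm > 0 else descriptor
```

Calling `sift_like_descriptor(blurred, x, y)` for every detected interest point and stacking the results gives an N x 128 feature matrix that can be passed to the matching step.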

Feature Matching

Each feature was matched to its nearest neighbor, measured by simple Euclidean distance. The confidence of each match was computed as 1 minus its nearest-neighbor distance ratio (the distance to the nearest neighbor divided by the distance to the second-nearest neighbor). The matches were sorted by confidence and the top 100 were kept.
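The matching step can be sketched in a few lines of NumPy. The function name, argument names, and the small constant guarding against division by zero are assumptions made for illustration; the confidence definition and the top-100 cutoff follow the description above. The sketch requires at least two features in the second image.

```python
import numpy as np

def match_features(features1, features2, top_k=100):
    """Match each row of features1 to its nearest row of features2.

    Returns (matches, confidences): matches is a K x 2 array of
    (index in features1, index in features2), sorted by confidence.
    """
    # Pairwise squared Euclidean distances via |a|^2 + |b|^2 - 2 a.b,
    # which avoids building an N x M x 128 intermediate array.
    sq = (
        (features1 ** 2).sum(axis=1)[:, None]
        + (features2 ** 2).sum(axis=1)[None, :]
        - 2.0 * features1 @ features2.T
    )
    dists = np.sqrt(np.maximum(sq, 0.0))

    order = np.argsort(dists, axis=1)
    rows = np.arange(len(features1))
    nearest, second = order[:, 0], order[:, 1]

    # Nearest-neighbor distance ratio: small when the best match is much
    # closer than the runner-up, so 1 - ratio acts as a confidence.
    ratio = dists[rows, nearest] / np.maximum(dists[rows, second], 1e-12)
    confidence = 1.0 - ratio

    ranked = np.argsort(-confidence)[:top_k]
    matches = np.stack([ranked, nearest[ranked]], axis=1)
    return matches, confidence[ranked]
```

Features that fall on repeated structures tend to have a second-nearest neighbor almost as close as the nearest one, so their ratio approaches 1 and their confidence approaches 0, which pushes them toward the bottom of the ranking.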

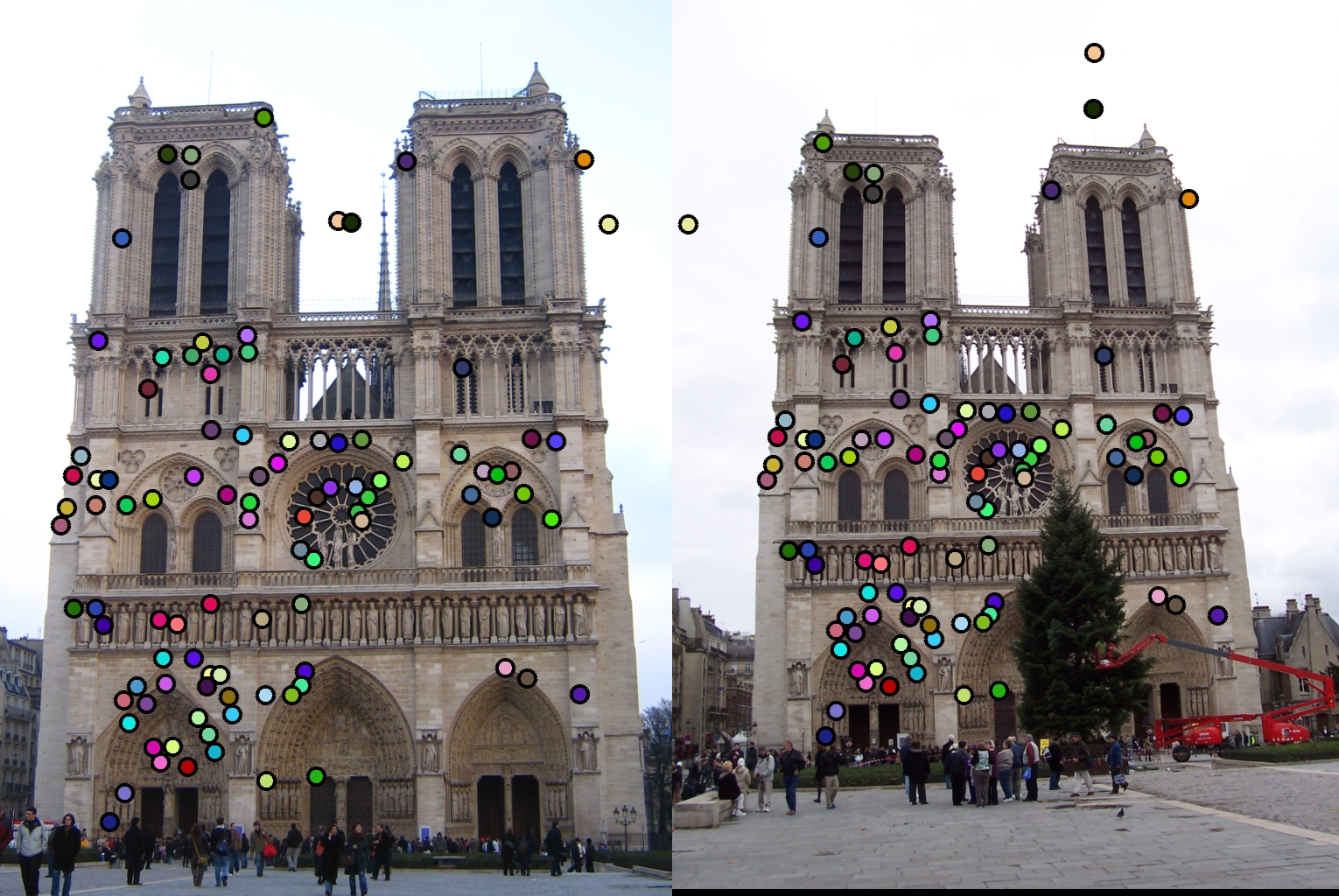

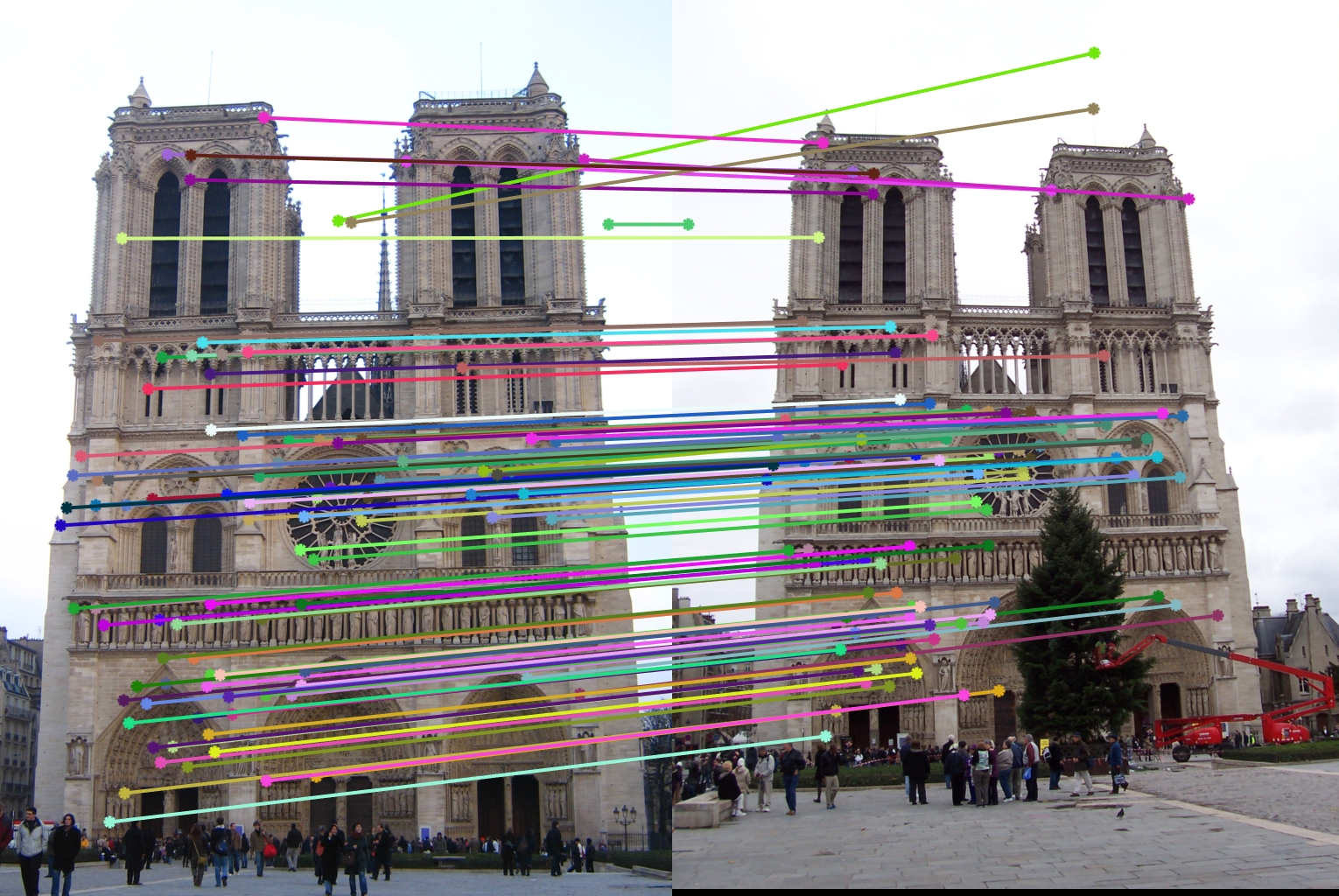

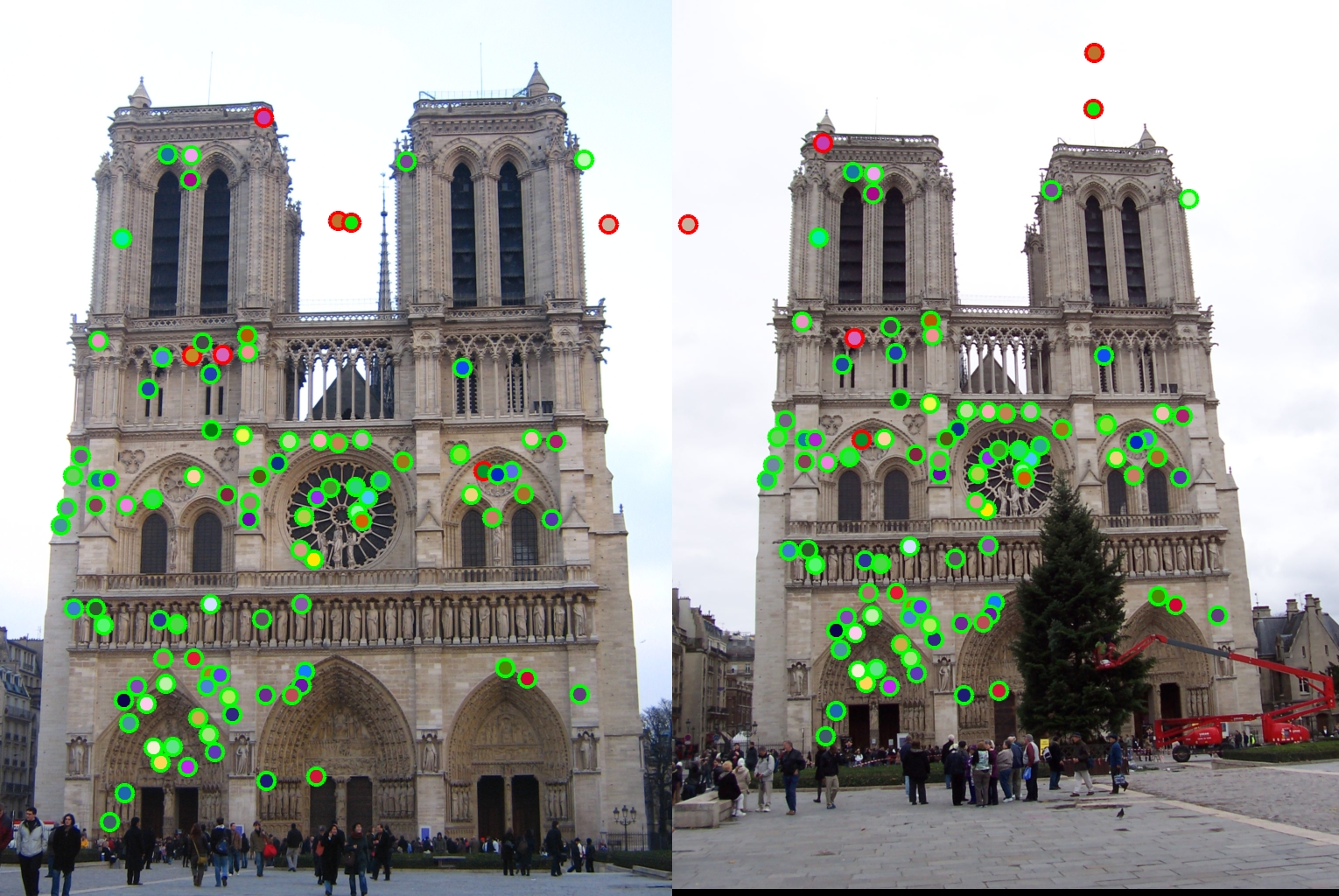

Notre Dame

An accuracy of 93% was achieved with basic feature matching.

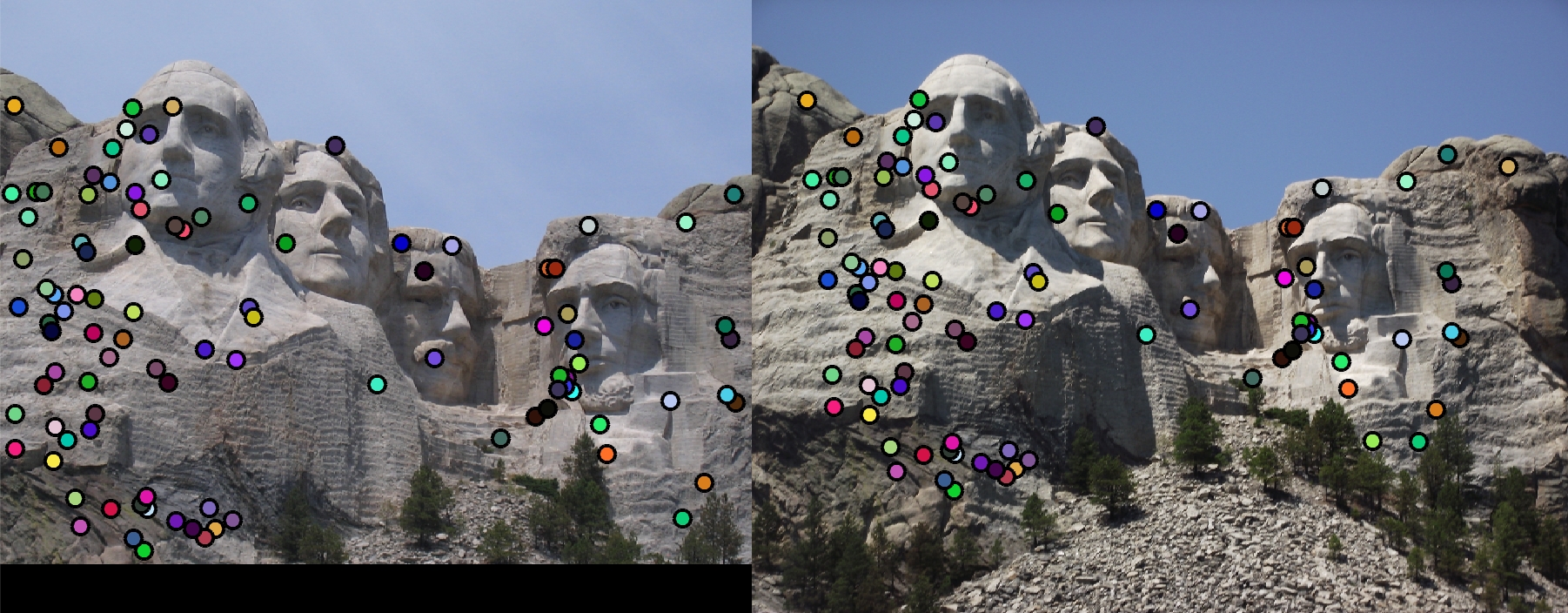

Mount Rushmore

An accuracy of 93% was achieved with basic feature matching.

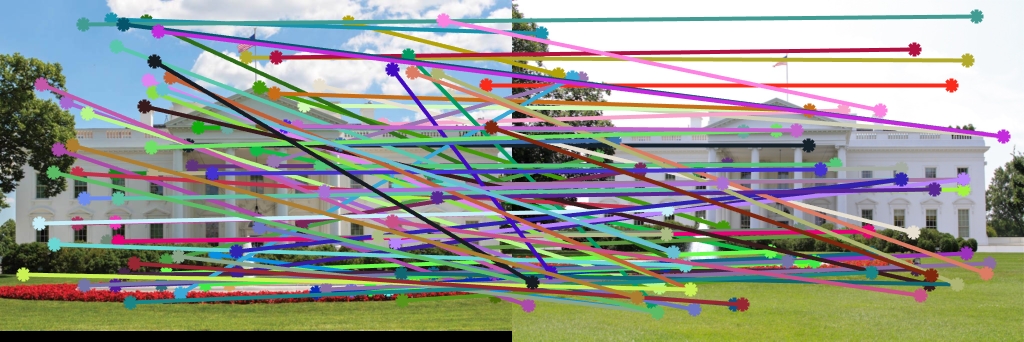

White House

Although no true accuracy figure is available for these images, the visualization shows that many points, though not all, were accurately matched. This image pair is challenging because it contains several repeating patterns, such as the windows and columns, that were matched incorrectly.