Project 2: Local Feature Matching

The goal of this project is matching the local features in two images. This algorithm used in this project is a simplified SIFT-like approach which primarily includes the following steps. First, corner keypoints are detected as local features on both images using Harris corner detector and non-maximum suppression is used to reduce a connected area to one pixel. Second, feature descriptors are developed by using normalized patches or SIFT descriptors for all detected keypoints. Finally, by comparing Euclidean distance between each pair of keypoints from the two images, the most confident mateches are detected using Nearest Neighbor Distance Ratio (NNDR) algorithm.

Harris Corner Detector

The Harris corner dector consists of the following steps. The first step is obtain the gradients of images. To reduce the noise effect, Gaussian filter is used to blur images first and then Matlab function imgradientxy() is used to obtain the gradients in x- and y- directions, which are denoted as Ix and Iy. The function imgradientxy() essentially uses Sobel filter to calculate the image gradients. Then, square of image gradients, including Ix2, Iy2, and IxIy are computed. Finally, a window function, which is a Gaussian function g(σ), is convoluted with the results in the previous step to obtain g(Ix2), g(Iy2), and g(IxIy). Therefore, the cornerness function is given by [Harris, 1988]:

Har=g(Ix2)g(Iy2)-[g(IxIy)]2-α[g(Ix2)+g(Iy2)]2

where α is a parameter between 0.04 and 0.06. Then, a threshold is used to pick out all corner candidates. The Matlab function bwconncomp() is used to check 8-connected neighborhood and find all connected regions. Within each connected region, the pixel with maximum intensity is remained and all other pixels are suppressed. In addition, corner points close to the image boundaries are suppressed to avoid spurious points near the boundaries.

Local Feature Descriptor

The local feature descriptor is developed to describe the information about the detected keypoint. The first local feature descriptor implemented in this project is normalized patch. In this algorithm, the intensity in a square window around a keypoint is stored in a vector and then the vector is normalized to form a local feature descriptor. The window size is 16 by 16, which forms a 256 by 1 vector.

The second local feature descriptor implemented in this project is a SIFT descriptor, which stores the information of gradient magnitude and orientation in a square window around a keypoint. This algorithm is capable of taking keypoint scale and orientation into account to develop a scale- and orientation-invarient feature matching system, but they are not implemented in this project. In this project, we calculate the gradient magnitude and orientation in a 16 by 16 window with the keypoint as one of the four center pixels. The window is divided into 4 by 4 cells with 8 bins repsensenting 8 orientations in each cell. A gaussian weight function is applied on the gradient magnitude within the 16 by 16 window to give more weight to the pixel closer to the center. Then the the gradient magnitude in each grid is contributed to 8 bins including 2 nearest orientations and 4 nearest cell. In this project, only the local cell is considered. Therefore, a 128 by 1 vector is formed and then normalized as a local feature descriptor.

Feature Matching

After obtaining all feature points with their descriptors in both images, the Euclidean distances from each feature point in one image to all feature points in the other image are computed, and the two keypoints with the shortest distance are regarded as a pair of matches. Then, a shreshold is used to eliminate those feature matches with their shortest distances larger than the shreshold. Afterwards, Nearest Neighbor Distance Ratio (NNDR) is used to sort all the matches. This algorithm calculates the ratio between the distance to the first nearst neighbor (NN1) and the distane to the second nearest neigbor (NN2). Those matches with lower ratio have higher confidence, which yields:

Conf=1-NN1/NN2

Where Conf is the confidence for one pair of feature match. By sorint the confidences, the feature matching system can output the most confident mateches.

Results

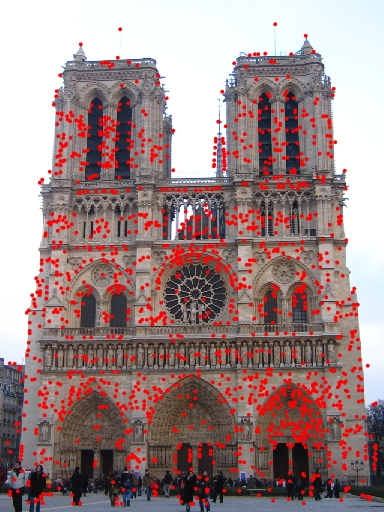

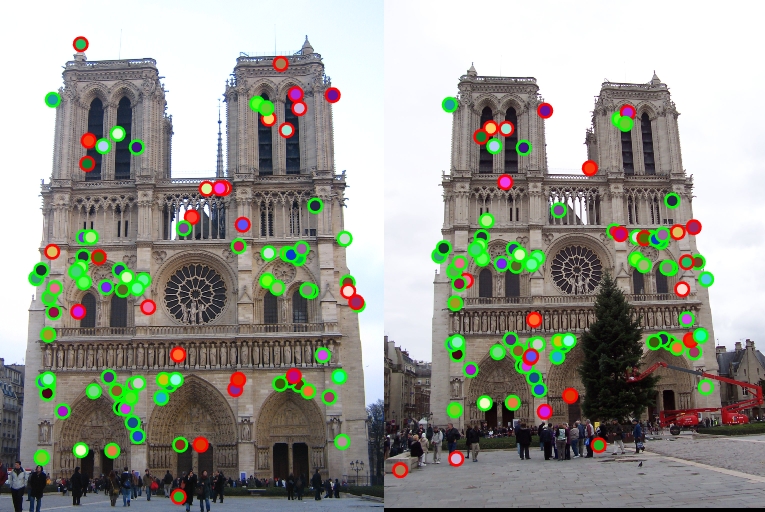

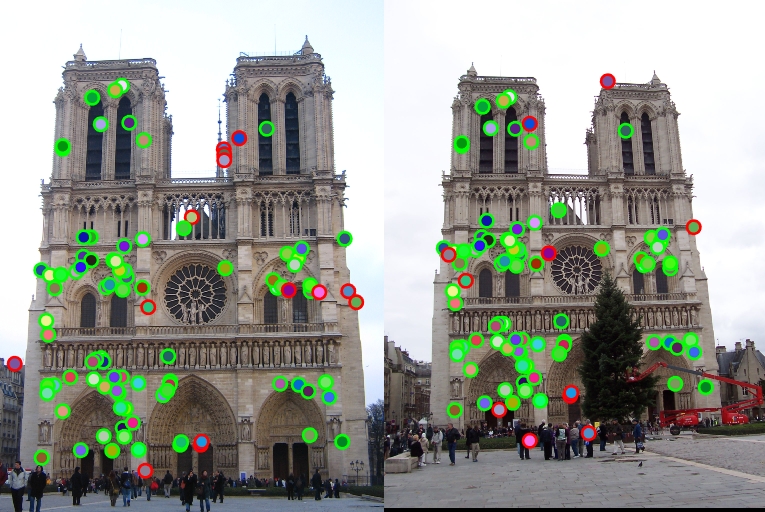

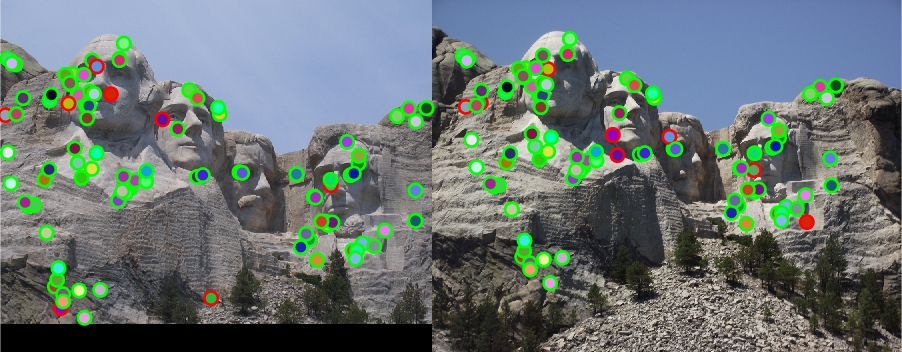

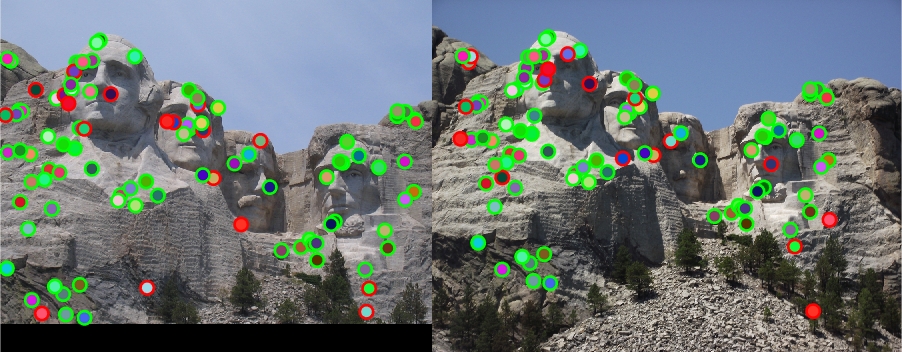

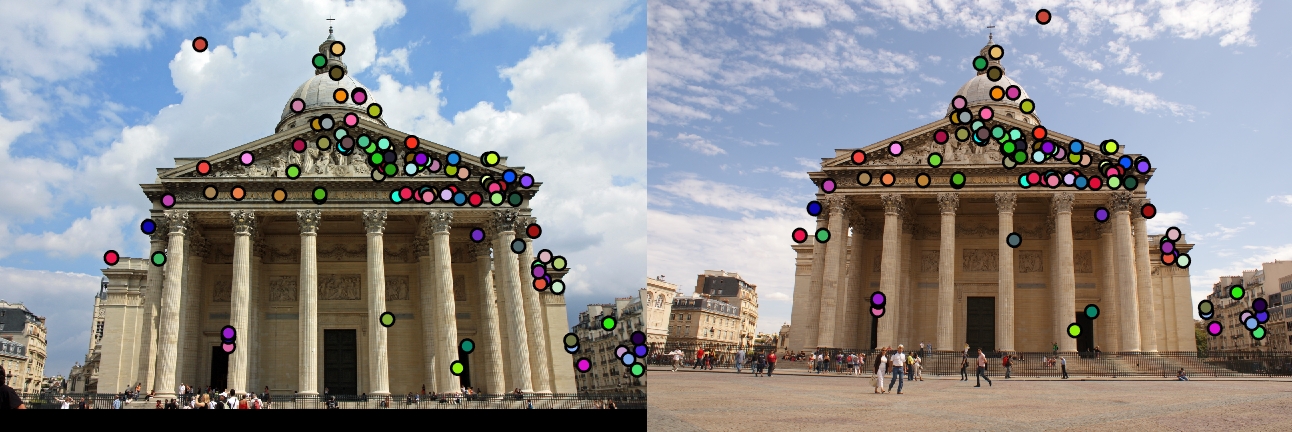

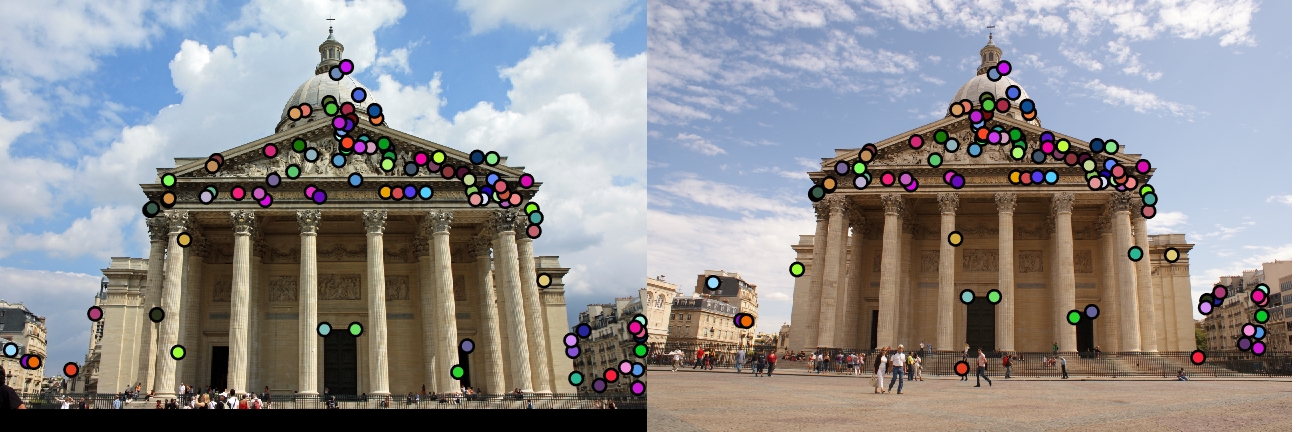

In Figs. 1(a) and 1(b), different Harris cornerness thresholds are tried to see their influence on corner detection. It is found that if a very small threshold is used, many points in the flat region are picked as corner points. This causes many real corner points to be connected by these fake corner points and then suppressed after non-maximum suppresion. If the threshold is too large, many weak corner points are not picked, so the total number of keypoints also decreases. In Figs. 2(a) and 2(b), the matching correctness by using SIFT descriptors is greatly improved, compared with the correctness by using normalized patches, showing the advantage of SIFT descriptors in feature matching. In Figs. 3(a) and 3(b), the mateching correctness of normalized patches is higher than SIFT descriptors. This is probably because that the Mount Rushmore images have more detailed information in their texture, which can be coded in normalized patches rather than SIFT descriptors. An extra pair of Pantheon Paris images are tested using normalized pateches and SIFT descriptors and their results are shown in Figs. 4(a) and 4(b) without ground truth to evaluate their correctness.

(a) (b)

(b) (c)

(c)

|

|

Fig. 1 (a) 1253 keypoints detected by setting Harris cornerness threshold as 1e-6. (b) 1943 keypoints detected by setting Harris cornerness threshold as 1e-5. (c) 1594 keypoints detected by setting Harris cornerness threshold as 1e-4. |

(a) (b)

(b)

|

|

Fig. 2 (a) Feature matching results for Notre Dame images using normalized patches: 74 total good matches, 26 total bad matches, and 74% accuracy. (b) Feature matching results for Notre Dame images using SIFT descriptors: 85 total good matches, 15 total bad matches, and 85% accuracy. |

(a) (b)

(b)

|

|

Fig. 3(a) Feature matching results for Mount Rushmore images using normalized patches: 92 total good matches, 8 total bad matches, and 92% accuracy; (b) Feature matching results for Mount Rushmore images using SIFT descriptors: 82 total good matches, 18 total bad matches, and 82% accuracy. |

(a) (b)

(b)

|

|

Fig. 4 (a) Feature matching results for Pantheon Paris images using normalized patches; (b) Feature matching results for Pantheon Paris images using SIFT descriptors. |