Project 2: Local Feature Matching

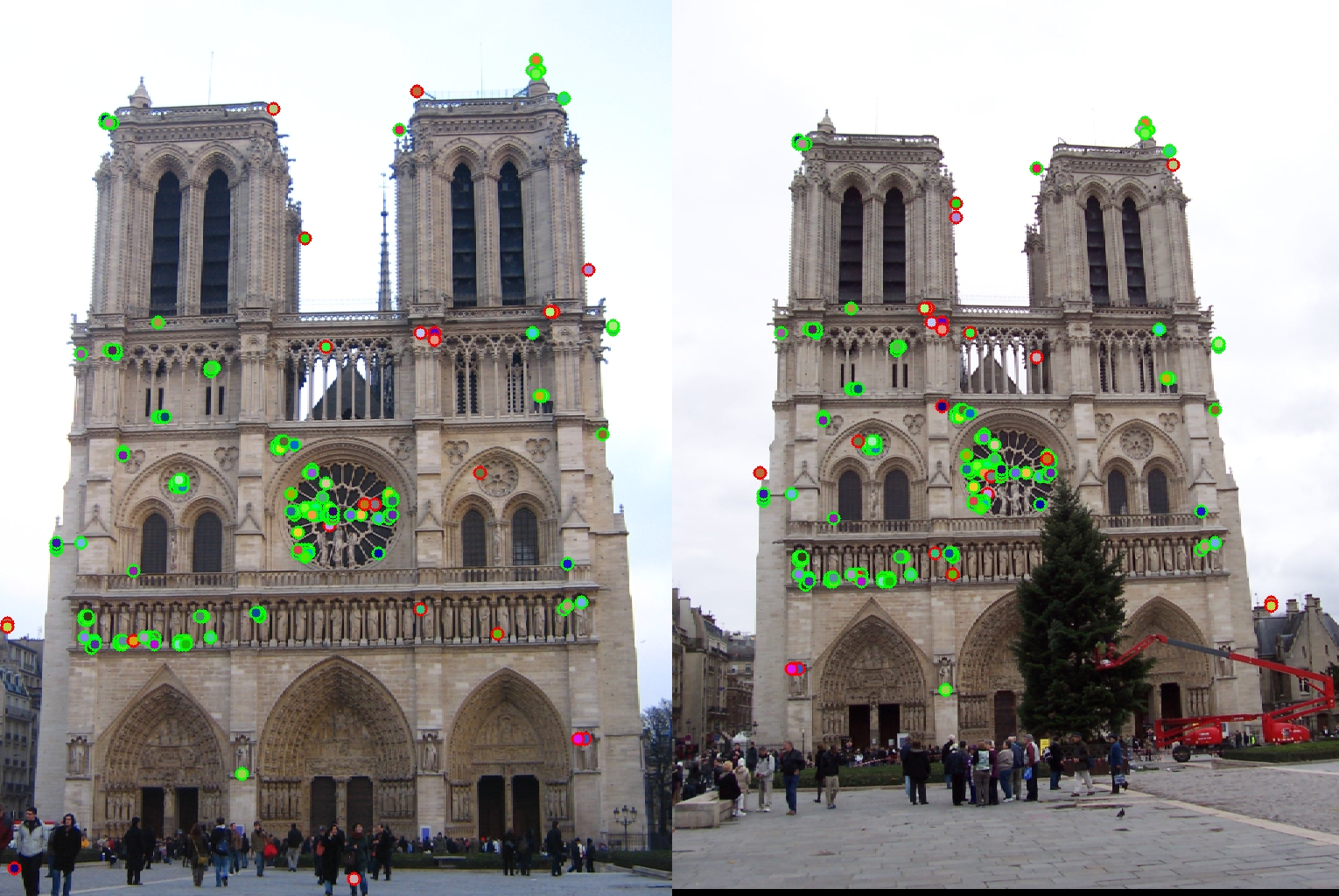

The best I managed on Notre Dame was 85.19% on 162 matches. Here's my eval.jpg.

As you can see, the Harris corner detector picks up some "corners" along the edges of ole Damey, particularly along the towers. I don't have a satisfying explanation for this. Maybe my parameters need some tweaking, especially the "threshold" parameter mentioned below.

Let A be the second-moment matrix (built from products of the image gradients) averaged over a patch. The Harris detector computes the quantity det(A) - a*trace(A)^2, where a is some fixed parameter. If this value is a large positive number, then the center of the patch is an interest point. Otherwise it is not. Of course, the threshold for what counts as a "large" positive number must be specified. This threshold is the most important parameter as far as accuracy and number of matches go. Setting it too low can result in uninteresting points being called interesting, and therefore (very) low accuracy. Setting it too high can result in interesting points being called uninteresting, and therefore a small number of matches. The following table illustrates this:

| Threshold | # Matches | Accuracy |
| --- | --- | --- |
| 2 × 10⁻⁷ | 42 | 0.00% |
| 4 × 10⁻⁷ | 34 | 82% |

As you can see, the lower threshold gets laughably poor accuracy.
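To make the role of the threshold concrete, here is a minimal NumPy sketch of a Harris-style response with a threshold and a pixel stride. It is not the code used for this report: the function names, the `alpha`, `radius`, and `stride` parameters, and the use of a plain box window instead of Gaussian weighting are all assumptions made for illustration.

```python
import numpy as np

def box_blur(img, radius):
    """Average over a (2*radius+1)^2 window via two 1-D convolutions."""
    k = np.ones(2 * radius + 1) / (2 * radius + 1)
    padded = np.pad(img, radius, mode="edge")
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)

def harris_response(img, alpha=0.05, radius=2):
    """det(A) - alpha * trace(A)^2 at every pixel (alpha, radius are assumed values)."""
    iy, ix = np.gradient(img.astype(float))   # derivatives along rows, then columns
    a11 = box_blur(ix * ix, radius)           # entries of the averaged matrix A
    a12 = box_blur(ix * iy, radius)
    a22 = box_blur(iy * iy, radius)
    det = a11 * a22 - a12 ** 2
    trace = a11 + a22
    return det - alpha * trace ** 2

def interest_points(img, threshold, stride=1, **kwargs):
    """Return (x, y) positions whose response exceeds the threshold.

    stride > 1 only examines every stride-th pixel in each direction,
    which is faster to scan but can skip true corners.
    """
    response = harris_response(img, **kwargs)
    sampled = response[::stride, ::stride]
    ys, xs = np.nonzero(sampled > threshold)
    return np.stack([xs * stride, ys * stride], axis=1)

if __name__ == "__main__":
    # Toy image: a bright square on a dark background.
    img = np.zeros((40, 40))
    img[10:30, 10:30] = 1.0
    print(len(interest_points(img, threshold=1e-3)), "points at stride 1")
    print(len(interest_points(img, threshold=1e-3, stride=4)), "points at stride 4")
```

On the toy square, the response is positive at the corners, negative along the straight edges, and zero in flat regions, so raising the threshold can only shrink the set of detected points and lowering it can only grow that set.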

As you have probably noticed (and are probably annoyed by), my code runs slowly. It takes minutes. Believe it or not, that code is actually the sped-up version. I sped it up by looking for interest points at every 4th pixel, rather than at every pixel. The numbers in this report (except for the eval.jpg images) come from searching every 8th pixel. This speed tradeoff can only reduce the accuracy. For example, suppose we detect a corner on the left tower of one Dame, but miss the corresponding feature on the other Dame (because of the skipping). Then the nearest-neighbor distance ratio test does not work properly, and we may match with the symmetric feature on the other tower of the other image. But the code runs faster, and is still fairly accurate.
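The failure case described above is easier to see with the ratio test written out. This is a minimal sketch, assuming descriptors are rows of a NumPy array; the function name `match_ratio_test` and the `max_ratio` cutoff of 0.8 are hypothetical choices, not the values used for this report.

```python
import numpy as np

def match_ratio_test(desc1, desc2, max_ratio=0.8):
    """Match each row of desc1 to its nearest row of desc2.

    A match is kept only if the nearest distance is clearly smaller than
    the second-nearest distance (ratio below max_ratio, an assumed cutoff).
    desc2 needs at least two rows.
    """
    # Pairwise Euclidean distances, shape (len(desc1), len(desc2)).
    dists = np.linalg.norm(desc1[:, None, :] - desc2[None, :, :], axis=2)
    order = np.argsort(dists, axis=1)
    rows = np.arange(len(desc1))
    nearest = dists[rows, order[:, 0]]
    second = dists[rows, order[:, 1]]
    ratio = nearest / np.maximum(second, 1e-12)  # guard against division by zero
    keep = ratio < max_ratio
    matches = np.stack([rows[keep], order[keep, 0]], axis=1)
    return matches, ratio[keep]

if __name__ == "__main__":
    desc1 = np.array([[0.0, 0.0], [10.0, 10.0]])
    desc2 = np.array([[0.1, 0.0], [5.0, 5.0], [10.0, 10.1]])
    matches, ratios = match_ratio_test(desc1, desc2)
    print(matches)
```

The test only compares candidates that were actually detected. If the true counterpart of a feature was skipped in the second image, a look-alike (such as the symmetric corner on the other tower) becomes the nearest neighbor, and it can still pass the ratio check as long as the next candidate is much farther away.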

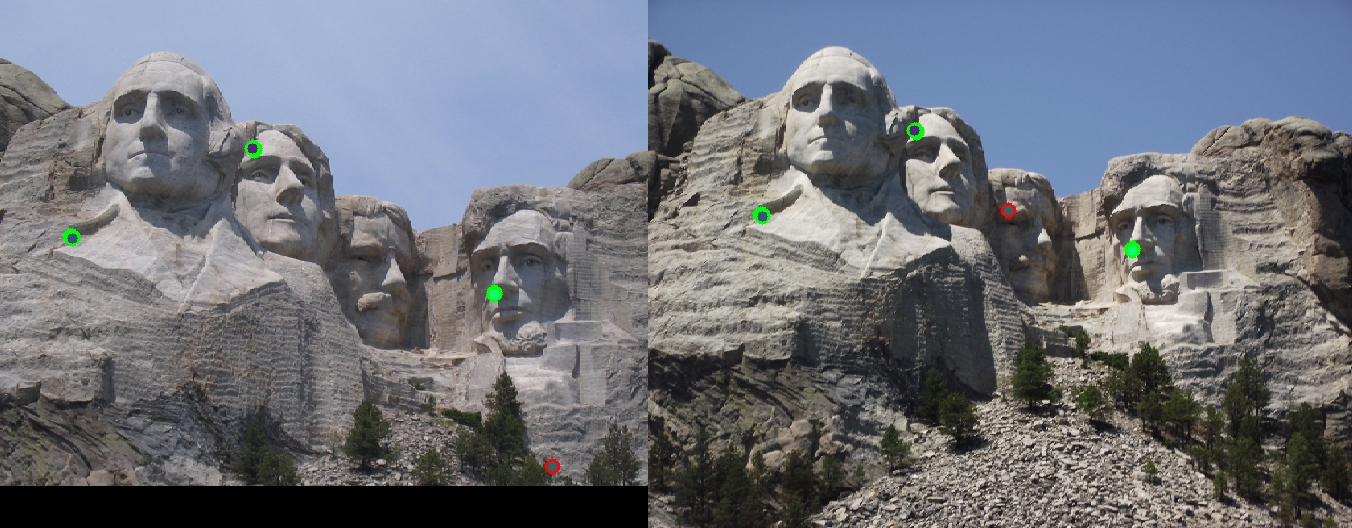

Here's my eval.jpg for Rushmore. I used exactly the same parameters as those for the Dame matching above.

It gets 89% on 9 matches. This is not a very good number of matches. It's only just enough to compute the fundamental matrix, but of course that matrix will deviate significantly from the truth. I suspect the very reserved matching is due to the different luminosities of the images and to their slightly different scales.

Here's my eval.jpg for Gaudi. I used exactly the same parameters as those for the Dame and Rushmore matchings above.

It gets 67% on 3 matches. This number of matches is essentially meaningless. I suspect the number of matches is poor for the same reasons as Rushmore. But this pair also has a drastically different orientation between the two images.