Project 2: Local Feature Matching

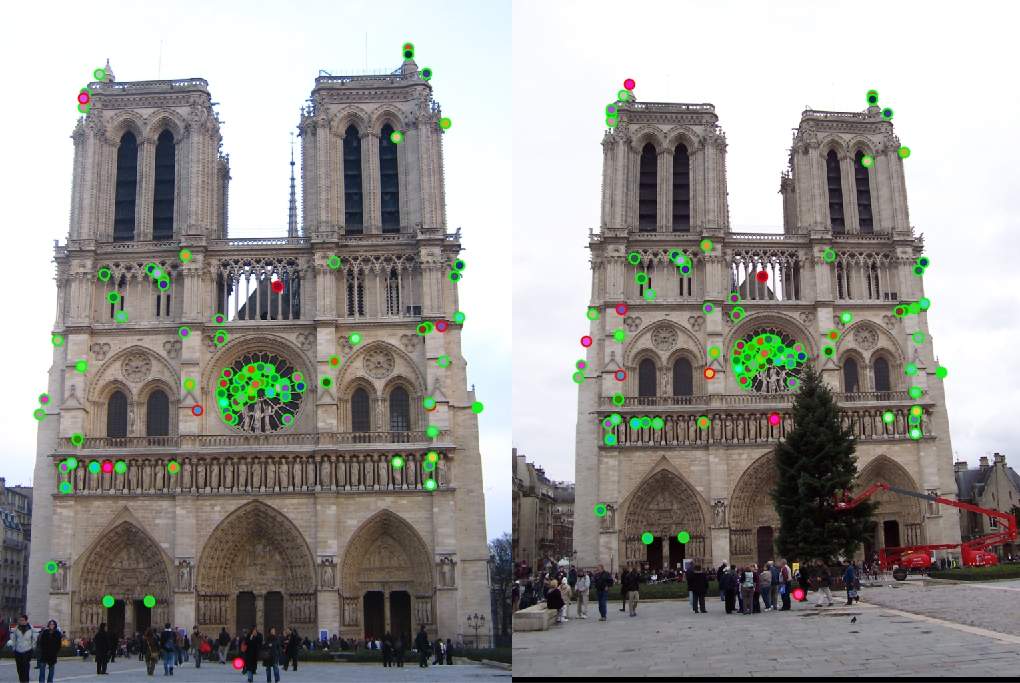

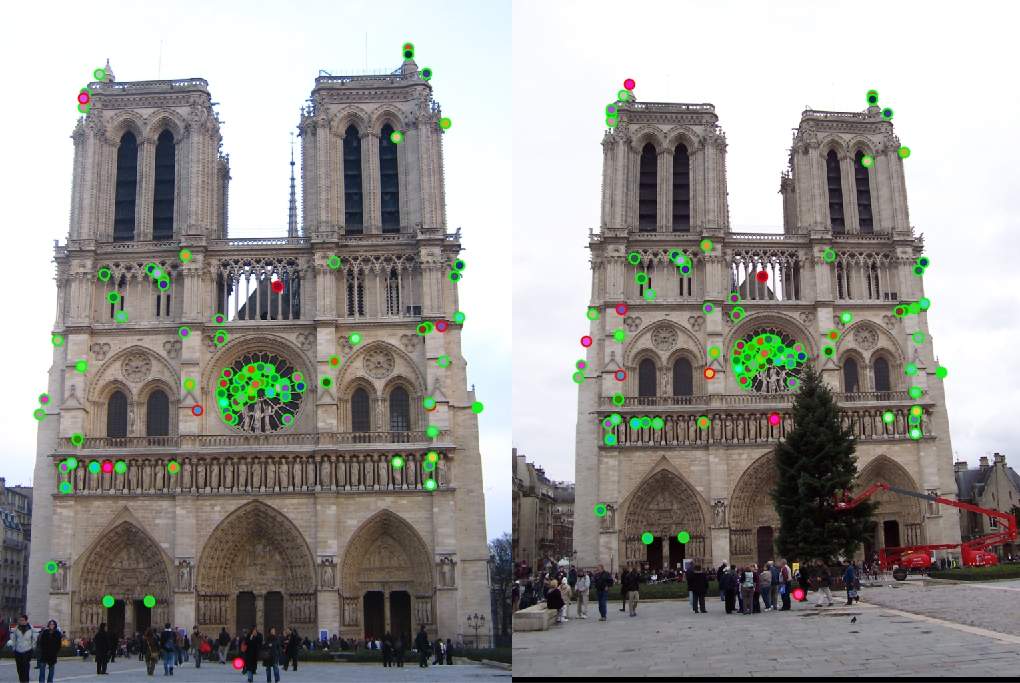

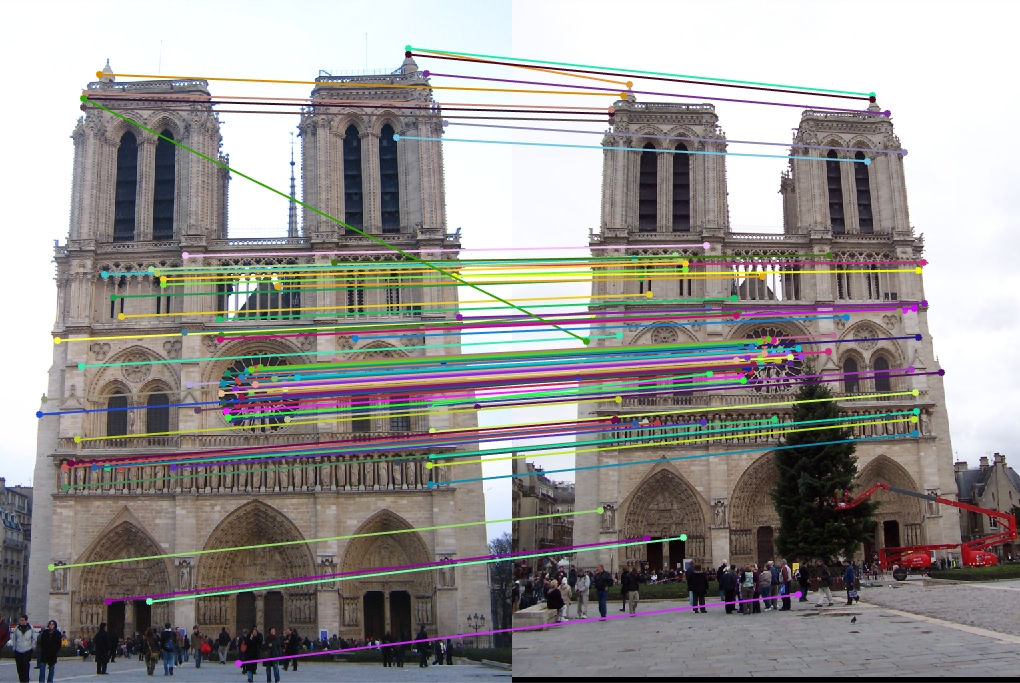

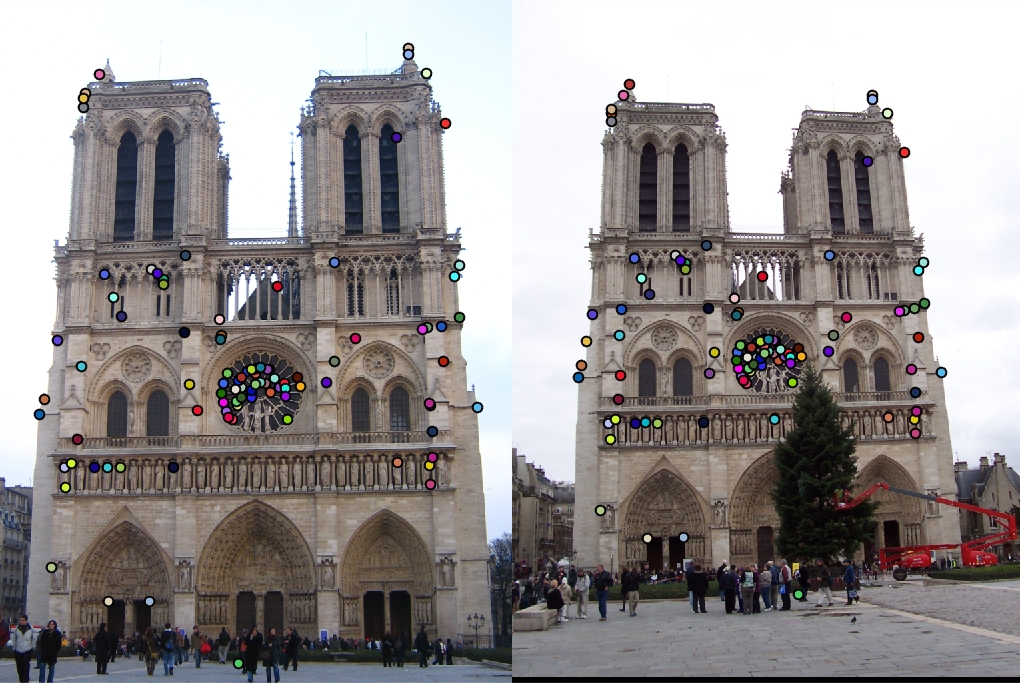

Baseline implmentation on Notre Dame. Accuracy 92%

Accuracy Improvement

When I first was able to get all parts working together, my accuracy was sometimes ranging from 60% on a good run to 30%. The first things I noticed, in order to improve the reuslts, was that I wasn't getting a lot of matches to start with! In addition, and directly related, I wasn't getting a lot of interest points either. I tried messing with the Gaussian, padding the image with 0's in order to suppress gradients near the edge, change the alpha, add a threshold and modify it - consistently testing, understanding, changing, guessing, etc.

The biggest improvements in accuracy, however, stemmed from two particular code snippets. These are the, #1: Cofiltering In GetFeatures

% COLFILT can be used to run a max() operator on each sliding window. You

% could use this to ensure that every interest point is at a local maximum

% of cornerness.

scalarMax = colfilt(scalarInterest, [feature_width/4 feature_width/4], 'sliding', @max);

This enabled each interest point to be at a local maximum - thus highering results. Messing with the dimensions to be 'maxed' also thoroughly affected accuracy. After the interest points function started to deliver 3-4k interest points instead of the original 2-3 hundered, and filtering/sorting/fine-tuning from there brought the accuracy from the grave!

#2: Only using 100 most confident matches

num_pts_to_visualize = 100;

%size(matches,1);

#3: Putting the feature array to a less than one exponent. I found that it performed well around here, although I changed it for many scenarios.

features = features .^ .6;

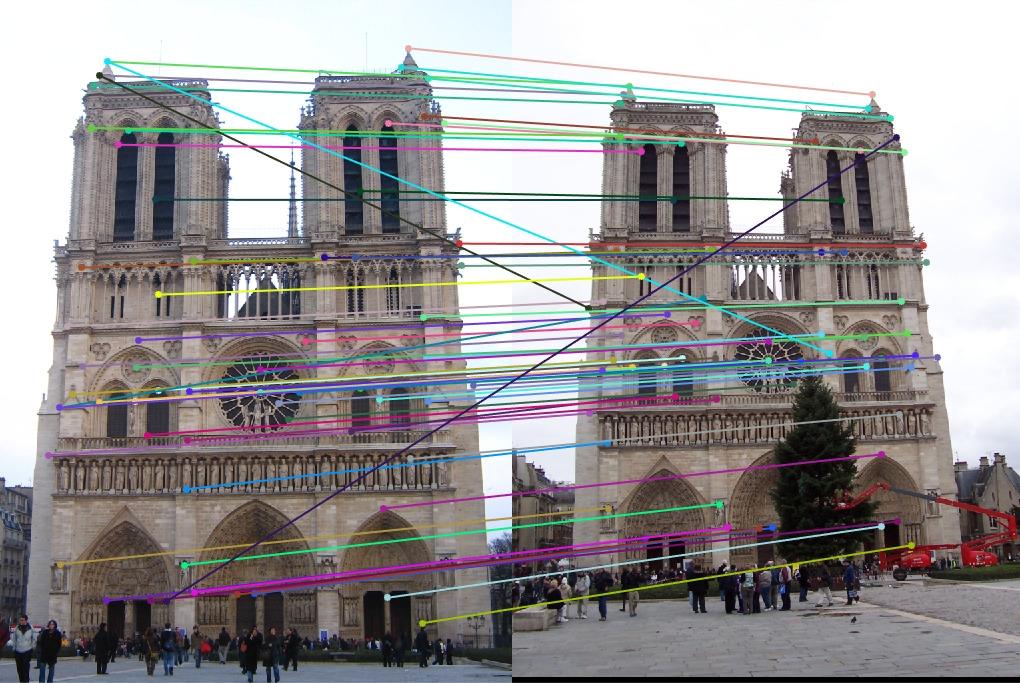

Straight Lines Indiciate Accuracy

|

Here we see in the Mount Rushmore example that mostly straight and/or parallel lines are formed between the two images with only a few strays. Let's compare that with the original cheat_interest points run of project 2.

|

Compared to the Notre Dame Version, even the cheat_interest implementation has more lines that don't have as simple and/or as defined relation/translation to the other image. The more direct lines connecting the two, the generally more accurate the points/matches are. However there are many exceptions to this general rule of thumb, such as in the cheat_interest points and even the 92% accuracy real run of Notre Dame, there are some points on the closer columns of each that corresponded that shouldn't have. I perceive that this may be more relevant in project 3.

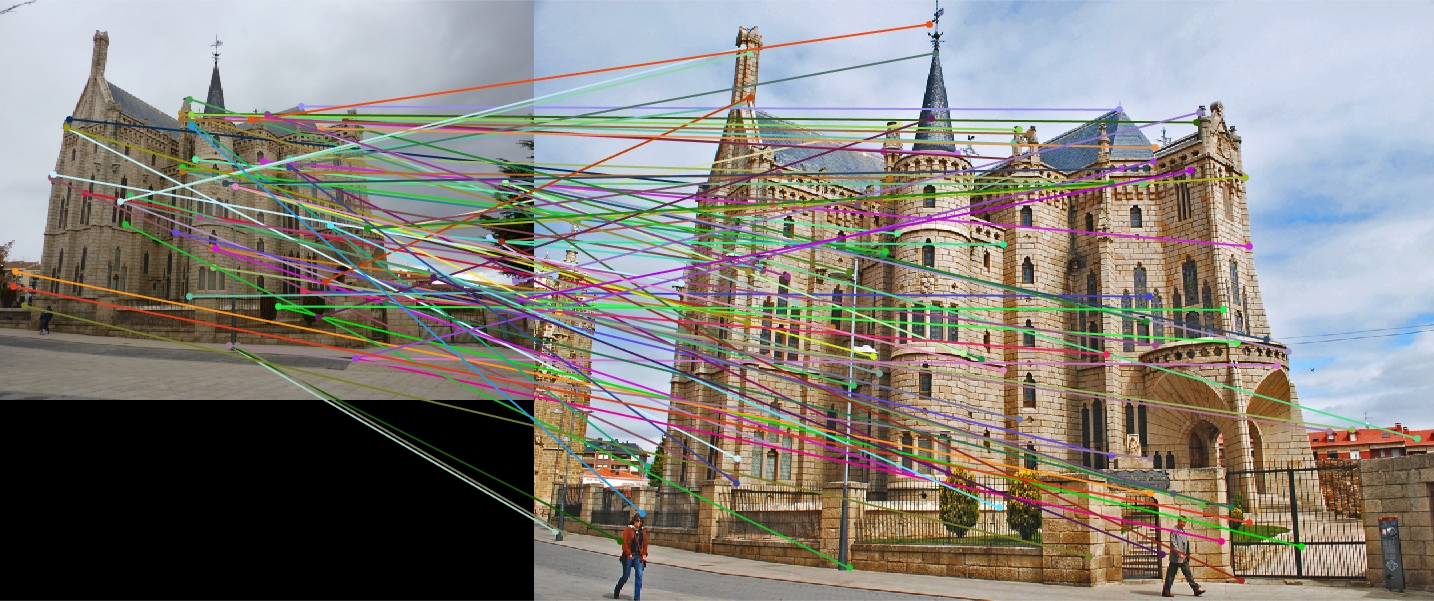

Here is how my tests on Episcopal Gaudi went, not well. I am excited to learn more about project 3 and how to incorporate geometry into making better accuracies! I would like to talk to a google engineer who works on google cars to learn about how their sensors work and which princples they apply/and/or any other creative ideas they have put to work!

|

And finally, here is an expounded version of the baseline implementation of project 2 on the Notre Dame example. Accuracy on this run was 92%.

|