Project 2: Local Feature Matching

As part of this project, we had to match two different images of an object/place. There were three steps involved, first step is to detect keypoints or corners in both the given images, second is to build feature descriptors for each keypoint and finally third step is to match different features from the two images. I implemented the basic pipeline and further added the step of using PCA to reduce feature dimensions to 32 for graduate credit. This made the third step of matching faster.Interest point detection

For interest point detection, I implemented the basic Harris corner detection algorithm. I used sobel filters to calculate x and y gradients. I used imgradient before trying this and got poor results (maximum accuracy was around 70%). Once I had the gradients, it was straightforward to calculate cornerness function. I did have to use a gaussian blur before squaring derivatives and tuning its size made some impact. I used alpha = 0.04, this wasn't making so much difference. I used different thresholds on cornerness function to get different levels of accuracy (number of corners to find potential matches). I was able to get upto 96% accuracy on Notre Dame but this required 4k points. But it was fairly easy and fast to get 92% accuracy with threshold of 0.005. Finally, I implemeted Non-max supression using colfilt and this helped with the issue of getting too many corners from a small area of the image. I settled on 3*3 window after some experimenting.

Feature Construction

Before implementing SIFT like features, I used normalized patches which gave accuracy of 72% with Harris corners and 50% with cheat points. SIFT significantly improved accuracy in both the cases. As part of SIFT implementation, I applied a gaussian blur before using imgradient to calculate gradient magnitudes and their directions at each pixel of the image. I took 16*16 neighbourhoods around each keypoint and for each 4*4 cell within that, I had a histogram of size 8. I tried weighting the counts with magnitude of gradients which didn't make much difference. So I went back to using just directions. This helped create the 128 dimensional SIFT feature. I normalized each feature using norm() function. Most of the implementation here was pretty straightforward.

Feature Matching

Matching stage was the simplest of the three stages in the basic pipeline. We compare each feature from image 1 to each feature from image 2 and use euclidean distance to find the best match. The metric d(NN1)/d(NN2) is used to calculate the confidence of each match. Finally, I sorted the matches by confidences and took the top 100 matches for evaluation. This was the slowest step in the whole pipeline especially when I wanted to use around 5k corners for higher accuracy. I later used PCA to build 32 dimensional features which made this stage faster.

Results

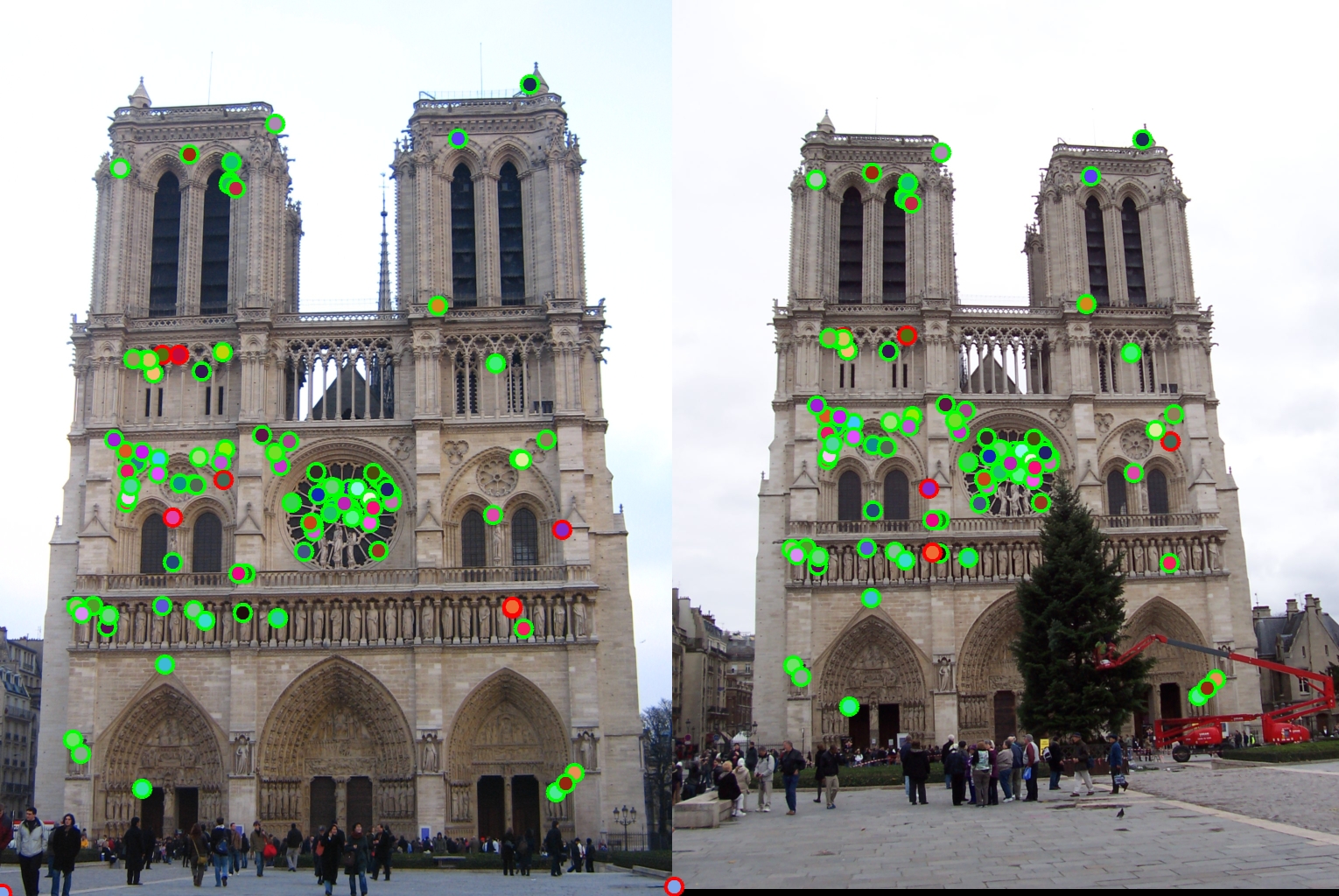

The result is obtained using threshold 0.005 and has accuracy of 92% (run time for matching 20.9)

The result is obtained using threshold 0.005 and has accuracy of 95%

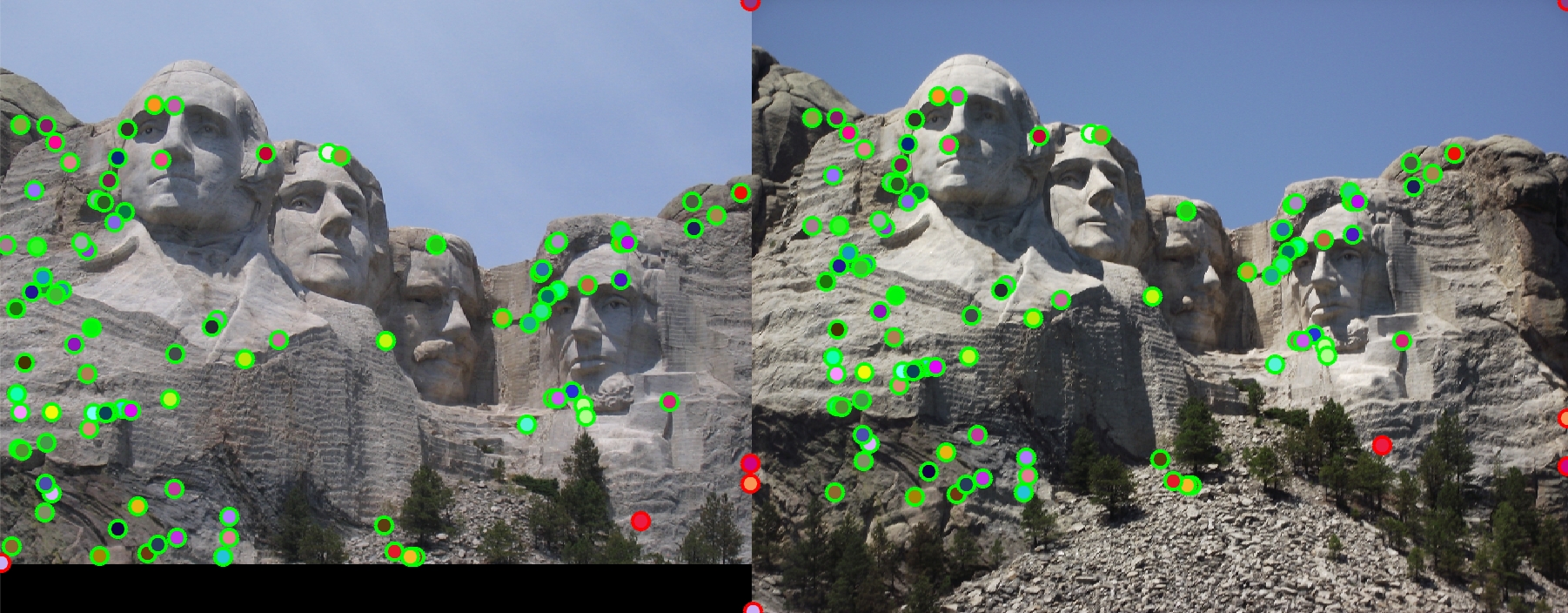

The basic pipeline performs badly on test cases involving scale, orientation changes like the case of Episcopal Gaudi.

PCA extension for graduate credit

Matching is the slowest step in the basic pipeline. Each feature is 128 dimensional, reducing it to 32 dimensions makes it significantly faster though it could potentially reduce accuracy a bit. I took a training set of 20 images from the internet and extracted about 1000 features from each of them. This set is written to 'pca_training_set.dat' file. I performed PCA and obtainted the basis matrix which I wrote it to 'pca_basis.dat'. My algorithm now simply reads the basis matrix and multiplies it with features matrix for each test case. I take only the top 32 dimensions for matching phase. I tested this on Notre Dame case. Where I was prevosuly getting 92% accuracy with threshold 0.005, I can now get 80% accuracy with smaller features. The drop in accuracy is small and expected, however run time is faster now. The increase in speed is more apparent with more than 5k points. I used tic() and toc() to calculate the run time.

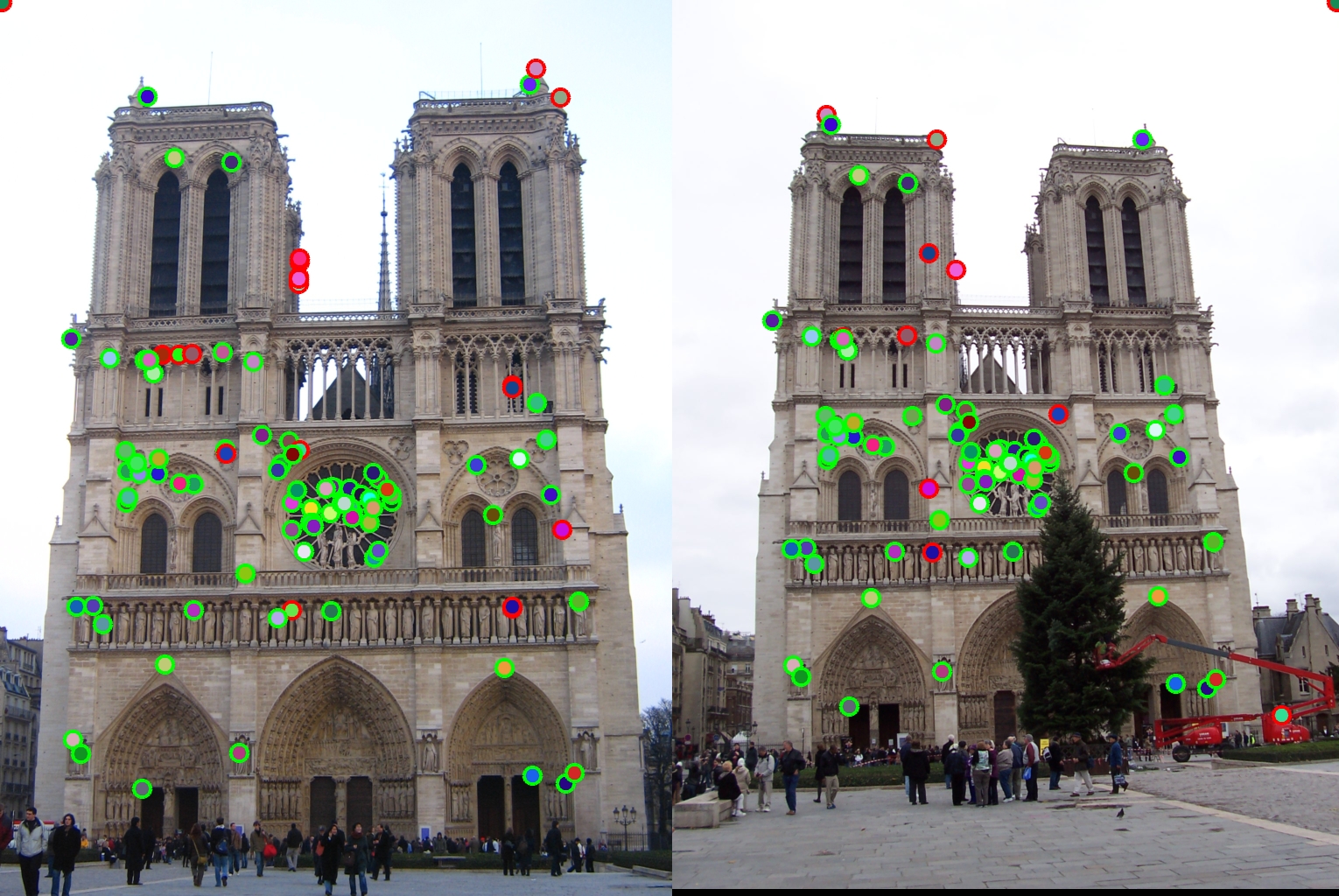

The result is obtained using 32 dimensinal features, threshold 0.005 and has accuracy of 80% (runtime for matching 19.45)