Project 2: Local Feature Matching

Introduction

Feature detection and matching are fundamental tasks in computer vision. They are necessary for many applications, such as image stitching, object recognition, and scene recognition. This project implements a simplified version of the SIFT pipeline in three main steps. First, interest points are detected in each image with a Harris corner detector. Second, a SIFT descriptor is computed for each interest point. Finally, features are matched between two images using a ratio test.

Interest Point Detection

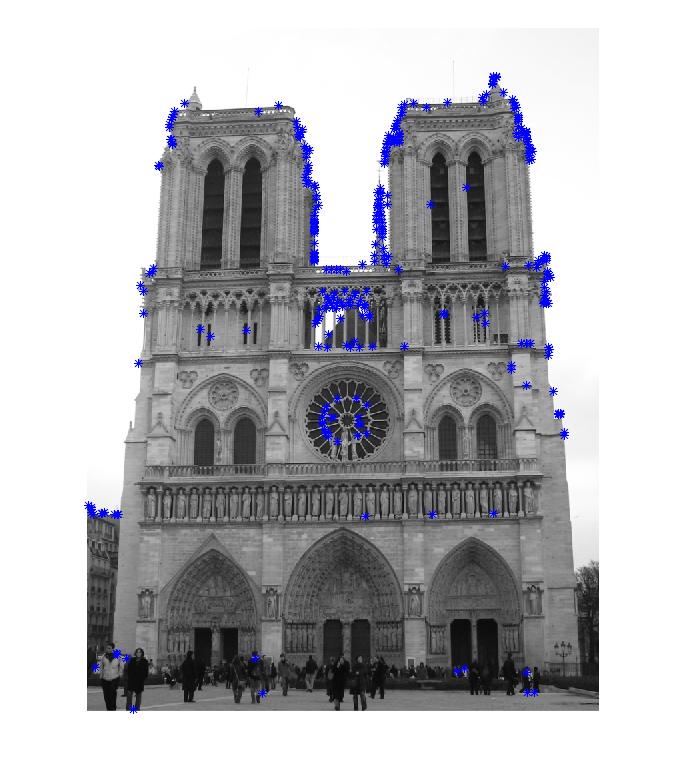

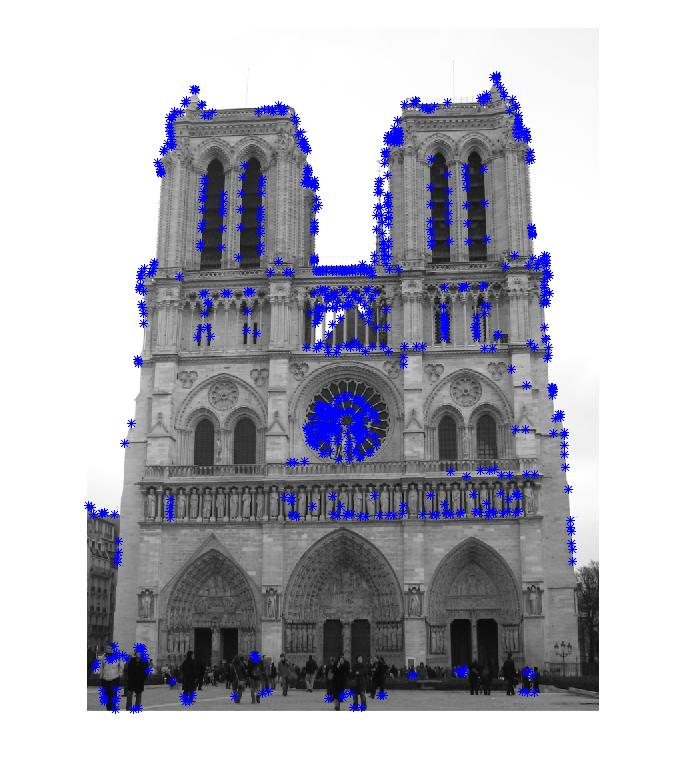

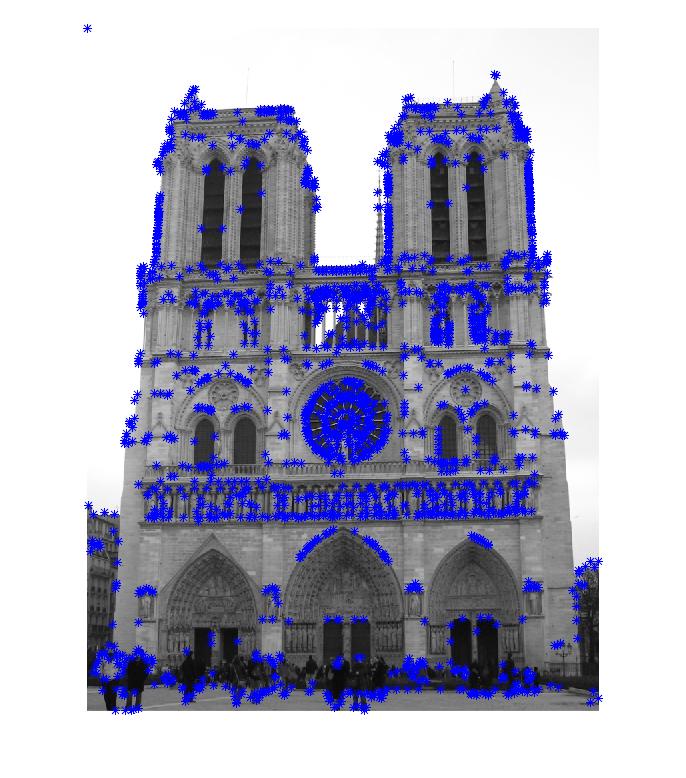

The Harris corner detector uses image gradients to assign each pixel a measure of "cornerness". In this implementation, the image is convolved with the horizontal and vertical derivatives of a Gaussian filter. The resulting gradient images are used to build a second moment matrix (auto-correlation matrix) at each pixel, which describes the local quadratic shape of the image. Interest points that can be reliably matched are then found as the local maxima of a rotationally invariant scalar measure computed from the second moment matrix. Points detected near the image borders are removed. Figure 1 shows the detected interest points at several threshold levels.


Fig. 1: Harris corners at varying thresholds (0.1, 0.05, 0.1).
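The detector described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the project's code. It assumes the standard Harris measure R = det(M) - alpha * trace(M)^2 as the rotationally invariant scalar, and its parameter values are illustrative choices: derivative scale `sigma_d`, integration scale `sigma_i`, `alpha = 0.06`, a threshold relative to the strongest response, a 16-pixel border and a 3-pixel non-maximum suppression radius. All function names are hypothetical.

```python
import numpy as np


def gaussian_1d(sigma):
    """Return a normalized 1-D Gaussian and its derivative (truncated at 3 sigma)."""
    radius = int(np.ceil(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-x**2 / (2 * sigma**2))
    g /= g.sum()
    dg = -x / sigma**2 * g  # analytic derivative of the Gaussian
    return g, dg


def sep_filter(img, kx, ky):
    """Convolve with kx along rows (x direction), then with ky along columns (y).

    Assumes each image dimension is at least as long as the kernels.
    """
    tmp = np.apply_along_axis(lambda r: np.convolve(r, kx, mode="same"), 1, img)
    return np.apply_along_axis(lambda c: np.convolve(c, ky, mode="same"), 0, tmp)


def harris_response(img, sigma_d=1.0, sigma_i=2.0, alpha=0.06):
    """Harris measure R = det(M) - alpha * trace(M)^2 at every pixel."""
    g, dg = gaussian_1d(sigma_d)
    ix = sep_filter(img, dg, g)  # horizontal derivative of Gaussian
    iy = sep_filter(img, g, dg)  # vertical derivative of Gaussian
    gi, _ = gaussian_1d(sigma_i)
    # Entries of the second moment matrix, weighted by a Gaussian window.
    sxx = sep_filter(ix * ix, gi, gi)
    syy = sep_filter(iy * iy, gi, gi)
    sxy = sep_filter(ix * iy, gi, gi)
    return sxx * syy - sxy**2 - alpha * (sxx + syy) ** 2


def harris_corners(img, threshold=0.01, border=16, nms_radius=3):
    """Return (xs, ys) of thresholded local maxima of the Harris response."""
    r = harris_response(np.asarray(img, dtype=float))
    if r.max() > 0:
        r = r / r.max()  # threshold is relative to the strongest response
    h, w = r.shape
    xs, ys = [], []
    # Skip a band along the borders so that points near the image edges are discarded.
    for y in range(border, h - border):
        for x in range(border, w - border):
            v = r[y, x]
            if v < threshold:
                continue
            win = r[max(y - nms_radius, 0):y + nms_radius + 1,
                    max(x - nms_radius, 0):x + nms_radius + 1]
            if v >= win.max():  # keep only local maxima
                xs.append(x)
                ys.append(y)
    return np.array(xs, dtype=int), np.array(ys, dtype=int)
```

On a synthetic image containing a bright axis-aligned square, the detections should cluster at the square's four corners. Lowering `threshold` admits weaker corners, which is the effect varied in Figure 1.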

Local Feature Description

A SIFT descriptor is computed for each detected interest point.
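A simplified SIFT-like descriptor can be sketched as follows. It is an illustration under stated assumptions, not the project's implementation. It assumes a 16x16 window split into a 4x4 grid of cells, with an 8-bin histogram of gradient orientations (weighted by gradient magnitude) in each cell. The result is normalized, clipped at 0.2 and renormalized, following Lowe's SIFT. For brevity the sketch omits Gaussian weighting, interpolation between bins and rotation to a dominant orientation. The function name `sift_like_descriptors` is hypothetical.

```python
import numpy as np


def sift_like_descriptors(img, xs, ys, width=16):
    """Simplified SIFT-like descriptors: 4x4 cells x 8 orientation bins = 128-D.

    Assumes every (x, y) lies at least width // 2 pixels inside the image
    and that width is divisible by 4.
    """
    img = np.asarray(img, dtype=float)
    gy, gx = np.gradient(img)  # np.gradient returns d/drow, then d/dcol
    mag = np.hypot(gx, gy)
    ori = np.arctan2(gy, gx)  # gradient orientation in [-pi, pi]
    half, cell = width // 2, width // 4
    rows, cols = np.indices((width, width))
    feats = []
    for x, y in zip(xs, ys):
        pm = mag[y - half:y + half, x - half:x + half]
        po = ori[y - half:y + half, x - half:x + half]
        # Quantize orientation into 8 bins of 45 degrees each.
        bins = ((po + np.pi) / (2 * np.pi) * 8).astype(int) % 8
        desc = np.zeros((4, 4, 8))
        # Accumulate gradient magnitude into (cell row, cell col, bin).
        np.add.at(desc, (rows // cell, cols // cell, bins), pm)
        desc = desc.ravel()
        n = np.linalg.norm(desc)
        if n > 0:
            desc = desc / n
            desc = np.minimum(desc, 0.2)  # damp large gradients, as in Lowe's SIFT
            desc = desc / np.linalg.norm(desc)
        feats.append(desc)
    return np.array(feats)
```

Each row of the result is a unit-length 128-dimensional vector. These vectors can then be compared with Euclidean distance during matching.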

Feature Matching

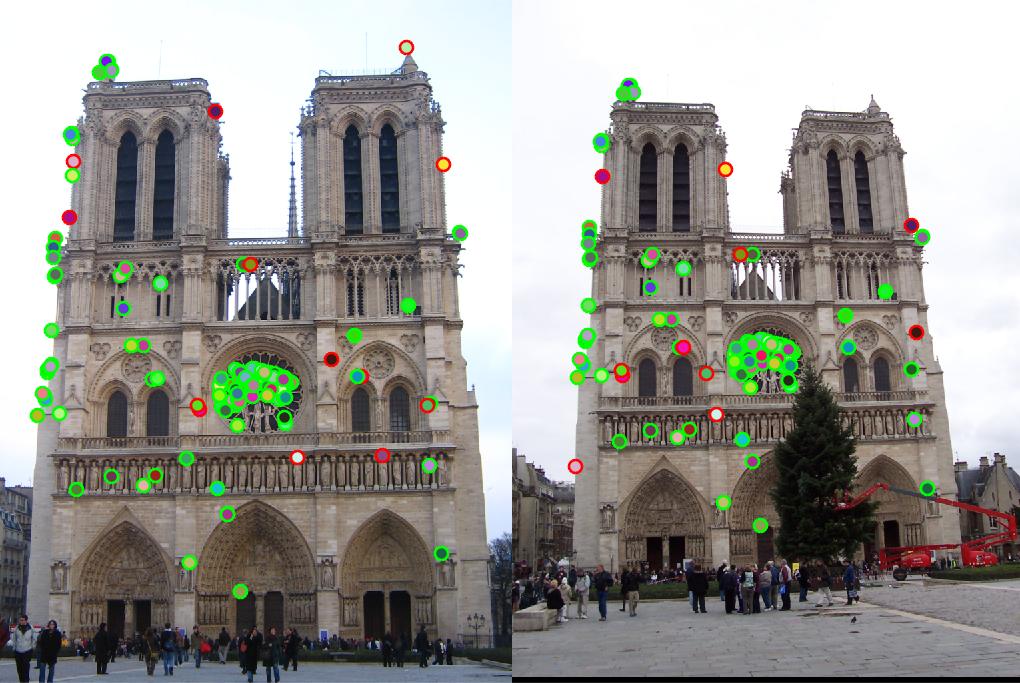

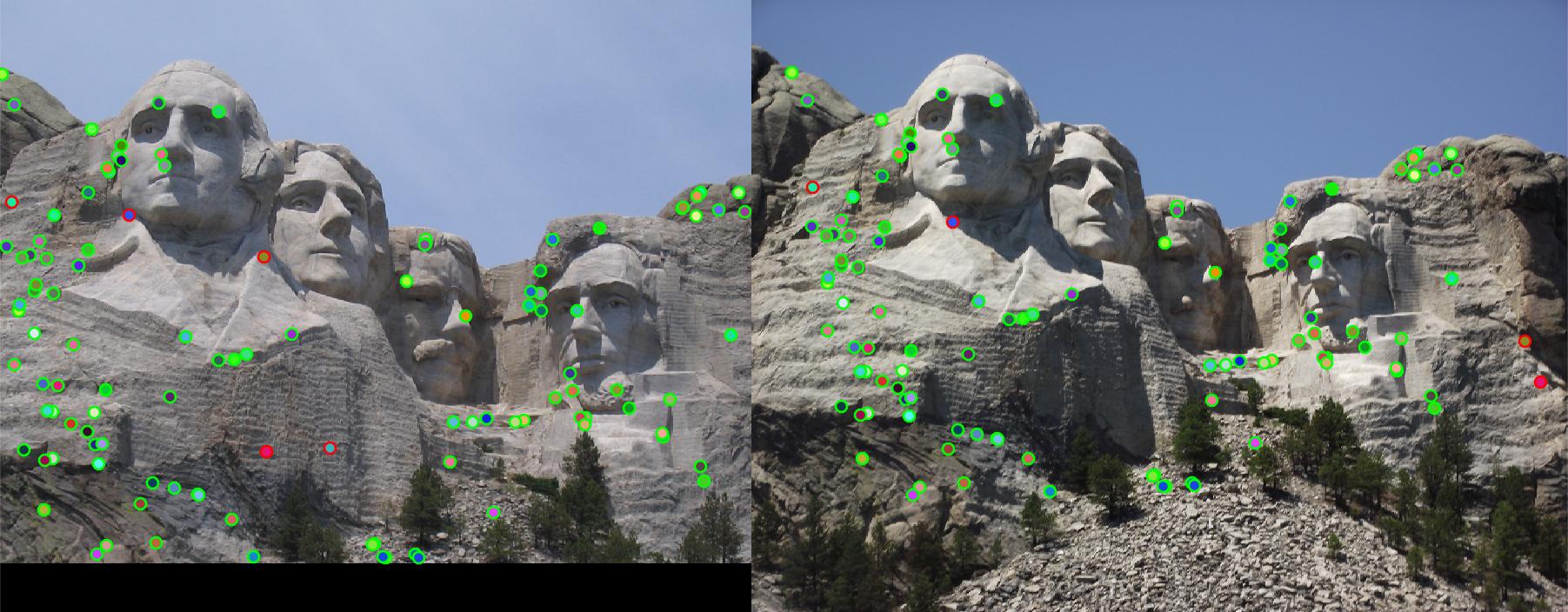

A ratio test was used to find matching features between two images of the same object. Features are compared through the Euclidean distance between their descriptors: the shorter the distance, the better the match. For each feature, the distance to its nearest neighbor is divided by the distance to its second-nearest neighbor, and a match is accepted only when this ratio falls below a threshold. If the threshold is too high, there will be more false positives; if it is too low, there will be more false negatives. Figures 2 and 3 show image pairs with successful feature matching. Figure 4 shows a case where the algorithm does not produce successful matches. The poor performance on this final pair occurs because the algorithm is not scale-invariant, only orientation-invariant. Extensions of, or alternatives to, the implemented interest point detector could perform better in such cases.
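The nearest-neighbor distance ratio test (Lowe's ratio test) can be sketched as follows. This is a minimal brute-force version, not the project's code. It assumes descriptors are stored as rows of NumPy arrays and that the second image has at least two features. The default threshold of 0.8 is an assumption taken from Lowe's SIFT paper, not the value used for the results below. The name `match_features` is hypothetical.

```python
import numpy as np


def match_features(f1, f2, ratio=0.8):
    """Match rows of f1 to rows of f2 with the nearest-neighbor ratio test.

    Returns (matches, ratios): matches is a (k, 2) array of index pairs
    (i into f1, j into f2), sorted so that the most confident
    (lowest-ratio) matches come first.
    """
    # Pairwise Euclidean distances, shape (len(f1), len(f2)).
    d = np.linalg.norm(f1[:, None, :] - f2[None, :, :], axis=2)
    order = np.argsort(d, axis=1)
    rows = np.arange(len(f1))
    nn1 = d[rows, order[:, 0]]  # distance to nearest neighbor
    nn2 = d[rows, order[:, 1]]  # distance to second-nearest neighbor
    ratios = nn1 / np.maximum(nn2, 1e-12)  # guard against division by zero
    keep = ratios < ratio
    matches = np.stack([rows[keep], order[keep, 0]], axis=1)
    conf = ratios[keep]
    best_first = np.argsort(conf)
    return matches[best_first], conf[best_first]
```

A feature that lies about equally far from two candidates gets a ratio near 1 and is rejected. This is the ambiguity that a plain distance threshold cannot detect. The brute-force distance matrix uses memory proportional to `len(f1) * len(f2) * dim`, which is acceptable for a few thousand features.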

Notre Dame

Fig. 2: Accuracy: 91%, 128 correct matches, 13 incorrect matches.

Mount Rushmore

Fig. 3: Accuracy: 96%, 108 correct matches, 5 incorrect matches.

Episcopal Gaudi

Fig. 4: Accuracy: 75%, 3 correct matches, 1 incorrect match.