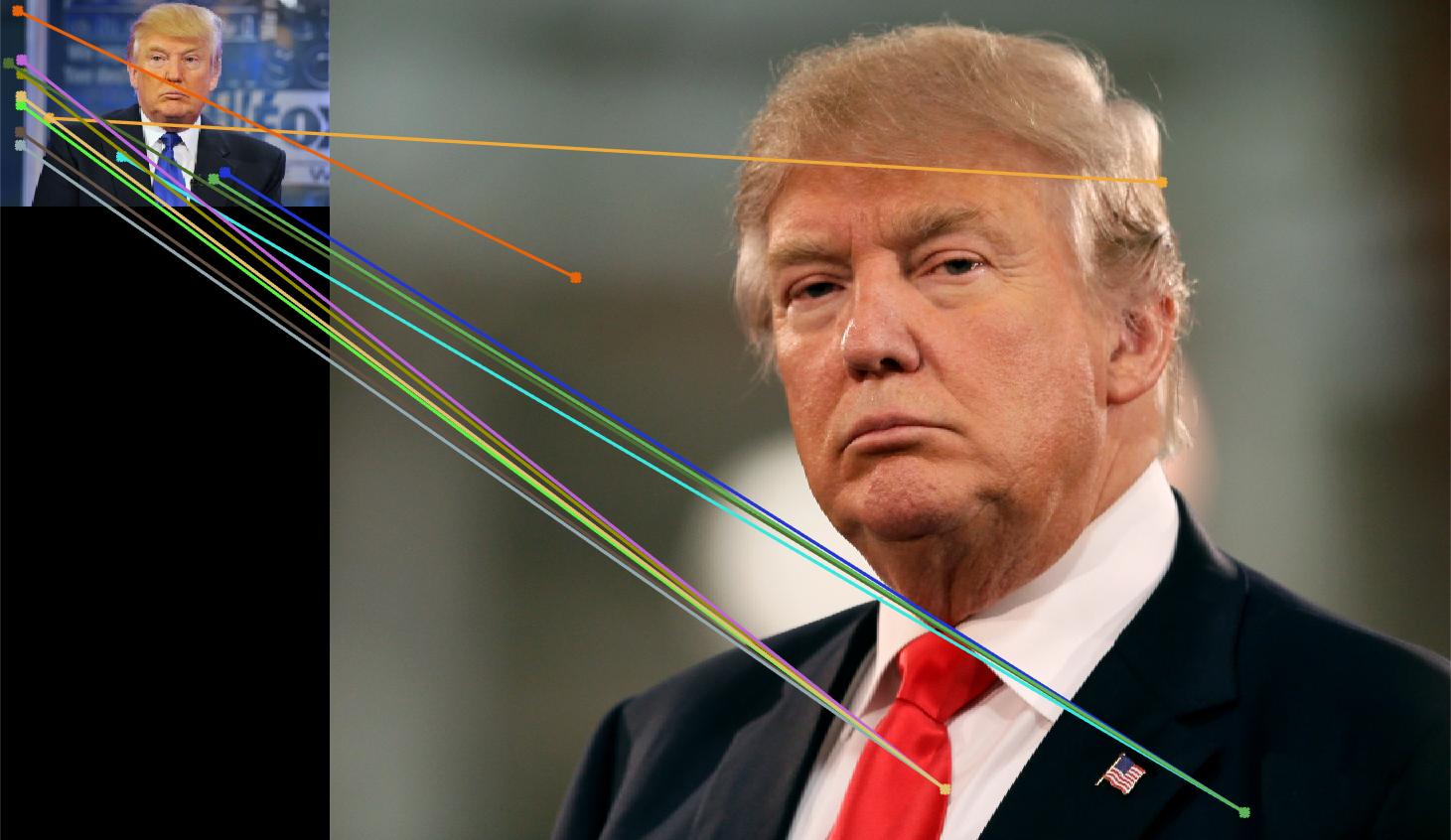

Notre Dame, 84 good matches and 17 bad ones.

Pipeline Description

In the first step of the pipeline, we identify interest points: locations that can be found reliably in different images of the same scene. Ideally, these points can still be picked out when the scene is viewed from a different angle, at a different orientation, or under different lighting. We use the Harris corner detector to find them. It smooths the products of the image gradients with a Gaussian, and then uses the eigenvalues of the resulting second-moment matrix at each pixel to decide whether that pixel is a corner.

Next, we compute features using SIFT. It takes the window around each interest point and produces 8 differently oriented versions of it (in my implementation, by filtering with a Sobel filter rotated in 45-degree steps). We then go through small 4x4 patches of each oriented window and calculate one value that is representative of that patch.

Finally comes the feature matching stage. I used Euclidean distance to compare prospective feature matches. With a threshold of 0.67, I got an accuracy of 83% on Notre Dame.
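The matching stage can be sketched in a few lines. This is only an illustration under stated assumptions, not the code used for the results: the report does not say how the 0.67 threshold is applied, so the sketch assumes it is a nearest-neighbor distance ratio (distance to the best match divided by distance to the second-best match). The variable names and the toy 2-D descriptors are made up for the example.

```matlab
% Toy descriptors, one per row (hypothetical data; real ones would be longer)
features1 = [0 0; 10 10; 5 5; 15 15];
features2 = [0.1 0; 9.8 10.1; 20 20; 5 6];

% ASSUMPTION: 0.67 is used as a nearest-neighbor distance ratio threshold
ratioThreshold = 0.67;

% Pairwise Euclidean distances: rows index features1, columns index features2
% (implicit expansion below needs MATLAB R2016b or newer)
sqDist = sum(features1.^2, 2) + sum(features2.^2, 2)' - 2 * (features1 * features2');
dists = sqrt(max(sqDist, 0));   % clamp tiny negative values from round-off

% For each feature in features1, find its two closest features in features2
[sortedDists, sortedIdx] = sort(dists, 2);
ratios = sortedDists(:, 1) ./ sortedDists(:, 2);

% Keep a match only if the best candidate is clearly closer than the runner-up
keep = ratios < ratioThreshold;
matches = [find(keep), sortedIdx(keep, 1)];   % [index in features1, index in features2]
confidences = 1 - ratios(keep);               % higher means less ambiguous
```

In this toy data, the first three descriptors each have one clearly closest partner and are kept, while the fourth sits almost equally far from two candidates and is rejected as ambiguous.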

Bells and Whistles

I implemented adaptive non-maximum suppression (ANMS), and it helped dramatically. ANMS keeps interest points that are local maxima of the corner response, the ones that "stand out", so to speak. Without it, there were far too many interest points packed into a small radius, and they didn't provide much information about the image as a whole. It's adaptive because the threshold changes with respect to the number of interest points found. I tuned it to return between 1250 and 1700 interest points.
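For reference, here is a minimal sketch of one common formulation of ANMS, in which each point gets a suppression radius (the distance to the nearest clearly stronger point) and the points with the largest radii are kept. It may differ from the implementation used for this project, whose details are not given above. The coordinates, responses, `numToKeep`, and the `robustness` factor of 0.9 are all assumptions chosen for the example.

```matlab
% Toy interest points (hypothetical): three crowded near (10,10), two isolated
x = [10 11 12 50 80]';
y = [10 10 11 50 20]';
response = [0.9 0.8 0.7 0.5 0.6]';   % corner strength of each point

numToKeep = 3;      % toy value; the report targets 1250-1700 points per image
robustness = 0.9;   % assumed factor: a neighbor must be clearly stronger to suppress

n = numel(x);
radius = inf(n, 1);   % the globally strongest point keeps an infinite radius
for i = 1:n
    % Points whose scaled response still beats point i
    stronger = robustness * response > response(i);
    stronger(i) = false;
    if any(stronger)
        d2 = (x(stronger) - x(i)).^2 + (y(stronger) - y(i)).^2;
        radius(i) = sqrt(min(d2));   % distance to the nearest stronger point
    end
end

% Keep the points with the largest suppression radii
[~, order] = sort(radius, 'descend');
keepIdx = sort(order(1:min(numToKeep, n)));
keptX = x(keepIdx);
keptY = y(keepIdx);
```

With these toy values, only the strongest point of the crowded cluster survives, along with the two isolated points, which is the thinning effect described above.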

Image and Filter Rotations

I hadn't used MATLAB cell arrays before this project, and they turned out to be a neat way to organize all of my rotations.

```matlab
% Rotate the Sobel filter into 8 orientations, 45 degrees apart
sobel = [-1 0 1; -2 0 2; -1 0 1];
sobelCell = cell(1, 8);
sobelCell{1} = sobel;
for i = 2:8
    sobelCell{i} = imrotate(sobel, (i - 1) * 45, 'bilinear');
end

% Take the image gradient for each of the 8 filter orientations,
% smoothing with a Gaussian before and after filtering.
% Note: "image" is the input image here (the name shadows MATLAB's built-in).
origGaussImg = imgaussfilt(image);
imageCell = cell(1, 8);
for i = 1:8
    imageCell{i} = imgaussfilt(imfilter(origGaussImg, sobelCell{i}));
end
image = imageCell{1};
```
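To connect the rotations above to the 4x4 patches mentioned in the pipeline description, here is a rough sketch of how 8 oriented responses could be pooled into a SIFT-like descriptor. It is not the code behind the results. The 16x16 window, the 4x4-pixel cells, summing the positive responses as the "representative value", and the final normalization are all assumptions, and random data stands in for `imageCell` so the snippet runs on its own.

```matlab
% Stand-in for the 8 oriented gradient images (random data, for illustration)
rng(0);
imageCell = cell(1, 8);
for k = 1:8
    imageCell{k} = rand(64, 64);
end

x = 30; y = 25;            % hypothetical interest point (column, row)
featureWidth = 16;         % ASSUMPTION: 16x16 window around the point
cellWidth = featureWidth / 4;

rows = (y - featureWidth/2) : (y + featureWidth/2 - 1);
cols = (x - featureWidth/2) : (x + featureWidth/2 - 1);

descriptor = zeros(4, 4, 8);
for k = 1:8
    % ASSUMPTION: only positive responses count toward each orientation
    window = max(imageCell{k}(rows, cols), 0);
    for r = 1:4
        for c = 1:4
            rr = (r - 1) * cellWidth + (1:cellWidth);
            cc = (c - 1) * cellWidth + (1:cellWidth);
            % One representative value per patch: the summed response
            descriptor(r, c, k) = sum(sum(window(rr, cc)));
        end
    end
end

% Flatten to a 1x128 vector and normalize to unit length
descriptor = descriptor(:)';
descriptor = descriptor / max(norm(descriptor), eps);
```

The result is a 4 x 4 x 8 = 128-element vector per interest point, which is the size a standard SIFT descriptor has.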

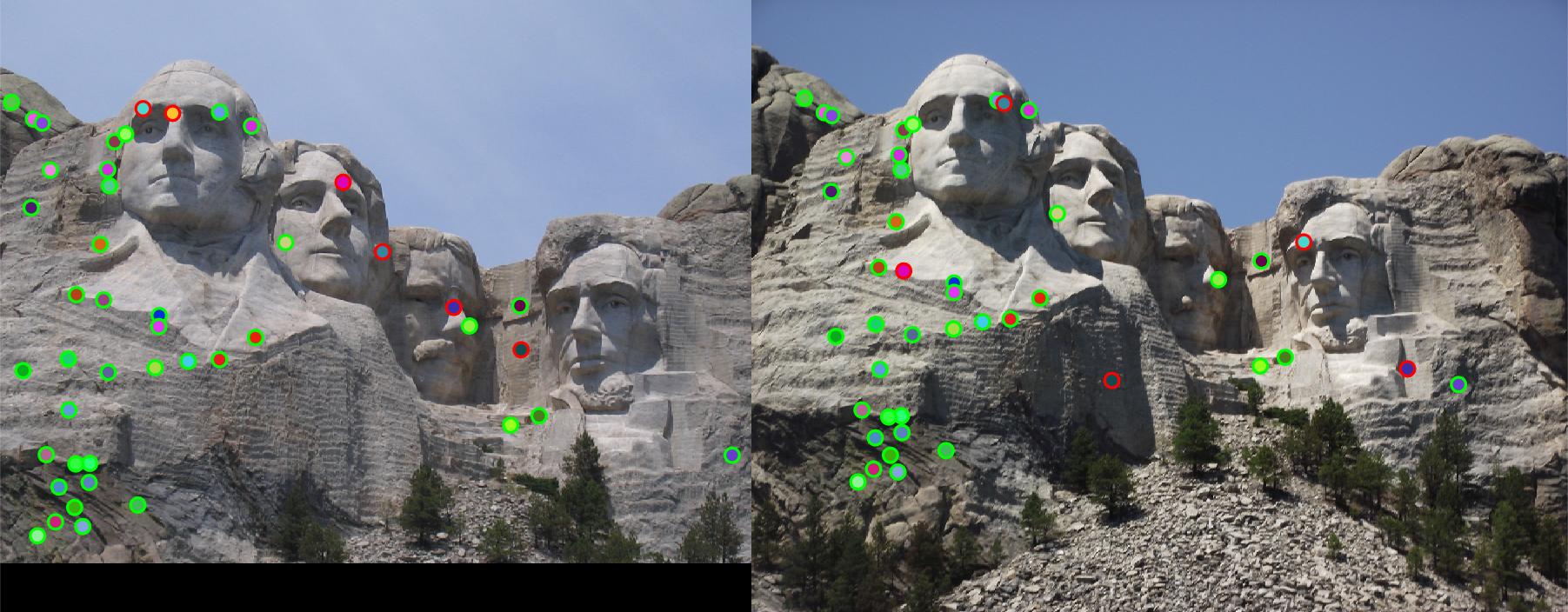

Results

Mt. Rushmore, 40 good matches and 6 bad ones.

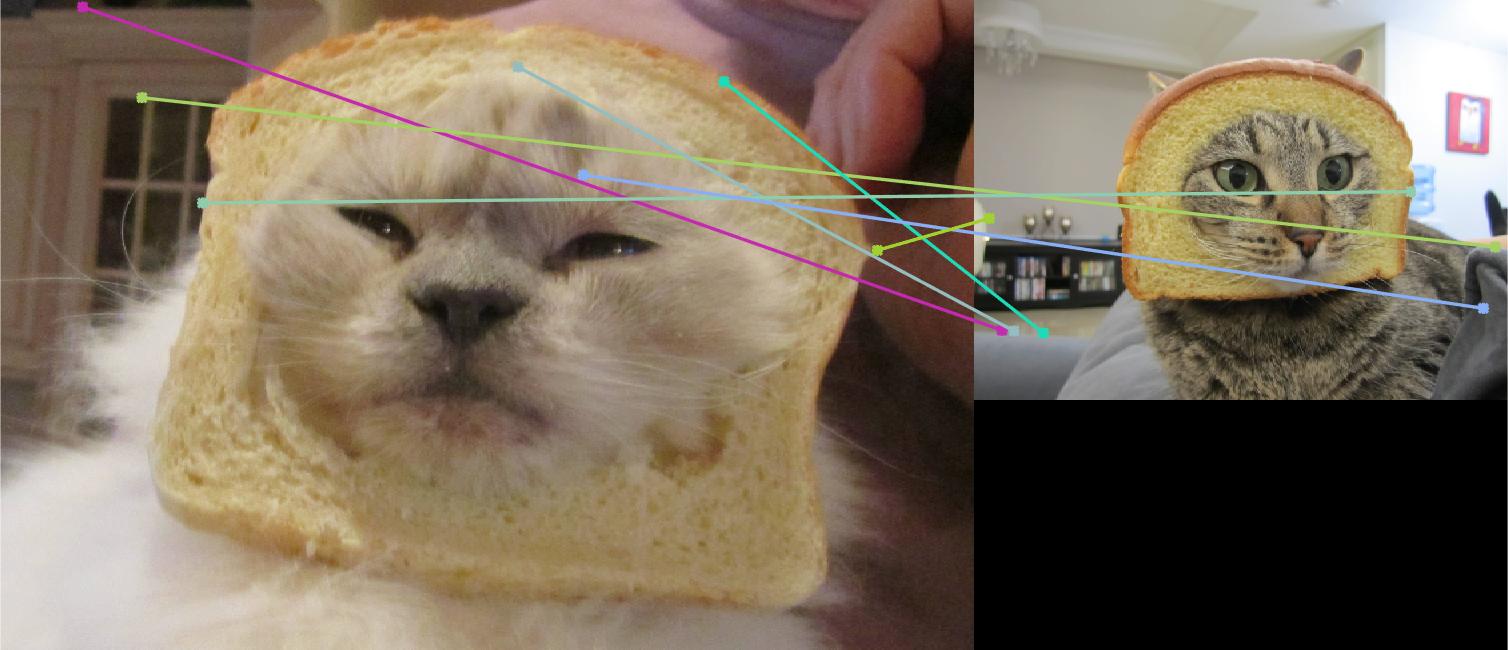

The performance on breadcat was rather... clawful.

The wildly differing backgrounds made this quite tricky.