Project 2: Local Feature Matching

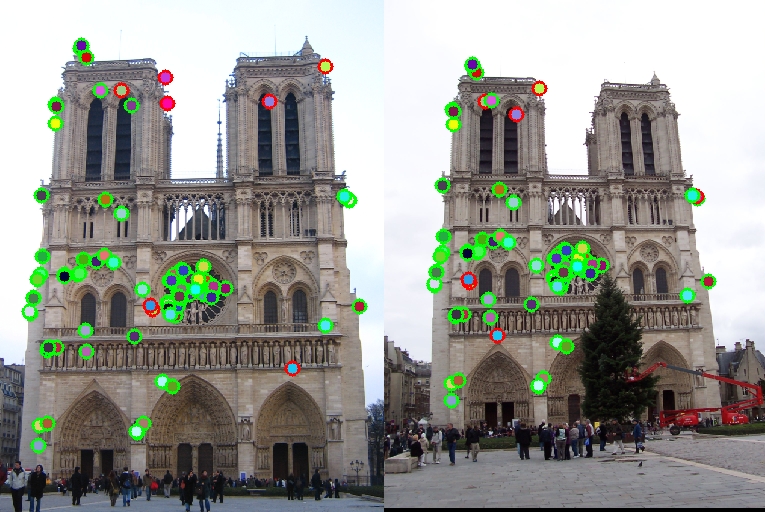

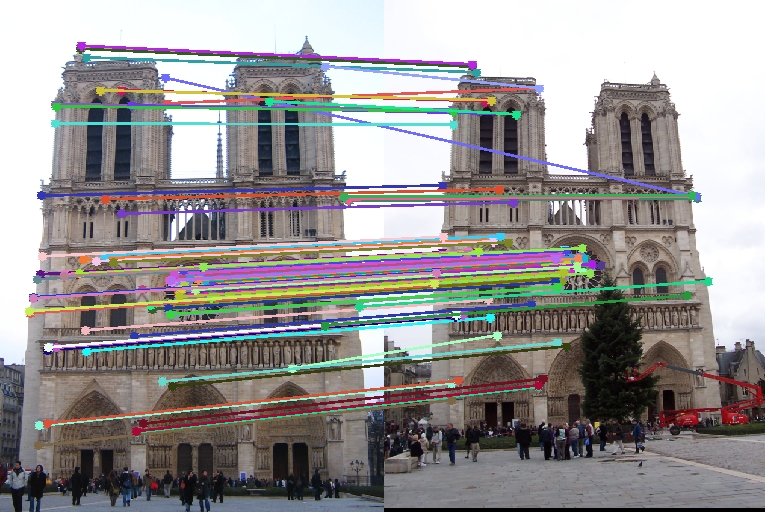

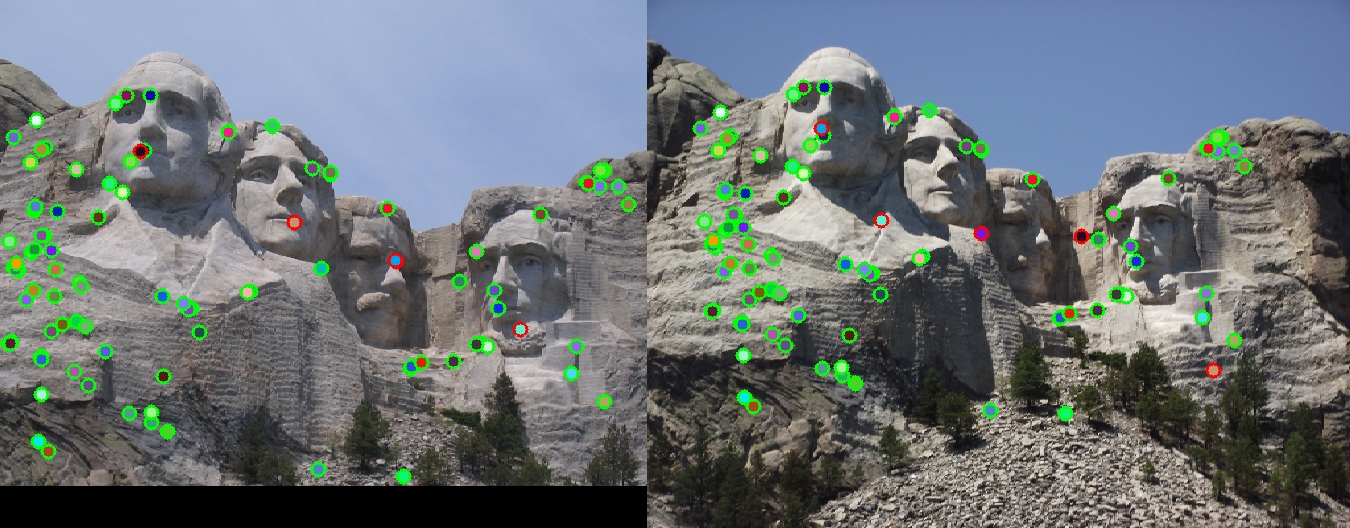

The top 100 most confident local feature matches from a baseline implementation of Project 2. In this case, 92 were correct (highlighted in green) and 8 were incorrect (highlighted in red).

The aim of this project is to create a local feature matching algorithm using the techniques described in Section 4.1 of Szeliski's book. A simplified version of the well-known SIFT pipeline is implemented for feature extraction. The matching pipeline is intended for instance-level matching, i.e., multiple views of the same physical scene. The three main steps are:

- Detecting interest points with the Harris corner detector

- Extracting a feature descriptor around each interest point

- Matching the features between the two images

Interest Point Detection

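The Harris corner detector was used to detect the interest points. The overall algorithm is:

- Compute the horizontal and vertical image derivatives, $I_x$ and $I_y$.

- Compute the Harris corner score $R = \det(M) - \alpha\,\operatorname{trace}(M)^2$, where $M$ is the structure tensor (second-moment matrix) of the derivatives accumulated over a window around each pixel.

- Suppress the features that are close to the image borders.

- Find the connected components of the thresholded score map using MATLAB's bwconncomp.

- Keep the local maximum of each component as an interest point.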
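The detection steps can be sketched in Python with NumPy as follows. This is a minimal sketch under stated assumptions, not the original MATLAB code: the function names, the 5x5 box window used to accumulate the structure tensor, the 8-pixel border, and the threshold of 1% of the maximum score are all hypothetical choices. A small flood fill with 8-connectivity (the 2-D default of bwconncomp) stands in for bwconncomp.

```python
import numpy as np


def sobel_gradients(img):
    """Horizontal and vertical Sobel derivatives; the 1-pixel border is left at 0."""
    ix = np.zeros_like(img)
    iy = np.zeros_like(img)
    # Correlation with [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
    ix[1:-1, 1:-1] = (img[:-2, 2:] + 2 * img[1:-1, 2:] + img[2:, 2:]) - (
        img[:-2, :-2] + 2 * img[1:-1, :-2] + img[2:, :-2]
    )
    # Correlation with the transposed kernel
    iy[1:-1, 1:-1] = (img[2:, :-2] + 2 * img[2:, 1:-1] + img[2:, 2:]) - (
        img[:-2, :-2] + 2 * img[:-2, 1:-1] + img[:-2, 2:]
    )
    return ix, iy


def box_sum(a, r):
    """Sum of `a` over a (2r+1)x(2r+1) window around each pixel (zero padded)."""
    c = np.pad(np.pad(a, r).cumsum(0).cumsum(1), ((1, 0), (1, 0)))
    k = 2 * r + 1
    return c[k:, k:] - c[:-k, k:] - c[k:, :-k] + c[:-k, :-k]


def harris_interest_points(img, alpha=0.06, radius=2, border=8, rel_thresh=0.01):
    """Hypothetical helper: one (row, col) Harris maximum per connected component."""
    img = np.asarray(img, dtype=float)
    ix, iy = sobel_gradients(img)
    # Structure tensor entries summed over a box window (assumption)
    sxx = box_sum(ix * ix, radius)
    syy = box_sum(iy * iy, radius)
    sxy = box_sum(ix * iy, radius)
    # Harris score: R = det(M) - alpha * trace(M)^2
    r = sxx * syy - sxy ** 2 - alpha * (sxx + syy) ** 2
    # Suppress responses near the image borders
    h, w = r.shape
    r[:border, :] = 0
    r[h - border:, :] = 0
    r[:, :border] = 0
    r[:, w - border:] = 0
    if r.max() <= 0:
        return []
    mask = r > rel_thresh * r.max()
    # 8-connected components of the thresholded map; keep each component's maximum
    seen = np.zeros(r.shape, dtype=bool)
    points = []
    for y0, x0 in zip(*np.nonzero(mask)):
        if seen[y0, x0]:
            continue
        seen[y0, x0] = True
        stack, best = [(y0, x0)], (y0, x0)
        while stack:
            y, x = stack.pop()
            if r[y, x] > r[best]:
                best = (y, x)
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        stack.append((ny, nx))
        points.append((int(best[0]), int(best[1])))
    return points
```

On a synthetic image containing a single bright square, this sketch returns one point near each of the four corners: straight edges give a negative score and flat regions give zero, so only the corners survive the threshold.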

Observation

In my code, blurring the images with a Gaussian filter reduced the accuracy of the matches, so a simple Sobel filter was used to compute the derivatives instead.

A value of 0.06 for alpha, the Harris sensitivity parameter, worked best.

Feature Extraction

A feature descriptor is constructed around each detected interest point so that the point can be identified as distinctively as possible. For this, a basic SIFT-like descriptor is used: a window is placed around each detected keypoint, and the gradient magnitude and direction are computed for every pixel in it.

The window is subdivided into cells of 4x4 pixels each, and a histogram of gradient orientations is computed for each cell. The histogram uses bins that are 45 degrees wide, spanning -π to π (8 bins in total). The dominant direction is found, and the descriptor is formed by accumulating the gradient magnitudes into these bins across all cells.
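The cell-and-histogram construction can be sketched in Python with NumPy as below. This is a sketch under assumptions, not the original code. It assumes a 16x16 window split into 4x4-pixel cells with 8 orientation bins, which gives 128 values. It takes precomputed gradient magnitude and orientation arrays as input and omits the dominant-orientation step. The function name and parameters are hypothetical, and keypoints are assumed to lie far enough from the border for the full window to fit.

```python
import numpy as np


def sift_like_descriptor(mag, ang, y, x, width=16, cell=4, n_bins=8):
    """Hypothetical helper: simplified SIFT-like descriptor at keypoint (y, x).

    mag, ang: per-pixel gradient magnitude and orientation (radians in [-pi, pi]).
    The width x width window is split into cell x cell pixel cells; each cell
    contributes an n_bins orientation histogram weighted by gradient magnitude.
    """
    half = width // 2
    win_mag = mag[y - half:y + half, x - half:x + half]
    win_ang = ang[y - half:y + half, x - half:x + half]
    # Map angles in [-pi, pi] to bins 0..n_bins-1 (45-degree bins when n_bins=8)
    bins = np.floor((win_ang + np.pi) / (2 * np.pi / n_bins)).astype(int)
    bins = np.clip(bins, 0, n_bins - 1)  # an angle of exactly pi goes to the last bin
    hists = []
    for cy in range(0, width, cell):
        for cx in range(0, width, cell):
            b = bins[cy:cy + cell, cx:cx + cell].ravel()
            m = win_mag[cy:cy + cell, cx:cx + cell].ravel()
            # Magnitude-weighted orientation histogram for this cell
            hists.append(np.bincount(b, weights=m, minlength=n_bins))
    return np.concatenate(hists)
```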

The descriptor is normalised, and each element is then raised to a power less than 1 (0.8 in my case).
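The normalisation and power step can be sketched as follows. L2 normalisation is an assumption, since the text does not say which norm was used. The function name and the small `eps` guard against division by zero are also hypothetical.

```python
import numpy as np


def normalize_descriptor(desc, power=0.8, eps=1e-12):
    """Hypothetical helper: L2-normalise a descriptor, then raise each element to `power`."""
    desc = np.asarray(desc, dtype=float)
    # Unit-length vector (assumed L2 norm); eps avoids dividing by zero
    desc = desc / (np.linalg.norm(desc) + eps)
    # Elements are non-negative histogram values, so a fractional power is safe
    return desc ** power
```

For example, [3, 4] normalises to [0.6, 0.8] and becomes roughly [0.66, 0.84] after raising to 0.8. The ratio between the two entries moves from 0.75 to about 0.79, which reduces the influence of a few dominant gradients on the descriptor.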

Feature Matching

This part of the code matches the extracted features and estimates the confidence of each match. Correspondences between the two images are found with a distance ratio test: for each feature, the ratio of the Euclidean distance to its nearest neighbour in the other image to the distance to its second-nearest neighbour is computed. A threshold of 0.8 is applied to this ratio, and the matches are sorted by confidence so that the top 100 are returned to the calling program.
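The ratio test can be sketched in Python with NumPy as below. This is a brute-force sketch that assumes at least two features in the second image. The function name, the use of 1 - ratio as the confidence score, and the return format are hypothetical choices.

```python
import numpy as np


def match_features(f1, f2, ratio_thresh=0.8, max_matches=100):
    """Hypothetical helper: nearest-neighbour distance ratio matching.

    f1: (N1, D) and f2: (N2, D) descriptors, with N2 >= 2.
    Returns (matches, confidences); matches is a (K, 2) array of (i, j) pairs.
    """
    f1 = np.asarray(f1, dtype=float)
    f2 = np.asarray(f2, dtype=float)
    # Pairwise Euclidean distances via |a|^2 + |b|^2 - 2ab, shape (N1, N2)
    d2 = (f1 ** 2).sum(1)[:, None] + (f2 ** 2).sum(1)[None, :] - 2 * f1 @ f2.T
    dists = np.sqrt(np.maximum(d2, 0.0))
    order = np.argsort(dists, axis=1)
    rows = np.arange(len(f1))
    nn1 = dists[rows, order[:, 0]]  # nearest-neighbour distance
    nn2 = dists[rows, order[:, 1]]  # second-nearest distance
    ratio = nn1 / np.maximum(nn2, 1e-12)
    keep = ratio < ratio_thresh
    matches = np.stack([rows[keep], order[keep, 0]], axis=1)
    conf = 1.0 - ratio[keep]  # a lower ratio means a more distinctive match
    best = np.argsort(-conf)[:max_matches]
    return matches[best], conf[best]
```

A feature that lies equally far from two candidates gets a ratio near 1 and is rejected, which is the point of the test: ambiguous matches are dropped even when their absolute distance is small.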

Results

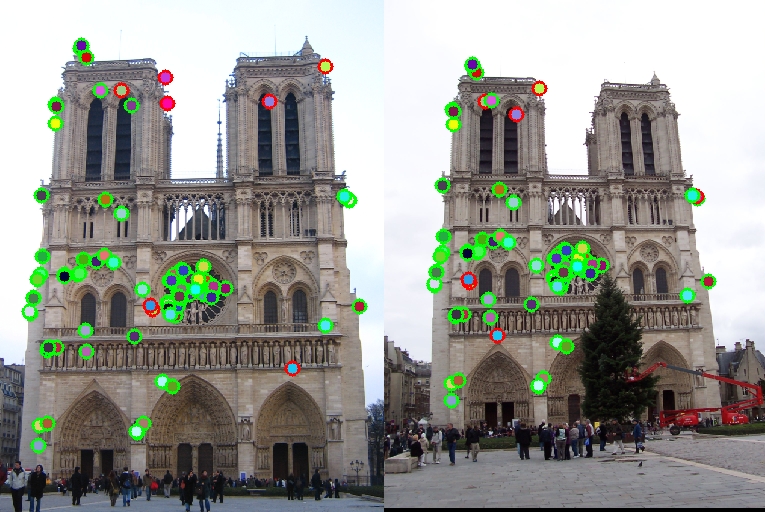

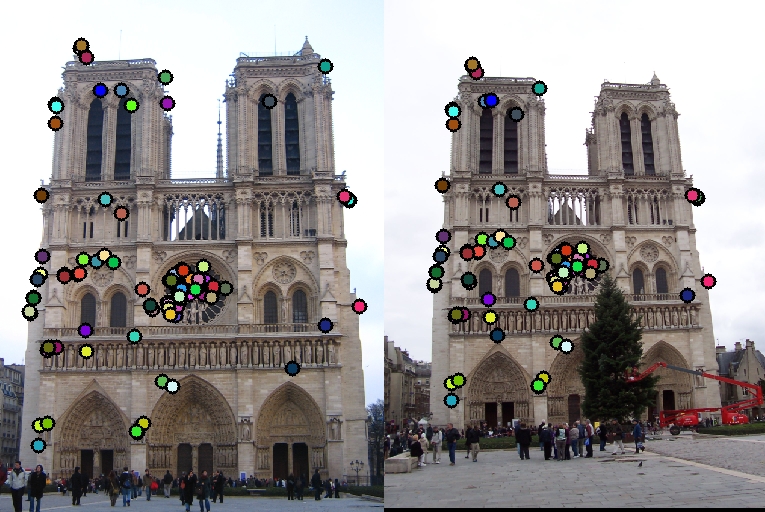

Image Pair 1: Notre Dame

For the Notre Dame image pair, an accuracy of 92% was achieved (92 good matches and 8 bad matches among the first 100 matches).


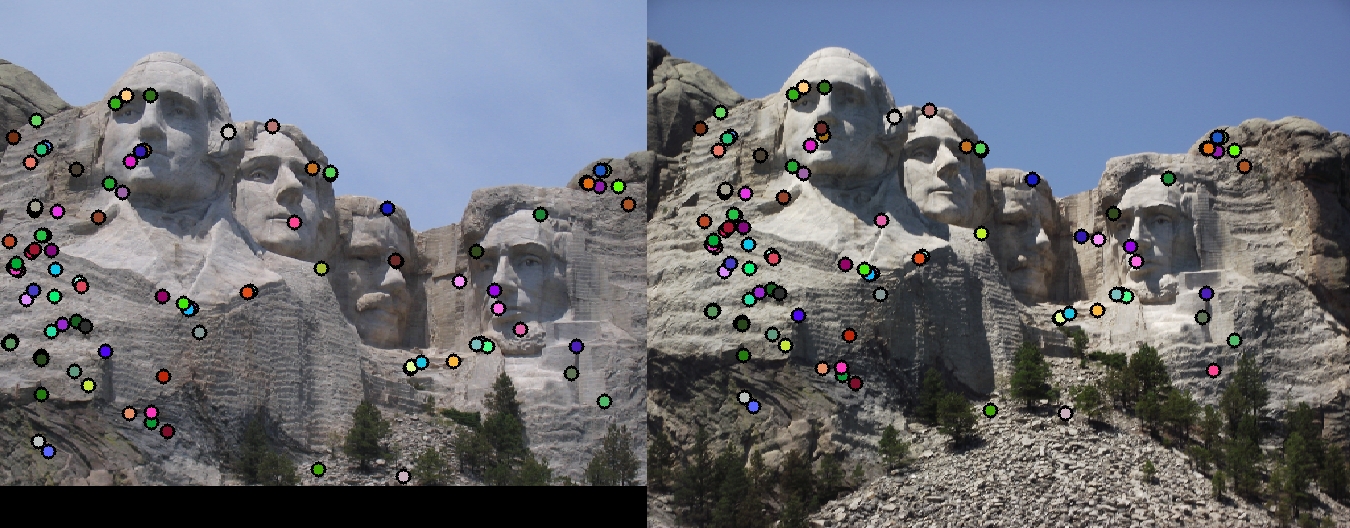

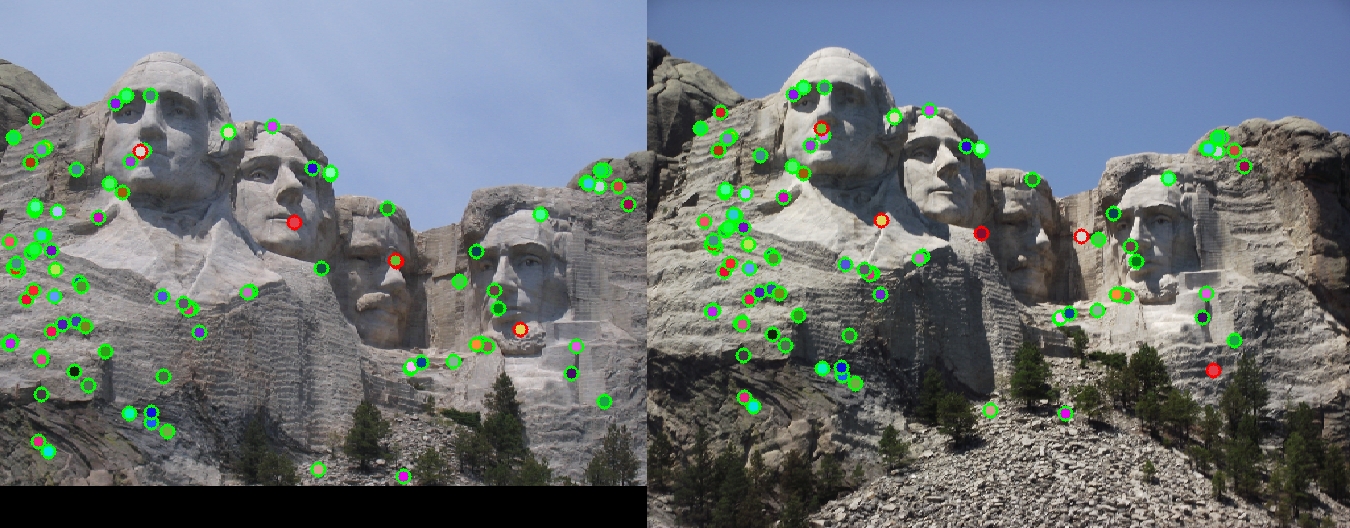

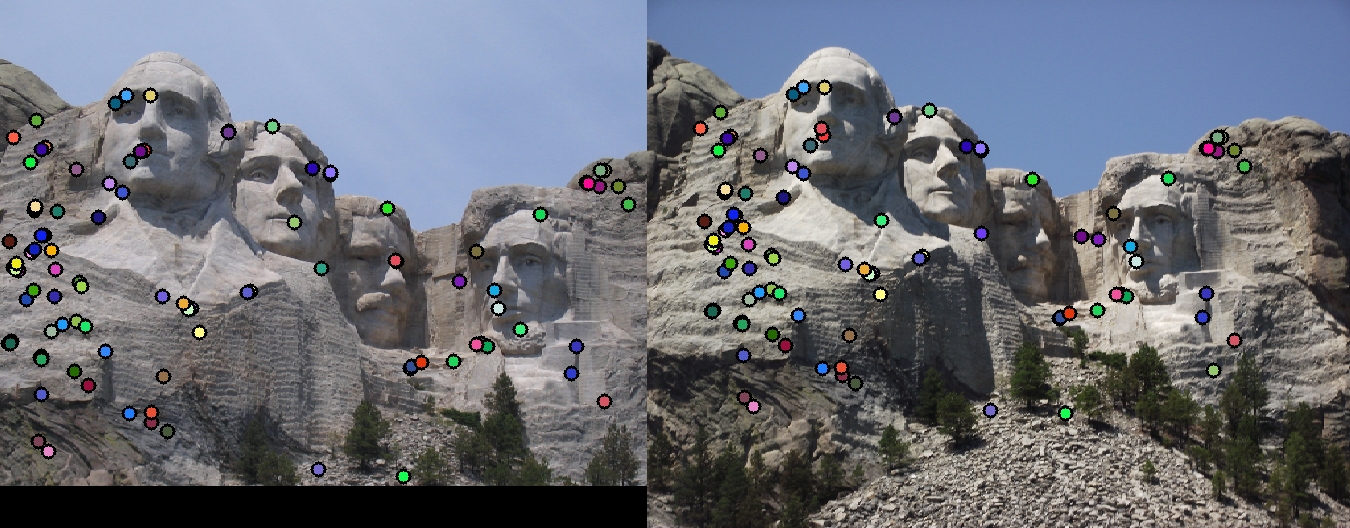

Image Pair 2: Mount Rushmore

Feature matching for the Mount Rushmore image pair was 95% accurate (95 good matches and 5 bad matches among the first 100 matches).


Image Pair 3: Episcopal Gaudi

Feature matching for this image pair was considerably harder, and it resulted in an accuracy of 29% (4 good matches and 10 bad matches).
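Unlike the first two pairs, only 14 matches are counted here. Assuming accuracy is the fraction of correct matches among those returned, the figure works out as:

$$\text{accuracy} = \frac{\text{good}}{\text{good} + \text{bad}} = \frac{4}{4 + 10} = \frac{4}{14} \approx 28.6\% \approx 29\%$$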
