Project 2: Local Feature Matching

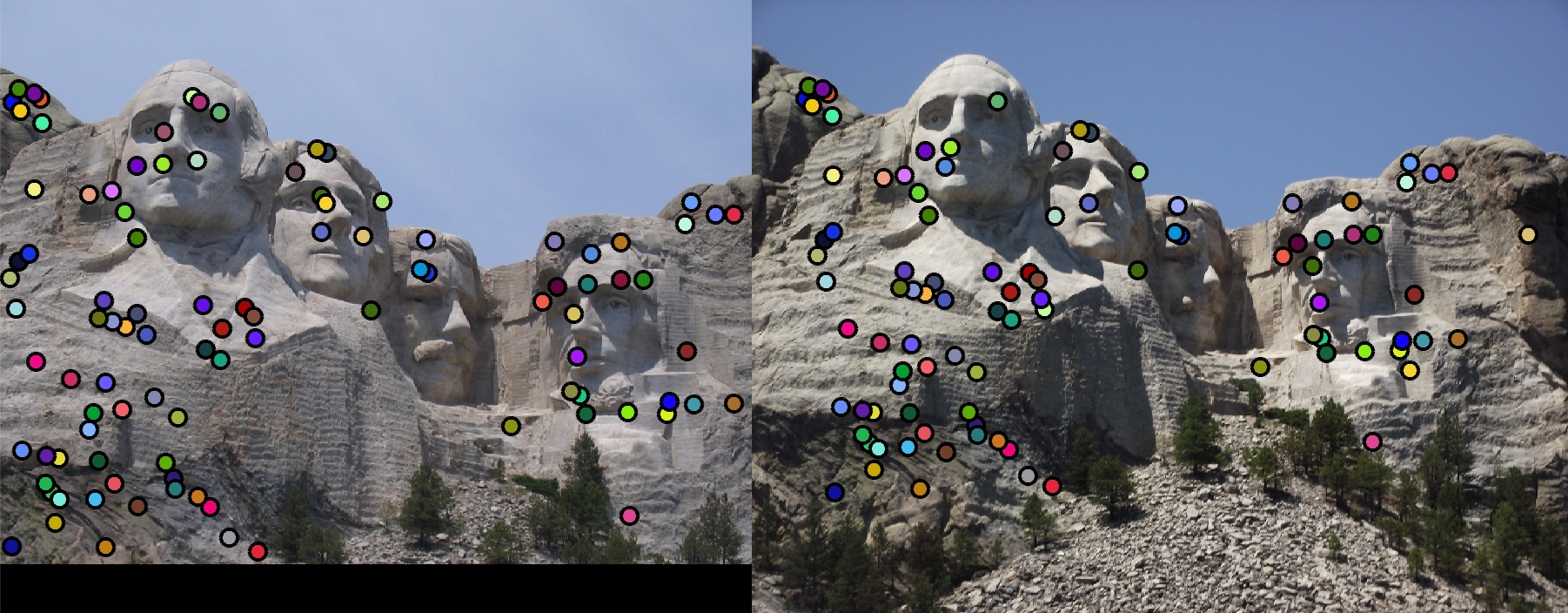

For get_interest_points.m, I implemented the basic Harris Corner Algorithm.

- I convolved the image with the derivative of a small Gaussian, which computes the image gradients while suppressing noise. Next, I zeroed out the image borders, as instructed.

- I calculated the Harris corner response at every pixel as per the formula. I then found the pixels whose response was the maximum in their neighborhood using colfilt().

- Then I returned the keypoints that were the maximum in their neighborhood and were greater than a particular threshold.
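The steps above can be sketched roughly as follows. This is a minimal NumPy illustration, not the code in get_interest_points.m. The function names, the values of `sigma_d`, `sigma_i`, `alpha`, `border`, and `nms_radius`, and the standard response formula `det(M) - alpha * trace(M)^2` are all assumptions. The threshold here is the mean of the positive part of the response divided by `thresh_div`, which is a variation on the mean-based threshold described in this write-up, chosen so that the sketch behaves on images where negative edge responses dominate.

```python
import numpy as np

def gaussian_1d(sigma):
    """Return sample positions and a normalized 1-D Gaussian kernel."""
    radius = int(3 * sigma + 0.5)
    x = np.arange(-radius, radius + 1, dtype=float)
    g = np.exp(-x**2 / (2 * sigma**2))
    return x, g / g.sum()

def filter_separable(img, k_y, k_x):
    """Convolve every row with k_x, then every column with k_y (zero padding)."""
    tmp = np.apply_along_axis(lambda v: np.convolve(v, k_x, mode="same"), 1, img)
    return np.apply_along_axis(lambda v: np.convolve(v, k_y, mode="same"), 0, tmp)

def harris_keypoints(img, sigma_d=1.0, sigma_i=2.0, alpha=0.06,
                     border=8, nms_radius=2, thresh_div=6.0):
    """Hypothetical helper: returns (xs, ys, R) for a 2-D grayscale image."""
    img = np.asarray(img, dtype=float)

    # Gradients via derivative-of-Gaussian filters (smooths and differentiates).
    x, g = gaussian_1d(sigma_d)
    dg = -x / sigma_d**2 * g
    Ix = filter_separable(img, g, dg)
    Iy = filter_separable(img, dg, g)

    # Entries of the second-moment matrix, smoothed by a larger Gaussian.
    _, gi = gaussian_1d(sigma_i)
    Sxx = filter_separable(Ix * Ix, gi, gi)
    Syy = filter_separable(Iy * Iy, gi, gi)
    Sxy = filter_separable(Ix * Iy, gi, gi)

    # Harris response: det(M) - alpha * trace(M)^2 (alpha is an assumed value).
    R = Sxx * Syy - Sxy**2 - alpha * (Sxx + Syy)**2

    # Zero out the borders so no keypoint lands too close to the image edge.
    R[:border, :] = 0
    R[-border:, :] = 0
    R[:, :border] = 0
    R[:, -border:] = 0

    # Sliding-window maximum over a (2r+1) x (2r+1) neighborhood.
    h, w = R.shape
    padded = np.pad(R, nms_radius, mode="constant", constant_values=-np.inf)
    local_max = np.full(R.shape, -np.inf)
    for dy in range(2 * nms_radius + 1):
        for dx in range(2 * nms_radius + 1):
            local_max = np.maximum(local_max, padded[dy:dy + h, dx:dx + w])

    # Keep pixels that equal their neighborhood maximum and exceed the threshold.
    thresh = np.clip(R, 0, None).mean() / thresh_div
    ys, xs = np.nonzero((R == local_max) & (R > thresh))
    return xs, ys, R
```

Calling `harris_keypoints` on a synthetic image containing a single bright square should return keypoints clustered around its four corners, which is a quick sanity check before moving to real photographs.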

For get_features.m, I implemented a basic SIFT-like algorithm.

- I first obtain the directional gradients.

- I then find the value for orientation at each keypoint.

- Then I divide each window into a 4-by-4 grid of sub-windows and place the orientations into their respective bins.
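The descriptor steps above might look something like the sketch below. It is an illustration in NumPy rather than the contents of get_features.m. The function name, the choice of 8 orientation bins, the magnitude weighting, and the final unit-length normalization are assumptions, and the Gaussian filtering mentioned later in this write-up is left out for brevity.

```python
import numpy as np

def sift_like_descriptor(img, x, y, feature_width=16, n_bins=8):
    """Hypothetical helper: 4x4 grid of orientation histograms around (x, y)."""
    img = np.asarray(img, dtype=float)
    half = feature_width // 2
    cell = feature_width // 4

    # Directional gradients, then magnitude and orientation at every pixel.
    gy, gx = np.gradient(img)
    mag = np.hypot(gx, gy)
    ang = np.arctan2(gy, gx)  # in [-pi, pi]

    # Map each orientation to one of n_bins equal-width bins.
    bins = np.floor((ang + np.pi) / (2 * np.pi) * n_bins).astype(int) % n_bins

    # Cut out the feature_width x feature_width window around the keypoint.
    r0 = int(round(y)) - half
    c0 = int(round(x)) - half
    if (r0 < 0 or c0 < 0 or r0 + feature_width > img.shape[0]
            or c0 + feature_width > img.shape[1]):
        raise ValueError("keypoint window falls outside the image")
    win_mag = mag[r0:r0 + feature_width, c0:c0 + feature_width]
    win_bin = bins[r0:r0 + feature_width, c0:c0 + feature_width]

    # One magnitude-weighted histogram per sub-window of the 4x4 grid.
    desc = np.zeros((4, 4, n_bins))
    for i in range(4):
        for j in range(4):
            rows = slice(i * cell, (i + 1) * cell)
            cols = slice(j * cell, (j + 1) * cell)
            desc[i, j] = np.bincount(win_bin[rows, cols].ravel(),
                                     weights=win_mag[rows, cols].ravel(),
                                     minlength=n_bins)

    # Flatten to a 4 * 4 * n_bins vector and normalize to unit length.
    desc = desc.ravel()
    norm = np.linalg.norm(desc)
    return desc / norm if norm > 0 else desc
```

With the assumed defaults this yields a 128-dimensional vector per keypoint. Keypoints closer than half a feature width to the image border are rejected, which is one reason the detector zeroes out the borders.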

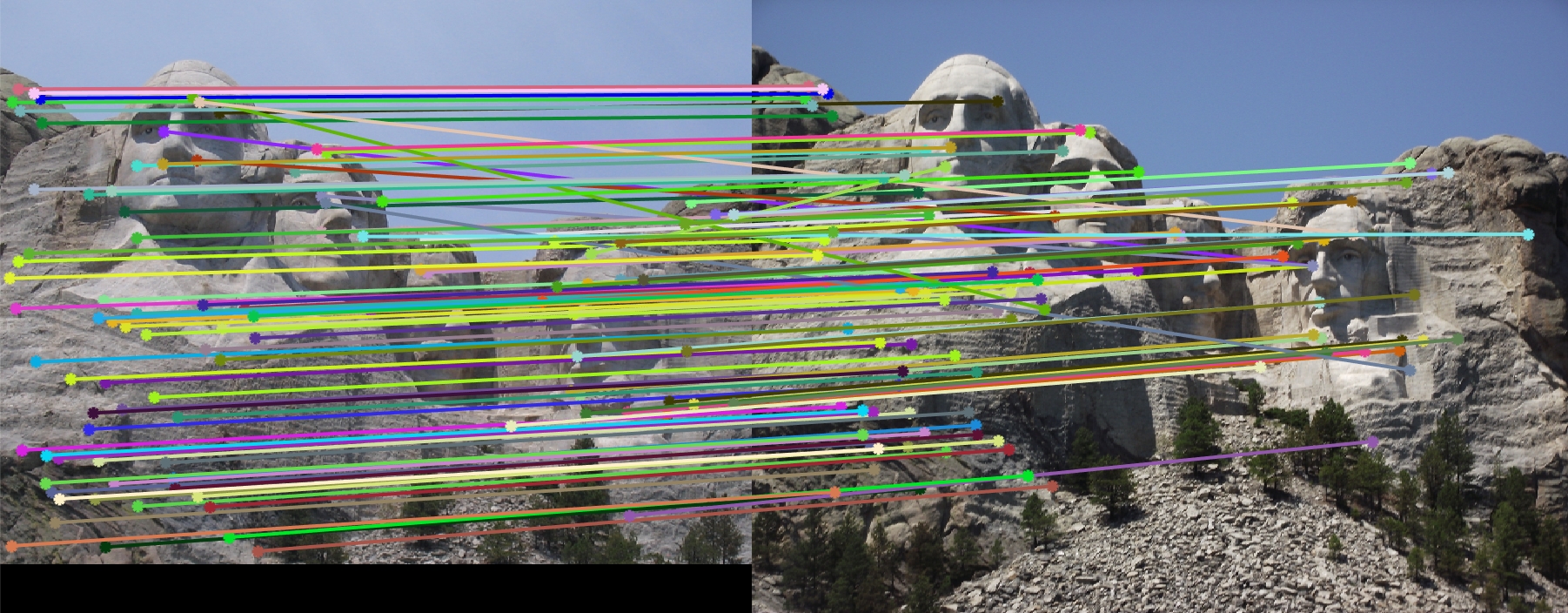

Feature Matching

- I calculated the similarity of two features from their Euclidean distance and applied the nearest neighbor distance ratio (NNDR) test.
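As a rough illustration of this matching step, the sketch below computes all pairwise Euclidean distances and keeps a match only when the nearest neighbor is clearly closer than the second nearest. The function name, the `ratio` cutoff of 0.8, and the confidence score `1 - NNDR` are assumptions made for the example, not values taken from this project.

```python
import numpy as np

def match_features(f1, f2, ratio=0.8):
    """Hypothetical helper: NNDR matching between two descriptor arrays.

    f1 has shape (n1, d) and f2 has shape (n2, d) with n2 >= 2.
    Returns (matches, confidences), sorted by decreasing confidence.
    """
    f1 = np.asarray(f1, dtype=float)
    f2 = np.asarray(f2, dtype=float)

    # Pairwise Euclidean distances, shape (n1, n2).
    dist = np.sqrt(((f1[:, None, :] - f2[None, :, :]) ** 2).sum(axis=2))

    # Nearest and second-nearest neighbor in f2 for every feature in f1.
    order = np.argsort(dist, axis=1)
    rows = np.arange(f1.shape[0])
    nn1 = dist[rows, order[:, 0]]
    nn2 = dist[rows, order[:, 1]]

    # Ratio near 0 means an unambiguous match; near 1 means two equally good ones.
    nndr = nn1 / np.maximum(nn2, 1e-12)
    keep = nndr < ratio

    matches = np.column_stack([rows[keep], order[keep, 0]])
    confidences = 1.0 - nndr[keep]

    # Most confident matches first.
    idx = np.argsort(-confidences)
    return matches[idx], confidences[idx]
```

A feature that sits equally far from two candidates gets a ratio of 1 and is discarded, which is how the ratio test filters out ambiguous matches on repetitive structures.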

I had to experiment with the sigma value of the second Gaussian filter in get_features.m. I hypothesize that downscaled images require smaller values of sigma: changing sigma from the feature width to three-fourths of that value increased accuracy by about 10%. For the keypoint threshold, I started with a value equal to the mean of the values in the matrix, but that gave far too few keypoints. Reducing the threshold to mean/6 increased the number of keypoints to more than 100.

Results in a table

|

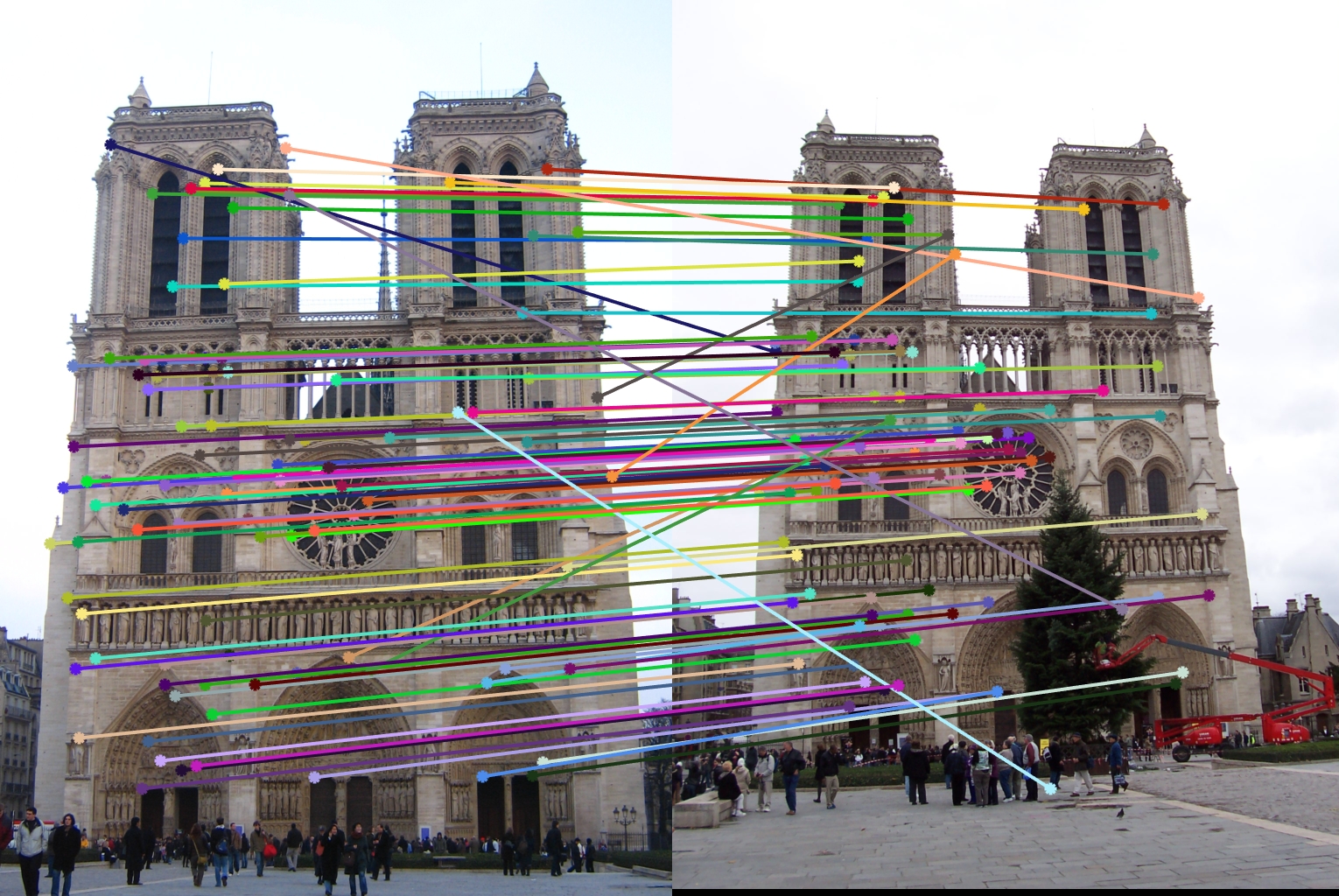

Results: 89% - 134/150 correct |

|

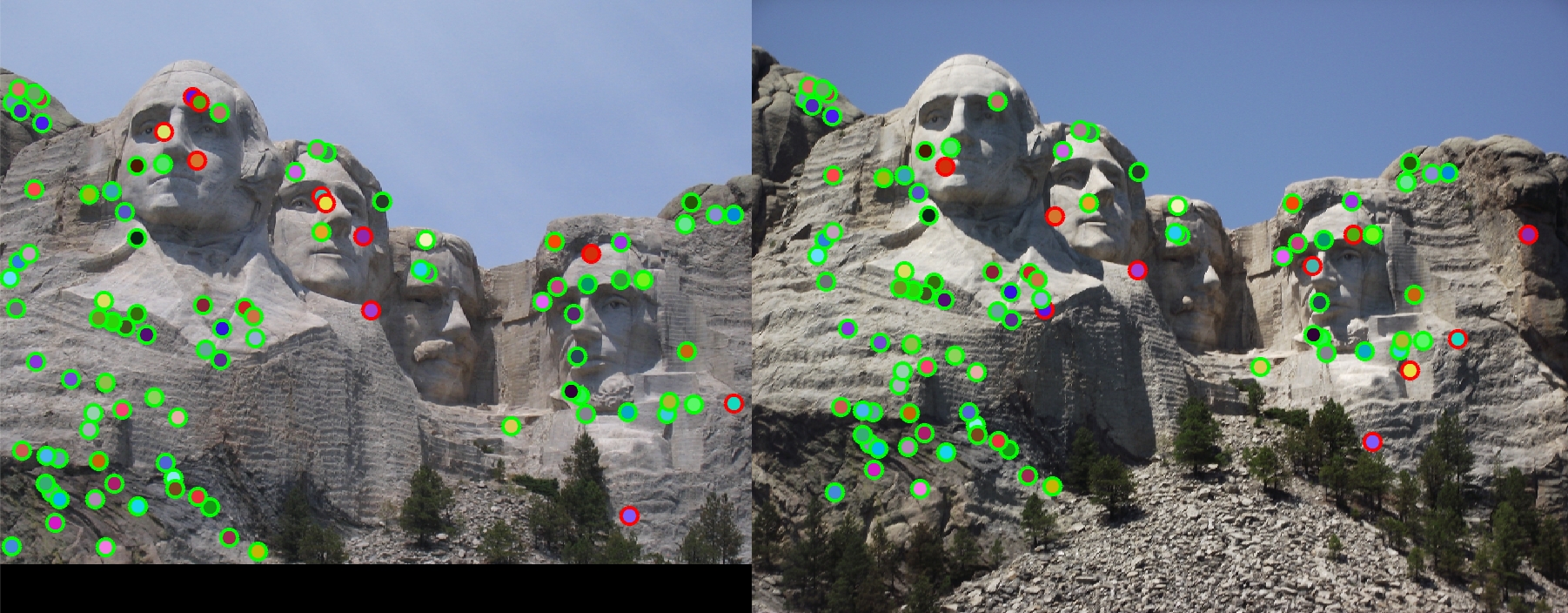

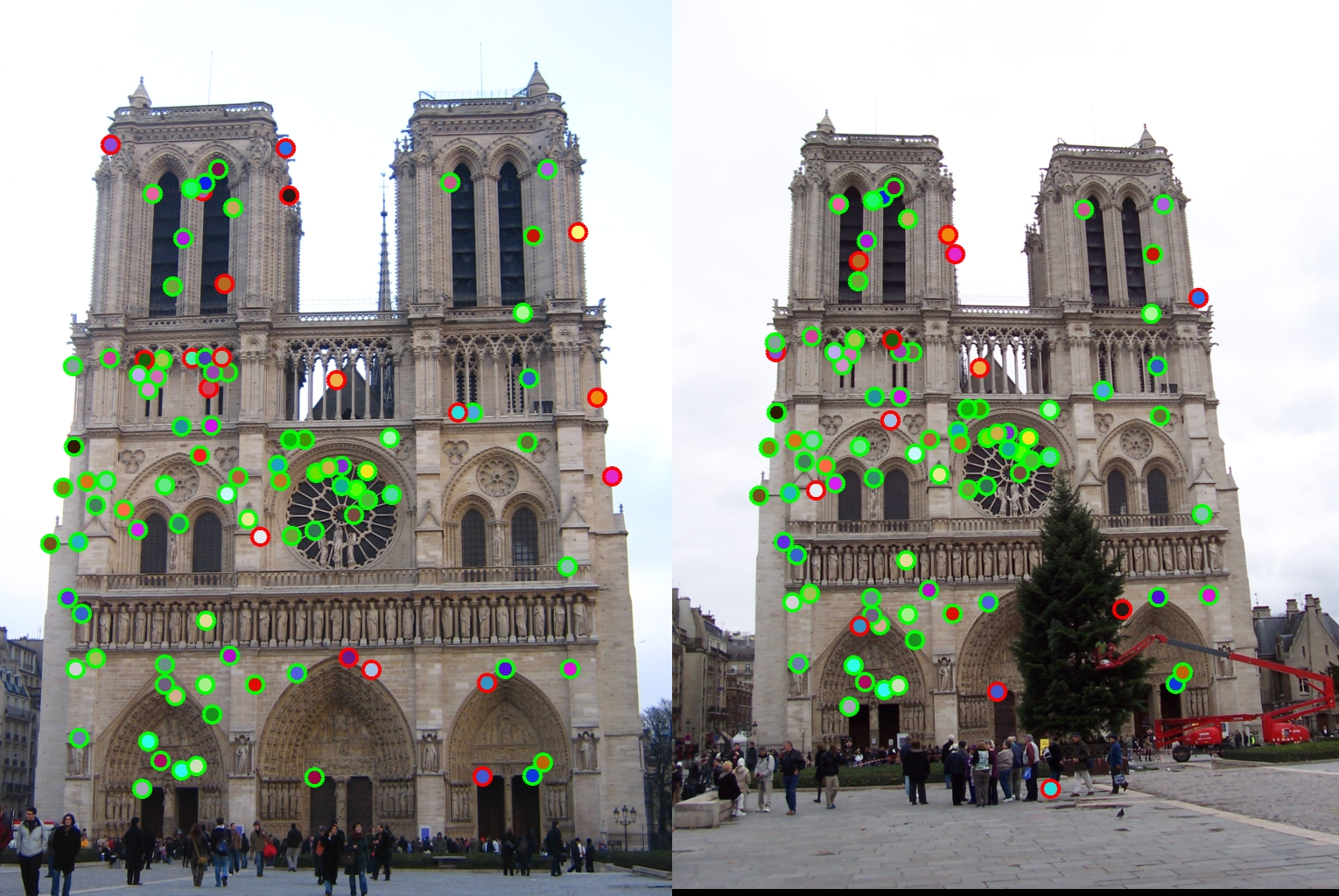

Results: 82% - 89/108 correct |

|

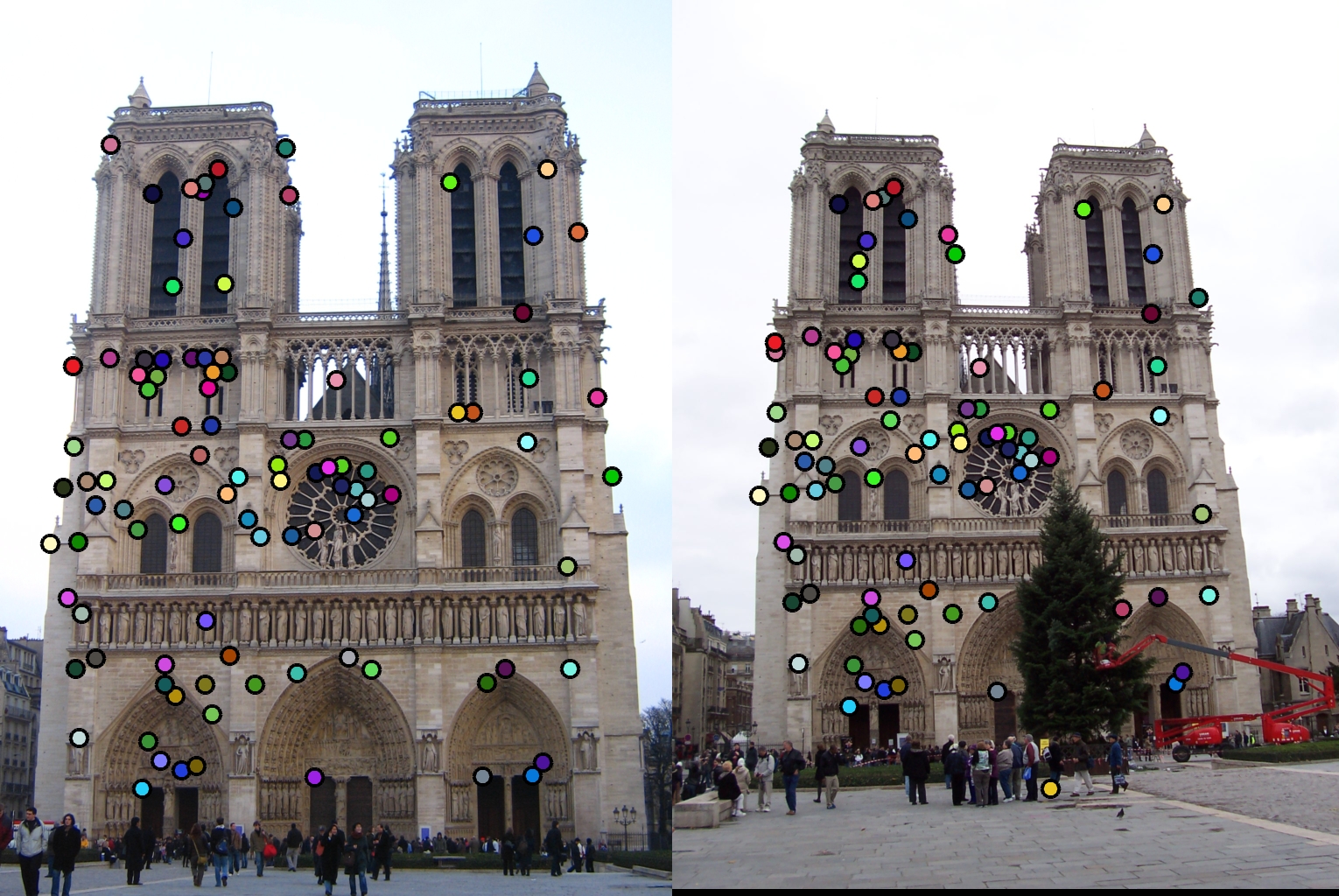

Results: 0.00% - 0/7 correct |