Project 2: Local Feature Matching

Overview

The goal of this assignment is to create a local feature matching algorithm using techniques described in Szeliski chapter 4.1. The pipeline we suggest is a simplified version of the famous SIFT pipeline. The matching pipeline is intended to work for instance-level matching -- multiple views of the same physical scene.

Details

The SIFT pipeline used specifically in this assignment consists of three parts: a keypoint detector which detects a list of keypoints from the input image, a feature creator which calculates SIFT-like descriptors from keypoints generated by the detector, and finally a matcher to match features and sort them by confidence. In the following paragraphs, I will talk about how I implement each part of them in high-level.

Implementation

Keypoint detector 1: Harris Corner Detector

The Harris Corner Detector is a simple but powerful way of getting keypoints. By calculating gradients in both x and y direction on a grey-scale image, we can use the formula in Szeliski 4.1 to get a cornerness value for every point on the image. It is simple and fast, and the result is not too bad if the differences between the two images are not too large. However, it is sensitive when, for example, the image is rotated.

Keypoint detector 2: SIFT DoG Detector

The second detector I implemented is the original filter in the SIFT pipeline, the DoG(Difference of Gaussian) filter. The difference-of-Gaussian function is

a fast and stable approximation to the Laplacian of Gaussian which is proved to be more stable than Hessian or Harris corner function. The basic idea is that

we pre-calculate a Gaussian Pyramid, and use that to calculate DoG images. Figure 4.11 in Szeliski shows this idea. After we get the DoG pyramid, we filter out

the interest points by searching local extrema in every 3x3x3 grids. Then, some extra processing steps are needed to reject some points in low contrast regions

and some edge points. Gradient direction is calculated at this stage in order to have rotation invariant features. And we also detect features from different

octaves and scales in the pyramid.

To read more about detailed information and theories, here is Lowe's paper: Lowe, D.G. International Journal of Computer Vision (2004) 60: 91. doi:10.1023/B:VISI.0000029664.99615.94

Local Feature: SIFT descriptor

The feature I go for is the actual SIFT descriptor as mentioned in Lowe's paper. The basic idea is the same as described in lectures: we have some area around

the keypoint, and we calculate the magnitude and orientation of gradient at each point in that area. After that, we apply a Gaussian function to it, do sub-pixel

interpolation, and distribute the magnitude into 4x4 windows with 8 bins each according to its orientation. The orientation of gradient is adjusted according to

the information we obtained from the detector. The scale information is used to adjust the area covered and the Gaussian function. The normalize -> threshold ->

normalize is used to reduce the influence of illumination change. This resulting descriptor is a 128 dimension vector, with rotation invariance.

As before, detailed information can be found in Lowe's paper.

Matching features: k-d tree

The naive search is O(n^2), which is quite slow. Instead, in Lowe's paper he proposed a search method based on k-d tree called Best-Bin-First search. Here, however, I do not implement that search. Instead, I use the knnsearch() comes with Matlab, and it can do a normal k-d tree search. Nonetheless, the search is still much faster than a exhaustive one.

Performance

Harris Corner Detector

Harris Corner Detector will normally give out 2000~3000 interest points each image with gradients calculated by sobel filter and thresholded at 1e-6. The calculation are all matrix operations so it is fast. On the Norte Dame pair it will take less than 5 seconds.

SIFT DoG Detector

The DoG Detector is slower in the sense that it has to check local extrema and reject some of them pixel-by-pixel. Also, time of orientation calculation is also linearly correspond to the number of detected keypoints. It will give out over 5500 interest points each image on the Norte Dame pair, and it normally take 40 seconds to finish.

SIFT descriptor

This part is rather slow because of my implementation. The problem comes from that because the rotation calculation and trilinear interpolations, it is hard to use matrix operations. Instead, I can only do pixel-by-pixel calculations, which is slower in Matlab. The running time is also linearly corresponding to number of keypoints. When paired with the DoG filter, because it gives out much more interest points, the calculation is much slower. The calculation normally takes 1 minute when using with DoG filter.

Feature matching

The native k-d tree search in Matlab is very quick. It will take about 10 seconds to do the matching with about 5000 points each image.

Some results

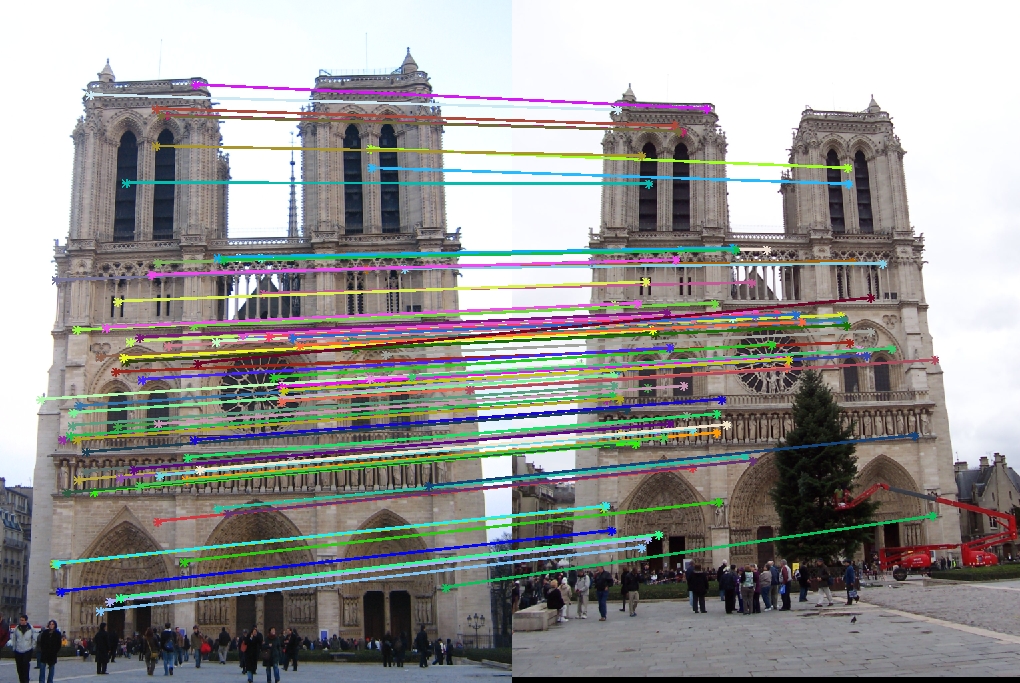

|

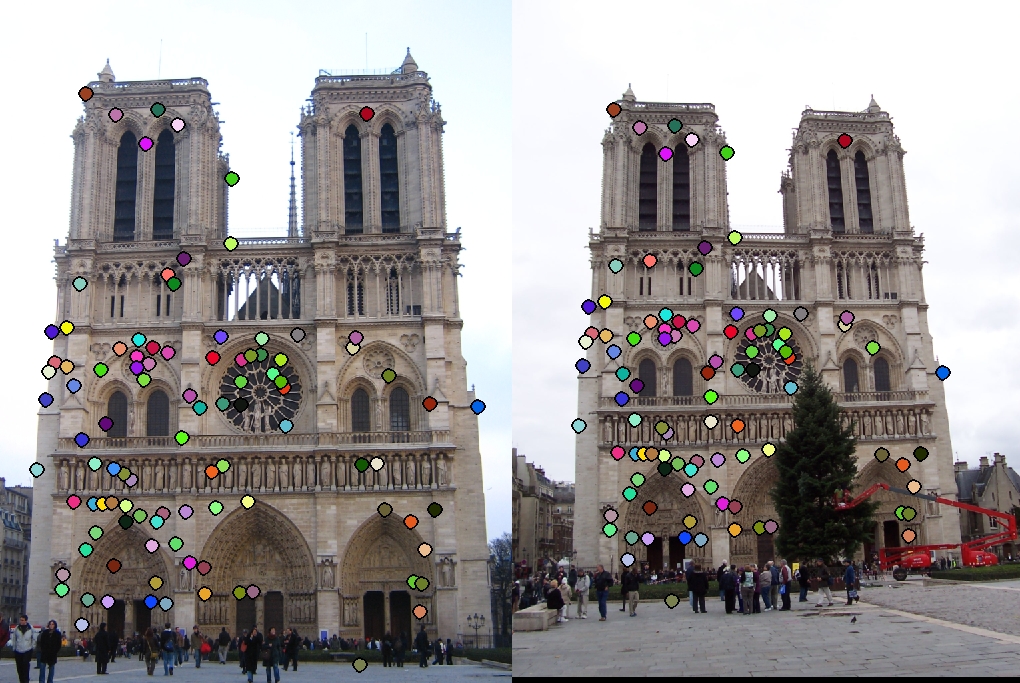

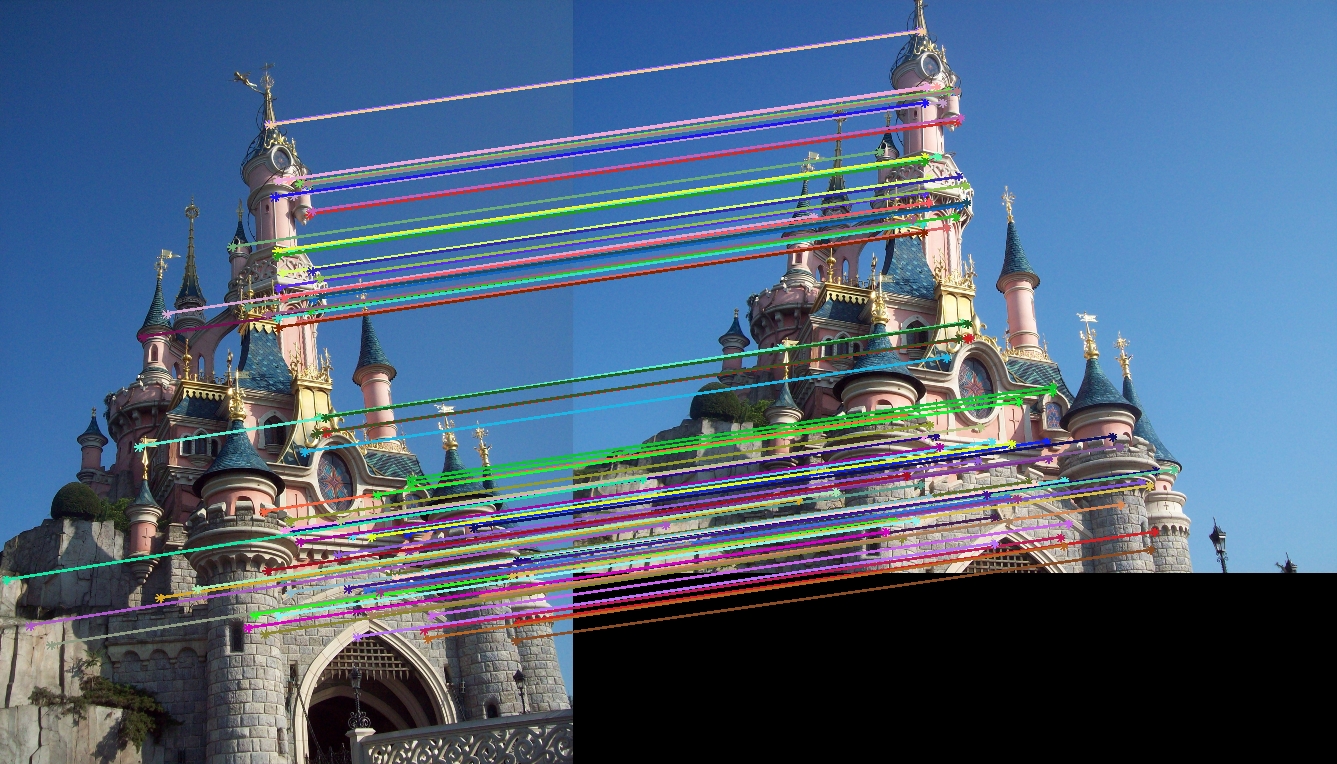

| Harris Corner Detector, accuracy:90% |

|

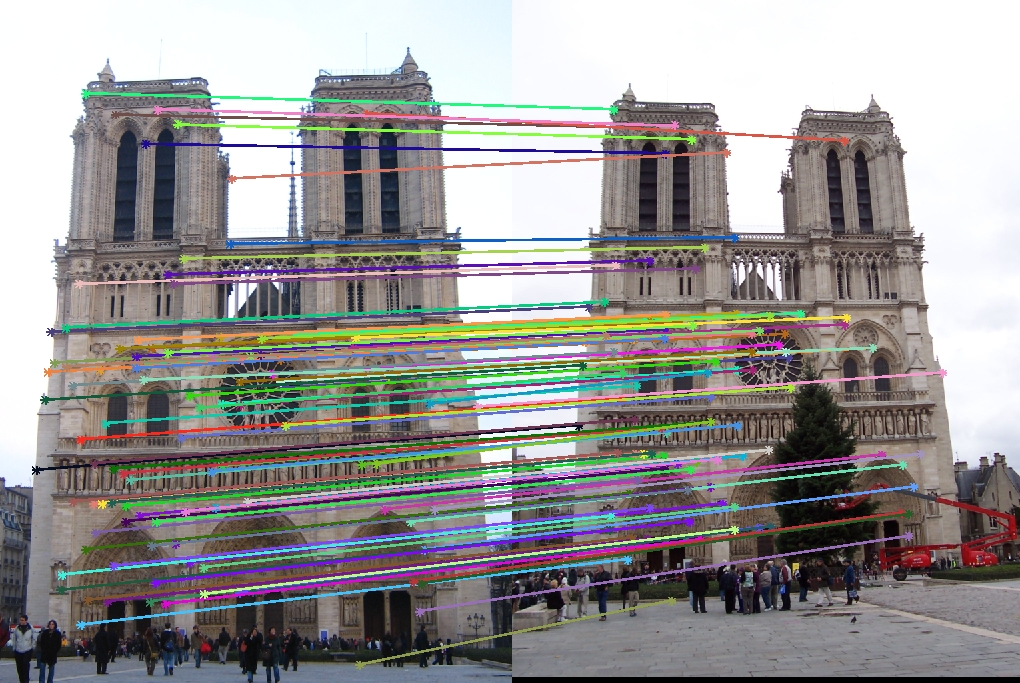

| SIFT DoG Detector, accuracy:93% |

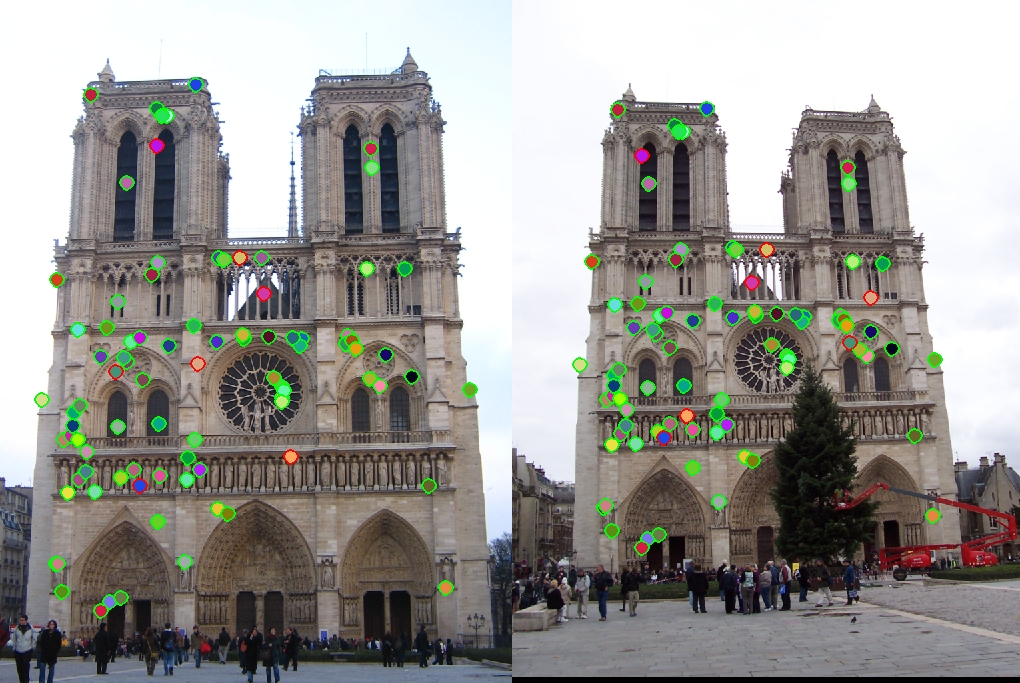

|

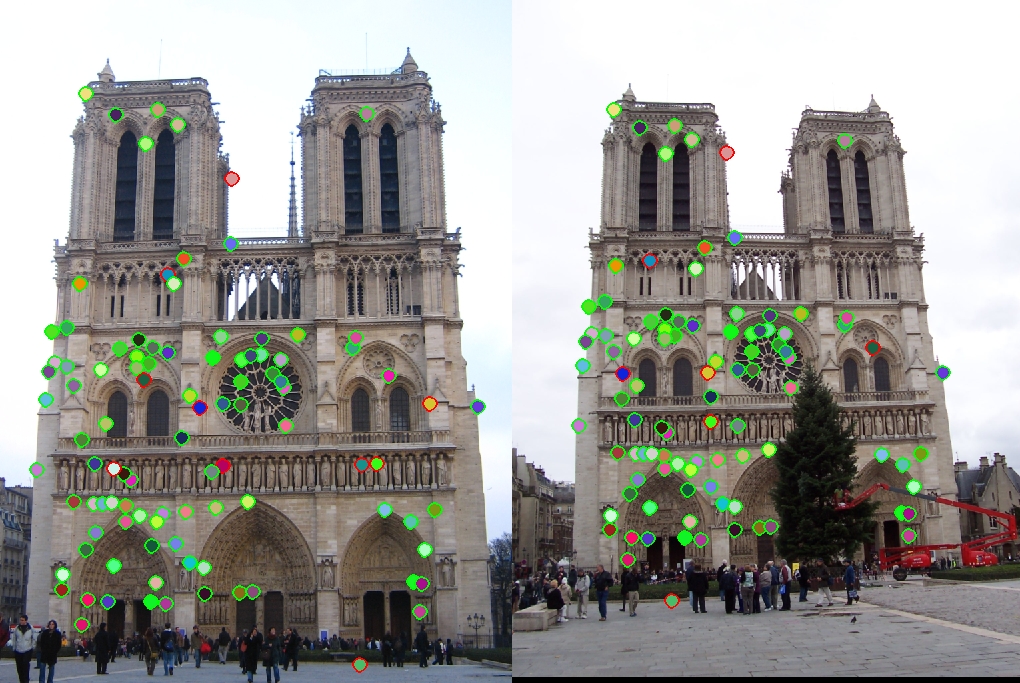

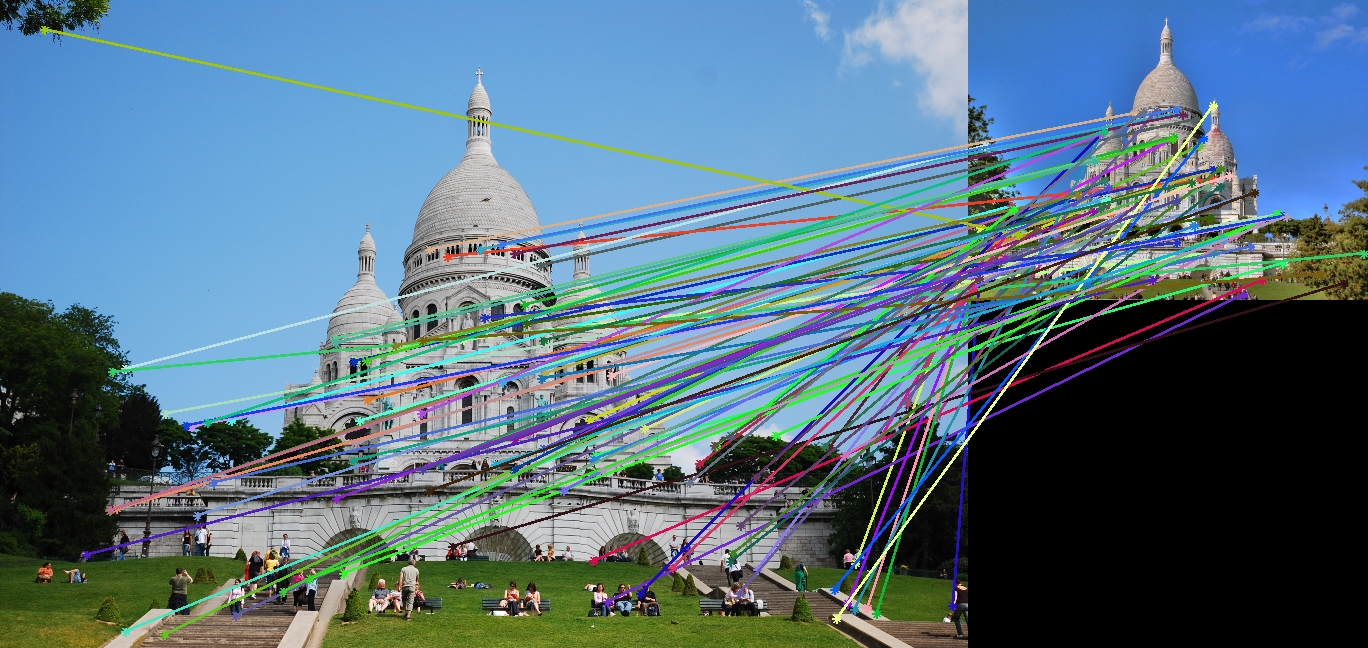

| Harris Corner Detector, accuracy:95% |

|

| SIFT DoG Detector, accuracy:100% |

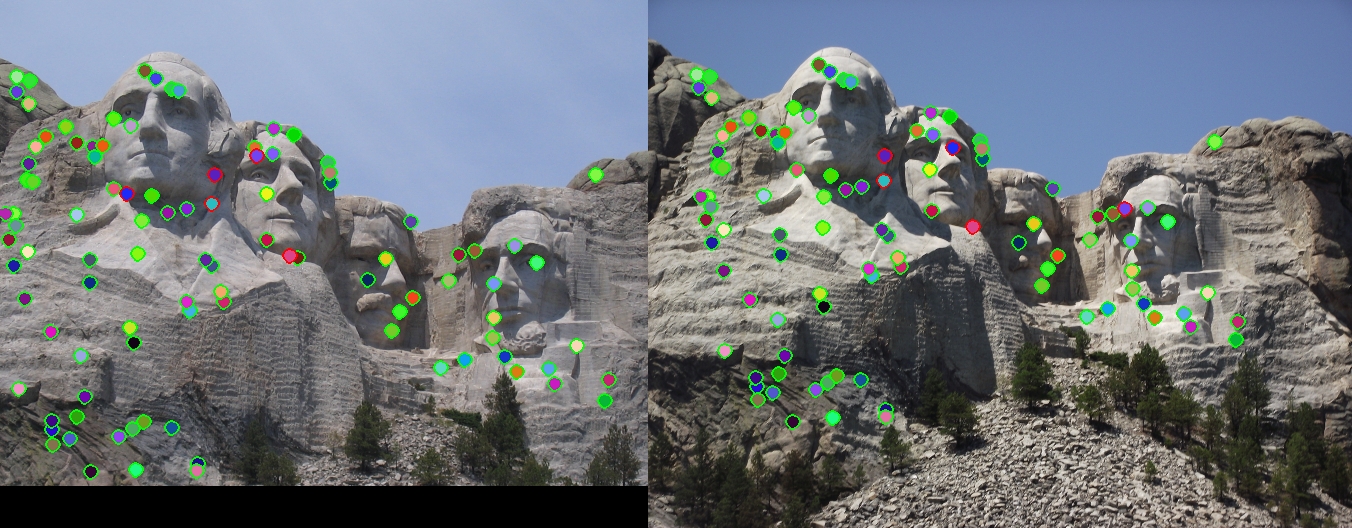

|

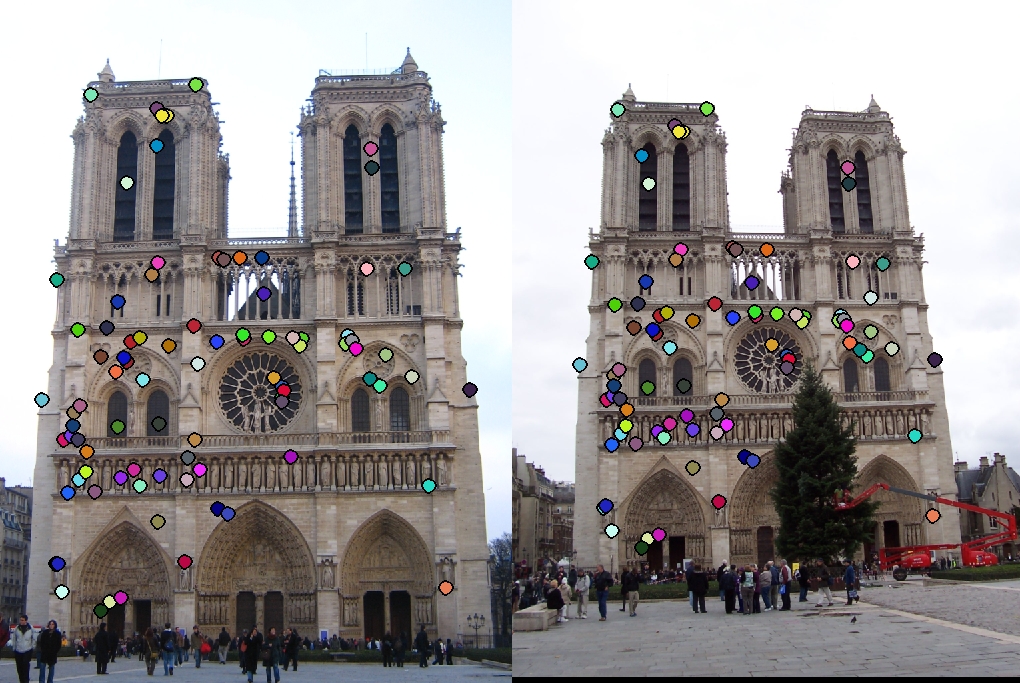

| Harris Corner Detector, accuracy:9% |

|

| SIFT DoG Detector, accuracy:61% |

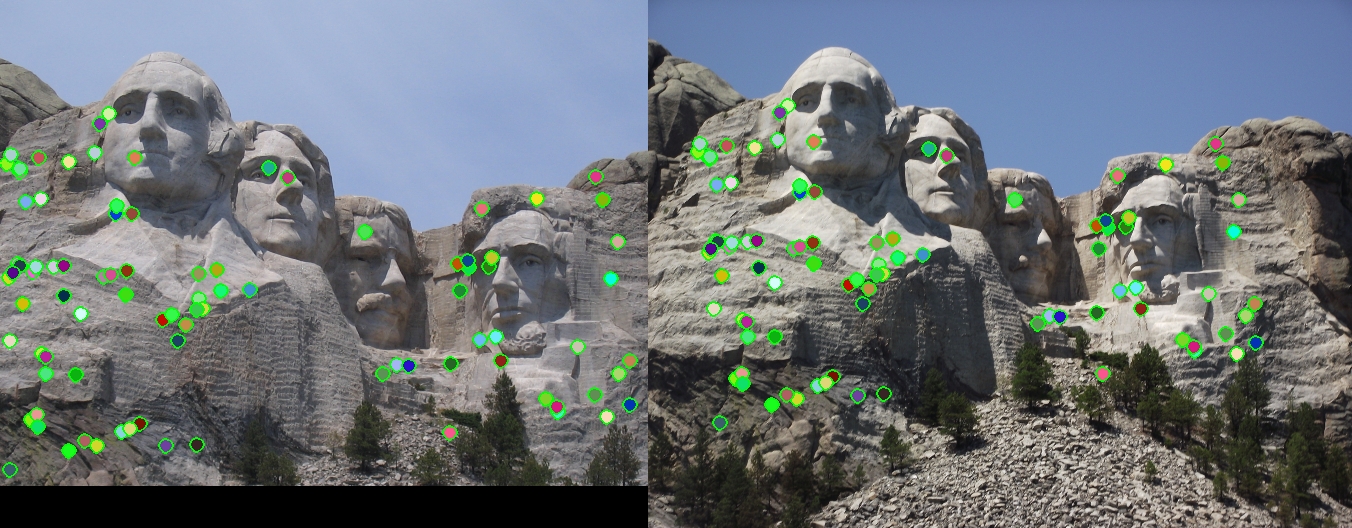

|

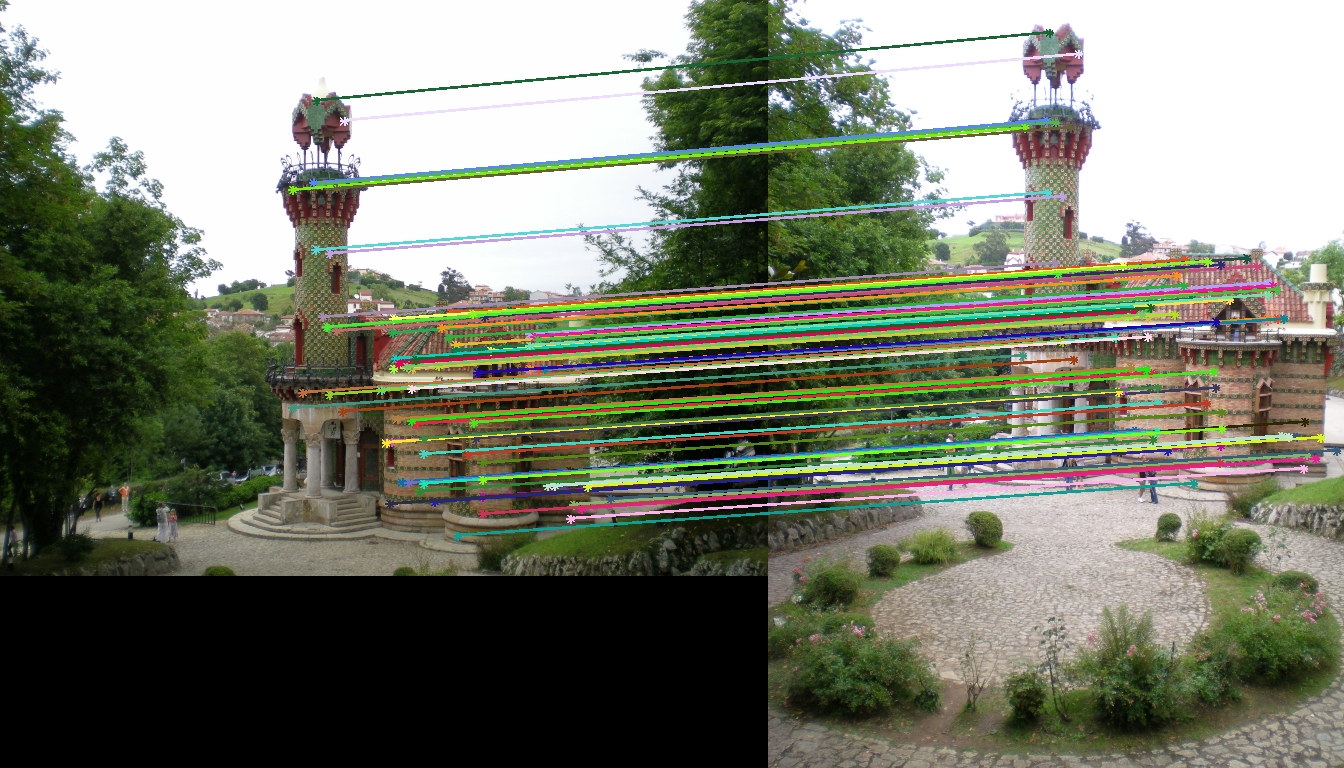

| SIFT DoG Detector |

|

| SIFT DoG Detector, not very well because the background introduces much noise |

|

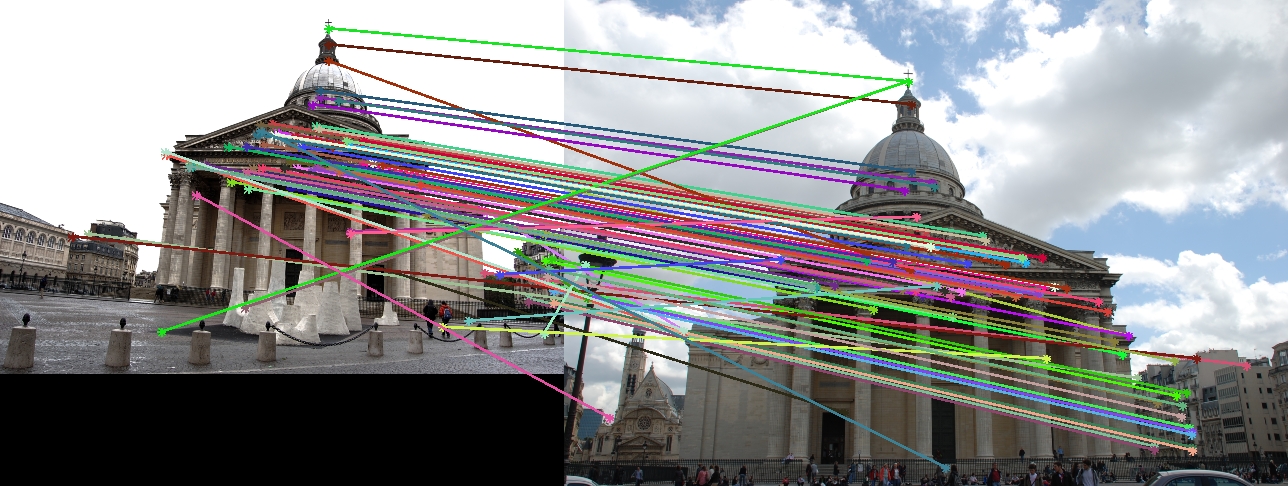

| SIFT DoG Detector, good match |

|

| SIFT DoG Detector, interesting to see it matches the house on the right side of the image |

|

| SIFT DoG Detector, it tries to match trees but not very successful |