Project 2: Local Feature Matching

The objective of this project is to create a local feature matching algorithm using a basic SIFT pipeline. If implemented correctly, it allows us to match two different photos of the same object based on distinctive points in the object, such as corners and other areas of high intensity change.

get_interest_points.m

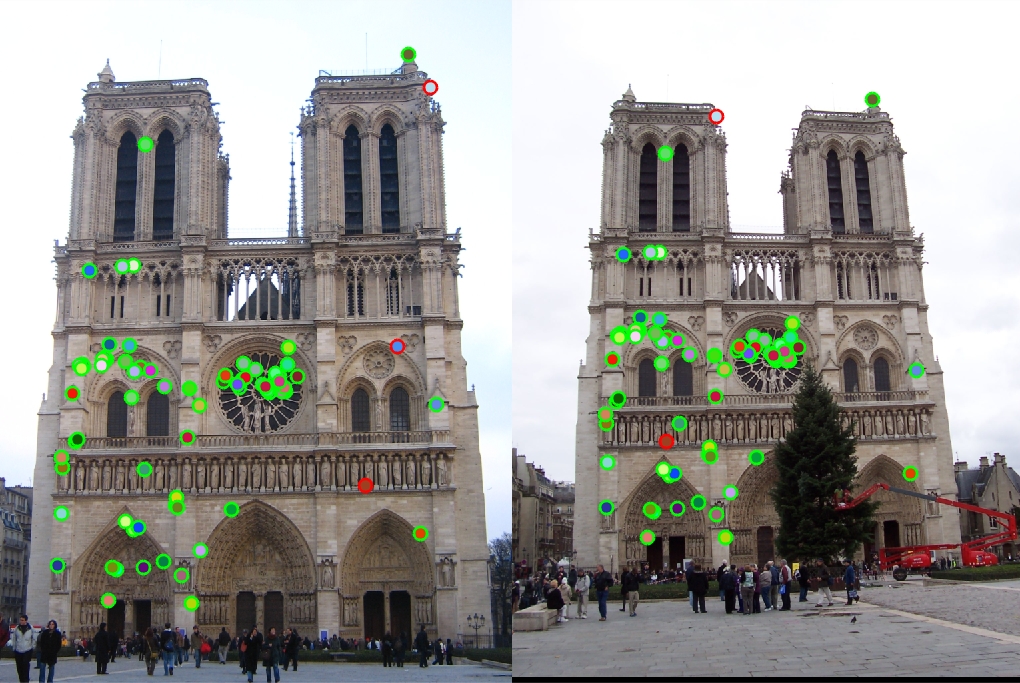

For this function, a simple Harris corner detector was implemented. Two 3x3 Sobel filters are generated and convolved with the image to obtain the gradients in the X and Y directions. This convolution is then repeated once more in each direction, and also performed once in the Y direction followed by the X direction, yielding three new images. These images are then convolved with a large 20x20 Gaussian filter to give us the three images needed for the Harris corner response equation. The distinctive interest points are then counted and mapped to their x and y positions in the image.
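
The detector can be sketched in pure Python as follows. This is a minimal sketch of the textbook Harris formulation, not the project's MATLAB code: it assumes a grayscale image stored as a list of rows of floats, forms the gradient products I_x^2, I_y^2 and I_x I_y from the Sobel responses, smooths them with a Gaussian, and keeps 3x3 local maxima of R = det(M) - alpha * trace(M)^2. The names `harris_points`, `alpha`, `threshold` and the small odd-sized 5x5 window (used instead of the 20x20 filter above so the kernel has a centre pixel) are illustrative assumptions.

```python
import math

# 3x3 Sobel kernels for the X and Y derivatives
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def filter2d(img, kernel):
    """Same-size 2-D correlation with zero padding (odd-sized kernels)."""
    h, w = len(img), len(img[0])
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    yy, xx = y + i - oy, x + j - ox
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[i][j] * img[yy][xx]
            out[y][x] = acc
    return out


def gaussian_kernel(size, sigma):
    """Normalised size x size Gaussian kernel (size should be odd)."""
    c = size // 2
    k = [[math.exp(-((i - c) ** 2 + (j - c) ** 2) / (2 * sigma ** 2))
          for j in range(size)] for i in range(size)]
    total = sum(map(sum, k))
    return [[v / total for v in row] for row in k]


def harris_points(img, alpha=0.06, threshold=1e-2, win=5, sigma=1.0):
    """Return (x, y) positions of Harris corners (hypothetical helper)."""
    h, w = len(img), len(img[0])
    ix = filter2d(img, SOBEL_X)
    iy = filter2d(img, SOBEL_Y)
    # Per-pixel gradient products that make up the second-moment matrix M
    ixx = [[ix[y][x] ** 2 for x in range(w)] for y in range(h)]
    iyy = [[iy[y][x] ** 2 for x in range(w)] for y in range(h)]
    ixy = [[ix[y][x] * iy[y][x] for x in range(w)] for y in range(h)]
    # Gaussian-weighted sums over the window around each pixel
    g = gaussian_kernel(win, sigma)
    sxx, syy, sxy = (filter2d(m, g) for m in (ixx, iyy, ixy))
    # Harris response R = det(M) - alpha * trace(M)^2
    r = [[sxx[y][x] * syy[y][x] - sxy[y][x] ** 2
          - alpha * (sxx[y][x] + syy[y][x]) ** 2
          for x in range(w)] for y in range(h)]
    # Keep responses above the threshold that are 3x3 local maxima
    points = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            v = r[y][x]
            if v > threshold and all(v >= r[y + dy][x + dx]
                                     for dy in (-1, 0, 1)
                                     for dx in (-1, 0, 1)):
                points.append((x, y))
    return points
```

On a bright square against a dark background, the response is positive only near the square's four corners; along straight edges one eigenvalue of M is near zero, so R is negative there and those pixels are rejected.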

get_features.m

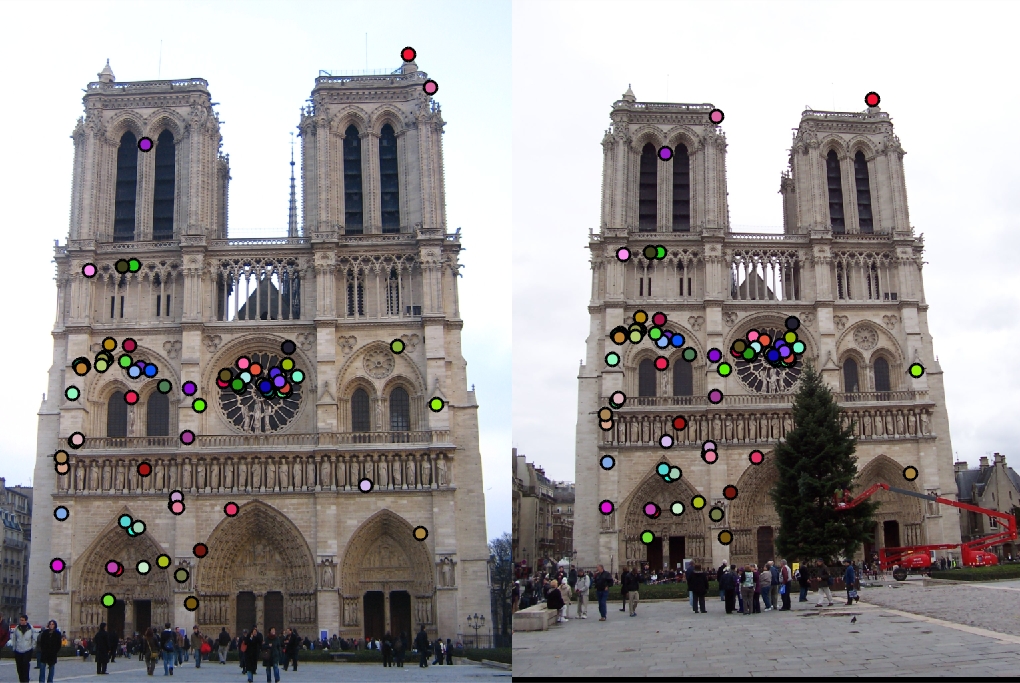

This function implements simplified SIFT feature descriptors to meet the requirements for this phase of the project. The first step is to take a 16x16 window around each interest point. Then the gradient direction and magnitude are computed for every pixel in the window, and the magnitudes are weighted based on their distance from the central interest point. Next, each window is subdivided into 16 smaller 4x4 cells. A histogram is built for each 4x4 cell by splitting the gradients among eight orientation bins spaced 45 degrees apart (-180, -135, -90, -45, 0, 45, 90, and 135 degrees), and the weighted magnitudes falling into each bin are summed. Finally, the 16 histograms of 8 bins each are concatenated into a 128-element feature descriptor.
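
The descriptor steps above can be sketched as follows. This is a minimal pure-Python sketch, not the project's MATLAB code; it assumes central-difference gradients, a Gaussian distance weight with `sigma=8` (half the window width), bins that each cover 45 degrees starting at -180, and a final L2 normalisation. The name `sift_descriptor`, the weighting function, and the normalisation step are assumptions not stated in the writeup.

```python
import math


def sift_descriptor(img, x, y, sigma=8.0):
    """128-D SIFT-like descriptor for the 16x16 window centred on (x, y).

    Hypothetical helper: img is a list of rows of floats, and (x, y)
    must be at least 9 pixels from every border so that central
    differences stay inside the image.
    """
    h, w = len(img), len(img[0])
    if not (9 <= x <= w - 9 and 9 <= y <= h - 9):
        raise ValueError("interest point too close to the image border")
    hist = [0.0] * 128                       # 16 cells x 8 orientation bins
    for i in range(16):                      # row inside the window
        for j in range(16):                  # column inside the window
            r, c = y - 8 + i, x - 8 + j
            dx = img[r][c + 1] - img[r][c - 1]
            dy = img[r + 1][c] - img[r - 1][c]
            mag = math.hypot(dx, dy)
            if mag == 0.0:
                continue
            ang = math.atan2(dy, dx)         # radians in [-pi, pi]
            # 8 bins of 45 degrees each, starting at -180 degrees
            b = int((ang + math.pi) / (2 * math.pi) * 8) % 8
            # Weight the magnitude by distance from the window centre
            wgt = math.exp(-((i - 7.5) ** 2 + (j - 7.5) ** 2) / (2 * sigma ** 2))
            cell = (i // 4) * 4 + (j // 4)   # which of the 16 4x4 cells
            hist[cell * 8 + b] += wgt * mag
    # L2-normalise so descriptors are comparable across contrast changes
    norm = math.sqrt(sum(v * v for v in hist))
    return [v / norm for v in hist] if norm > 0 else hist
```

For an image whose intensity increases steadily from left to right, every gradient points in the 0-degree direction, so only the 0-to-45-degree bin (index 4 of each cell's 8 bins) is non-zero in all 16 cells.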

match_features.m

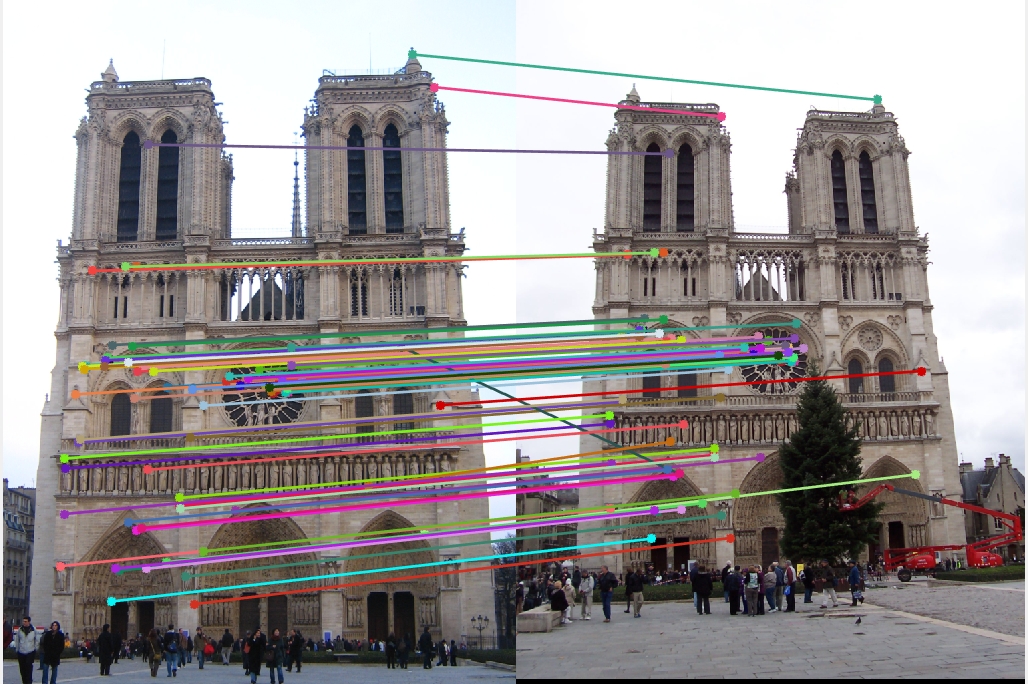

Following the lecture advice for feature matching, the Euclidean distances between features were calculated and sorted. A distance threshold of 0.65 was chosen. A confidence was then computed for each match, and the matches were sorted from highest to lowest confidence.
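
The matching step can be sketched as below. This sketch assumes the 0.65 threshold is applied to the nearest-neighbour distance ratio (the ratio test: distance to the closest feature divided by distance to the second closest) and that confidence is defined as `1 - ratio`; both are assumptions, and if the project thresholds raw distances instead, only the acceptance test changes. The name `match_features` mirrors the file name but its signature here is hypothetical.

```python
import math


def match_features(f1, f2, ratio_thresh=0.65):
    """Match descriptors in f1 to f2; return (i, j, confidence) triples.

    Hypothetical sketch: a match is kept when the nearest-neighbour
    distance ratio is below ratio_thresh, and results are sorted from
    highest to lowest confidence.
    """
    matches = []
    for i, a in enumerate(f1):
        # Euclidean distance from feature a to every feature in f2, sorted
        dists = sorted((math.dist(a, b), j) for j, b in enumerate(f2))
        if len(dists) < 2:
            continue
        (d1, j), (d2, _) = dists[0], dists[1]
        ratio = d1 / d2 if d2 > 0 else 1.0
        if ratio < ratio_thresh:
            matches.append((i, j, 1.0 - ratio))
    matches.sort(key=lambda m: m[2], reverse=True)
    return matches
```

A feature whose two nearest neighbours are almost equally far away produces a ratio close to 1 and is discarded as ambiguous, which is the motivation for thresholding the ratio rather than the raw distance.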

Examples of Results

Notre Dame (96% accuracy)


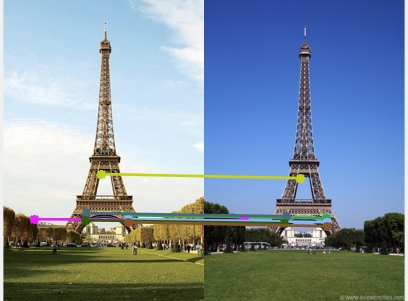

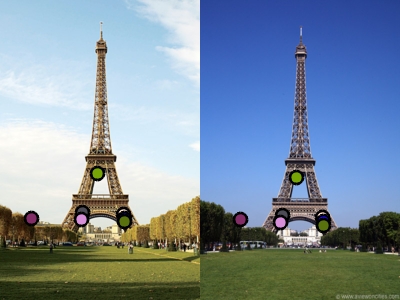

Eiffel Tower
