Project 2: Local Feature Matching

In this project, I implemented a local feature matching algorithm using a simplified version of the SIFT pipeline. It has three main steps:

- Get Interest Points

- Get Feature Descriptions

- Match Features

Algorithm

Get Interest Points

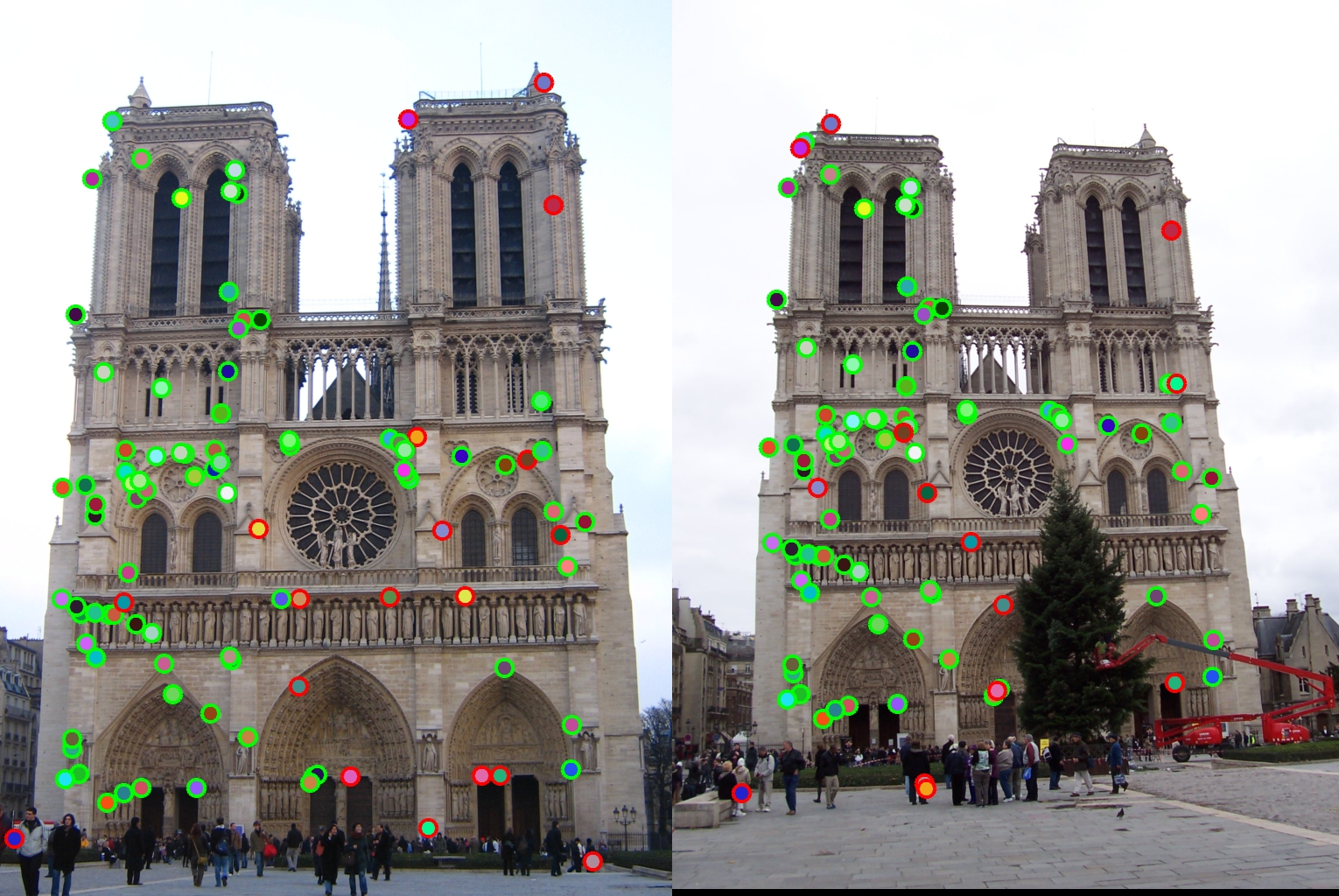

To detect interest points, I first used Sobel filters to get the x and y gradients of the image, then applied a Gaussian filter to blur the squares of the derivatives, computed the cornerness function, and finally applied non-maxima suppression. The image in this section shows the result after non-maxima suppression.
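The cornerness step can be sketched as follows. This is a minimal pure-Python illustration, not the project's MATLAB code: it assumes the standard Harris form R = det(M) - alpha * trace(M)^2, which also needs the cross term Ix*Iy alongside the squared derivatives. The function names and the default values of `alpha`, `hsize`, and `sigma` are hypothetical, not the values tuned in the project.

```python
import math

SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def filter2d(img, kernel):
    """Same-size 2D correlation with zero padding (img is a list of rows)."""
    h, w = len(img), len(img[0])
    kh, kw = len(kernel), len(kernel[0])
    oy, ox = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for j in range(kh):
                for i in range(kw):
                    yy, xx = y + j - oy, x + i - ox
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += img[yy][xx] * kernel[j][i]
            out[y][x] = acc
    return out


def gaussian_kernel(hsize, sigma):
    """Normalized hsize x hsize Gaussian kernel."""
    c = hsize // 2
    k = [[math.exp(-((x - c) ** 2 + (y - c) ** 2) / (2 * sigma ** 2))
          for x in range(hsize)] for y in range(hsize)]
    total = sum(sum(row) for row in k)
    return [[v / total for v in row] for row in k]


def harris_response(img, alpha=0.04, hsize=5, sigma=1.0):
    """Cornerness R = det(M) - alpha * trace(M)^2 at every pixel."""
    h, w = len(img), len(img[0])
    ix = filter2d(img, SOBEL_X)
    iy = filter2d(img, SOBEL_Y)
    g = gaussian_kernel(hsize, sigma)
    # Blur the squared derivatives and the cross term with the Gaussian.
    ixx = filter2d([[ix[y][x] ** 2 for x in range(w)] for y in range(h)], g)
    iyy = filter2d([[iy[y][x] ** 2 for x in range(w)] for y in range(h)], g)
    ixy = filter2d([[ix[y][x] * iy[y][x] for x in range(w)] for y in range(h)], g)
    return [[ixx[y][x] * iyy[y][x] - ixy[y][x] ** 2
             - alpha * (ixx[y][x] + iyy[y][x]) ** 2
             for x in range(w)] for y in range(h)]
```

On a synthetic image containing a bright square, this response is positive at the square's corners, negative along its straight edges, and zero in flat regions, which is why a positive threshold isolates corner candidates.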

For non-maxima suppression, I used BWCONNCOMP to find the connected components and kept the local maximum of each one. To improve accuracy, I experimented with different alpha and threshold values, and I also adjusted the hsize used to create the Gaussian filter. These changes improved the accuracy on the Notre Dame pair by 15 percentage points, from 68% to 83%.
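The connected-component approach to non-maxima suppression can be illustrated with a short Python sketch. It stands in for MATLAB's BWCONNCOMP with a hand-written breadth-first search and assumes 8-connectivity; the function name and the `threshold` parameter are hypothetical.

```python
from collections import deque


def nms_connected_components(response, threshold):
    """Keep one point per connected blob of above-threshold responses.

    Returns a list of (x, y) positions, one per component, at the pixel
    with the largest response in that component.
    """
    h, w = len(response), len(response[0])
    seen = [[False] * w for _ in range(h)]
    points = []
    for y in range(h):
        for x in range(w):
            if seen[y][x] or response[y][x] <= threshold:
                continue
            # Flood-fill this component, tracking its strongest pixel.
            queue = deque([(y, x)])
            seen[y][x] = True
            best = (response[y][x], y, x)
            while queue:
                cy, cx = queue.popleft()
                # On exact ties, the tuple comparison prefers larger y, then x.
                best = max(best, (response[cy][cx], cy, cx))
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if (0 <= ny < h and 0 <= nx < w
                                and not seen[ny][nx]
                                and response[ny][nx] > threshold):
                            seen[ny][nx] = True
                            queue.append((ny, nx))
            points.append((best[2], best[1]))
    return points
```

One consequence of this design is that the threshold controls both how many components exist and how large they are: a low threshold merges nearby corners into a single blob and therefore a single interest point.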

Get Feature Descriptions

I first implemented this function using normalized patches as features. Then I implemented SIFT-like features. I created two Sobel filters to get the x and y gradients of the image, and then used atan2 to compute the gradient orientation at each pixel. For each interest point, I built a 4x4 grid of cells around it. For each cell, I iterated over its pixels and assigned each pixel to the bucket corresponding to its gradient orientation. There are 8 buckets in total, so each feature has 4*4*8 = 128 dimensions. Finally, I normalized each feature to unit length. These SIFT-like features improved the accuracy on the Notre Dame pair by around 30% when used with the cheat_interest_points function.
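The descriptor construction can be sketched in Python as below. Several details are assumptions that the write-up does not specify: each cell is 4x4 pixels (so the window is 16x16), each pixel votes with its gradient magnitude rather than a count of 1, and pixels outside the image are skipped. The function name and the `cell` parameter are hypothetical.

```python
import math


def sift_like_descriptor(ix, iy, cx, cy, cell=4):
    """Build a 4x4x8 = 128-dimensional orientation histogram around (cx, cy).

    ix, iy are the x and y gradient images (lists of rows).
    """
    half = 2 * cell                      # window spans [-half, half) per axis
    desc = [0.0] * (4 * 4 * 8)
    h, w = len(ix), len(ix[0])
    for dy in range(-half, half):
        for dx in range(-half, half):
            y, x = cy + dy, cx + dx
            if not (0 <= y < h and 0 <= x < w):
                continue                 # assumption: ignore out-of-image pixels
            gx, gy = ix[y][x], iy[y][x]
            mag = math.hypot(gx, gy)
            if mag == 0.0:
                continue
            angle = math.atan2(gy, gx)   # in [-pi, pi]
            # Map the angle to one of 8 equal-width orientation buckets.
            bucket = int((angle + math.pi) / (2 * math.pi) * 8) % 8
            cell_row = (dy + half) // cell
            cell_col = (dx + half) // cell
            # Assumption: vote with gradient magnitude.
            desc[(cell_row * 4 + cell_col) * 8 + bucket] += mag
    norm = math.sqrt(sum(v * v for v in desc))
    return [v / norm for v in desc] if norm > 0 else desc
```

For an image whose gradient is constant everywhere, every cell places all of its votes in the same bucket, so the descriptor has exactly 16 equal non-zero entries after normalization.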

Match Features

Here I implemented the ratio test described in Szeliski. For each feature in image1, I search for its two nearest neighbours in image2, n1 (closest) and n2, at distances d1 and d2. The smaller the ratio d1/d2, the more confident I am about the feature pair. I set the threshold on d1/d2 to 0.7 so that only sufficiently confident matches are returned.
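A minimal Python sketch of the ratio test follows. It assumes Euclidean distance between descriptors and a brute-force search over all pairs; the function name, the returned tuple layout, and the confidence value 1 - d1/d2 are illustrative choices rather than the project's actual interface.

```python
import math


def match_features(features1, features2, ratio_threshold=0.7):
    """Match each feature in features1 to features2 using the ratio test.

    Returns (index1, index2, confidence) tuples, most confident first.
    """
    matches = []
    for i, f1 in enumerate(features1):
        # Distances from f1 to every feature in the second image, sorted.
        dists = sorted((math.dist(f1, f2), j) for j, f2 in enumerate(features2))
        if len(dists) < 2:
            continue                     # the ratio needs two neighbours
        (d1, j1), (d2, _) = dists[0], dists[1]
        ratio = d1 / d2 if d2 > 0 else 1.0
        if ratio < ratio_threshold:
            matches.append((i, j1, 1.0 - ratio))
    matches.sort(key=lambda m: m[2], reverse=True)
    return matches
```

A feature whose two nearest neighbours are almost equally far away yields a ratio near 1 and is dropped, because either neighbour could plausibly be the true match.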

Results

For the Notre Dame pair, the accuracy is 81%. For the Mount Rushmore pair, the accuracy is an amazing 97%. For the Episcopal Gaudi pair, however, the accuracy is unfortunately 0%. I tried tuning the parameters, but the accuracy never reached 5%. I think this is because one image is much smaller and darker than the other, so a single fixed scale simply did not work.