Project 2: Local Feature Matching

The project is about SIFT pipeline for local feature matching. I have implemented the followings.

- Harris corner detector

- SIFT-like feature descriptor

- "Ratio Test" matching

Harris Corner Detector

In computing the cornerness score, we use the ratio lamda1*lamda2/(lamda1+lamda2) instead of lamda1*lamda2-alpha*(lamda1+lamda2)^2. In this way, we only need to tune the threshold value. We tune the threshold to select over 1000 key point candidates. Then reject those who lies near the boundary of image and select only local maxima with radius 1(the pixel that have higher score than its 8 neighors). Next, we use adaptive non-maximum suppression to further reduce the candidate sets.

To implement non-maximum suppression, we sort the confidence (i.e. cornerness score) in descending order for all candidates. Build a vector D storing the minimum distance to pixels that have higher confidence. Since the confidence is sorted, we only need to compute distance from the pixels that is on the left of current position in the sorted confidence list. Only about 2000 candidates left, the computation complexity is ok although computing D takes O(n^2) time. Then select the pixels corresponds to the top 2000 of D. In this way, the key points selected are more "uniformly" distributed around the image.

To calculate the orientation of key points, we select feature_width*feature_width pixels around the key point as a patch. Calculate the magnitude and direction of the gradient of patch. (To make it less affected by boundary, first calculate the gradient of the whole image then select the patch in it.) Use a gaussion to compute the weighted average of magnitude so that the pixels near the key point have higher influence. Divide 360 degree in to 36 10-degree bins and compute the histogram by sum all magnitude falling in the bin. The orientation is the bin with highest value, and estimate the corresponding degree with the center of bin.

It would also help to filter the original image with a small gaussian filter to reduce noise in the first place. For the other gaussian filter to convolve with the matrix M, we choose the size to be equal to the feature size, and sigma = 20.

SIFT-like Feature Descriptor

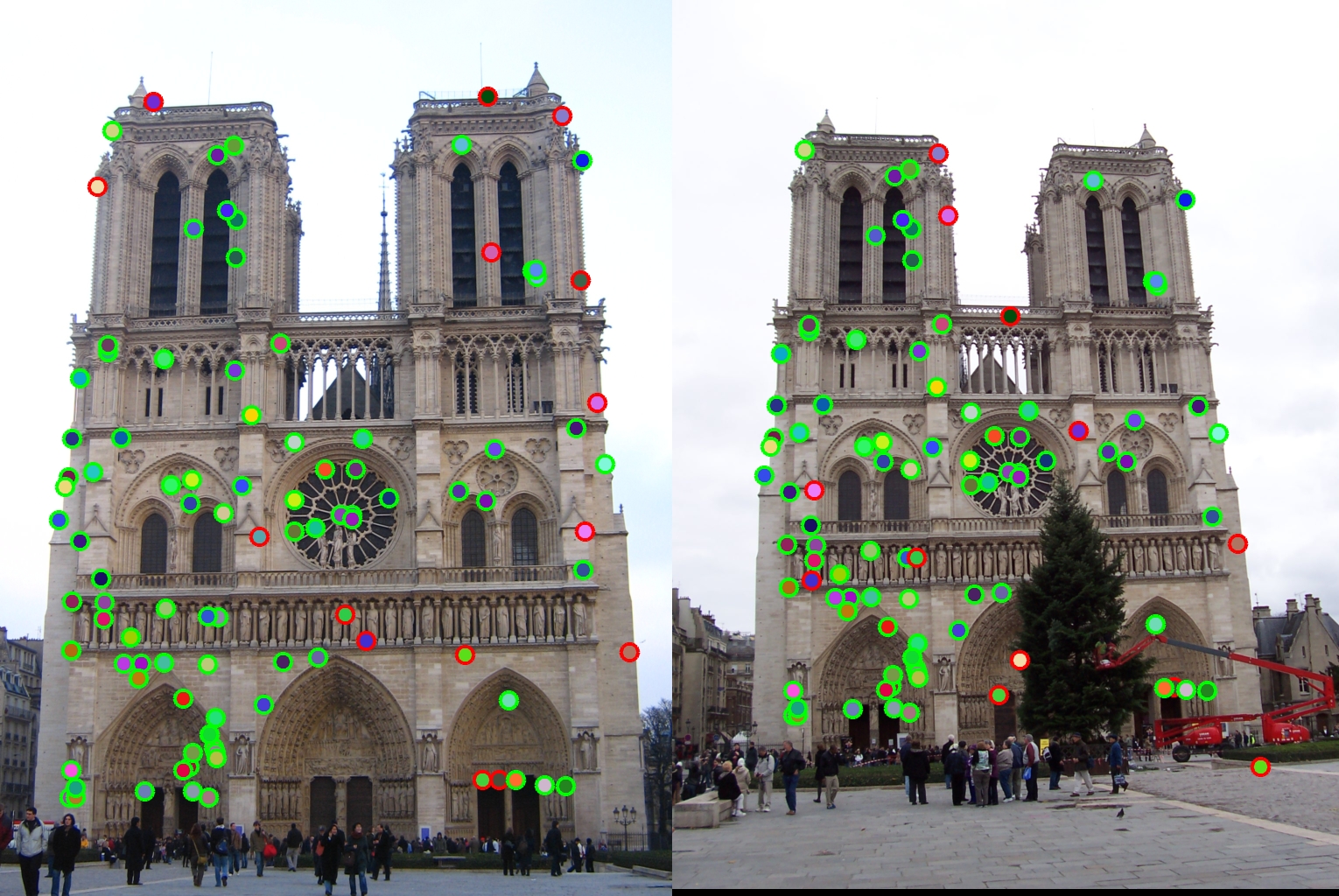

I have tried 2 ways of implementation:SIFT(with trilinear interpolation), and Combining response to sobel filters of different orientation. For Nortre Dame image, SIFT achieves 85% and Combining responses achieves 67% in accuracy for the 100 most confident matches.

To incorporate orientation of keypoints, we first calibrate the direction of gradient of pixels in the feature patch so that it is 0 degree when aligning with the key-point orientation.

It would also help to filter the original image with a small gaussian filter to reduce noise in the first place.

"Ratio Test" Matching

For every feature from image 1, compute the distance to every feature from image 2, the ratio = miniDistance/secondMinDistance. The smaller the ratio, the less ambiguity the matching is. So confidence = 1 - ratio. To speed up, we use kdtree for find 2-nearest neigbors. If we do not use kdtree, the step for matching features takes 1.28s. After using kdtree, the maching features step takes only 0.38s.

Results

|

The above table shows the results of Notre Dame pair. The most confident 100 matches achieve accuracy of 85%

|

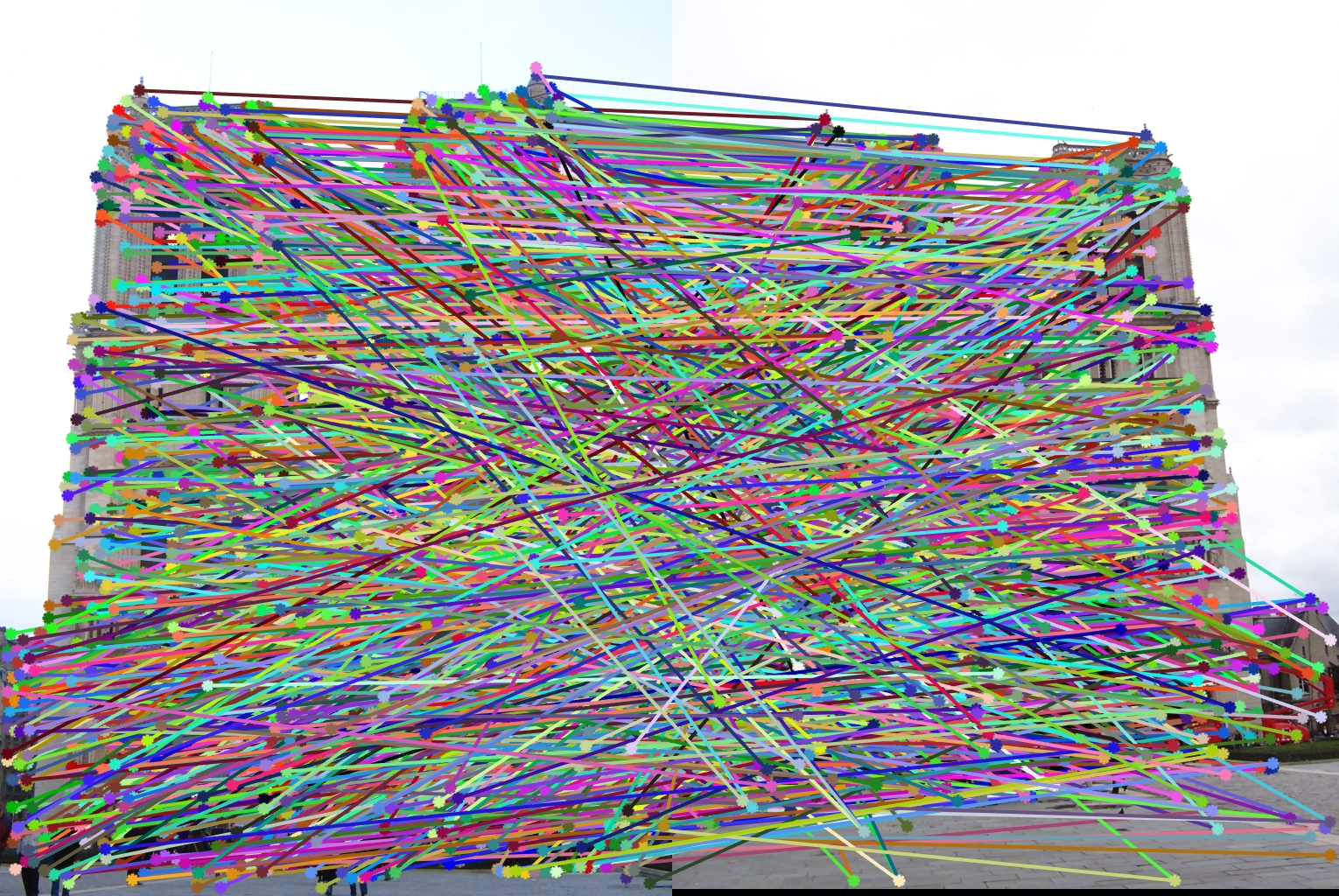

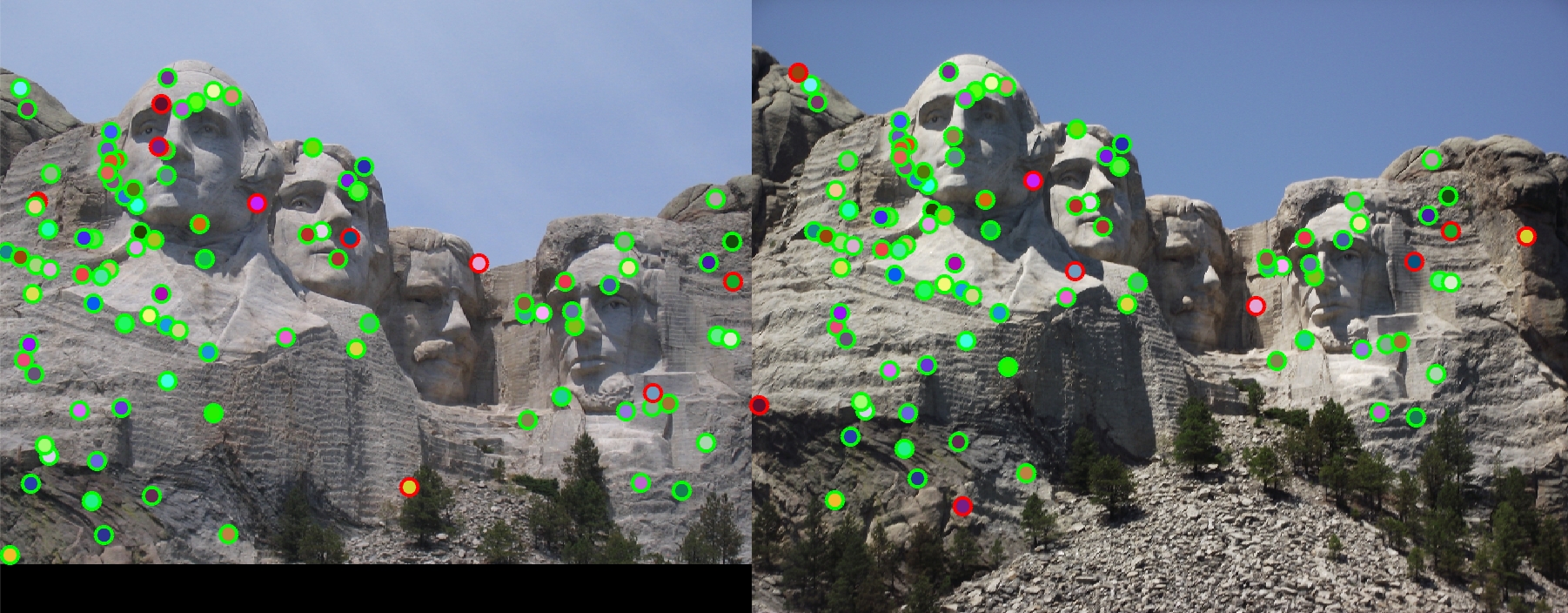

The above table shows the results of Mount Rushmore pair and Episcopal Gaudi pair with accuracy 91% and 2% respectively. The implementation does not take into account of scaling, so the accrucy is low for Episcopal Gaudi pair.