Project 3 / Camera Calibration and Fundamental Matrix Estimation with RANSAC

Project 3 is all about discovering how much of a difference understanding camera parameters and scene geometry can make when finding matching features across images. In project 2, we learned how to find good interest points, build descriptors for them, and determine the best matches between two images. Now we need a way to further trim down our set of matches: we saw some outliers that matched very well according to our descriptor but were not true matches, because the points lay on different parts of the building. This is where project 3 comes in.

Part 1: Camera Projection Matrix

Part 1 of the project was to calculate the camera projection matrix, which maps 3D world coordinates to 2D image coordinates and can be estimated from corresponding 2D and 3D points. It combines the camera's intrinsic parameters with its extrinsic parameters: the rotation and translation of the camera with respect to the rest of the scene. I compute it by setting up a homogeneous system of linear equations from the point correspondences and solving it with singular value decomposition. The projection matrix that my code produces for the given keypoints is below (the residual was 0.0445):
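For reference, here is a minimal NumPy sketch of the SVD approach described above, assuming the correspondences arrive as an (n, 2) array of image points and an (n, 3) array of world points. Each correspondence contributes two rows to a homogeneous system A m = 0, and the 12 entries of M (up to scale) are the right singular vector of A with the smallest singular value. The function names are hypothetical, and the residual shown (summed pixel distance between the given and reprojected 2D points) is one common definition, assumed here rather than taken from the project code.

```python
import numpy as np

def estimate_projection_matrix(pts2d, pts3d):
    """Estimate a 3x4 projection matrix M (up to scale) from n >= 6 2D-3D matches."""
    n = pts2d.shape[0]
    A = np.zeros((2 * n, 12))
    for i in range(n):
        P = np.append(pts3d[i], 1.0)  # homogeneous world point
        u, v = pts2d[i]
        # u * (m3 . P) - (m1 . P) = 0
        A[2 * i, 0:4] = P
        A[2 * i, 8:12] = -u * P
        # v * (m3 . P) - (m2 . P) = 0
        A[2 * i + 1, 4:8] = P
        A[2 * i + 1, 8:12] = -v * P
    # Null-space direction: right singular vector for the smallest singular value
    _, _, Vt = np.linalg.svd(A)
    return Vt[-1].reshape(3, 4)

def reprojection_residual(M, pts2d, pts3d):
    """Sum of pixel distances between the given 2D points and the projected 3D points."""
    homog = np.hstack([pts3d, np.ones((len(pts3d), 1))]) @ M.T
    projected = homog[:, :2] / homog[:, 2:3]
    return np.sum(np.linalg.norm(projected - pts2d, axis=1))
```

Because M is only recovered up to scale (and sign), compare it to other estimates after dividing by a common entry such as M[2, 3].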

Another aspect of this step was to compute the camera center in the same world coordinate system used to calculate the projection matrix. The center can be extracted directly from the projection matrix, which is what I did for this project. The camera center that my code produces with the given keypoints and the projection matrix above is shown below:
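The extraction works because the camera center C is the one world point that M sends to the zero vector, M [C; 1] = 0. Writing M = [Q | m4], with Q the left 3x3 block and m4 the last column, gives Q C + m4 = 0, so C = -Q^(-1) m4. A small sketch, with a hypothetical function name:

```python
import numpy as np

def camera_center(M):
    """World-space camera center for M = [Q | m4], i.e. C = -Q^{-1} m4."""
    Q = M[:, :3]
    m4 = M[:, 3]
    # Solve Q C = -m4 instead of forming the inverse explicitly
    return np.linalg.solve(Q, -m4)
```

Scaling M by any nonzero constant leaves C unchanged, so this works directly on the up-to-scale matrix returned by an SVD solve.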

Part 2: Fundamental Matrix Estimation

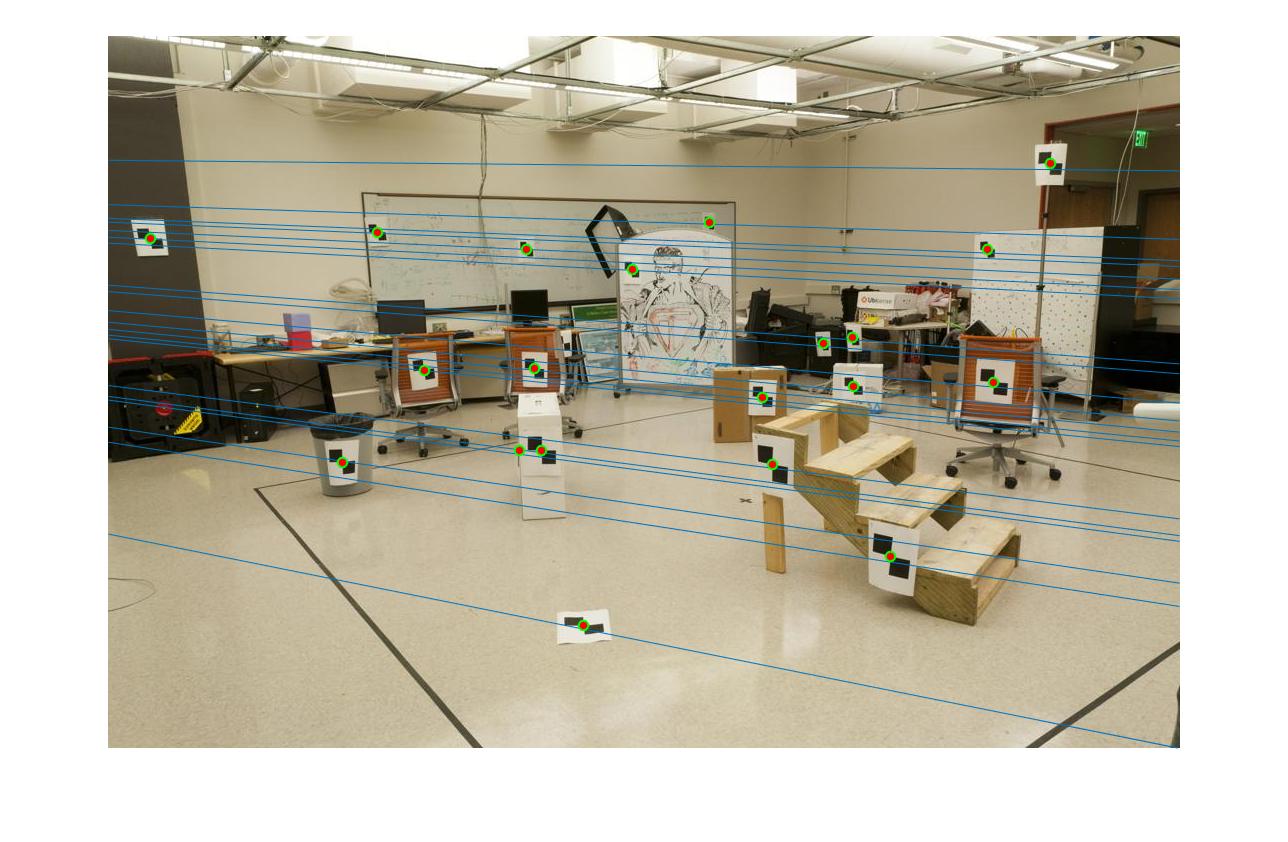

Part 2 of the project is to estimate the fundamental matrix relating two images. This is similar to part 1 in that we solve a system of equations built from point correspondences, but instead of matching 2D points to 3D points for a single image, we match 2D points across two different images. With perfect correspondences, we get a very good estimate of the epipolar lines, which capture the relative rotation and translation between the two camera views. For the given images and keypoints, here is the fundamental matrix that my code computes:
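As a sketch of the system described above: every match (x_a in the first image, x_b in the second, both homogeneous) must satisfy the epipolar constraint x_b^T F x_a = 0, which is one linear equation in the nine entries of F. With at least 8 matches, F (up to scale) is again the smallest right singular vector, and a true fundamental matrix has rank 2, so the smallest singular value of the estimate is then set to zero. This is the unnormalized eight-point algorithm; the argument order (image A on the right of F) and the rank-2 step are assumptions, not details stated in the writeup.

```python
import numpy as np

def estimate_fundamental_matrix(pts_a, pts_b):
    """Unnormalized 8-point estimate of F with [x_b, y_b, 1] F [x_a, y_a, 1]^T = 0."""
    xa, ya = pts_a[:, 0], pts_a[:, 1]
    xb, yb = pts_b[:, 0], pts_b[:, 1]
    # Each row expands x_b^T F x_a = 0 over the row-major entries of F
    A = np.column_stack([xb * xa, xb * ya, xb,
                         yb * xa, yb * ya, yb,
                         xa, ya, np.ones_like(xa)])
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)
    # Enforce rank 2 by zeroing the smallest singular value
    U, S, Vt = np.linalg.svd(F)
    S[2] = 0.0
    return U @ np.diag(S) @ Vt
```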

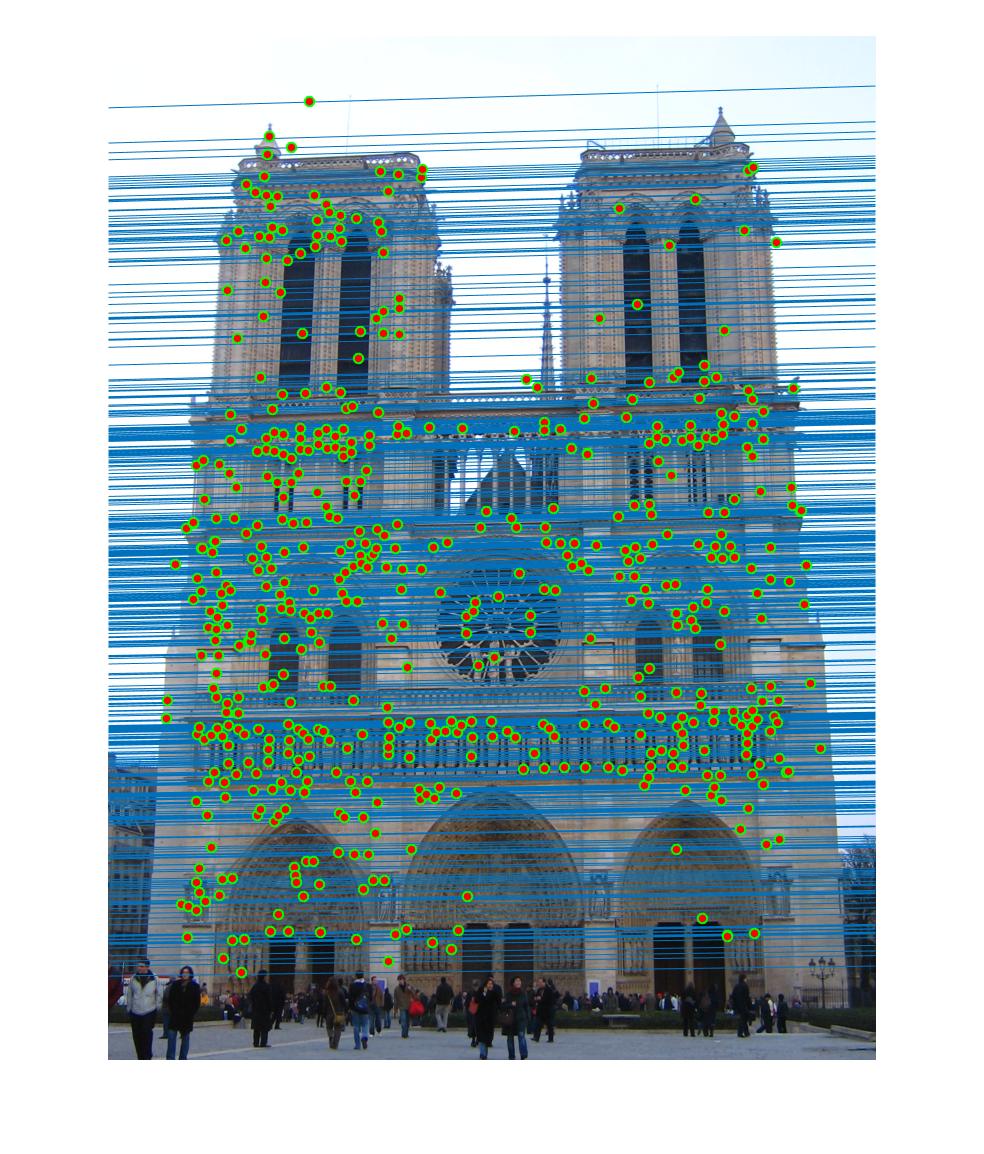

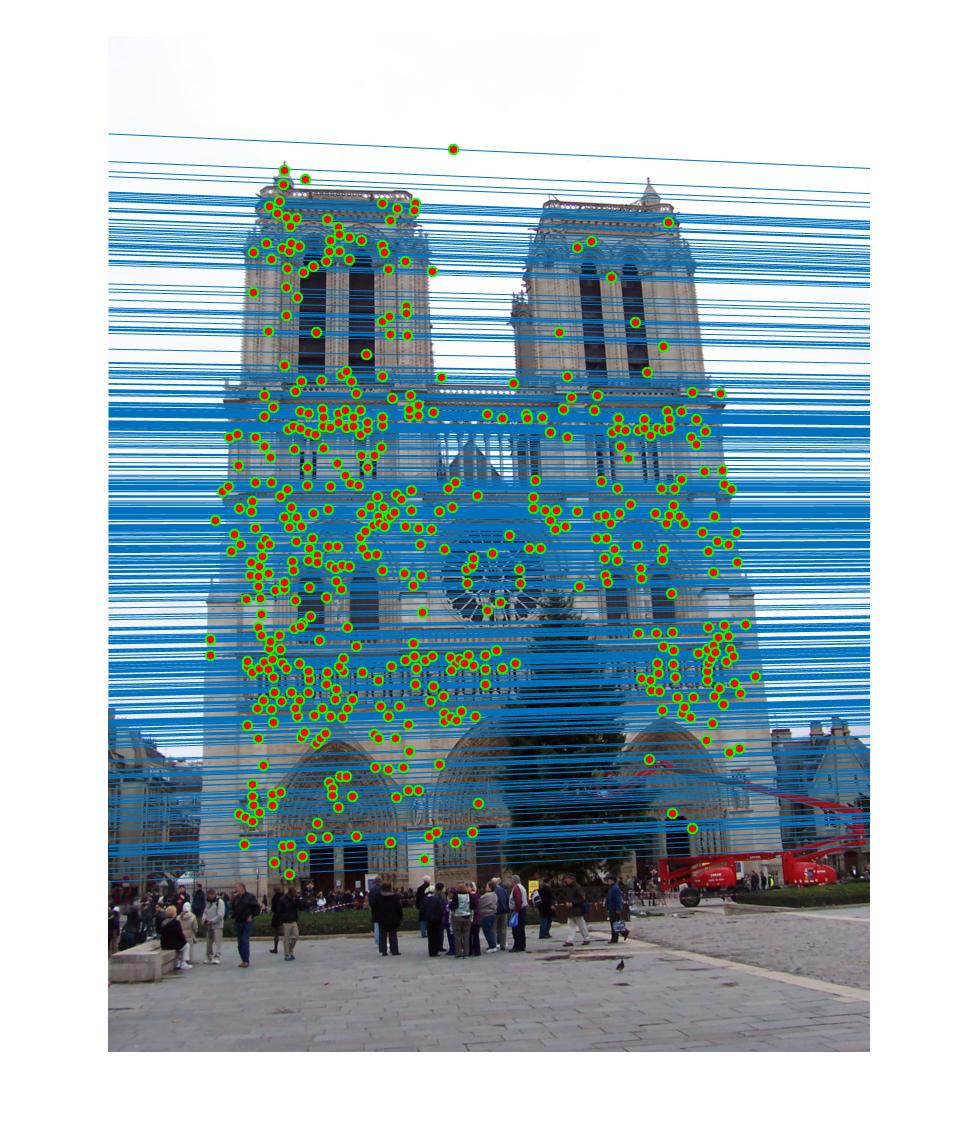

Here are the images with the epipolar lines drawn. In some cases my lines fall slightly above or below the actual points, but overall they line up well and match the provided sample fairly closely.
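The "slightly above or below" offset can be measured directly. Under the x_b^T F x_a = 0 convention, a point x_a in the first image maps to the line l_b = F x_a = (a, b, c) in the second image, meaning a x + b y + c = 0. After scaling so that a^2 + b^2 = 1, |l_b . x_b| is the perpendicular pixel distance from the matched point to its line. The helper names below are hypothetical.

```python
import numpy as np

def epipolar_lines(F, pts_a):
    """Lines l_b = F x_a in the second image, scaled so (a, b) has unit norm."""
    ha = np.column_stack([pts_a, np.ones(len(pts_a))])
    lines = ha @ F.T  # row i is F @ [x_a, y_a, 1]
    return lines / np.linalg.norm(lines[:, :2], axis=1, keepdims=True)

def point_line_distance(lines, pts_b):
    """Perpendicular distance from each second-image point to its epipolar line."""
    hb = np.column_stack([pts_b, np.ones(len(pts_b))])
    return np.abs(np.sum(lines * hb, axis=1))
```

To draw a line when b is nonzero, evaluate y = -(a x + c) / b at the left and right image borders and connect the two endpoints.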


Part 3: Fundamental Matrix with RANSAC

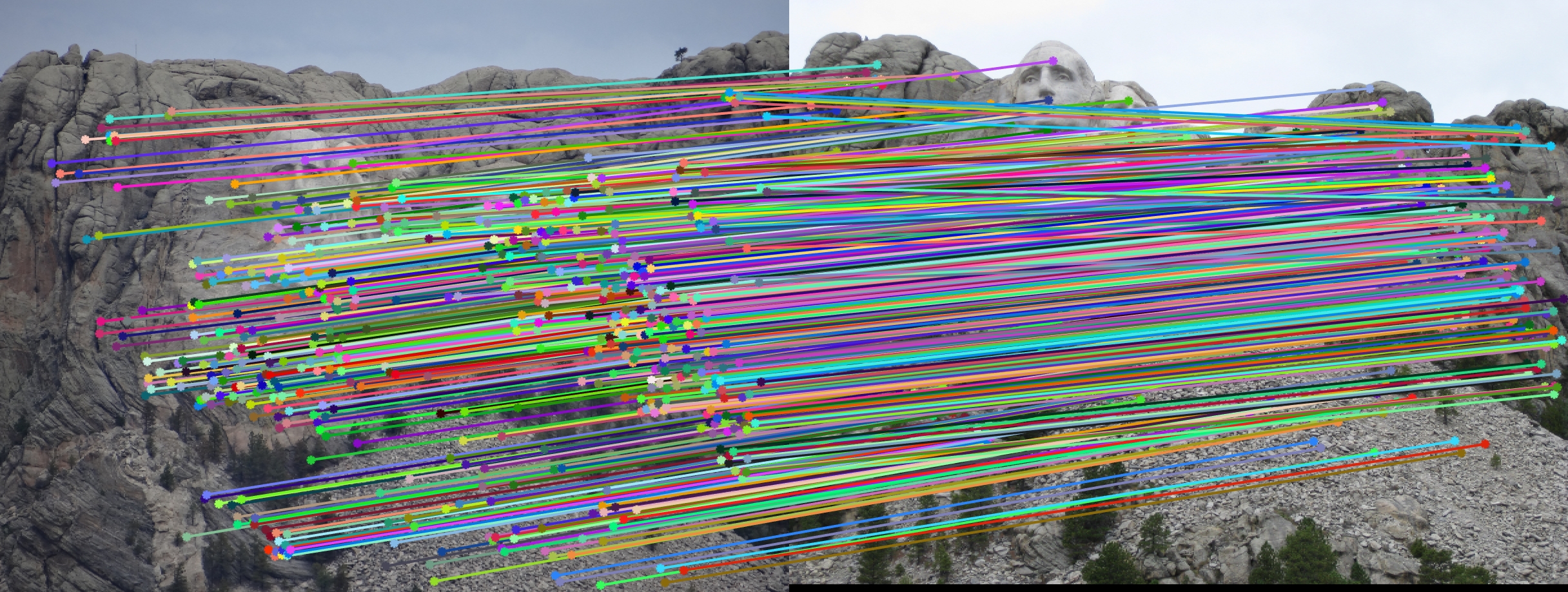

The third and last part of the project brings the idea of matching points full circle. In a real-world situation you are not guaranteed perfect point correspondences (what would be the point of finding matches if you already knew them?), so you can't build a fundamental matrix from all of the proposed pairs and assume it will be a good estimate. Outliers are likely, so we need something in place to prevent them from corrupting the fundamental matrix.

The way around this is to take a random sample of the proposed point pairs and use it to construct a fundamental matrix. We then count how many of the proposed pairs are consistent with this new matrix, meaning they satisfy the epipolar constraint to within a cutoff. We repeat this many times, since some samples will contain outliers, and keep track of the matrix with the most "inliers". In the end we settle on the matrix with the most inliers and treat those matches as the good ones, since the rest are likely outliers. This algorithm is called RANSAC (Random Sample Consensus). After some trial and error, I chose 1000 iterations and an inlier cutoff of 0.05 to achieve my results.
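The loop described above can be sketched as follows. Assumptions to note: each sample has 8 matches (the number the linear eight-point solver needs), and a match's inlier score is the algebraic error |x_b^T F x_a|. The defaults mirror the 1000 iterations and 0.05 cutoff from the text, but what a cutoff of 0.05 means depends on the error metric and the coordinate scale, neither of which the writeup pins down. The function names and the `seed` parameter are hypothetical.

```python
import numpy as np

def fit_fundamental(pts_a, pts_b):
    """Unnormalized 8-point estimate of F with x_b^T F x_a = 0, rank 2 enforced."""
    xa, ya = pts_a[:, 0], pts_a[:, 1]
    xb, yb = pts_b[:, 0], pts_b[:, 1]
    A = np.column_stack([xb * xa, xb * ya, xb, yb * xa, yb * ya, yb,
                         xa, ya, np.ones_like(xa)])
    _, _, Vt = np.linalg.svd(A)
    U, S, Vt = np.linalg.svd(Vt[-1].reshape(3, 3))
    S[2] = 0.0
    return U @ np.diag(S) @ Vt

def ransac_fundamental(pts_a, pts_b, num_iters=1000, threshold=0.05, seed=0):
    """Return the F with the most inliers and a boolean inlier mask over all matches."""
    rng = np.random.default_rng(seed)
    n = len(pts_a)
    ha = np.column_stack([pts_a, np.ones(n)])
    hb = np.column_stack([pts_b, np.ones(n)])
    best_F, best_mask = None, np.zeros(n, dtype=bool)
    for _ in range(num_iters):
        # Fit a candidate F to 8 randomly chosen proposed matches
        sample = rng.choice(n, size=8, replace=False)
        F = fit_fundamental(pts_a[sample], pts_b[sample])
        # Algebraic error |x_b^T F x_a| of every proposed match under this candidate
        errors = np.abs(np.sum(hb * (ha @ F.T), axis=1))
        mask = errors < threshold
        # Keep the candidate with the largest consensus set
        if mask.sum() > best_mask.sum():
            best_F, best_mask = F, mask
    return best_F, best_mask
```

A common refinement, not described in the writeup, is to refit F on all inliers of the best candidate once the loop finishes.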

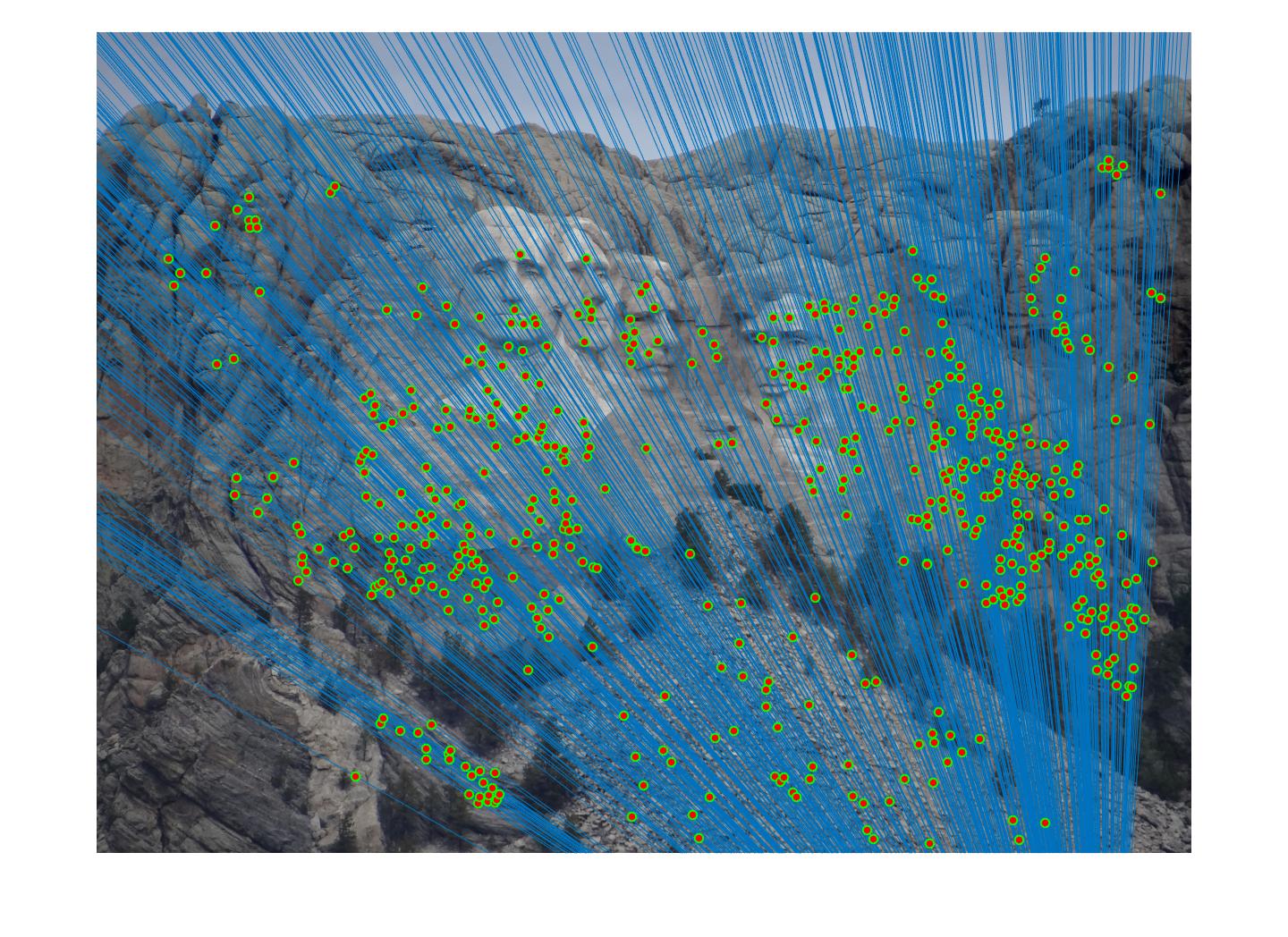

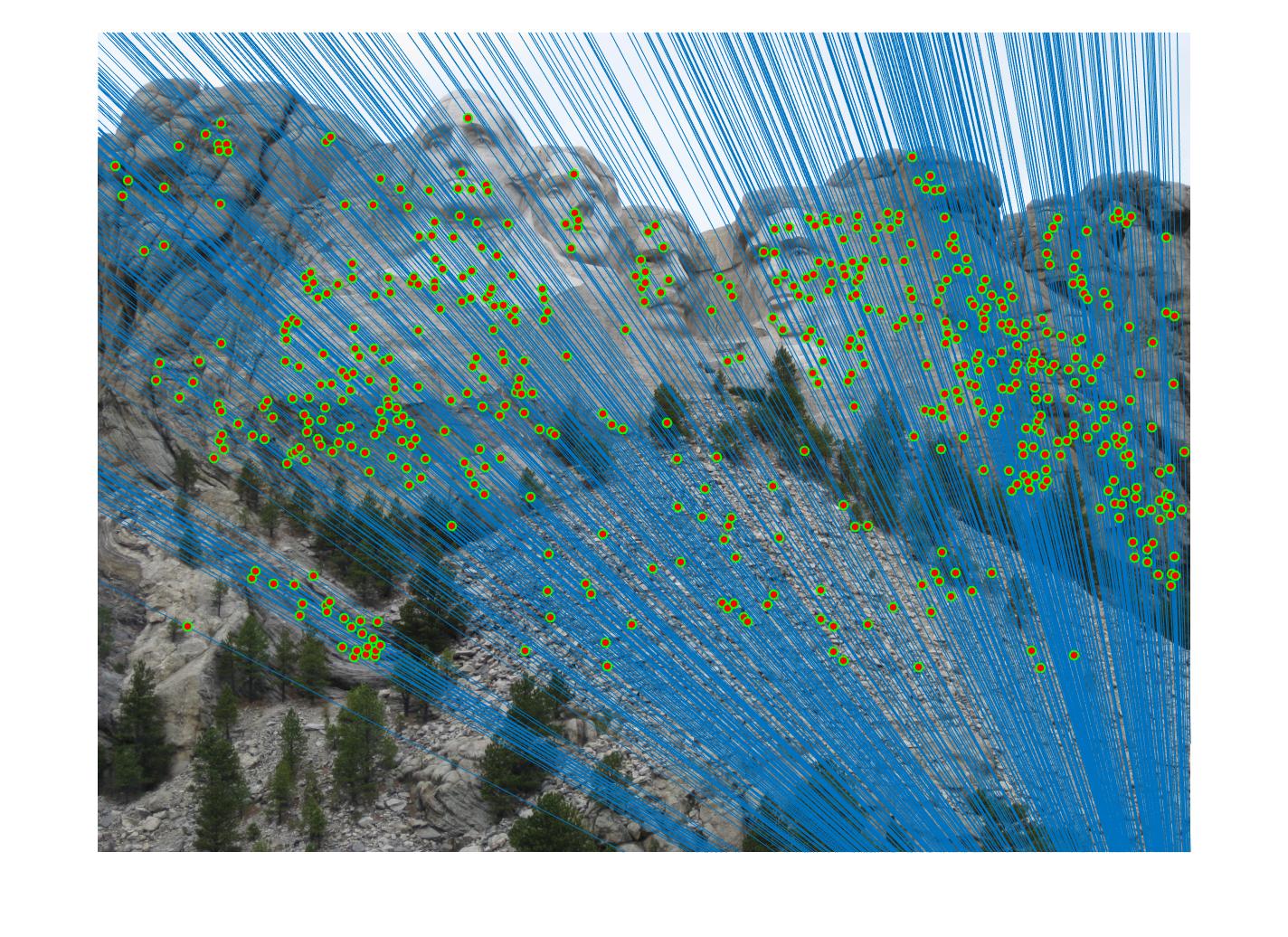

The first image set I ran RANSAC on was the pair of Mount Rushmore images. The results show far fewer matches than the original VLFeat SIFT output, and the remaining matches look accurate. The epipolar lines also pass through many of the matched points.


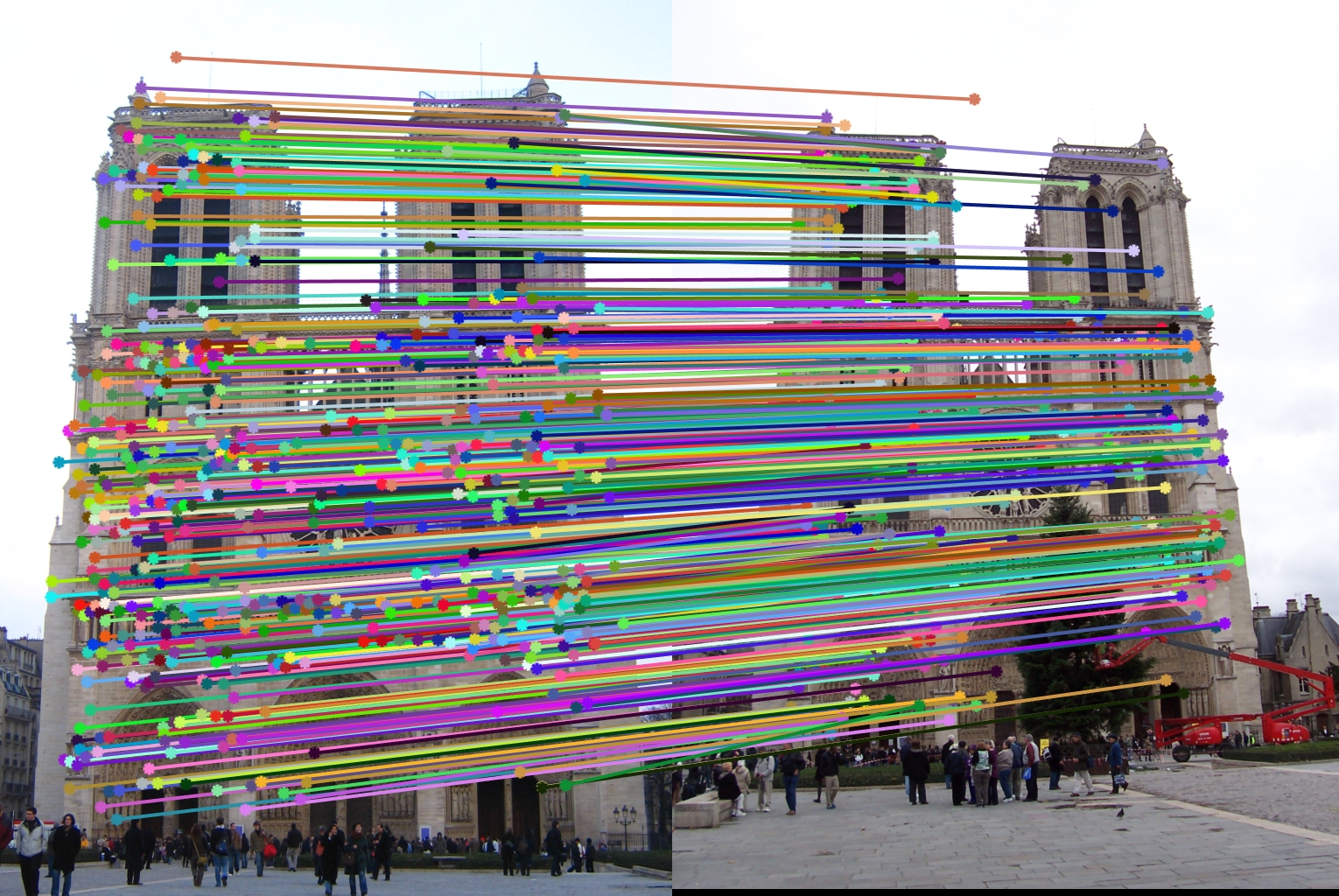

The second image set was the pair of Notre Dame images, and this also produced a strong result. Some outliers still made it through (such as the point in the sky), and some matches are imperfect because the architecture is repetitive and tightly spaced in places, but this is still a much better estimate than the one VLFeat SIFT gave by itself.


I also ran my algorithm on the Gaudi pair and the Woodruff pair, but the results were not very strong. In most runs the epipolar lines ended up in a sunburst-like pattern, which is clearly incorrect, and many obvious mismatches remained. These pairs may need a lower cutoff value, more iterations, or coordinate normalization to get better results.
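The normalization mentioned above usually refers to Hartley's scheme: translate each image's points so their centroid is at the origin, scale them so the mean distance from the origin is sqrt(2), estimate F_hat in those coordinates, then undo the transforms with F = T_b^T F_hat T_a. This keeps pixel-sized values (hundreds) from swamping the ones column in the linear system. The sketch below is an assumption about how it could be added, with hypothetical names; it has not been checked against the Gaudi or Woodruff pairs.

```python
import numpy as np

def normalization_transform(pts):
    """3x3 similarity moving pts to zero mean and mean distance sqrt(2) from the origin."""
    centroid = pts.mean(axis=0)
    s = np.sqrt(2) / np.mean(np.linalg.norm(pts - centroid, axis=1))
    return np.array([[s, 0.0, -s * centroid[0]],
                     [0.0, s, -s * centroid[1]],
                     [0.0, 0.0, 1.0]])

def estimate_fundamental_normalized(pts_a, pts_b):
    """Normalized 8-point estimate of F with x_b^T F x_a = 0 in original pixel coordinates."""
    Ta = normalization_transform(pts_a)
    Tb = normalization_transform(pts_b)
    ha = np.column_stack([pts_a, np.ones(len(pts_a))]) @ Ta.T
    hb = np.column_stack([pts_b, np.ones(len(pts_b))]) @ Tb.T
    xa, ya = ha[:, 0], ha[:, 1]
    xb, yb = hb[:, 0], hb[:, 1]
    # Same linear system as the unnormalized version, built from normalized points
    A = np.column_stack([xb * xa, xb * ya, xb, yb * xa, yb * ya, yb,
                         xa, ya, np.ones_like(xa)])
    _, _, Vt = np.linalg.svd(A)
    U, S, Vt = np.linalg.svd(Vt[-1].reshape(3, 3))
    S[2] = 0.0  # enforce rank 2 on the normalized estimate
    F_hat = U @ np.diag(S) @ Vt
    # (Tb x_b)^T F_hat (Ta x_a) = x_b^T (Tb^T F_hat Ta) x_a
    F = Tb.T @ F_hat @ Ta
    return F / np.linalg.norm(F)
```

The same normalization can be applied inside the RANSAC loop, although the inlier cutoff would then need to be re-tuned for the new scale.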