Project 3 / Camera Calibration and Fundamental Matrix Estimation with RANSAC

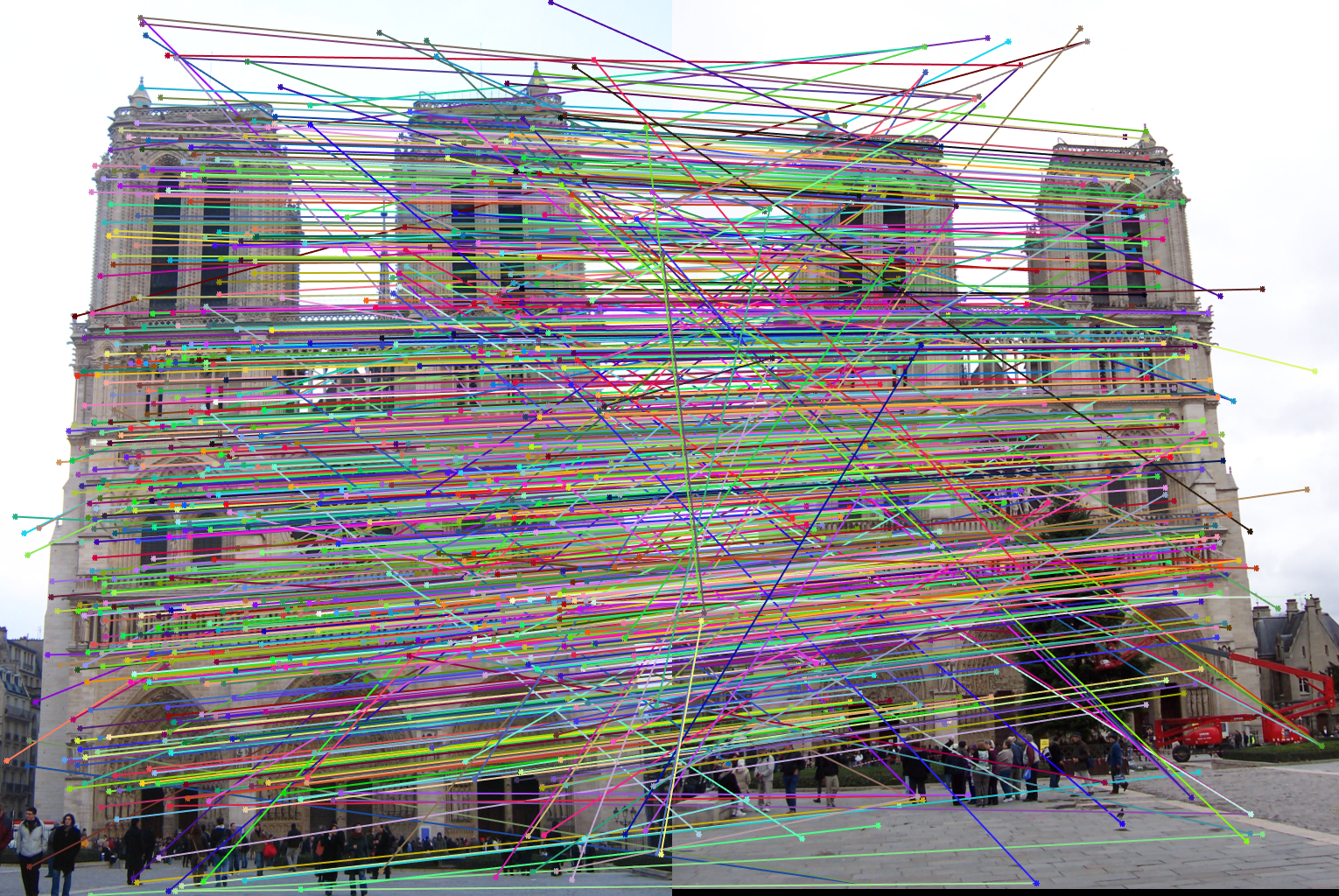

Features can be difficult to match without global context

Introduction

In Project 2, we found that it is difficult to match features in an image based solely on local features. Images have a lot of global information, and it would be valuable to be able to use this global data to better help us match features. For example, the position of features relative to one another could be a helpful tool. Similarily, ensuring that features are matched in a consistent direction could also be helpful. In this project, we focus on the latter. From two images, we build an estimation of the Fundamental Matrix, which relates the perspective of one image to that of the other. We can then ensure that our matched features are consistent with the model we made. This project was split into 3 parts to build up to this overall goal.

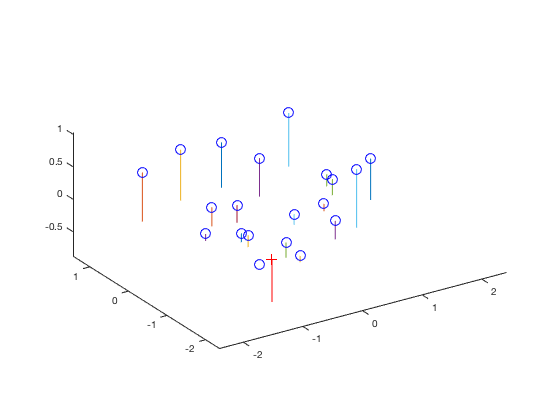

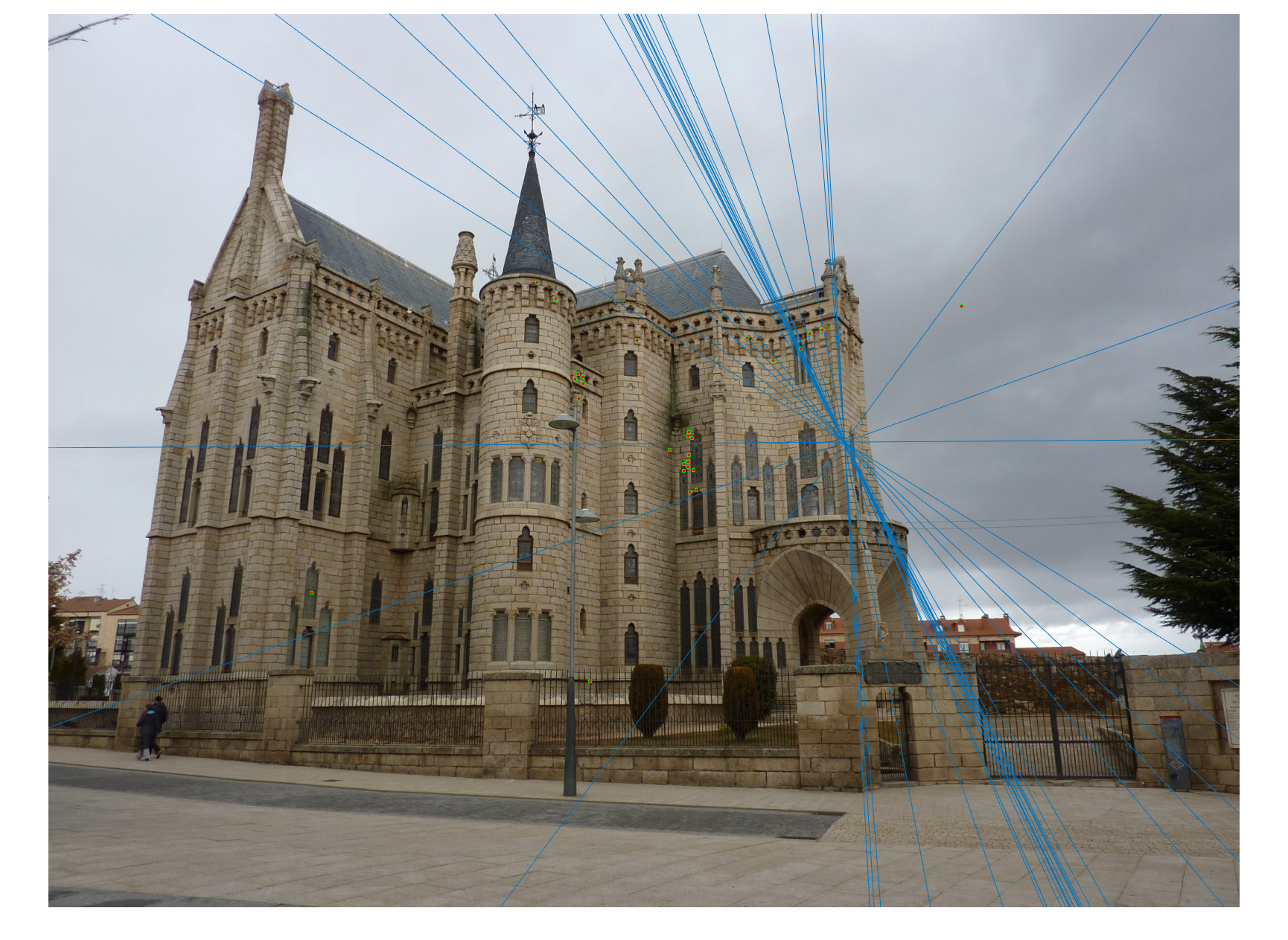

Results from Part 1

Part 1

The first part of the project involved calculating the projection matrix for a given camera. This matrix relates coordinates in the 3D world to points on the 2D image plane. Given a set of 3D and 2D points, we can set up a linear regression to solve for this matrix. However, there are many scaled solutions to this matrix, so we solve for the minimum by using Singular Value Decomposition. We can use the singular value decomposition to solve the linear regression in a way that minimizes the solution. Once we have the regression and reshape into the matrix M, we can break this matrix down into the intrinsic and extrinsic parameter matrices. In this project, we extracted the camera center parameter from our matrix M and used this to visualize where the camera is located relative to the provided points.

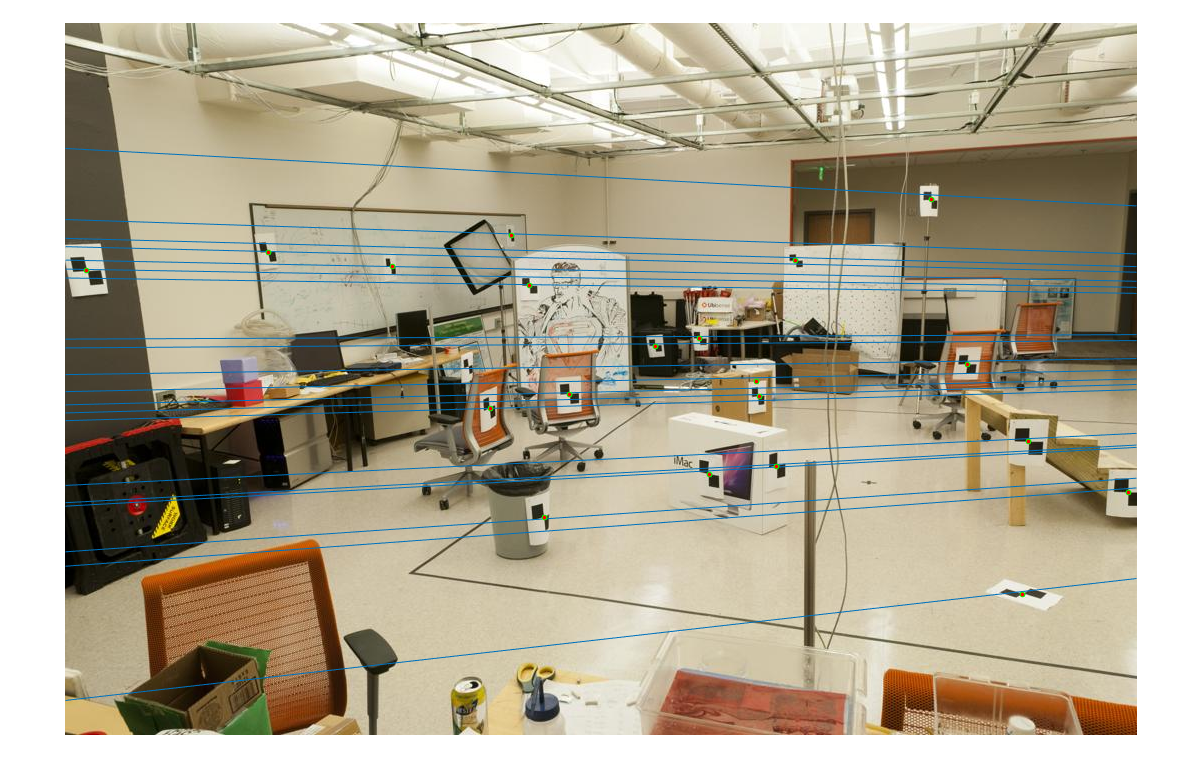

Part 2

In this part of the project we created a function to estimate the fundamental matrix. Given two images of the same subject, our goal is to quantify the relationship between the perspective of these two images. The important property of the fundamental matrix is that when a homogenized coordinate from one image is multiplied by the matrix and then multiplied by the corresponding point in the other image, the result is 0. This property will be important in the last part of the project, when we need to estimate how correct our fundamental matrix is.

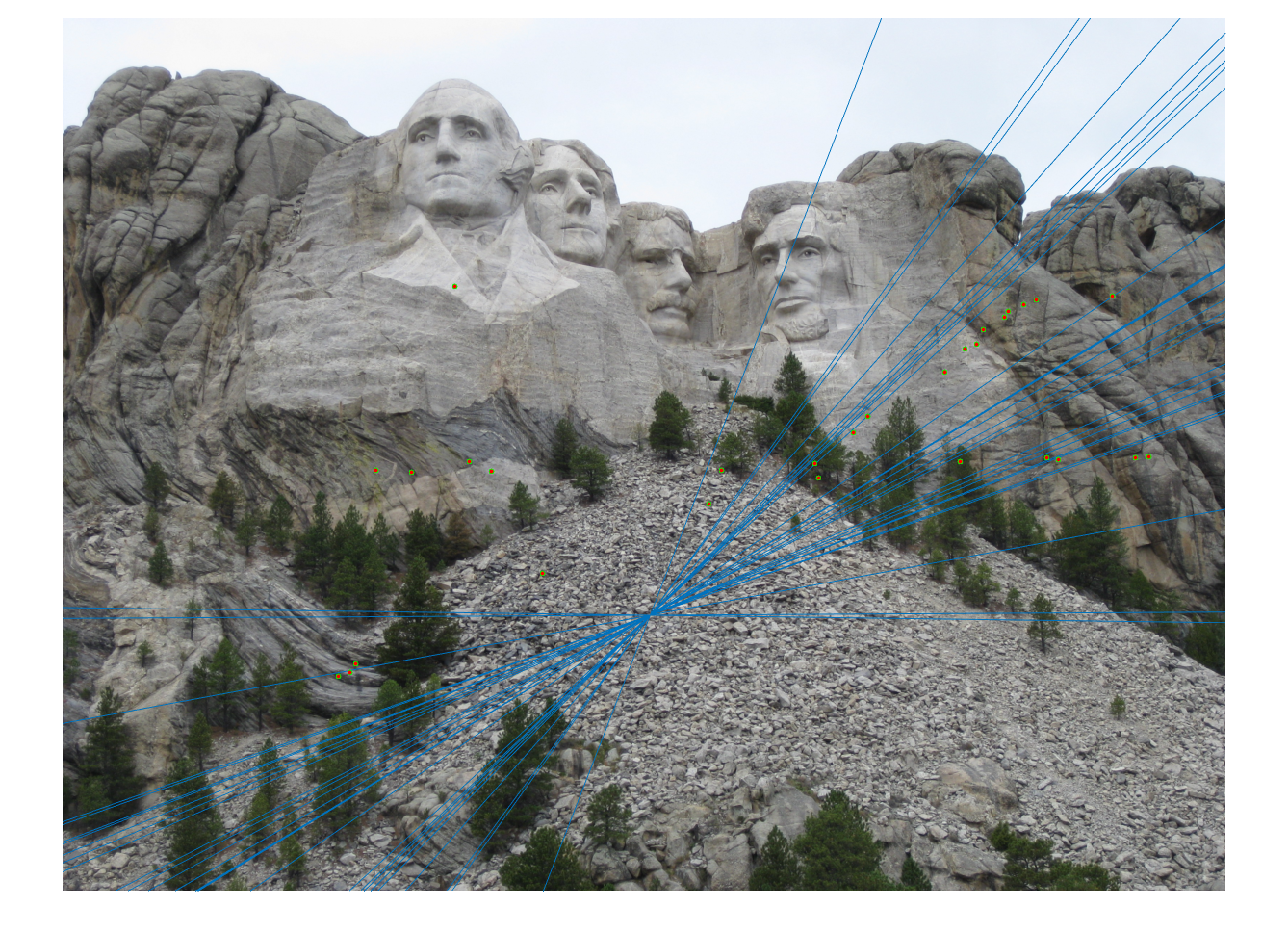

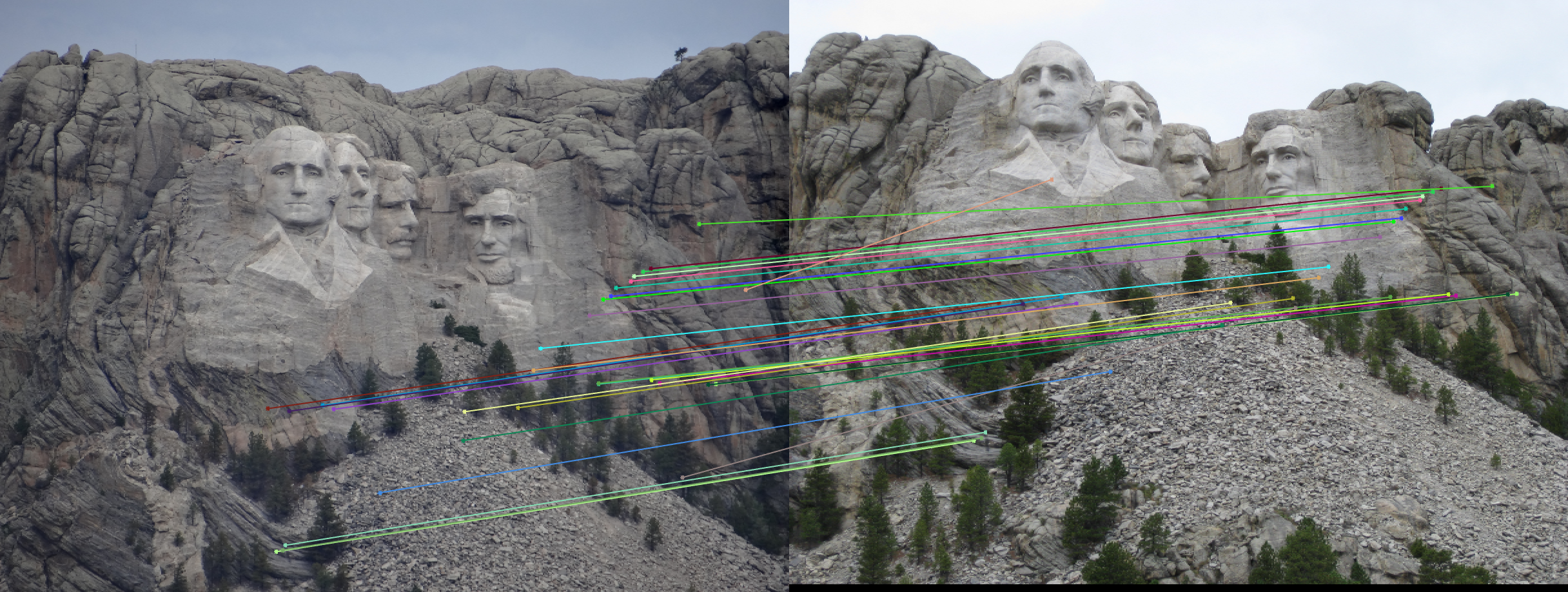

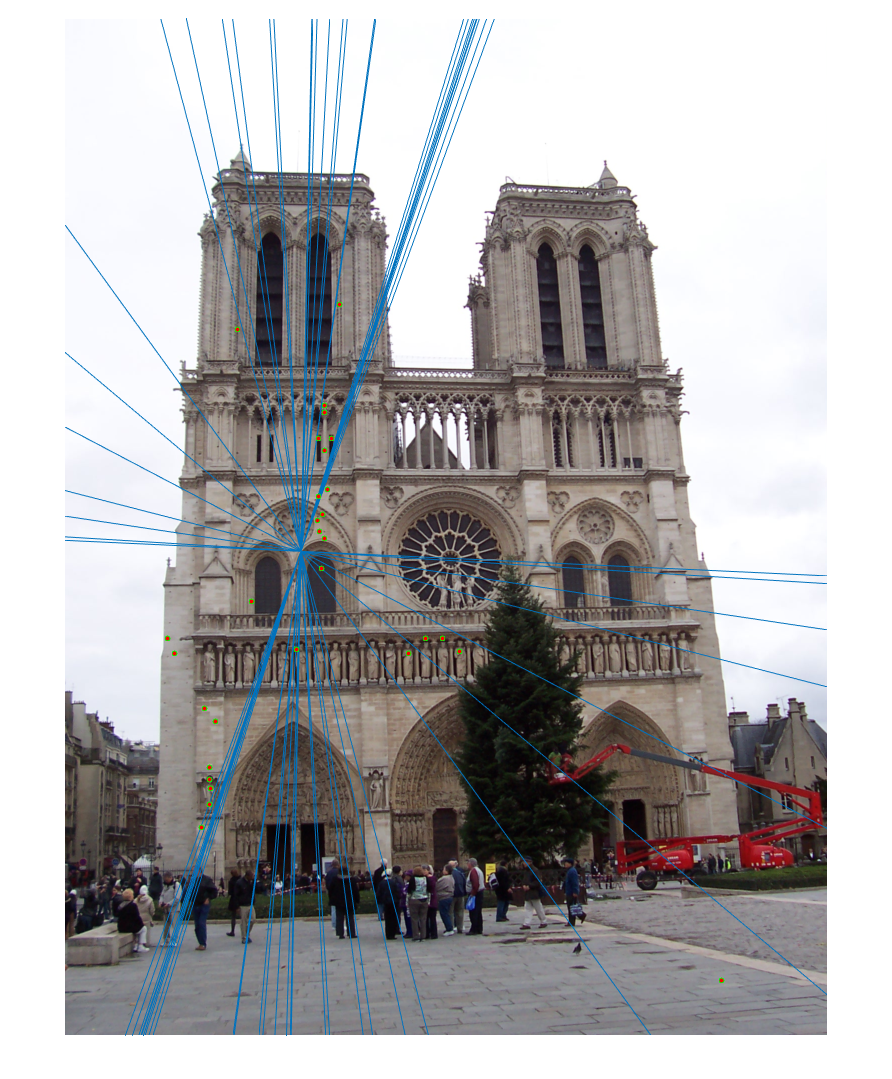

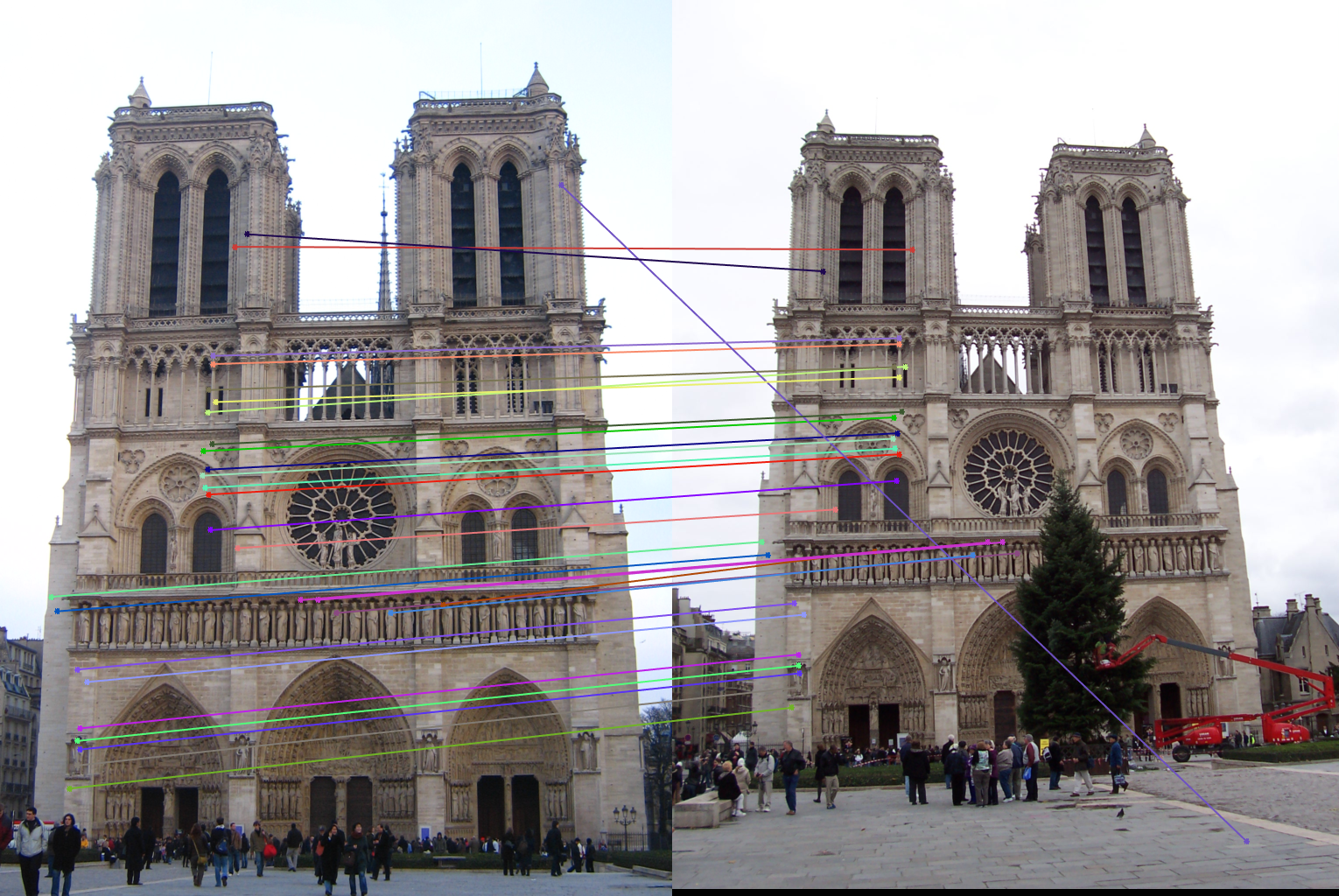

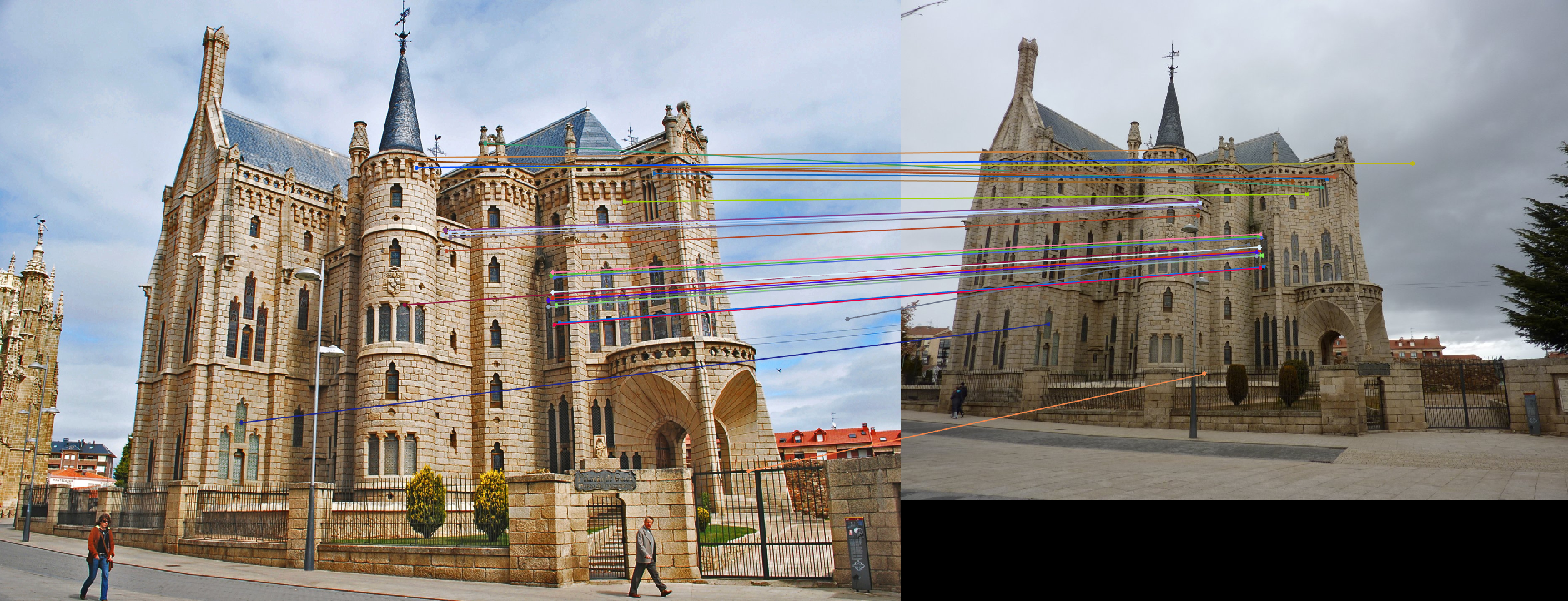

Epipolar results from Part 2

Once we have a minimum of 8 point correspondences between the images, we have enough information to solve for the fundamental matrix. We again use the Singular Value Decomposition to find the solution to the series of points we have. Using SVD ensures that we do not get the degenerate case as our solution.

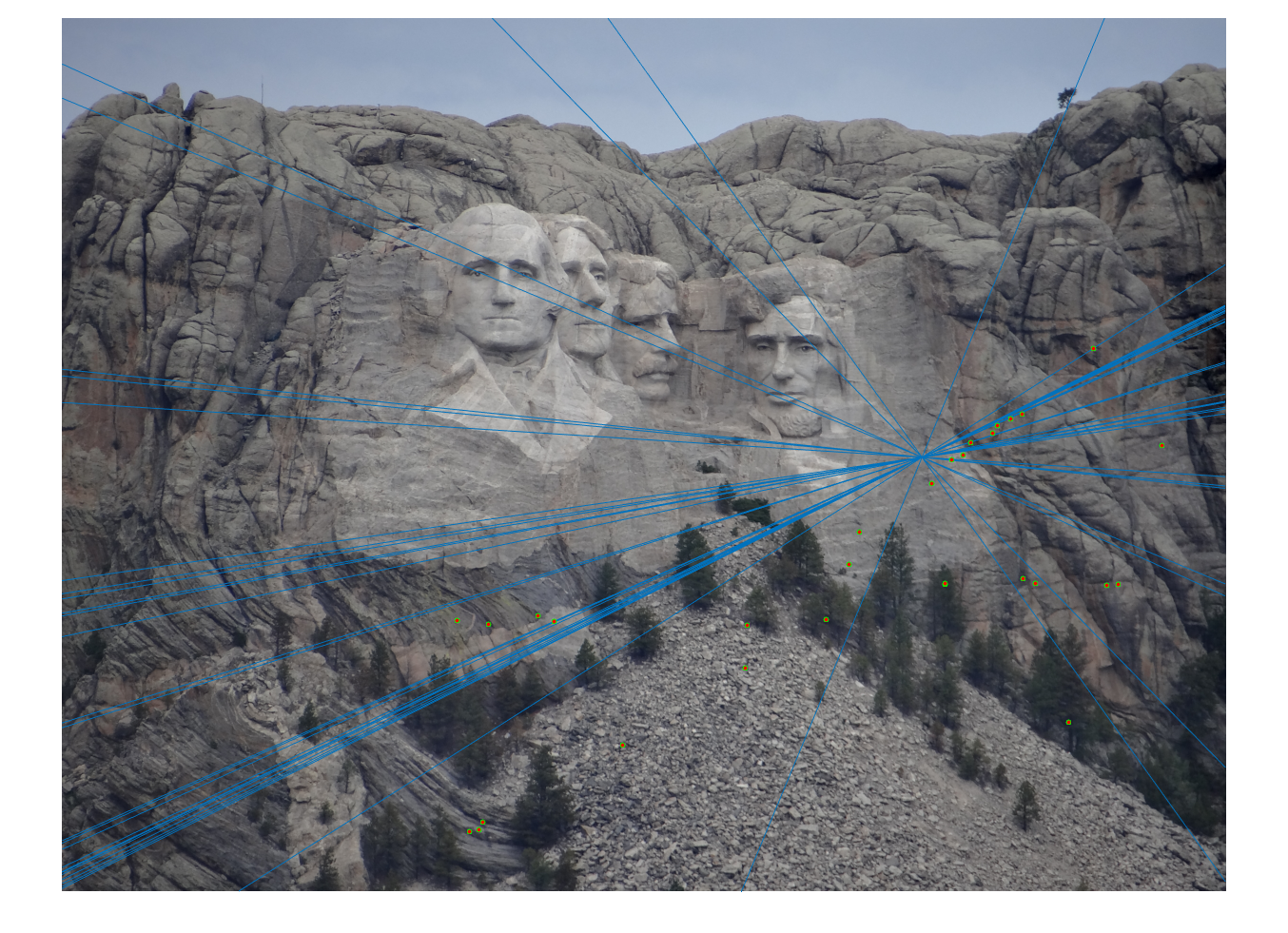

Part 3

In part 3, we brought together all of the elements of this project to solve a practical problem. Once we extract a series of SIFT matches from two images, we can attempt to improve our correct match rate by finding the fundamental matrix relationship between the two images. The algorithm we use to do this is called RANSAC. First, we randomly sample the number of points we need to solve for the fundamental matrix: 8 feature pairs. We then find the fundamental matrix associated with these 8 points, and assign it a score based on how well all other match pairs align with this hypothesis. We repeat these steps, each time selecting another 8 random features, until we are confident that we have a good fundamental matrix for as many features as possible. We then only accept the 30 top matches that align with this "best" fundamental matrix. This approach ensures that the SIFT matches that we select are all consistent with one another. When we do this, we can achieve a higher rate of correct matches as shown in the following pictures.

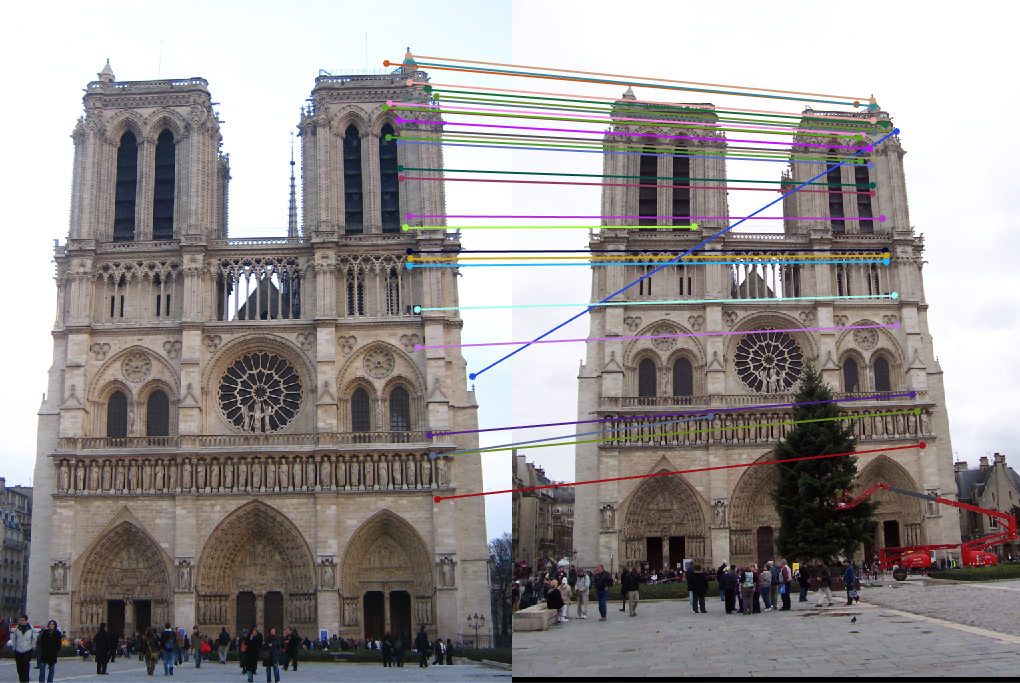

Rushmore results, with p=0.6

One important free parameter here is the number of RANSAC iterations. This is based on a simple formula that includes one important parameter, the probablity that a given point is an outlier, or "p". If this parameter is set to a high value, such as 0.8, the algorithm will run for a significantly greater number of iterations. However, the advantage you gain is a higher likelihood that the final result fundamental matrix is the best solution. Throughout this project I adjusted this parameter to find a good tradeoff between speed and quality, and found that often any value between 0.2 and 0.6 was sufficient.

Notre Dame results, with p=0.4

Another important parameter is the threshold used to categorize outliers vs inliers. We use the above mentioned property of the fundamental matrix to check how well the guessed fundamental matrix relates two points. The closer we get to 0, the better the fundamental matrix is for that pair. I found that setting this threshold to 0.02 provided me with about 200 matches. This number seemed large enough that the 30 selected best points would be the best possible, yet not too large that computation time increased or all fundamental matrices had a large score.

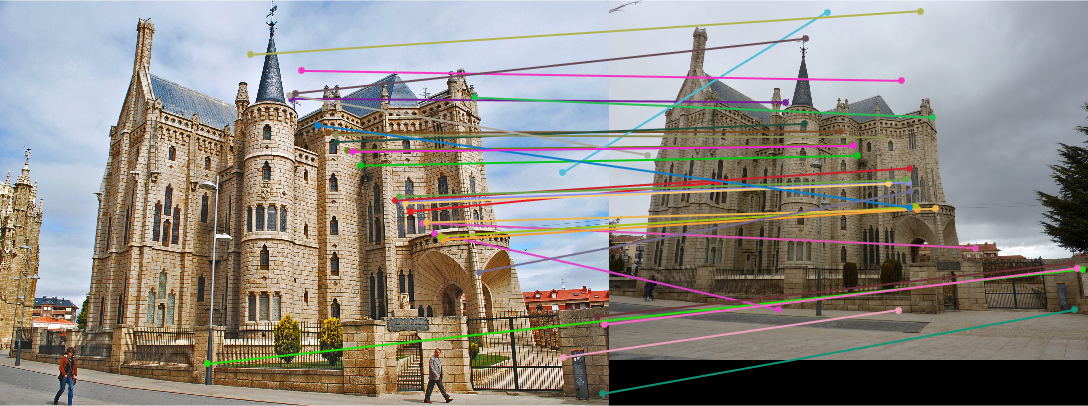

Gaudi results without normalization, p=0.4

Extra Credit

I attempted to improve my results further by implementing the coordinate normalization process. Within my estimate_fundamental_matrix method, I normalized the coordinates before calculating the matrix. First, I calculated the mean coordinate for each set of points (image 1 and image 2). After subtracting the mean from each point in the image sets, I calculated the standard deviation of the coordinates. I calculated the scale of the points as the reciprocal of this standard deviation. I multiplied each coordinate point by this scale to normalize the coordinates. I calculated the fundamental matrix as usual, but did not immediately return this normalized fundamental matrix. Instead, I obtained a matrix up to scale by multiplying the tranpose of the transform matrix of image 2 by the normalized fundamental matrix multiplied by the transform matrix of the first image. I then returned this new calculated fundamental matrix.

Using this method, I observed a noticeable improvement in the success rate of my matches. This is shown in the following images.

Image results with coordinate normalization.

Limitations and Improvements

Even after implementing the coordinate normalization process, I still did not receive optimum matching on the Gaudi image pair. Some of the "best" matches were placed in not well defined areas like the sky above the building or other low-detail areas. This is most likely an issue with coordinate normalization process, because I did not receive this issue beforehand. However, considering that my accuracy improved with the normalization, I decided to keep it implemented.