The objective of this project was to improve upon image matching by leveraging the epipolar geometry of a stereo image pair while applying RANSAC to reject poorly matched feature points. After performing a simple camera calibration, SIFT feature points detected in an image pair were used to estimate the Fundamental Matrix; RANSAC then used the estimated Fundamental Matrix to keep only the matches consistent with it. The following sections describe the approach and highlight the results of the project.

Camera calibration consisted of two main tasks: determining the projection matrix and determining the camera center. The task was formulated as a system of linear equations, and two distinct methods were applied.

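Determining the Projection Matrix The projection matrix can be written as a transformation from homogeneous world coordinates to homogeneous image coordinates of the form:

$$
\begin{bmatrix} su \\ sv \\ s \end{bmatrix} =
\begin{bmatrix}
m_{11} & m_{12} & m_{13} & m_{14} \\
m_{21} & m_{22} & m_{23} & m_{24} \\
m_{31} & m_{32} & m_{33} & m_{34}
\end{bmatrix}
\begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}
\tag{1}
$$

which can be rewritten, after eliminating the scale factor $s$, in the form

$$
\begin{aligned}
m_{11}X + m_{12}Y + m_{13}Z + m_{14} - u\,(m_{31}X + m_{32}Y + m_{33}Z + m_{34}) &= 0, \\
m_{21}X + m_{22}Y + m_{23}Z + m_{24} - v\,(m_{31}X + m_{32}Y + m_{33}Z + m_{34}) &= 0,
\end{aligned}
$$

so that each 2D–3D correspondence contributes two linear equations in the entries of the projection matrix.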

Determining the Camera Center The projection from world to image coordinates is typically written as:

$$
\mathbf{x} = K\,[R \mid \mathbf{t}]\,\mathbf{X}. \tag{2}
$$

With $Q \in \mathbb{R}^{3\times 3}$ and $\mathbf{m} \in \mathbb{R}^{3\times 1}$, decomposing the projection matrix $M = [Q \mid \mathbf{m}]$ allows the camera center $C$ to be calculated according to:

$$
C = -Q^{-1}\mathbf{m}.
$$

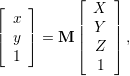

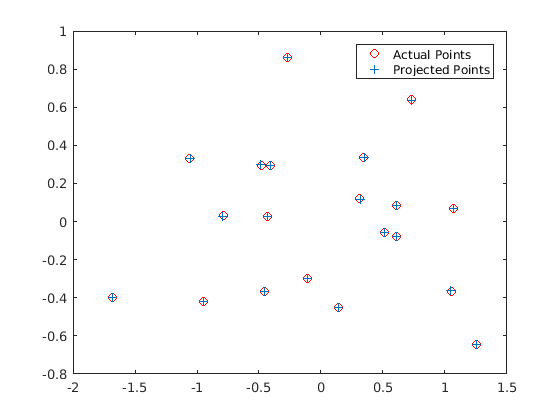

For the calibration scenario, the camera location was determined to be C = [-1.5126,-2.3517,0.2827], which agrees with the ground truth camera location. Figure 2 shows the camera location relative to the observed points.
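The calibration steps can be illustrated with a minimal sketch. It is not the project's code: it assumes the homogeneous linear system is solved with an SVD (only one of several possible solution methods) and that the camera center follows from the standard relation C = -Q⁻¹m for M = [Q | m]. The function names `estimate_projection_matrix` and `camera_center` are hypothetical.

```python
import numpy as np


def estimate_projection_matrix(points_2d, points_3d):
    """Estimate a 3x4 projection matrix M (up to scale) from N >= 6 correspondences.

    Assumption: the homogeneous system A m = 0 is solved with an SVD.
    """
    rows = []
    for (u, v), (X, Y, Z) in zip(points_2d, points_3d):
        # Each correspondence contributes two linear equations in the 12 entries of M.
        rows.append([X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z, -u])
        rows.append([0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z, -v])
    A = np.asarray(rows, dtype=float)
    # The right singular vector of the smallest singular value minimizes
    # ||A m|| subject to ||m|| = 1.
    _, _, vt = np.linalg.svd(A)
    return vt[-1].reshape(3, 4)


def camera_center(M):
    """Return C = -Q^{-1} m for M = [Q | m]; the result does not depend on the scale of M."""
    Q, m = M[:, :3], M[:, 3]
    return -np.linalg.solve(Q, m)
```

Because the camera center is invariant to the overall scale of M, the arbitrary scale and sign returned by the SVD do not affect the result.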

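As with determining the projection matrix, the Fundamental Matrix was determined by formulating a system of linear equations. For homogeneous coordinates $\mathbf{x}$ and $\mathbf{x}'$ of corresponding points in the first and second image, the Fundamental Matrix $F$ obeys the relationship:

$$
\mathbf{x}'^{\top} F\,\mathbf{x} = 0.
$$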

Normalization The estimation of the Fundamental Matrix was improved by performing the estimation on normalized coordinates. A transformation matrix for each image in the stereo image pair was determined by first subtracting the centroid of that image's feature points from their coordinates. The centered coordinates were then divided by the maximum magnitude of the coordinate pairs, so that every point lies within unit distance of the origin. After performing the Fundamental Matrix estimation on the normalized coordinates as described above, the transformation matrices of the image pair were used to transform the Fundamental Matrix back into the original coordinate frame according to:

$$
F = T'^{\top}\,\hat{F}\,T,
$$

where $\hat{F}$ is the estimate in normalized coordinates and $T$ and $T'$ are the transformation matrices of the first and second image, using the convention $\mathbf{x}'^{\top} F\,\mathbf{x} = 0$ with $\mathbf{x}$ in the first image.
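The normalization and estimation steps can be sketched as follows. This is an illustrative version under stated assumptions, not the project's implementation: the linear system is solved with an SVD, and a rank-2 constraint is enforced on the estimate, a common step that the text does not mention. The names `normalization_transform` and `estimate_fundamental_matrix` are hypothetical; `points_a` and `points_b` hold matched pixel coordinates from the first and second image.

```python
import numpy as np


def normalization_transform(points):
    """3x3 transform that subtracts the centroid and divides by the maximum magnitude."""
    points = np.asarray(points, dtype=float)
    centroid = points.mean(axis=0)
    scale = 1.0 / np.linalg.norm(points - centroid, axis=1).max()
    return np.array([[scale, 0.0, -scale * centroid[0]],
                     [0.0, scale, -scale * centroid[1]],
                     [0.0, 0.0, 1.0]])


def estimate_fundamental_matrix(points_a, points_b):
    """Estimate F such that x_b^T F x_a = 0 from N >= 8 correspondences."""
    points_a = np.asarray(points_a, dtype=float)
    points_b = np.asarray(points_b, dtype=float)
    T_a = normalization_transform(points_a)
    T_b = normalization_transform(points_b)
    ones = np.ones((len(points_a), 1))
    xa = (T_a @ np.hstack([points_a, ones]).T).T  # normalized, homogeneous
    xb = (T_b @ np.hstack([points_b, ones]).T).T
    # One row per correspondence: A f = 0, with f the row-major entries of F.
    # The last block is xa itself because the third coordinate of xb is 1.
    A = np.hstack([xb[:, [0]] * xa, xb[:, [1]] * xa, xa])
    _, _, vt = np.linalg.svd(A)
    F_norm = vt[-1].reshape(3, 3)
    # Assumed extra step: enforce rank 2 by zeroing the smallest singular value.
    U, S, Vt = np.linalg.svd(F_norm)
    S[2] = 0.0
    F_norm = U @ np.diag(S) @ Vt
    # Transform the estimate back to the original pixel coordinates.
    return T_b.T @ F_norm @ T_a
```

The returned matrix is defined only up to scale, so it should be compared through the residual of the epipolar constraint rather than entry by entry.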

RANSAC was performed to reject incorrect matches between an image pair based on the Fundamental Matrix. In each iteration, eight potential matches were chosen at random to estimate the Fundamental Matrix. Using the relationship

$$
\mathbf{x}'^{\top} F\,\mathbf{x} \approx 0 \tag{3}
$$

for an imperfect Fundamental Matrix, where $\mathbf{x}$ and $\mathbf{x}'$ are the homogeneous coordinates of a candidate match in the first and second image, all possible matches were tested against the estimated Fundamental Matrix, and matches for which the magnitude of the left-hand side of (3) was less than a threshold value were added to a list of inliers. The sampling and estimation were repeated, and the Fundamental Matrix that produced the largest number of inliers was kept.
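The RANSAC loop described above can be sketched as follows. This is a minimal illustration, not the project's code: the iteration count, the threshold value, the fixed random seed, and the rescaling of each candidate matrix to unit Frobenius norm (so that one threshold is comparable across candidates) are all assumptions, and the names `fit_fundamental` and `ransac_fundamental` are hypothetical.

```python
import numpy as np


def _normalize(points):
    """Center the points and scale by the maximum magnitude; return homogeneous coords and T."""
    centroid = points.mean(axis=0)
    scale = 1.0 / np.linalg.norm(points - centroid, axis=1).max()
    T = np.array([[scale, 0.0, -scale * centroid[0]],
                  [0.0, scale, -scale * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homogeneous = np.column_stack([points, np.ones(len(points))])
    return homogeneous @ T.T, T


def fit_fundamental(points_a, points_b):
    """Eight-point estimate of F with x_b^T F x_a = 0, on normalized coordinates."""
    xa, T_a = _normalize(points_a)
    xb, T_b = _normalize(points_b)
    A = np.hstack([xb[:, [0]] * xa, xb[:, [1]] * xa, xa])
    F_norm = np.linalg.svd(A)[2][-1].reshape(3, 3)
    return T_b.T @ F_norm @ T_a


def ransac_fundamental(points_a, points_b, n_iters=500, threshold=1e-3, seed=0):
    """Return the candidate F with the most inliers and the boolean inlier mask."""
    points_a = np.asarray(points_a, dtype=float)
    points_b = np.asarray(points_b, dtype=float)
    rng = np.random.default_rng(seed)
    ha = np.column_stack([points_a, np.ones(len(points_a))])
    hb = np.column_stack([points_b, np.ones(len(points_b))])
    best_F = None
    best_inliers = np.zeros(len(points_a), dtype=bool)
    for _ in range(n_iters):
        # Draw eight candidate matches and estimate F from them alone.
        sample = rng.choice(len(points_a), size=8, replace=False)
        F = fit_fundamental(points_a[sample], points_b[sample])
        F /= np.linalg.norm(F)  # assumed: fix the arbitrary scale of F
        # |x_b^T F x_a| for every candidate match.
        residual = np.abs(np.sum(hb * (ha @ F.T), axis=1))
        inliers = residual < threshold
        # Keep the candidate supported by the largest number of matches.
        if inliers.sum() > best_inliers.sum():
            best_F, best_inliers = F, inliers
    return best_F, best_inliers
```

The algebraic residual used here follows the test described in the text; a geometric error such as the distance to the epipolar line is a common alternative when a threshold in pixels is preferred.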

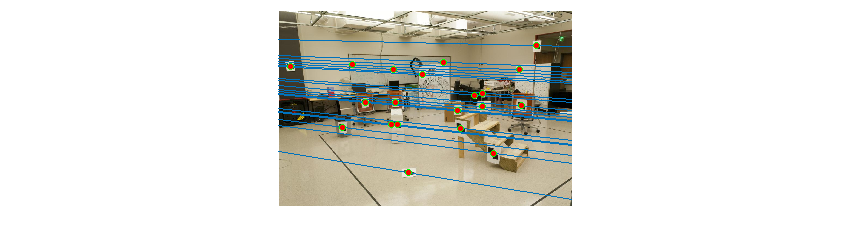

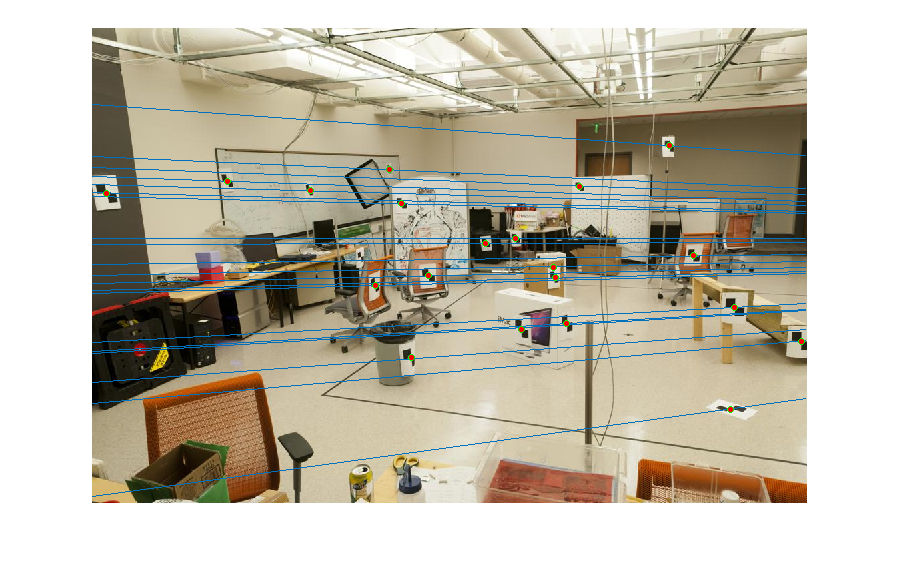

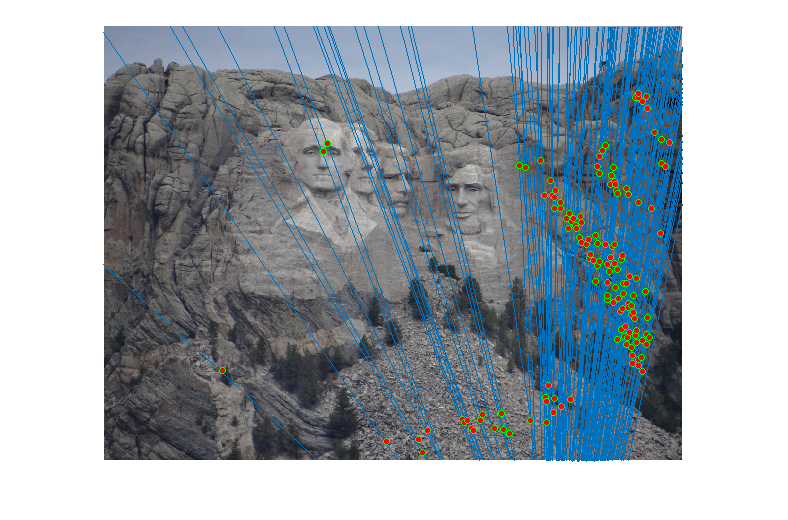

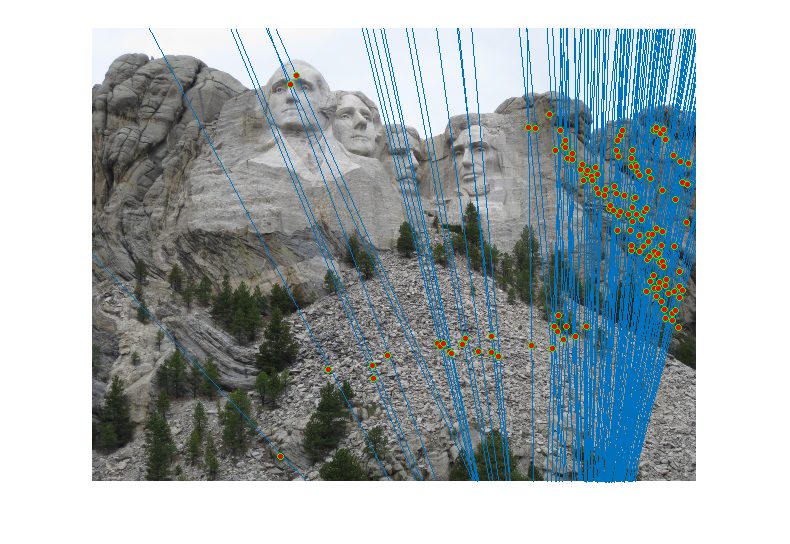

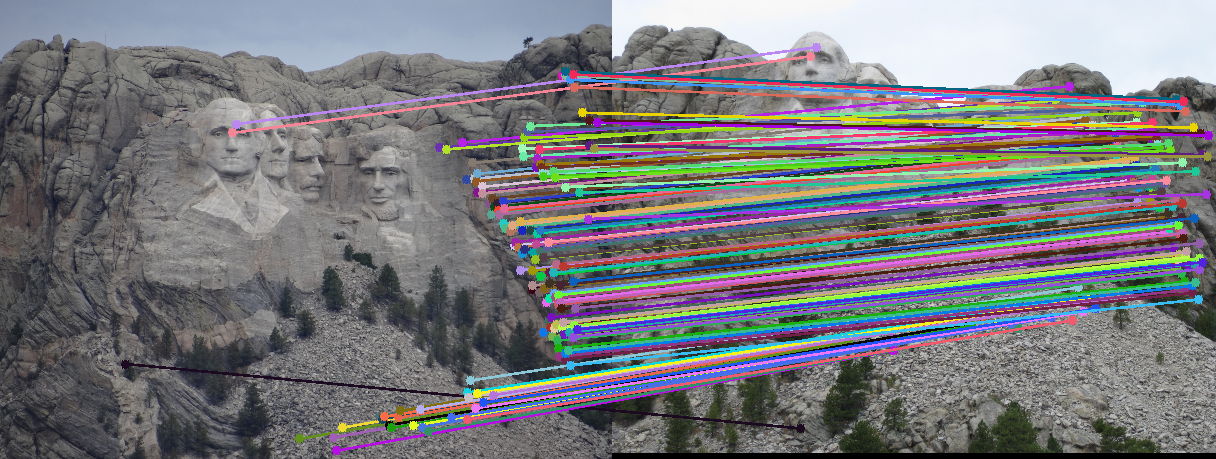

Figure 4 shows the epipolar lines of the Mount Rushmore image pair, and Figure 5 shows the feature points correctly matched between the image pair.

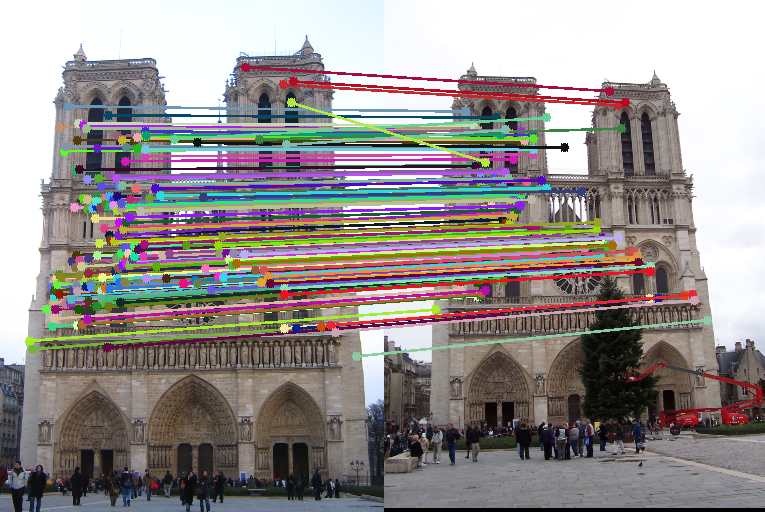

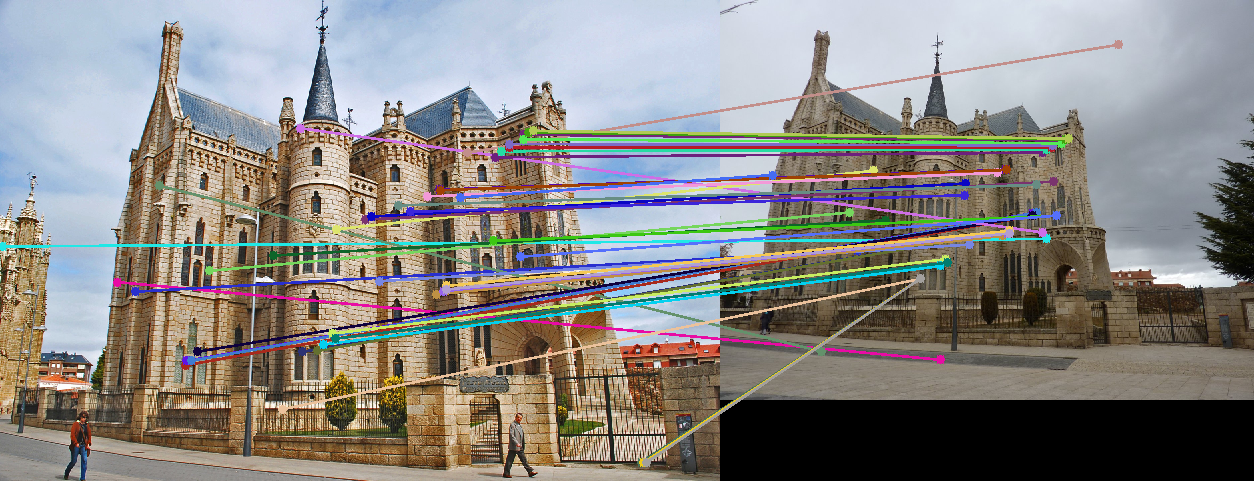

Figures 6 and 7 demonstrate that the estimation of the Fundamental Matrix and feature point matching with RANSAC performed well on a number of image pairs.

Figure 8 shows the match generated with normalized coordinates in the Fundamental Matrix estimation for the Gaudi image pair. To highlight the improvement resulting from coordinate normalization, Figure 9 shows the same image pair matched without normalizing the coordinates; the result is a visibly worse match between the images.

The objective of this project was to improve upon image matching by leveraging the epipolar geometry of a stereo image pair while applying RANSAC to reject poorly matched feature points. Compared to the local feature matching performed in Project 2, the results of Project 3 are considerably more satisfying, yielding obvious matches for the image pairs. For computer vision in robotics, it would be interesting to explore these topics in the context of SLAM, and of monocular SLAM in particular.