Project 3 / Camera Calibration and Fundamental Matrix Estimation with RANSAC

This project focuses on estimating the camera projection matrix and fundmental matrix. Estimating the camera projection matrix will use ground truth 3D->2D point maps from a test scene. Estimating the fundamental matrix will rely on keypoint pairs from two images found using SIFT methods.

Camera Projection Matrix

Given a set of 3D world coordinates and their corresponding 2D points in an image, I can estimate the camera's projection matrix using the following system of equations.

% [M11 [ 0

% M12 0

% M13 .

% M14 .

%[ X1 Y1 Z1 1 0 0 0 0 -u1*X1 -u1*Y1 -u1*Z1 u1 M21 .

% 0 0 0 0 X1 Y1 Z1 1 -v1*X1 -v1*Y1 -v1*Z1 v1 M22 .

% . . . . . . . . . . . . * M23 = .

% Xn Yn Zn 1 0 0 0 0 -un*Xn -un*Yn -un*Zn u1 M24 .

% 0 0 0 0 Xn Yn Zn 1 -vn*Xn -vn*Yn -vn*Zn v1] M31 .

% M32 0

% M33] 0 ]

In this project, we are given a set of 2D points (Points_2D) and a set of 3D points (Points_3D). The above system is constructed as follows:

A = [];

for i = 1:size(Points_3D,1)

p2 = Points_2D(i,:);

p3 = Points_3D(i,:);

partial = [p3 1 0 0 0 0 (-1*p2(1)*p3(1)) (-1*p2(1)*p3(2)) (-1*p2(1)*p3(3)) (-1*p2(1)); 0 0 0 0 p3 1 (-1*p2(2)*p3(1)) (-1*p2(2)*p3(2)) (-1*p2(2)*p3(3)) (-1*p2(2))];

A = [A; partial];

end

This system of equations is solved in Matlab using SVD as follows:

[U, S, V] = svd(A);

M = V(:,end);

M = reshape(M,[],3)';

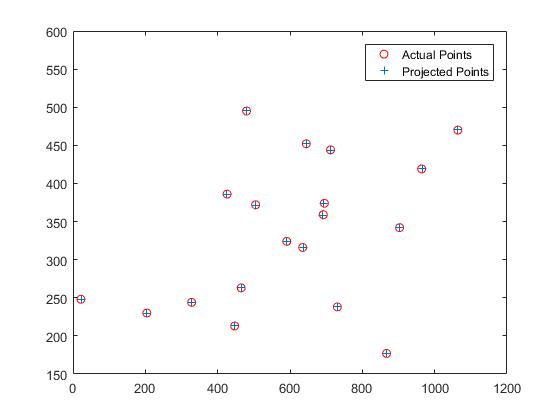

For the non-normalized example points in part 1 of the project, this code yields the following estimate for the projection matrix and gices a 15.5450 residual.

| 0.006931546861693 | -0.004016844701489 | -0.001326029284433 | -0.826700554062832 |

| 0.001547687322194 | 0.001024527595362 | -0.007274407143761 | -0.562523255556009 |

| 0.000007609460504 | 0.000003709539886 | -0.000001902032444 | -0.003388077116079 |

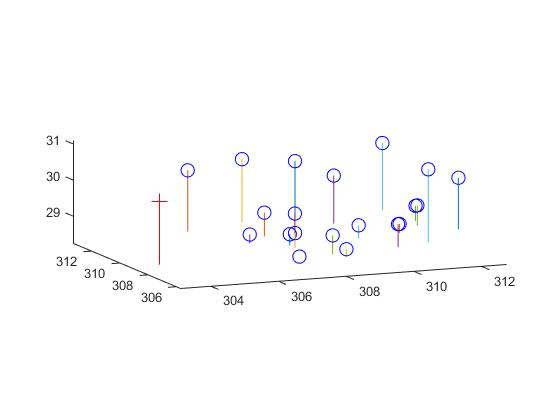

From this projection matrix, the camera's center point in world coordinates was found to be <303.1000, 307.1843, 30.4217>.

b)

b)

a) Visual inspection of projection accuracy. b) Plot of camera position and 3D point set.

Fundamental Matrix Estimation

The fundamental matrix represents the relationship between corresponding points in two images of the same scene. Given atleast eight matching pairs of points from these two images, the fundamental matrix relating the two can be estimated with the following system.

% [f11

% f12

% f13

% f21

%[ u1*u1' u1*v1' u1 v1*u1' v1*v1' v1 u1' v1' 1 f22 [ 0

% u2*u2' u2*v2' u2 v2*u2' v2*v2' v2 u2' v2' 1 f23 .

% . . . . . . . . . * f31 = .

% un*un' un*vn' un vn*un' vn*vn' vn un' vn' 1] f32 0 ]

% f33]

For part 2 of this project, I build and solve this system from the given sets of points with this code:

function [ F_matrix ] = estimate_fundamental_matrix(Points_a,Points_b)

A = [];

for i=1:size(Points_a_norm,1)

p1 = Points_b(i,:);

p2 = Points_a(i,:);

partial = [p1(1)*p2(1) p1(1)*p2(2) p1(1) p1(2)*p2(1) p1(2)*p2(2) p1(2) p2(1) p2(2) 1];

A = [A; partial];

end

[~, ~, V] = svd(A);

F_matrix = V(:,end);

F_matrix = reshape(F_matrix, [3 3])';

[U, S, V] = svd(F_matrix);

S(3,3) = 0;

F_matrix = U*S*V';

end

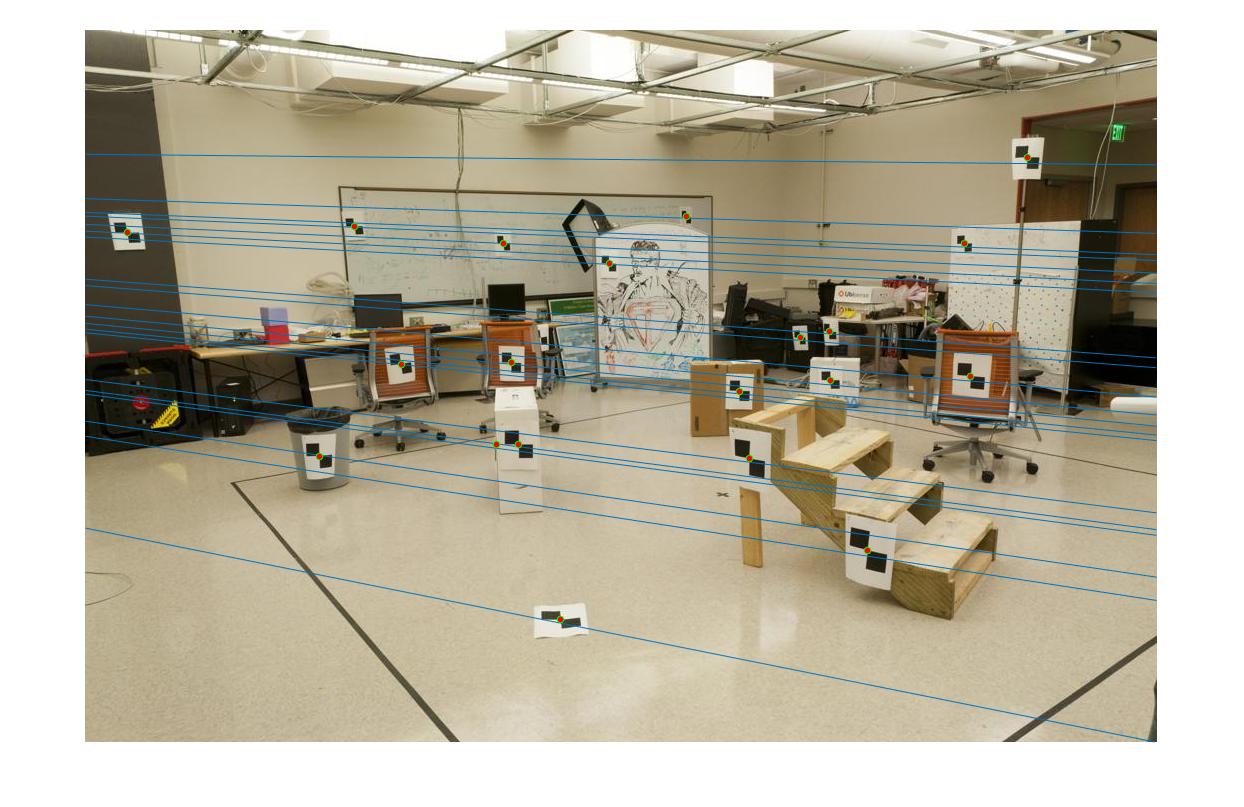

Running this estimation on the image pair in part 2 of this project gave the following fundamental matrix.

| -0.000000536264198 | 0.000007903647709 | -0.001886002040236 |

| 0.000008835391841 | 0.000001213216850 | 0.017233290101449 |

| -0.000907382264408 | -0.026423464992204 | 0.999500091906703 |

Epipolar lines generated by estimated fundamental matrix plotted on image pair along with points used in matching.

Fundamental Matrix with RANSAC

The approach in the previous section works well as long as there is no noise in the set of points. This is fine for ground truth data, but not for data estimated using a matching algorithm on automatically detected features, where false matches will inject points into the data set that do not fit the ground truth model of the camera motion. A RANSAC approach allows us to get a more accurate estimate of the fundamental matrix and throw out outlier matches which are likely not correct.

The following code implements the RANSAC approach to estimating the fundamental matrix. The number of RANSAC iterations to run is determined using the probability (p) of finding a sample of 8 inliers in a set with an outlier ratio e.

Inliers are determined to be points (from matches_b) who are less than eps pixels away from the epipolar line generated using the corresponding point (from matches_a) and the estimated fundamental matrix.

function [ Best_Fmatrix, inliers_a, inliers_b] = ransac_fundamental_matrix(matches_a, matches_b)

function d = distance(point, line)

d = abs(line(1)*point(1) + line(2)*point(2) + line(3)) / sqrt(line(1)^2 + line(2)^2);

end

p = 0.99;

e = 0.50;

eps = 10;

N = round(log(1-p)/log(1-(1-e)^8));

inliers_a = [];

inliers_b = [];

Best_Fmatrix = [];

for iter=1:N

inds = randperm(size(matches_a,1),8);

points_a = matches_a(inds,:);

points_b = matches_b(inds,:);

F = estimate_fundamental_matrix(points_a, points_b);

inls_a = [];

inls_b = [];

for p=1:size(matches_a)

point_a = matches_a(p,:);

point_b = matches_b(p,:);

line_b = F*[point_a 1]';

d = distance(point_b, line_b);

if d < eps

inls_a = [inls_a; point_a];

inls_b = [inls_b; point_b];

end

end

if size(inls_a,1) > size(inliers_a, 1)

inliers_a = inls_a;

inliers_b = inls_b;

Best_Fmatrix = F;

end

end

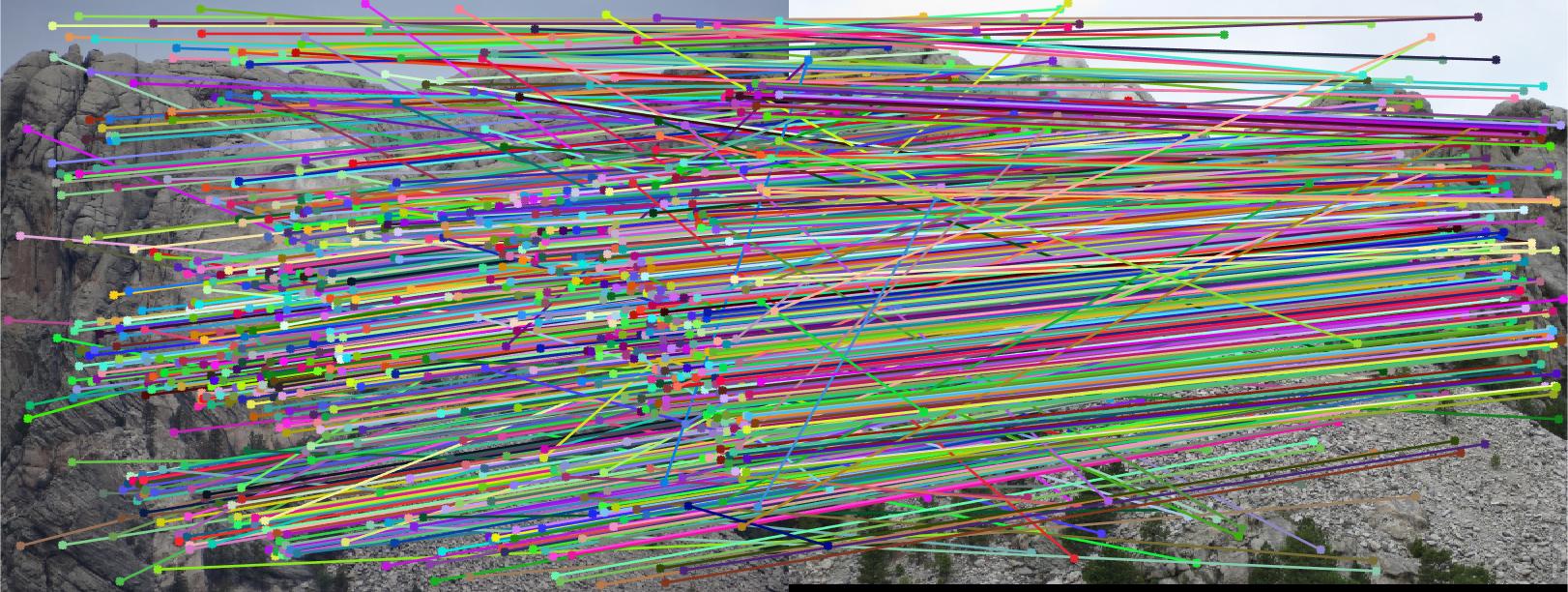

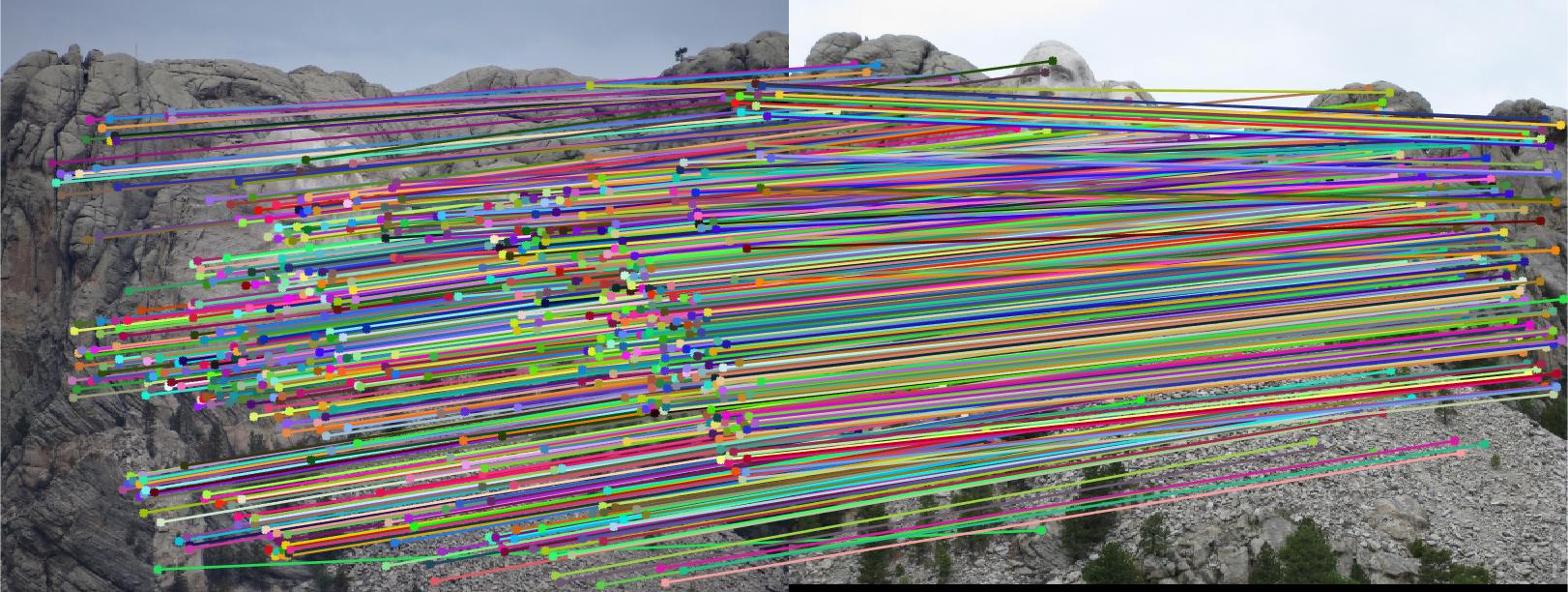

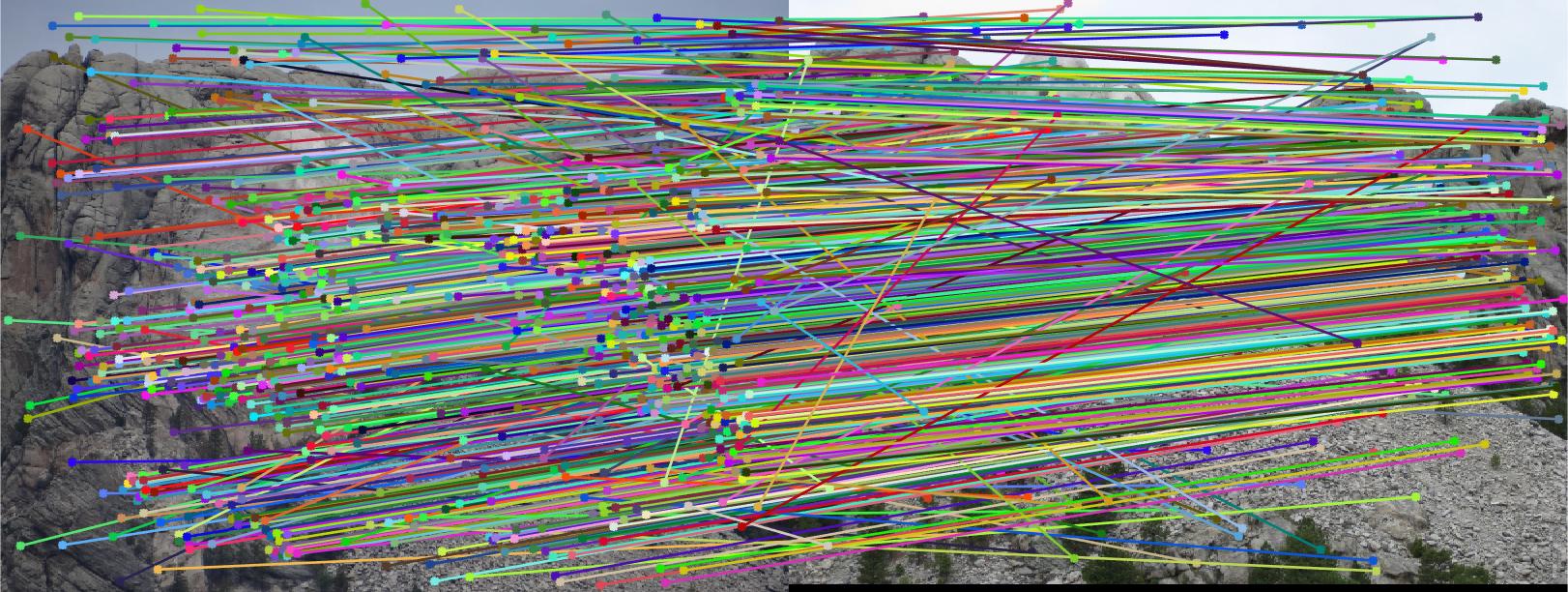

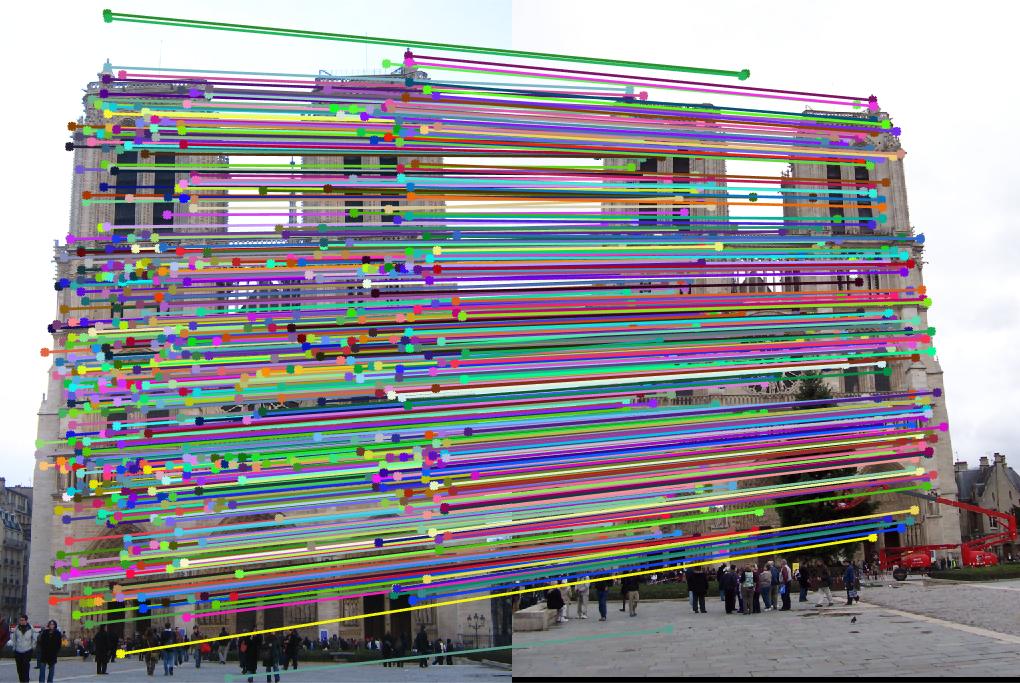

For the mount rushmore image pair from this project, RANSAC rejected 160 (19.4%) matched point pairs as outliers. The following two images show the set of matches before and after RANSAC, showing that the inlier matches all correspond to the same camera motion, while those matches which didn't match the motion of the camera have been removed.

Matching pairs of SIFT keypoints before and after RANSAC estimation of the fundamental matrix removed all outlier matches.

Extra / Grad Credit

To further improve accuracy of the RANSAC estimation of the fundamental matrix, the estimation algorithm was adjusted to normalize each set of input points to be centered at its mean and have an average point-to-center distance of sqrt(2). Each input set of points to estimate_fundamental_matrix() is passed to normalize_coordinates().

function [ T, points_normalized ] = normalize_coordinates( points )

C = sum(points) / size(points,1);

points_centered = points - C(ones(size(points,1),1),:);

avg_sqr_norm = 0;

for i = 1 : size(points_centered,1)

p = points_centered(i,:);

avg_sqr_norm = avg_sqr_norm + (norm(p)*norm(p));

end

avg_sqr_norm = avg_sqr_norm / size(points_centered,1);

s = 2.0 / avg_sqr_norm;

T = [s 0 0; 0 s 0; 0 0 1] * [1 0 -C(1); 0 1 -C(2); 0 0 1];

points_normalized = zeros(size(points));

for i=1:size(points,1)

tmp = T * [points(i,:) 1]';

points_normalized(i,:) = [tmp(1) tmp(2)];

end

end

The fundamental matrix is estimated using these normalized coordinates and then the estimated matrix is converted back to the original coordinate system with the code

F_matrix = Tb' * F_matrix * Ta;

where Ta and Tb are the normalizing transforms which were applied to the input sets.

Unfortunately, making this change did not have a significant effect on results for any of the four image pairs (percentage inliers were within 1-3 percentage points and resulting inlier renderings were visually very similar).

Results

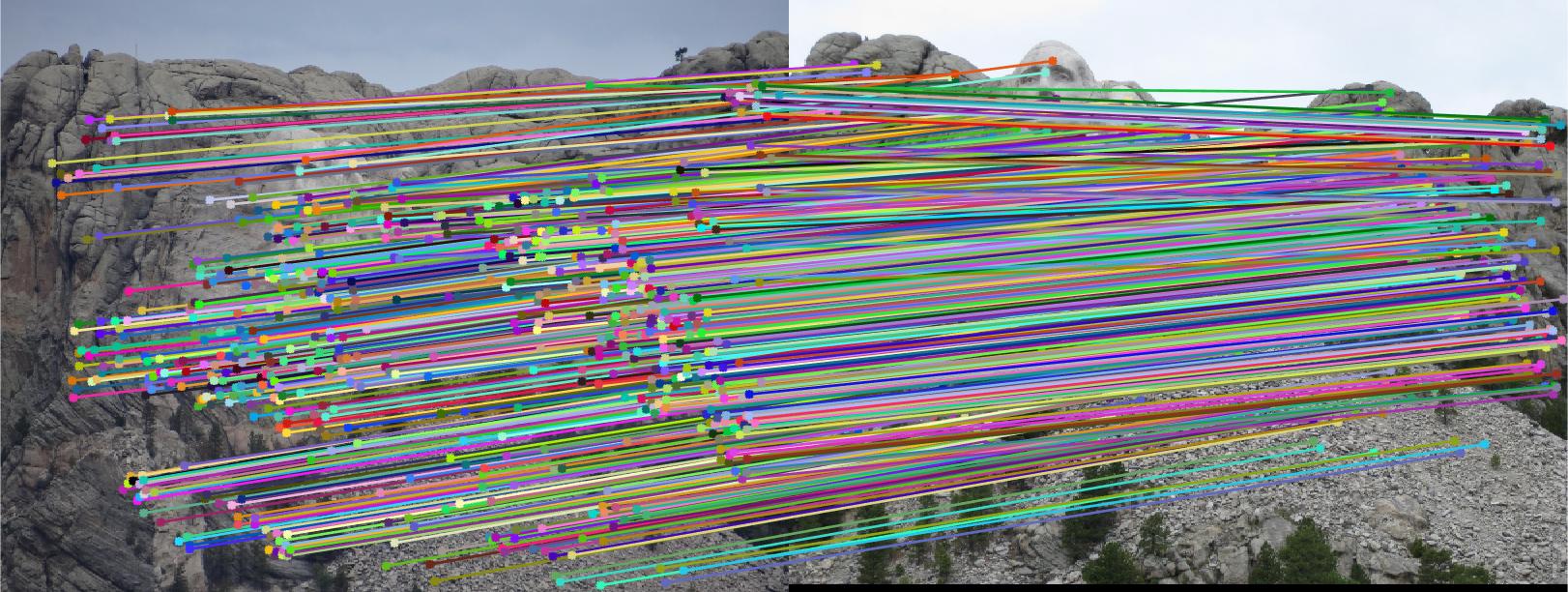

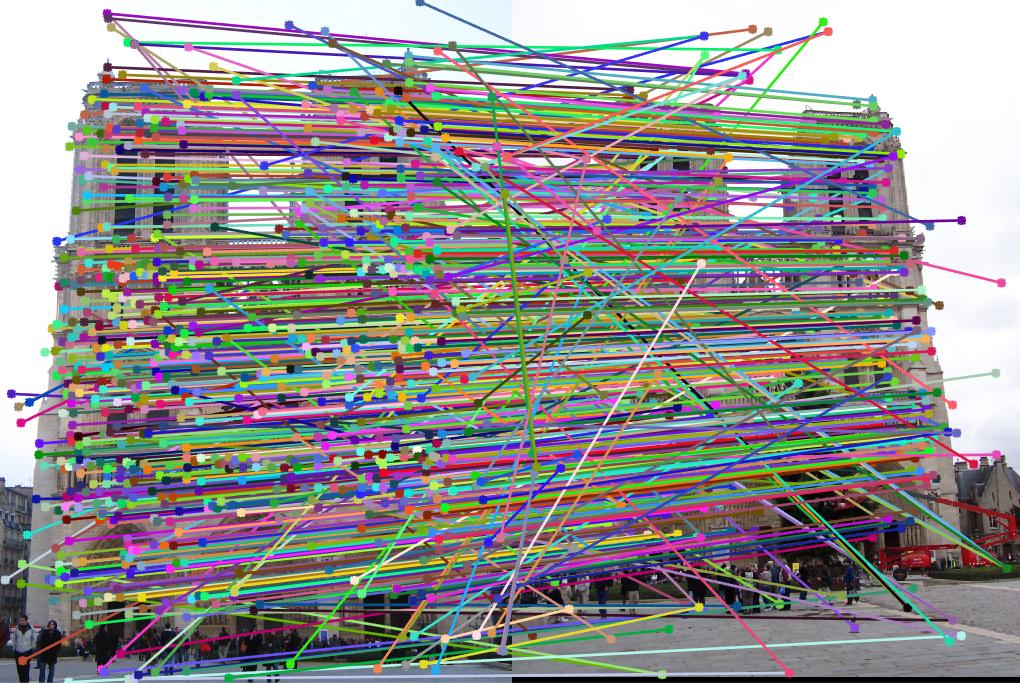

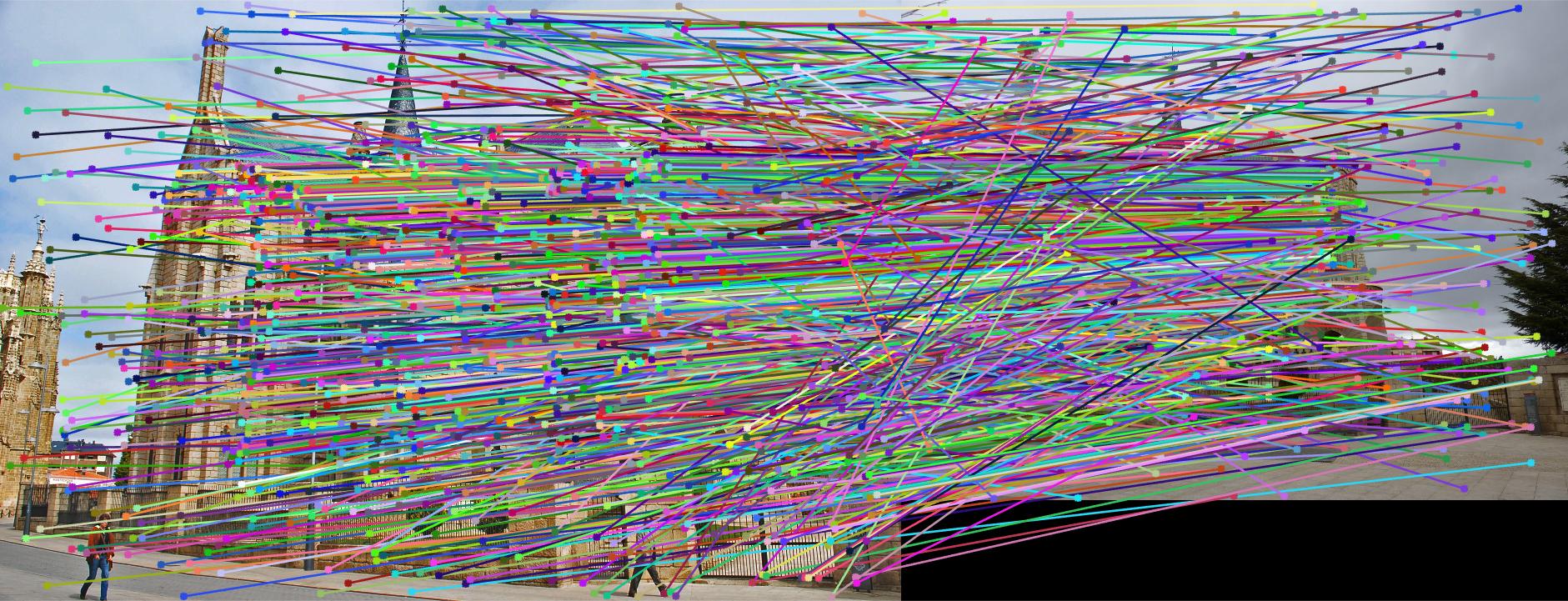

The following image pairs show the outlier rejection results of the final code (including coordinate normalization) on the four image pairs in part 3.

Mount Rushmore

RANSAC accepted 664 inliers of 825 matches (80.48%).

Notre Dame

RANSAC accepted 623 inliers of 851 matches (73.21%).

Episcopal Gaudi

RANSAC accepted 473 inliers of 1062 matches (44.54%).

Woodruff Dorm

RANSAC accepted 207 inliers of 887 matches (23.34%).