This report summarizes the work and results for the third assignment of CS6476. This assignment dealt with estimating the camera center and fundamental matrix for image pairs and utilizing the estimated fundamental matrix for feature matching.

In this portion of the project, we handle the computation of the projection matrix, which projects 3-D world coordinates onto the 2-D image plane, and the camera center, which shows where the camera is located.

Given a set of 3-D world points and their corresponding 2-D image points, the projection matrix may be determined through a linear least squares algorithm, provided enough correspondences are available (at least six, since each correspondence contributes two equations). As such, the projection matrix is given by

$s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = M \begin{bmatrix} X \\ Y \\ Z \\ 1 \end{bmatrix}$ | (1) |

where M is the projection matrix from world into image coordinates and s is the scale. For this problem, we would like to fix s = 1. This result may be obtained via a few methods. In this case, we elected to use a Singular Value Decomposition (SVD) to obtain the desired, normalized solution.

To solve for M one may re-write the problem as a linear system of equations as

$$
\begin{bmatrix}
X_i & Y_i & Z_i & 1 & 0 & 0 & 0 & 0 & -u_i X_i & -u_i Y_i & -u_i Z_i & -u_i \\
0 & 0 & 0 & 0 & X_i & Y_i & Z_i & 1 & -v_i X_i & -v_i Y_i & -v_i Z_i & -v_i
\end{bmatrix} M = 0.
$$ | (2) |

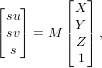

In this case, we have elected to use an SVD to solve for M, resolving the scaling issue. Using this solution with the provided points yielded the matrix

| (3) |

where the residual was 0.0445.
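As an illustration of the linear system in Eq. (2), the following is a minimal NumPy sketch, not the code used for this report. The function names `estimate_projection_matrix` and `reprojection_residual` are hypothetical, and the residual definition (square root of the summed squared reprojection error) is an assumption about how the value above was computed.

```python
import numpy as np

def estimate_projection_matrix(points_2d, points_3d):
    """Estimate the 3x4 matrix M (up to scale) from n >= 6 correspondences."""
    points_2d = np.asarray(points_2d, dtype=float)
    points_3d = np.asarray(points_3d, dtype=float)
    n = points_3d.shape[0]
    A = np.zeros((2 * n, 12))
    for i, ((u, v), (X, Y, Z)) in enumerate(zip(points_2d, points_3d)):
        # Two rows per correspondence, in the layout of Eq. (2).
        A[2 * i] = [X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z, -u]
        A[2 * i + 1] = [0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z, -v]
    # The right singular vector of the smallest singular value minimizes
    # ||A m|| subject to ||m|| = 1, which fixes the free scale of M.
    _, _, Vt = np.linalg.svd(A)
    return Vt[-1].reshape(3, 4)

def reprojection_residual(M, points_2d, points_3d):
    """Assumed residual: sqrt of the summed squared reprojection error."""
    points_2d = np.asarray(points_2d, dtype=float)
    points_3d = np.asarray(points_3d, dtype=float)
    homog = np.hstack([points_3d, np.ones((len(points_3d), 1))])
    proj = homog @ M.T
    uv = proj[:, :2] / proj[:, 2:3]  # divide out the scale s
    return np.sqrt(np.sum((uv - points_2d) ** 2))
```

Because M is recovered only up to a scalar factor (possibly negative), two estimates should be compared after dividing each by one of its own entries or by its norm.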

Next, we compute the center of the camera utilizing M through the equation

$C = -Q^{-1} m_4$ | (4) |

where $M = [\, Q \mid m_4 \,]$ with $Q \in \mathbb{R}^{3 \times 3}$ and $m_4 \in \mathbb{R}^{3}$. Given the previously determined matrix M, we calculated the camera center to be

$C = [-1.5127 \;\; -2.3517 \;\; 0.2826]^T$ | (5) |

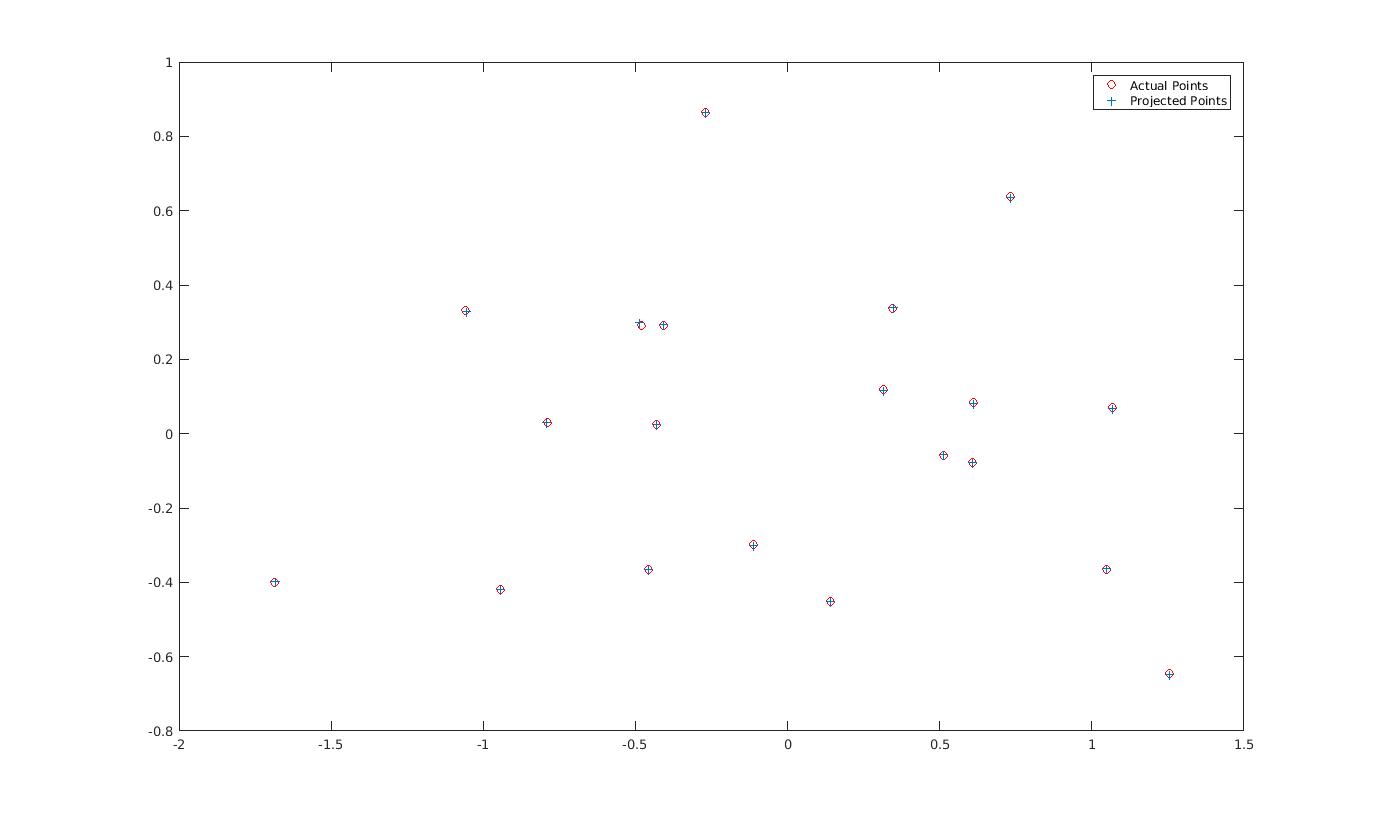

This is very close to the value supplied in the assignment description. Fig. 1 shows the relationship between the points projected using M and the actual points. Utilizing the non-normalized coordinates, the results closely resembled those of Fig. 1.
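The camera center computation can be sketched in a few lines. This is an illustrative example under the partition of M into a left 3x3 block Q and a last column m4; the function name `camera_center` is hypothetical.

```python
import numpy as np

def camera_center(M):
    """Return the camera center C for a 3x4 projection matrix M = [Q | m4]."""
    M = np.asarray(M, dtype=float)
    Q, m4 = M[:, :3], M[:, 3]
    # Solve Q C = -m4 rather than forming the inverse of Q explicitly.
    return np.linalg.solve(Q, -m4)
```

The result does not depend on the scale of M, since scaling M scales Q and m4 together. A quick sanity check is that M applied to the homogeneous center (C, 1) gives the zero vector.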

This section contains work for estimating the fundamental matrix of the system. This matrix generates epipolar lines that relate two views of the same scene. In particular, for a point in one image, the corresponding point must lie on the associated epipolar line in the other image, yielding a useful tool in a feature-matching pipeline.

Given two images of the same scene, the fundamental matrix F between the two views is such that

$x'^{T} F x = 0$ | (6) |

where x and x′ are corresponding points, in homogeneous coordinates, from the two images. Given at least 8 such correspondences, it is possible to compute F. Again, we solve for F utilizing a system of linear equations, where each correspondence contributes one row of the form

$A = \begin{bmatrix} x_i x'_i & y_i x'_i & x'_i & x_i y'_i & y_i y'_i & y'_i & x_i & y_i & 1 \end{bmatrix}$ | (7) |

Here, we stack the nine entries of F row by row into a vector f and utilize an SVD to solve the homogeneous linear system of equations

$A f = 0$ | (8) |

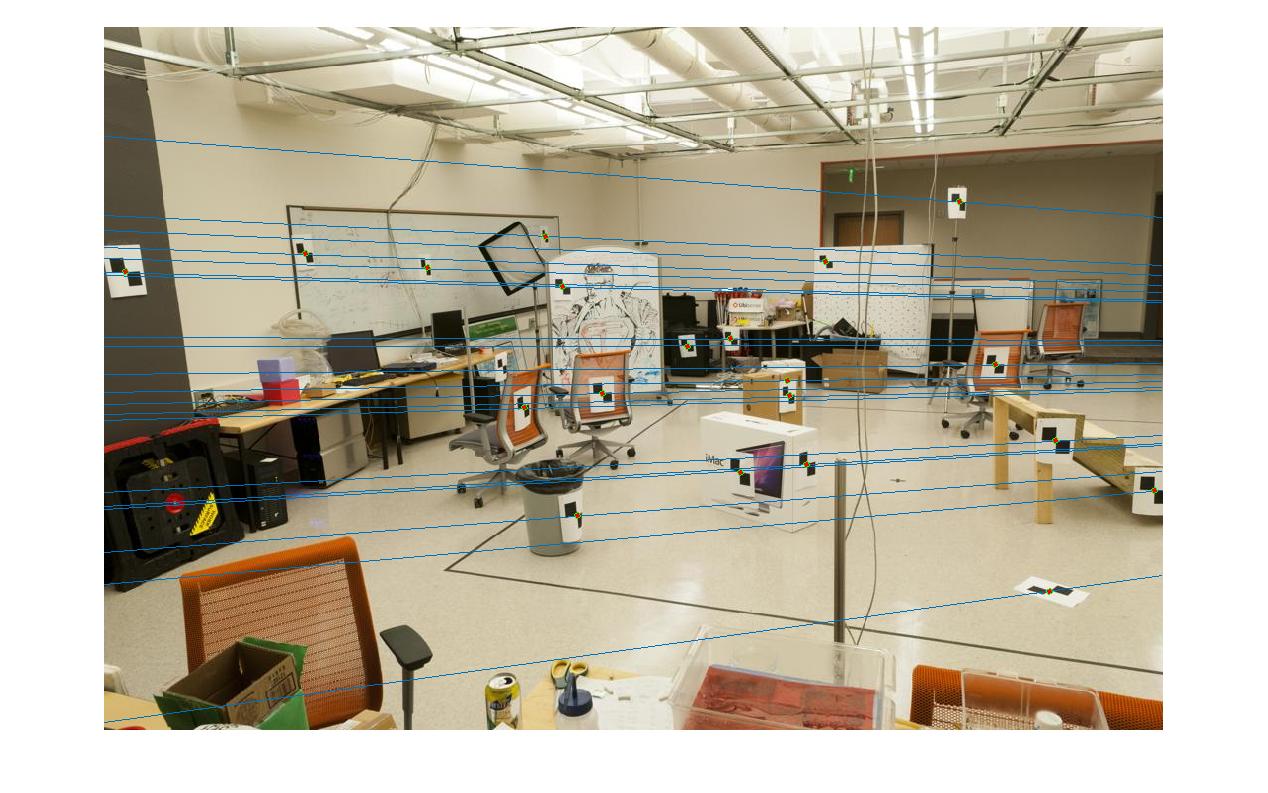

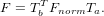

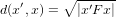

which normalizes the scale automatically, since the SVD solution has unit norm. This approximate solution for F will generally be full rank, because the linear formulation does not enforce the rank constraint. Thus, we perform a second SVD, this time of F itself, and set the smallest singular value to 0, ensuring that F is rank two. This ensures that all epipolar lines in an image pass through a common point, the epipole. Fig. 2 shows epipolar lines produced via the approximated matrix F. The epipolar lines pass through the corresponding points, showing that the fundamental matrix has been correctly estimated.
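The two SVD steps described above can be sketched as follows. This is a minimal illustration rather than the report's own code: the function name `estimate_fundamental_matrix` is hypothetical, and the entries of F are assumed to be stacked row by row, which matches the column order of Eq. (7).

```python
import numpy as np

def estimate_fundamental_matrix(pts, pts_prime):
    """Eight-point estimate of F from n >= 8 correspondences (n x 2 arrays)."""
    pts = np.asarray(pts, dtype=float)
    pts_prime = np.asarray(pts_prime, dtype=float)
    x, y = pts[:, 0], pts[:, 1]
    xp, yp = pts_prime[:, 0], pts_prime[:, 1]
    # One row per correspondence, in the column order of Eq. (7).
    A = np.column_stack([x * xp, y * xp, xp,
                         x * yp, y * yp, yp,
                         x, y, np.ones_like(x)])
    # First SVD: unit-norm vector minimizing ||A f||.
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)
    # Second SVD: zero the smallest singular value to enforce rank two.
    U, S, Vt_f = np.linalg.svd(F)
    S[2] = 0.0
    return U @ np.diag(S) @ Vt_f
```

For a point x in the first image (in homogeneous coordinates), the product `F @ x` gives the coefficients (a, b, c) of the epipolar line a u + b v + c = 0 in the second image.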

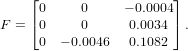

The estimated matrix F is given by

| (9) |

Note that the graduate credit coordinate normalization has been applied before calculating this matrix.

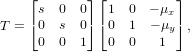

To prevent numerical issues, one strategy is to normalize the coordinates of each of the two sets of points. We performed this normalization using the mean of each point set as the offset and the standard deviation to estimate the scale. These transformations for each set of points are given by

| (10) |

where a separate T matrix is calculated for each set of points. Then, the usual fundamental matrix estimation is performed on the transformed coordinates. After estimating $F_{\text{norm}}$, F is given by

| (11) |

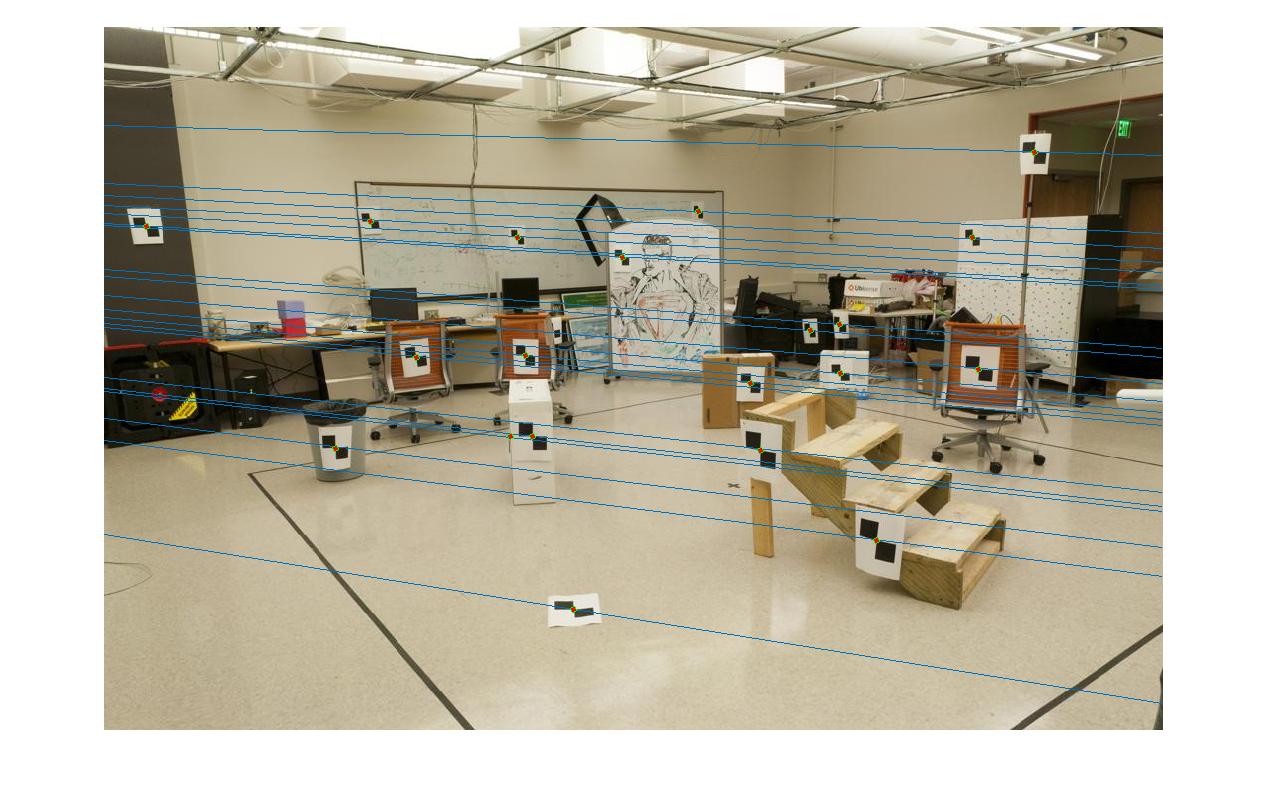

This normalization greatly improved the accuracy of the fundamental matrix approximation. Fig. 3 shows the epipolar lines obtained without the coordinate normalization. Without it, the estimation encounters numerical issues, causing the lines to miss the corresponding points and decreasing the accuracy of the approximation. Fig. 2 displays the result of the fundamental matrix calculated with the coordinate normalization.
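One common way to build such a normalization is sketched below. The specific form is an assumption, not necessarily the one used here: each T translates the points to zero mean and applies a single isotropic scale equal to the reciprocal of the standard deviation of the centered coordinates. With normalized points T x and T′ x′ (T′ is a notation introduced for this example), the epipolar constraint on the normalized points reads x′ᵀ (T′ᵀ F_norm T) x = 0, so the de-normalized matrix is T′ᵀ F_norm T. All function names are hypothetical, and `estimator` stands for any routine that maps two n x 2 point arrays to a 3x3 matrix.

```python
import numpy as np

def normalization_transform(pts):
    """3x3 transform giving zero-mean points with unit overall std (assumed form)."""
    pts = np.asarray(pts, dtype=float)
    centroid = pts.mean(axis=0)
    scale = 1.0 / np.std(pts - centroid)  # one isotropic scale for u and v
    T_scale = np.diag([scale, scale, 1.0])
    T_offset = np.array([[1.0, 0.0, -centroid[0]],
                         [0.0, 1.0, -centroid[1]],
                         [0.0, 0.0, 1.0]])
    return T_scale @ T_offset

def apply_transform(T, pts):
    """Apply an affine 3x3 transform T to n x 2 points."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    return (homog @ T.T)[:, :2]  # last coordinate stays 1 for affine T

def estimate_with_normalization(pts, pts_prime, estimator):
    """Estimate F on normalized points, then map it back to pixel coordinates."""
    T = normalization_transform(pts)
    T_prime = normalization_transform(pts_prime)
    F_norm = estimator(apply_transform(T, pts),
                       apply_transform(T_prime, pts_prime))
    return T_prime.T @ F_norm @ T
```

Passing the estimator as an argument keeps the normalization independent of how the fundamental matrix itself is computed.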

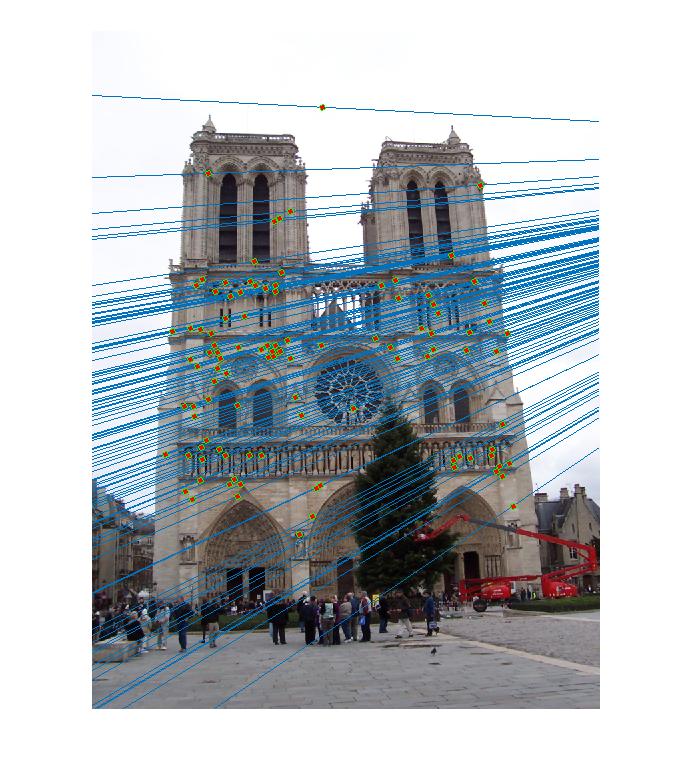

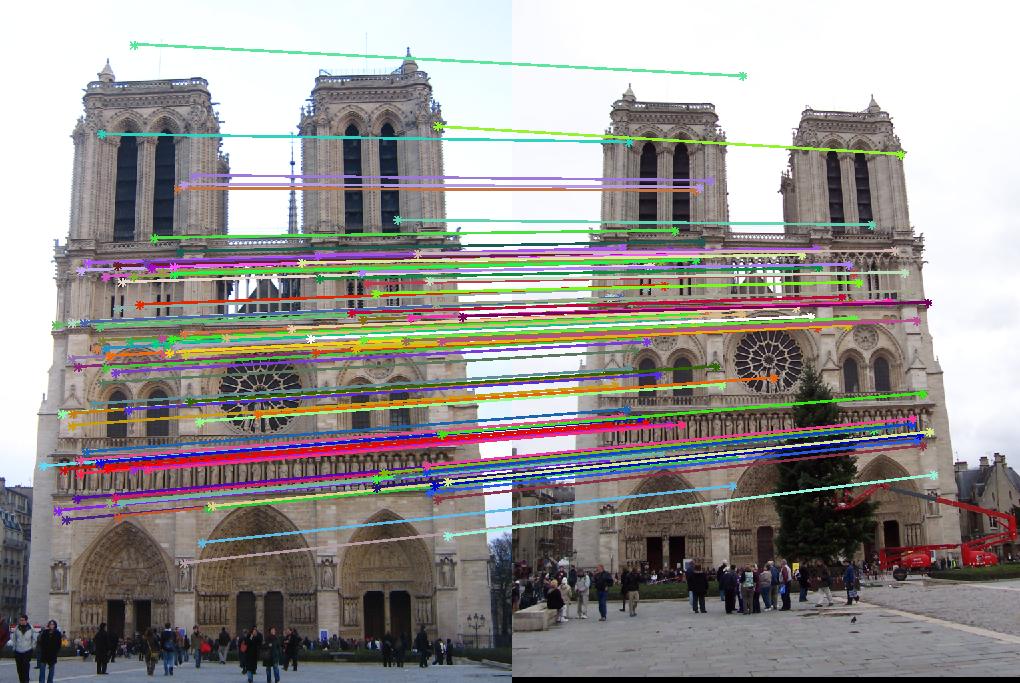

This portion of the assignment utilizes the RANSAC algorithm to perform feature matching between pairs of images. In particular, we discuss our RANSAC algorithm and present image pairs with the matches identified as inliers of the estimated fundamental matrix.

To perform our RANSAC algorithm, we repeatedly select 8 candidate correspondences at random, estimate a fundamental matrix from them, and count the number of "inliers" that agree with this particular matrix. To determine the inliers, we utilize the "distance" metric

| (12) |

and take all inliers such that

| (13) |

where c ∈ ℝ+ is some constant threshold. After several thousand iterations, we return the fundamental matrix with the most inliers, along with its inlier set. Figs. 4 and 5 display the results of the RANSAC algorithm on the Notre Dame image pair. Even with these planar images, the RANSAC-estimated fundamental matrix allowed the algorithm to achieve 99% accuracy.
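The RANSAC loop described above can be sketched as follows. This is an illustrative example only: the distance used is the algebraic residual |x′ᵀ F x|, which is an assumption about the metric, and the function names, the default threshold, and the default iteration count are all hypothetical.

```python
import numpy as np

def eight_point(pts, pts_prime):
    """Eight-point estimate of F with rank-two enforcement."""
    x, y = pts[:, 0], pts[:, 1]
    xp, yp = pts_prime[:, 0], pts_prime[:, 1]
    A = np.column_stack([x * xp, y * xp, xp, x * yp, y * yp, yp,
                         x, y, np.ones_like(x)])
    _, _, Vt = np.linalg.svd(A)
    U, S, Vt_f = np.linalg.svd(Vt[-1].reshape(3, 3))
    S[2] = 0.0
    return U @ np.diag(S) @ Vt_f

def algebraic_distance(F, pts, pts_prime):
    """Assumed distance: |x'^T F x| for every correspondence."""
    ones = np.ones((len(pts), 1))
    x = np.hstack([pts, ones])
    xp = np.hstack([pts_prime, ones])
    return np.abs(np.sum(xp * (x @ F.T), axis=1))

def ransac_fundamental_matrix(pts, pts_prime, threshold=0.01,
                              iterations=2000, seed=0):
    """Return the F with the most inliers and a boolean inlier mask."""
    pts = np.asarray(pts, dtype=float)
    pts_prime = np.asarray(pts_prime, dtype=float)
    rng = np.random.default_rng(seed)
    best_F, best_mask, best_count = None, None, -1
    for _ in range(iterations):
        # Minimal sample: 8 correspondences drawn without replacement.
        sample = rng.choice(len(pts), size=8, replace=False)
        F = eight_point(pts[sample], pts_prime[sample])
        mask = algebraic_distance(F, pts, pts_prime) < threshold
        count = int(mask.sum())
        if count > best_count:
            best_F, best_mask, best_count = F, mask, count
    return best_F, best_mask
```

A suitable threshold depends on the coordinate scale, so it would need retuning if the points are normalized before estimation. A geometric measure such as the point-to-epipolar-line distance is a common alternative to the algebraic residual.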

Figs. 7-11 display this matching on more of the supplied image pairs.

This assignment used linear least squares techniques to solve for the projection matrix and camera center from known correspondences between 3-D and 2-D points. Additionally, we estimated a fundamental matrix, which describes the epipolar geometry, between a pair of images. Utilizing coordinate normalization, we improved the results of this method. Finally, we utilized the RANSAC algorithm to approximate a fundamental matrix between an image pair in the presence of outliers. Using this method, 99% accuracy was achieved in finding corresponding points between images.