Project 3: Camera Calibration and Fundamental Matrix Estimation with RANSAC

Introduction

The goal of this project was, given two images of the same scene taken from different viewpoints, to identify point correspondences with near-perfect accuracy. Camera calibration and the fundamental matrix can raise the accuracy we obtained in Project 2 with SIFT features alone to that near-perfect level. The project consisted of three major steps: 1) estimate the projection matrix and camera center, 2) estimate the fundamental matrix, and 3) use RANSAC to find the fundamental matrix that best fits the given SIFT matches. Below I discuss how I implemented each part of the pipeline.

Component 1: Projection Matrix Calculation and Camera Center Estimation

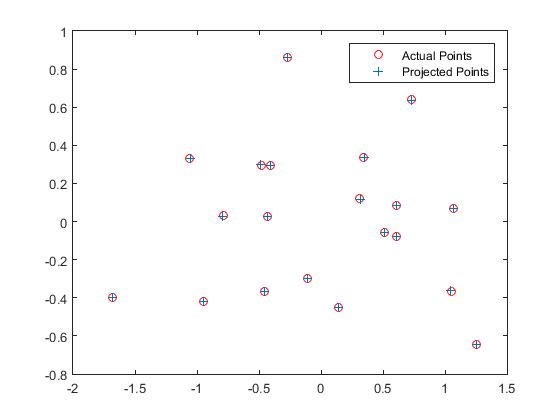

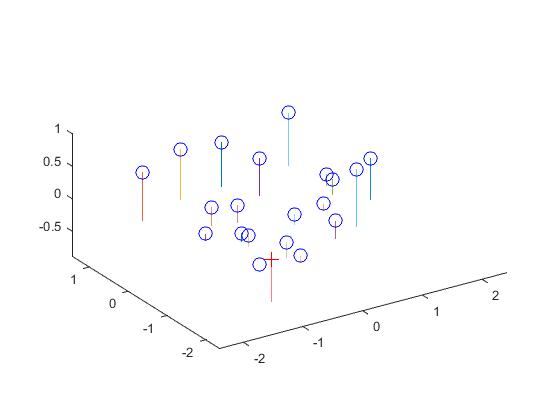

I first implemented the calculation of the projection matrix M. The projection matrix encodes the camera parameters that map 3D world coordinates to their 2D image coordinates, and it decomposes into intrinsic and extrinsic parameters as x = K [R t] X. To calculate M from known 3D and 2D coordinates, I set up a system of equations and solved it with a linear least-squares fit, which is sufficient because the system is linear in the entries of M. M is a 3x4 matrix with 11 degrees of freedom (it is defined only up to scale), and each correspondence contributes two equations, so at least six pairs of corresponding 3D and 2D points are needed to estimate it. I first wrote the equations for the u component of each 2D point, then those for the v component, and stacked both into a single linear system. Fixing the last entry of M to 1 leaves 11 unknowns, so after solving I appended a one to the end of the solution and reshaped it into a 3x4 matrix. I then scaled the matrix by -1.6755 to obtain a projection matrix similar to the one given in the Project 3 description. The residual I obtained was 0.0475.
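A minimal NumPy sketch of this least-squares setup follows, assuming the last entry of M is fixed to 1 so that 11 unknowns remain. The function names `estimate_projection_matrix` and `projection_residual` are hypothetical, and the residual is assumed here to be the summed Euclidean reprojection error; this illustrates the technique and is not the project's actual code.

```python
import numpy as np

def estimate_projection_matrix(pts2d, pts3d):
    """Least-squares estimate of the 3x4 projection matrix M with M[2, 3] fixed to 1.

    pts2d: (N, 2) image points (u, v); pts3d: (N, 3) world points (X, Y, Z); N >= 6.
    """
    n = pts3d.shape[0]
    A = np.zeros((2 * n, 11))
    b = np.zeros(2 * n)
    for i, ((u, v), (X, Y, Z)) in enumerate(zip(pts2d, pts3d)):
        # u-equation: m11 X + m12 Y + m13 Z + m14 - u (m31 X + m32 Y + m33 Z) = u
        A[2 * i] = [X, Y, Z, 1, 0, 0, 0, 0, -u * X, -u * Y, -u * Z]
        b[2 * i] = u
        # v-equation: m21 X + m22 Y + m23 Z + m24 - v (m31 X + m32 Y + m33 Z) = v
        A[2 * i + 1] = [0, 0, 0, 0, X, Y, Z, 1, -v * X, -v * Y, -v * Z]
        b[2 * i + 1] = v
    m, *_ = np.linalg.lstsq(A, b, rcond=None)
    # Append the fixed m34 = 1 to the 11 solved entries and reshape row-major to 3x4.
    return np.append(m, 1.0).reshape(3, 4)

def projection_residual(M, pts2d, pts3d):
    """Summed Euclidean distance between the given 2D points and the reprojected 3D points."""
    homog = np.hstack([pts3d, np.ones((len(pts3d), 1))]) @ M.T
    proj = homog[:, :2] / homog[:, 2:3]
    return float(np.sum(np.linalg.norm(proj - pts2d, axis=1)))
```

Because the system is non-homogeneous once m34 = 1, `np.linalg.lstsq` solves it directly; the trade-off is that this parameterization fails if the true m34 is zero.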

To compute the camera center, I split M into Q (its left 3x3 block) and m4 (its fourth column). The center is C = -Q^-1 m4, the negative inverse of Q times m4. I obtained a camera center of (-1.5126, -2.3517, 0.2827), close to the camera center reported in the Project 3 description.
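The camera center formula can be sketched with a hypothetical helper (not the project's code). Solving Q C = -m4 avoids forming the inverse explicitly, and because C is unchanged when M is multiplied by any nonzero scalar, rescaling M (for example by -1.6755) does not move the recovered center.

```python
import numpy as np

def camera_center(M):
    """Camera center C = -Q^{-1} m4 for a projection matrix M = [Q | m4]."""
    Q = M[:, :3]
    m4 = M[:, 3]
    # Solve Q C = -m4 rather than computing inv(Q) explicitly.
    return np.linalg.solve(Q, -m4)
```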

Figure panels: Projection Matrix Estimation; Camera Center Estimation

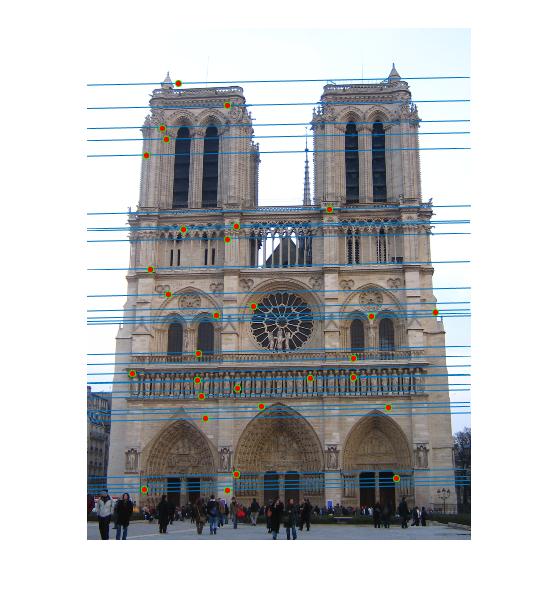

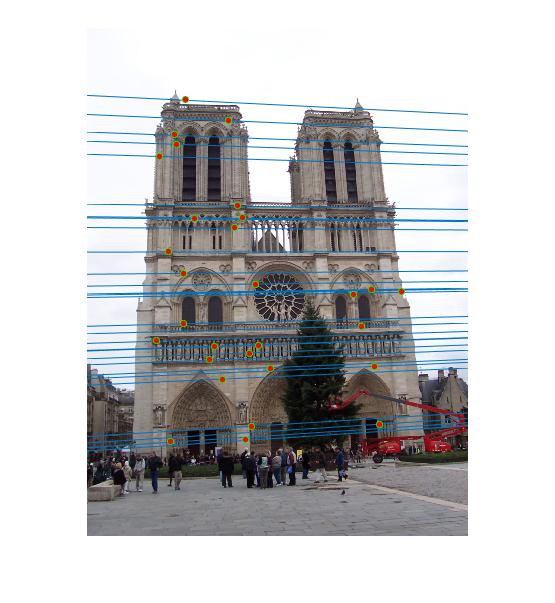

Component 2: Fundamental Matrix Estimation

For the second part of the project, I computed the fundamental matrix F. The fundamental matrix is related to the essential matrix E through the intrinsic (calibration) matrices K and K' of the two views: F = transpose(inverse(K')) E inverse(K), or equivalently E = transpose(K') F K. The fundamental matrix is powerful in that it can be estimated from a set of at least seven, usually eight, corresponding point pairs without ever knowing the projection matrices of the cameras used. Once estimated, it maps each point in one view to an epipolar line in the other view, which constrains where that point's correspondence can lie and supports tasks such as matching and 3D reconstruction.

Similar to the estimation of the projection matrix, the fundamental matrix can be calculated by setting up a system of equations from corresponding points, one equation per pair from the constraint p'^T F p = 0. Because this system is homogeneous and, with noisy points, has no exact solution, I solved it with singular value decomposition, taking the right singular vector associated with the smallest singular value; SVD is a robust way to obtain this least-squares solution. After reshaping that vector to 3x3, F must still be made rank 2 (det(F) = 0), which the least-squares estimate generally does not satisfy. To enforce this, I performed SVD on F again, set the smallest singular value in S to 0, and recombined U * S * transpose(V) to get the constrained F. Computing the fundamental matrix from the provided normalized points produces epipolar lines that pass through all the points in both views, suggesting that the calculated fundamental matrix has “calibrated” the cameras. See the figure below for the epipolar lines calculated in part 2.
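A minimal sketch of the eight-point estimate with the rank-2 step, assuming corresponding points are passed as N x 2 NumPy arrays and the constraint is written as [u' v' 1] F [u v 1]^T = 0. `estimate_fundamental_matrix` is a hypothetical name; this illustrates the technique rather than reproducing the project's implementation.

```python
import numpy as np

def estimate_fundamental_matrix(pts_a, pts_b):
    """Eight-point estimate of F such that [u' v' 1] F [u v 1]^T = 0.

    pts_a, pts_b: (N, 2) corresponding points in image A and image B, N >= 8.
    """
    u, v = pts_a[:, 0], pts_a[:, 1]
    up, vp = pts_b[:, 0], pts_b[:, 1]
    ones = np.ones_like(u)
    # Each row expands x_b^T F x_a = 0 into a linear equation in the 9 entries of F (row-major).
    A = np.column_stack([up * u, up * v, up, vp * u, vp * v, vp, u, v, ones])
    # Homogeneous least squares: f is the right singular vector with the smallest singular value.
    _, _, Vt = np.linalg.svd(A)
    F = Vt[-1].reshape(3, 3)
    # Enforce rank 2 by zeroing the smallest singular value of F and recombining.
    U, S, Vt = np.linalg.svd(F)
    S[2] = 0.0
    return U @ np.diag(S) @ Vt
```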

Figure panels: View 1 Epipolar Lines; View 2 Epipolar Lines

Component 3: Estimate Fundamental Matrix with RANSAC and SIFT features

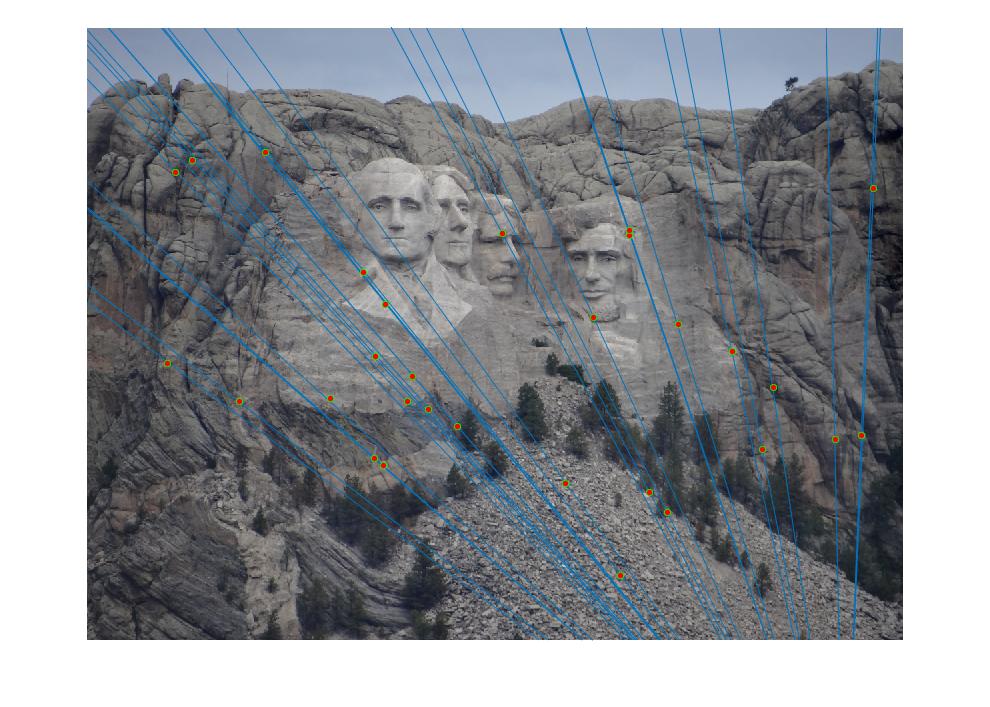

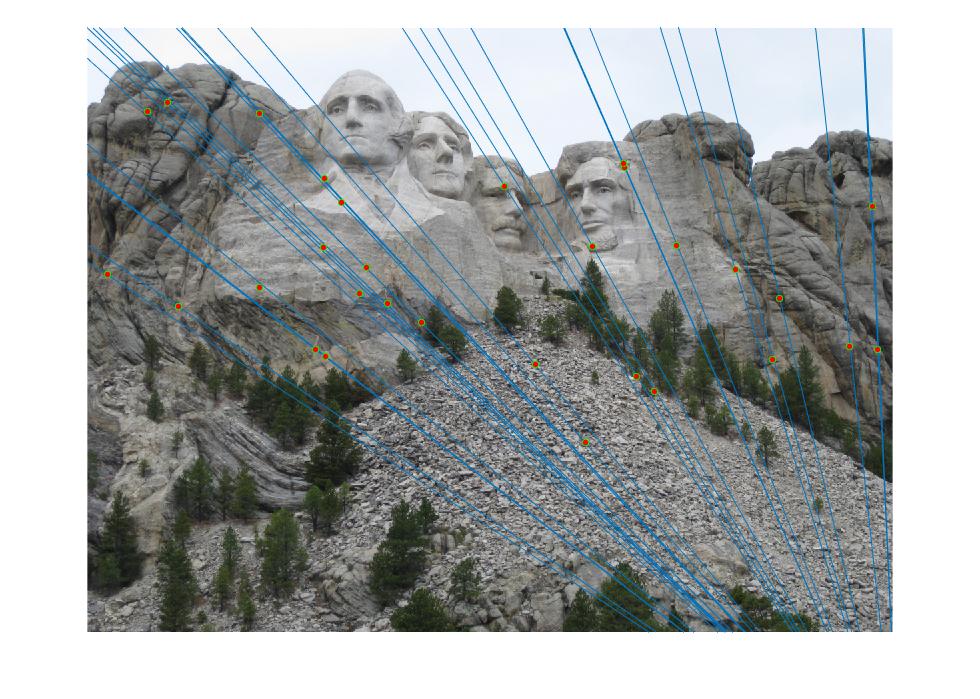

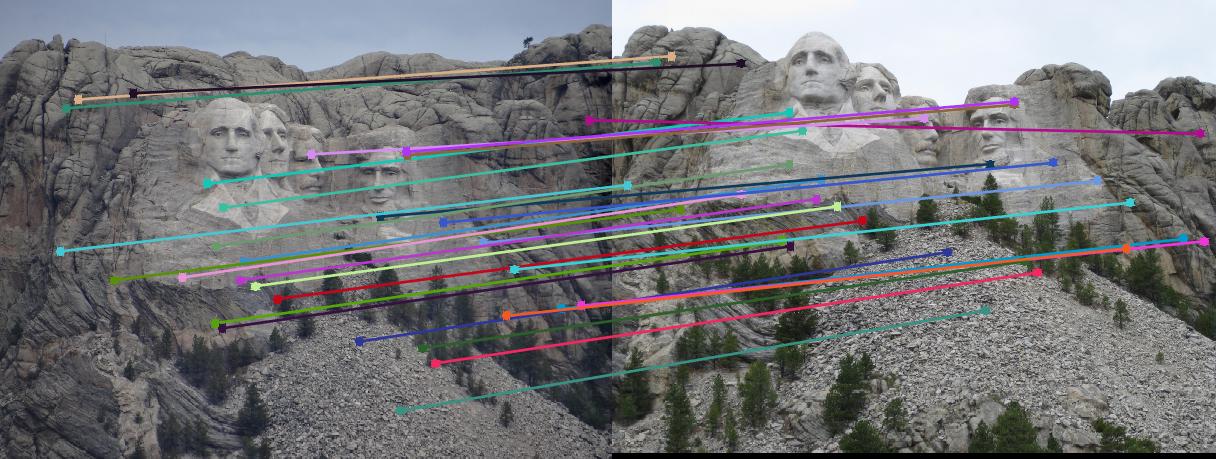

The last part I implemented used RANSAC (RANdom SAmple Consensus) to robustly fit the fundamental matrix to the two image views despite outliers. First, putative corresponding points were found with the VLFeat library's SIFT implementation. Then, I ran RANSAC on these putative matches to compute the best-fit fundamental matrix. I computed the number of RANSAC iterations N with the equation from Professor Hays' lecture slides: N = round(log(1 - p) / log(1 - (1 - e)^s)), where p = 0.99 is the desired probability that at least one sample is free of outliers, e = 0.5 is the assumed fraction of outliers in the SIFT matches, and s = 8 is the number of point pairs sampled to fit each fundamental matrix. With these values, N = 1177. In each iteration, I drew a random subset of eight SIFT matches with randsample() and estimated the fundamental matrix from those eight pairs. To assess each fit, I measured how close p'^T F p is to zero across the matches, where p and p' are the homogeneous image coordinates of a match in the two views, and kept the fit that minimized this error. Below are the results for Mount Rushmore.
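The RANSAC loop described above might look like the following NumPy sketch. The helper names, the inlier `threshold`, and the use of `numpy.random.Generator.choice` in place of MATLAB's randsample() are assumptions for illustration. The error measure is the algebraic |p'^T F p| from the text; scoring each hypothesis by how many matches fall under the threshold is one common RANSAC choice, assumed here.

```python
import math
import numpy as np

def ransac_iterations(p=0.99, e=0.5, s=8):
    """Samples needed so that, with probability p, at least one s-point sample is outlier-free."""
    return int(round(math.log(1 - p) / math.log(1 - (1 - e) ** s)))

def eight_point(pts_a, pts_b):
    """Compact eight-point estimate of F (x_b^T F x_a = 0) with rank 2 enforced."""
    u, v = pts_a[:, 0], pts_a[:, 1]
    up, vp = pts_b[:, 0], pts_b[:, 1]
    A = np.column_stack([up * u, up * v, up, vp * u, vp * v, vp, u, v, np.ones_like(u)])
    F = np.linalg.svd(A)[2][-1].reshape(3, 3)
    U, S, Vt = np.linalg.svd(F)
    S[2] = 0.0
    return U @ np.diag(S) @ Vt

def ransac_fundamental(pts_a, pts_b, threshold, p=0.99, e=0.5, seed=0):
    """Return the F with the most matches whose |x_b^T F x_a| < threshold, plus the inlier mask."""
    rng = np.random.default_rng(seed)
    n = len(pts_a)
    ha = np.hstack([pts_a, np.ones((n, 1))])
    hb = np.hstack([pts_b, np.ones((n, 1))])
    best_F, best_inliers = None, np.zeros(n, dtype=bool)
    for _ in range(ransac_iterations(p, e)):
        # Random minimal sample of 8 matches (the role randsample() plays in MATLAB).
        idx = rng.choice(n, size=8, replace=False)
        F = eight_point(pts_a[idx], pts_b[idx])
        # Algebraic epipolar error of every putative match under this candidate F.
        err = np.abs(np.sum(hb * (ha @ F.T), axis=1))
        inliers = err < threshold
        if inliers.sum() > best_inliers.sum():
            best_F, best_inliers = F, inliers
    return best_F, best_inliers
```

With the defaults, `ransac_iterations()` returns 1177. The algebraic error depends on the coordinate scale, so a threshold that works for one image pair may need retuning for another.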

Figure panels: Rushmore View 1; Rushmore View 2; Rushmore 30 Correspondences

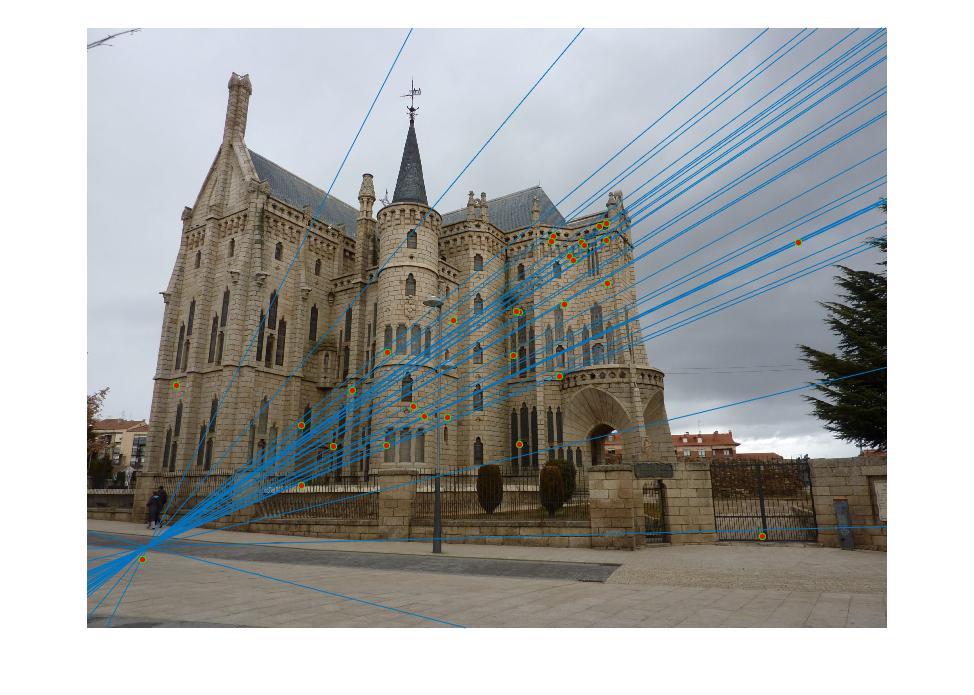

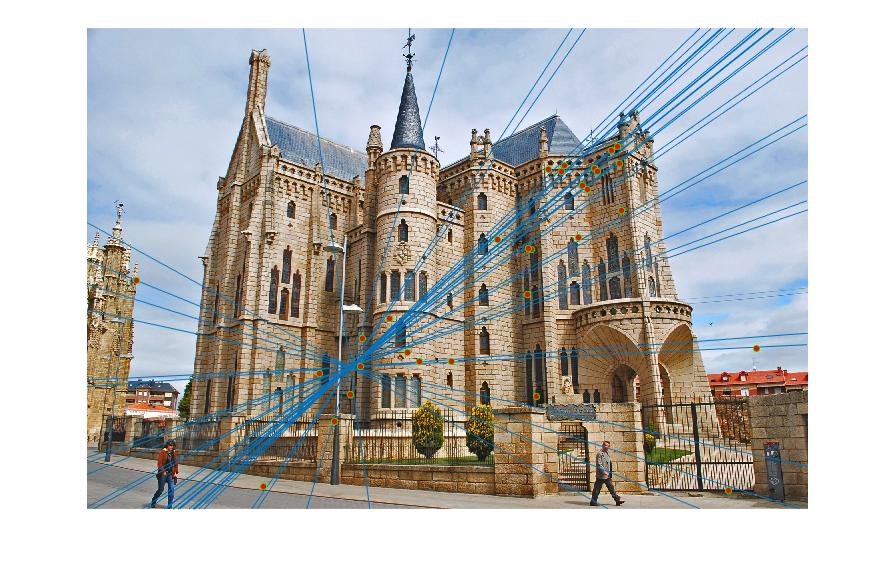

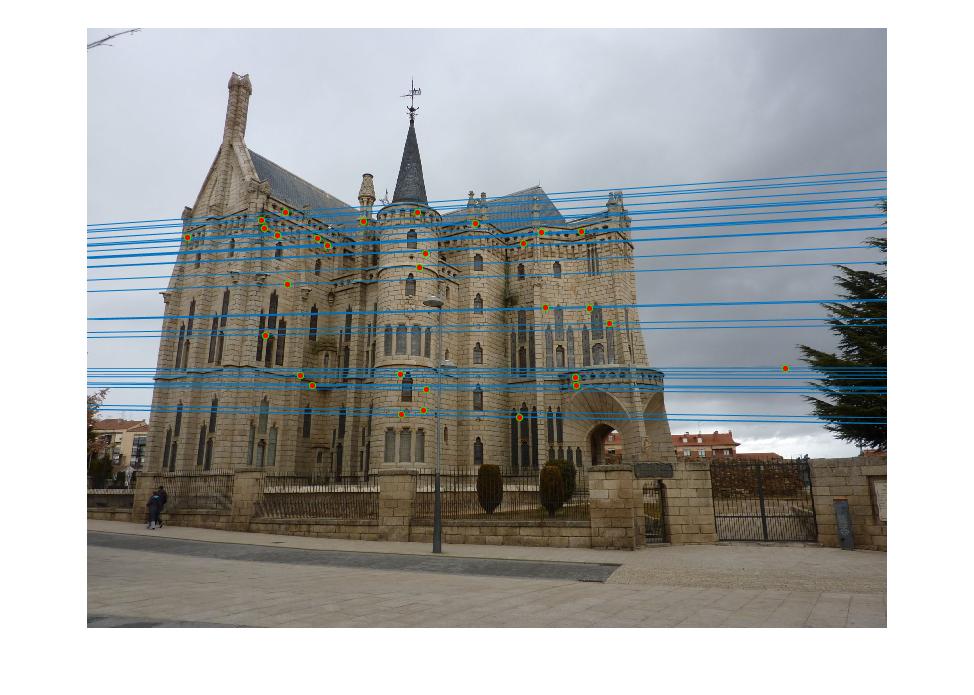

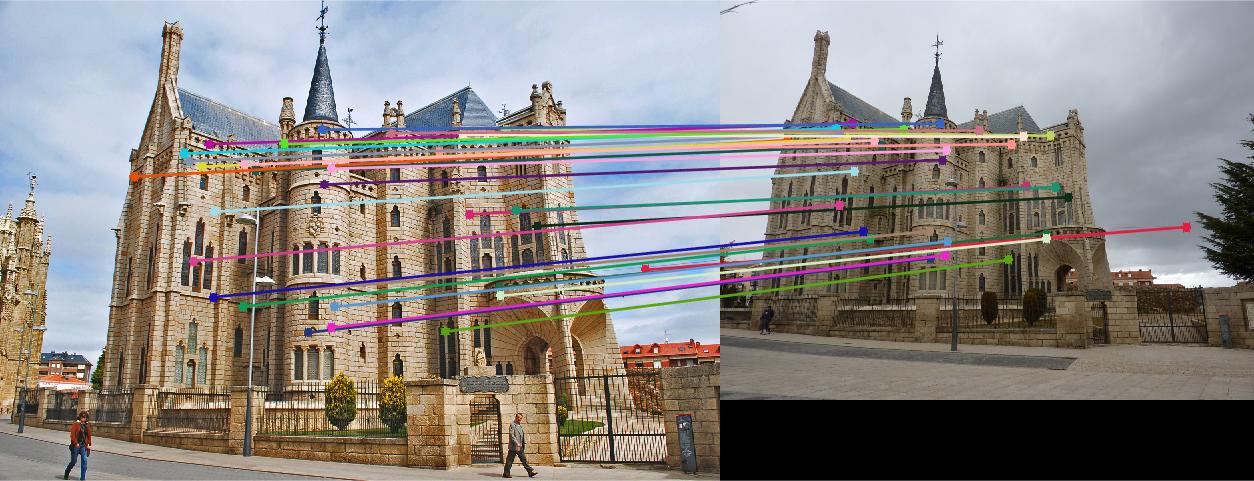

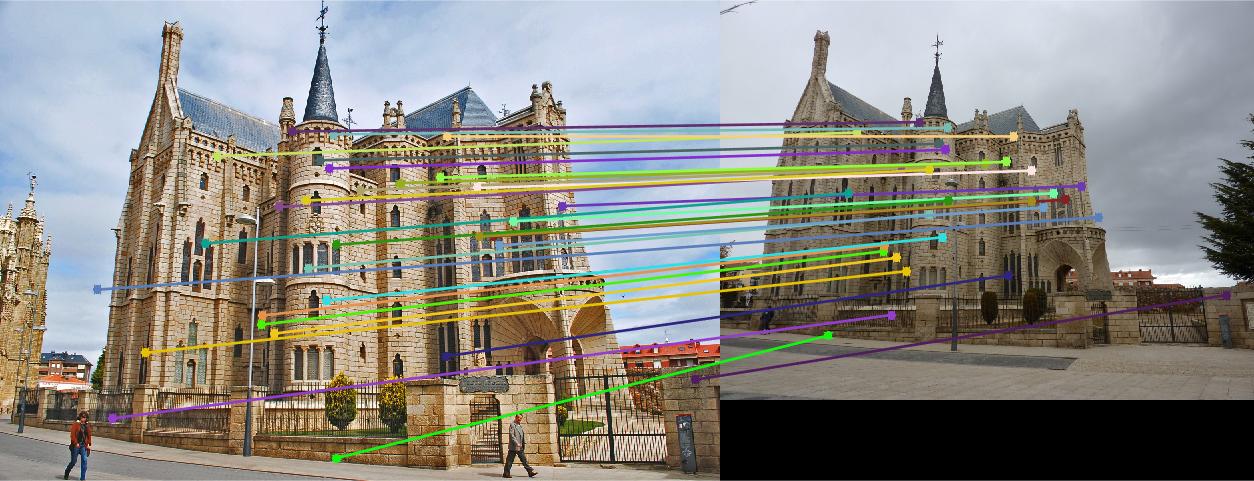

I was still not satisfied with the performance of the RANSAC implementation, so I improved the fundamental matrix estimation by normalizing the coordinates of each image's feature points separately. For each image, I shifted the points so that their mean is 0 and scaled them by the inverse of the distance from the mean to the farthest point. The fundamental matrix estimated from the normalized coordinates must then be transformed back (F = transpose(T') F_norm T, where T and T' are the normalizing transforms of the two images) before it is applied to the original coordinates. This modification significantly improved the results for some of the tougher image pairs, such as Episcopal Gaudi. Below are the image correspondences and their respective epipolar lines for Episcopal Gaudi, without and with normalization.
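The normalization step can be sketched as a 3x3 transform per image, followed by the eight-point fit and the de-normalization F = transpose(T_b) F_norm T_a. This is a hypothetical NumPy illustration assuming the normalization described above (zero mean, farthest point at distance 1); the helper names are invented for this sketch.

```python
import numpy as np

def normalization_transform(pts):
    """3x3 transform that moves the centroid to the origin and puts the farthest point at distance 1."""
    mean = pts.mean(axis=0)
    scale = 1.0 / np.max(np.linalg.norm(pts - mean, axis=1))
    return np.array([[scale, 0.0, -scale * mean[0]],
                     [0.0, scale, -scale * mean[1]],
                     [0.0, 0.0, 1.0]])

def apply_transform(T, pts):
    """Apply a 3x3 transform to N x 2 points via homogeneous coordinates."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ T.T
    return homog[:, :2] / homog[:, 2:3]

def eight_point(pts_a, pts_b):
    """Eight-point estimate of F (x_b^T F x_a = 0) with rank 2 enforced."""
    u, v = pts_a[:, 0], pts_a[:, 1]
    up, vp = pts_b[:, 0], pts_b[:, 1]
    A = np.column_stack([up * u, up * v, up, vp * u, vp * v, vp, u, v, np.ones_like(u)])
    F = np.linalg.svd(A)[2][-1].reshape(3, 3)
    U, S, Vt = np.linalg.svd(F)
    S[2] = 0.0
    return U @ np.diag(S) @ Vt

def estimate_fundamental_matrix_normalized(pts_a, pts_b):
    """Normalize each image's points, fit F, then map F back to pixel coordinates."""
    T_a = normalization_transform(pts_a)
    T_b = normalization_transform(pts_b)
    F_norm = eight_point(apply_transform(T_a, pts_a), apply_transform(T_b, pts_b))
    # x_b^T F x_a = (T_b x_b)^T F_norm (T_a x_a), so F = T_b^T F_norm T_a.
    F = T_b.T @ F_norm @ T_a
    return F / np.linalg.norm(F)
```

Normalization keeps the columns of the linear system at comparable magnitudes, which is why it tends to help most with pixel-scale coordinates.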

Figure panels (no normalization): Gaudi View 1; Gaudi View 2; Gaudi Correspondences

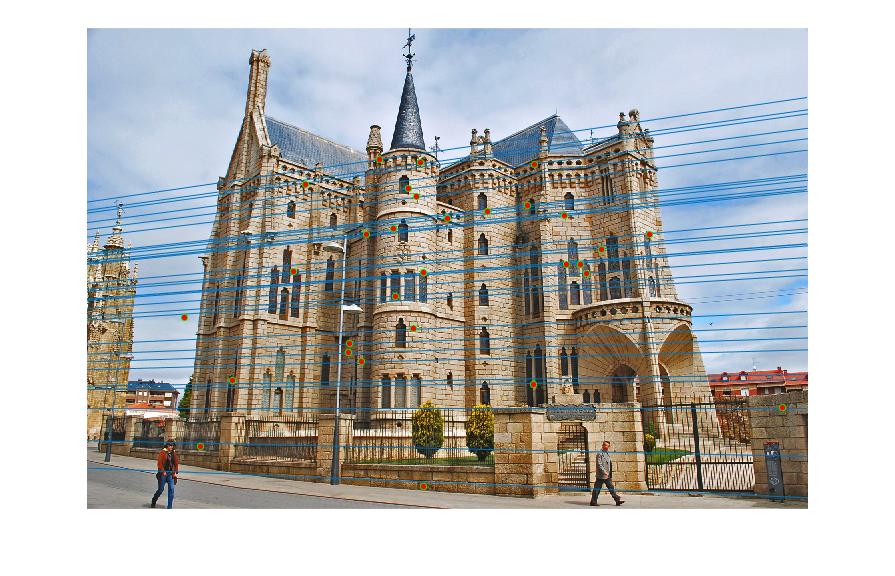

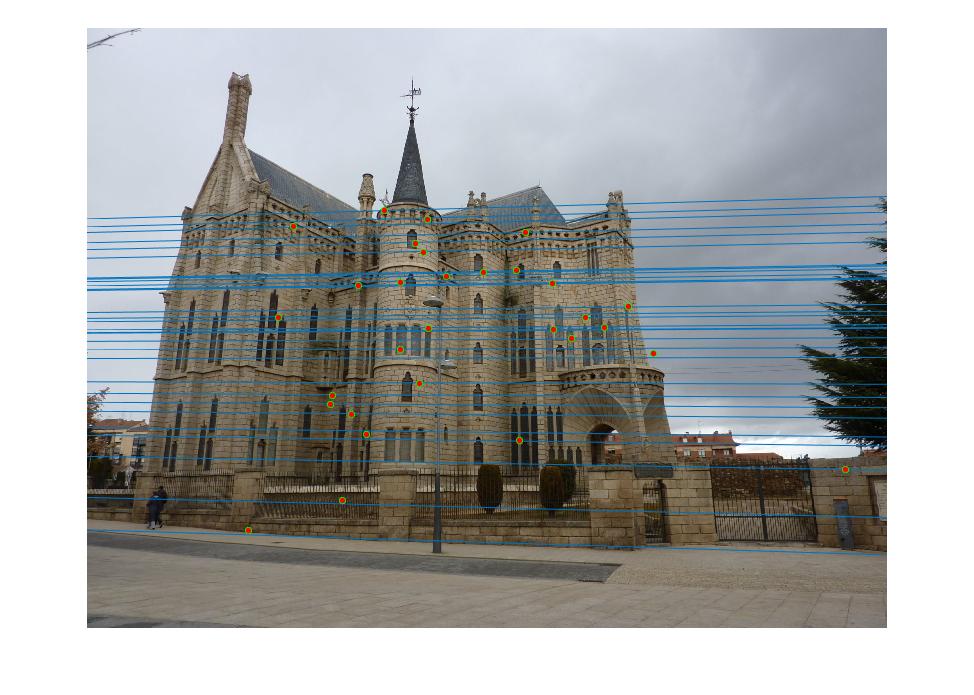

Figure panels (with normalization): Gaudi View 1; Gaudi View 2; Gaudi Correspondences

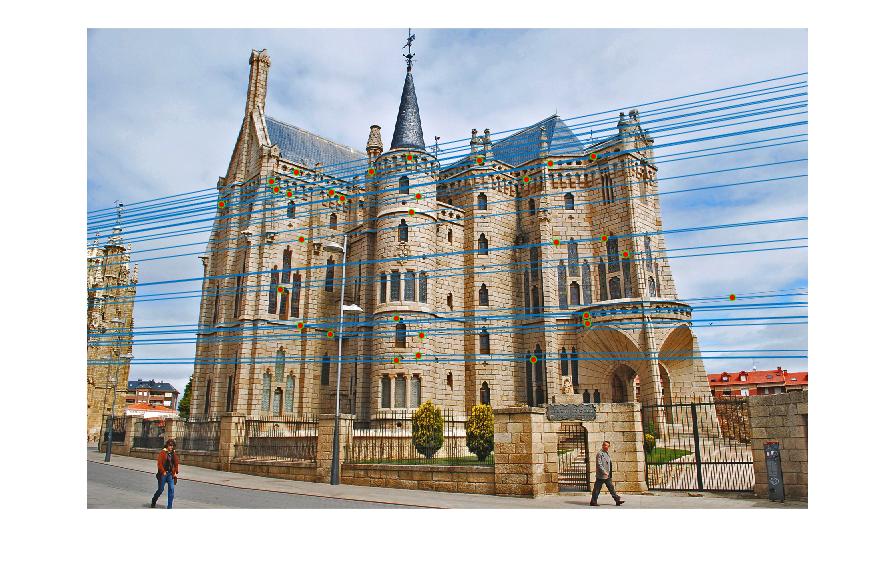

Even with normalization, the correspondences for Episcopal Gaudi were sometimes incorrect. I improved the accuracy by increasing the number of RANSAC iterations and by thresholding the error of each SIFT match to remove outliers, displaying only the matches with confidence above this threshold (of which there were still several hundred).
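The final thresholding step could look like the following hypothetical helper, which keeps only the matches whose algebraic error |p'^T F p| falls below a chosen threshold and orders them from most to least confident. The threshold value is an assumption that depends on the coordinate scale and would need tuning per image pair.

```python
import numpy as np

def filter_matches(F, pts_a, pts_b, threshold):
    """Keep matches whose algebraic epipolar error |x_b^T F x_a| is below threshold, best first."""
    n = len(pts_a)
    ha = np.hstack([pts_a, np.ones((n, 1))])
    hb = np.hstack([pts_b, np.ones((n, 1))])
    # Row-wise dot product x_b^T (F x_a) for every match.
    err = np.abs(np.einsum("ij,ij->i", hb, ha @ F.T))
    keep = np.flatnonzero(err < threshold)
    order = keep[np.argsort(err[keep])]
    return pts_a[order], pts_b[order], err[order]
```

For a pure horizontal translation, F = [[0, 0, 0], [0, 0, -1], [0, 1, 0]] and the error reduces to |v - v'|, so only matches that lie on nearly the same image row survive.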

Figure panels: Improved Gaudi View 1; Improved Gaudi View 2; Improved Gaudi Correspondences

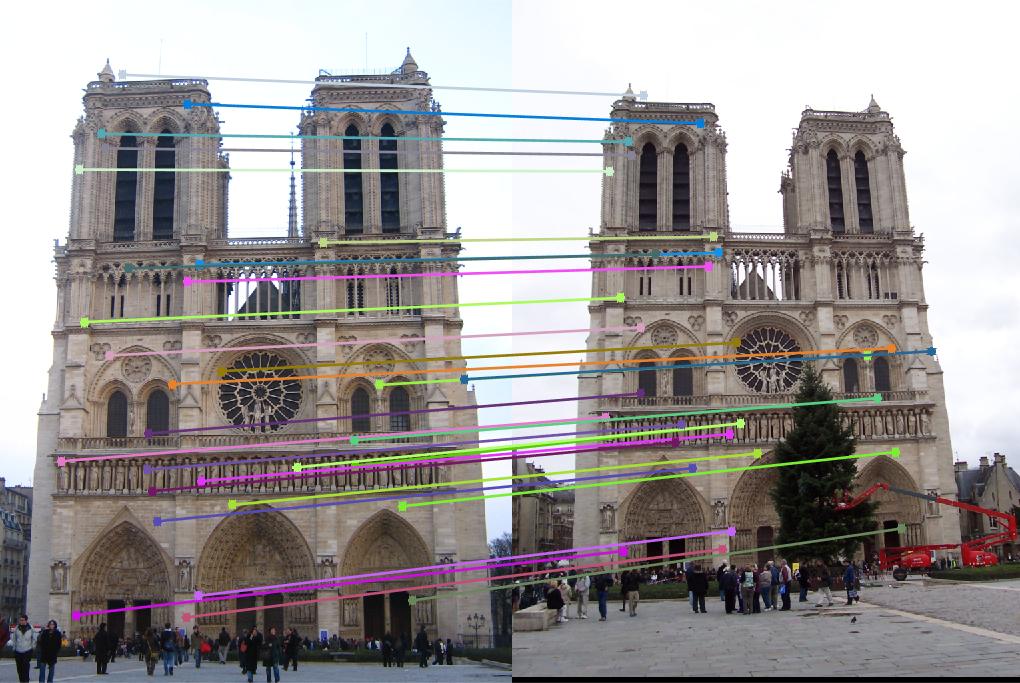

Component 4: Additional Images

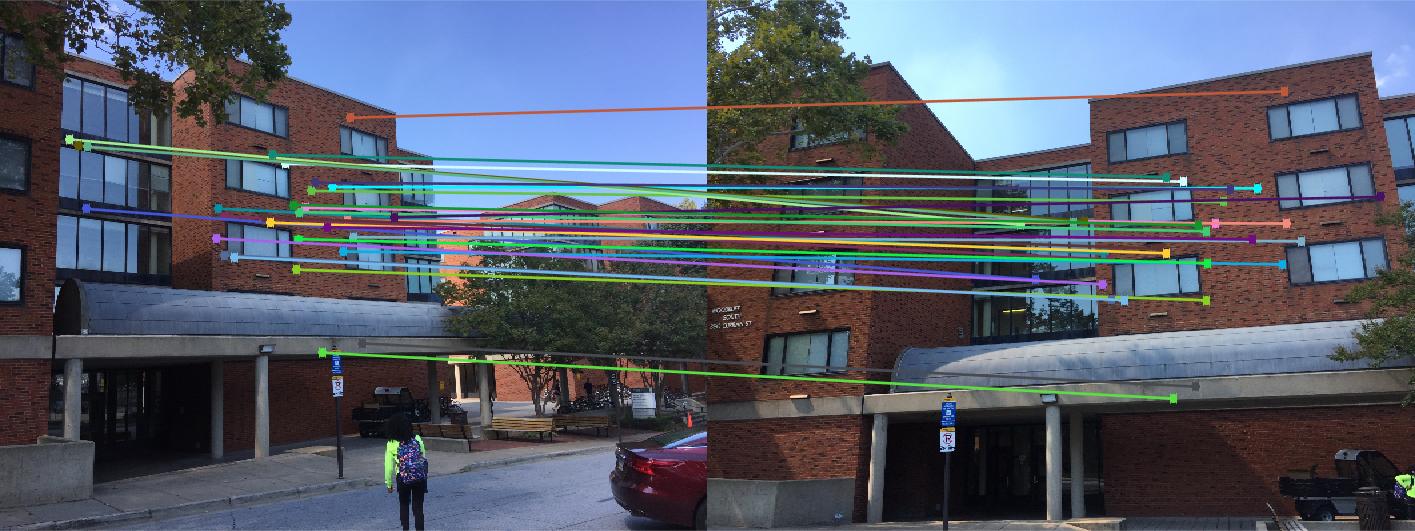

Below are results for additional image pairs, using the fundamental matrix estimation with coordinate normalization.

Figure panels: Notre Dame View 1; Notre Dame View 2; Notre Dame Correspondences

Figure panels: Woodruff View 1; Woodruff View 2; Woodruff Correspondences

Component 5: Conclusion

By first calibrating the camera through the projection matrix (and recovering the camera center from it), then estimating the fundamental matrix with coordinate normalization, and finally using RANSAC to keep only the SIFT matches that are not outliers, I was able to implement camera calibration and find accurate correspondences between multiple views.