Project 4 / Scene Recognition with Bag of Words

For this project we were tasked with performing scene recognition using combinations of different feature and classifier techniques in order to compare their relative effectiveness. We had to implement tiny image features, bag-of-SIFT features, a nearest neighbor classifier, and a support vector machine (SVM) classifier. The combinations we tested were as follows:

- Tiny Images + Nearest Neighbors

- Bag of SIFT + Nearest Neighbors

- Bag of SIFT + Support Vector Machines

Tiny Images

This is perhaps the easiest part of the assignment: each image is shrunk to 16x16 pixels and the pixel values are flattened into a 256-dimensional feature vector. I chose to normalize each vector to unit length to get better results, which increased my accuracy by 0.2%. There isn't much else to say; the code is below.

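```matlab
% Tiny image features: shrink each image to 16x16 and flatten it to a 1x256 row.
num_images = size(image_paths, 1);
image_feats = zeros(num_images, 256);

for i = 1:num_images
    img = im2single(imread(image_paths{i}));
    if size(img, 3) == 3
        img = rgb2gray(img);   % guard in case an image is stored in color
    end
    feat = reshape(imresize(img, [16 16]), [1 256]);

    % normalize the whole feature row to unit length
    image_feats(i,:) = feat / norm(feat);
end
```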

Bag of SIFT

This is the other feature extraction part of the assignment. We start by building a vocabulary of size 200. To do this, we extract SIFT features from every image with a relatively large step size (I chose a step size of 20), then run k-means clustering on all of these features with the number of clusters equal to the vocabulary size. Each cluster center becomes one "visual word".

Once the vocabulary is built, we can build the bag-of-SIFT features themselves. For each image we extract SIFT features again: for my best results I used a step size of 5 with fast SIFT off, and for faster results I used a step size of 10 with fast SIFT on. We then assign each SIFT feature to its nearest cluster center, which produces a histogram of counts over the clusters. Finally, I normalize the histogram so that images that yield a large number of SIFT features do not overpower those that yield fewer.
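The histogram step described above can be sketched in plain MATLAB, without any VLFeat calls. This is a minimal sketch that assumes the vocabulary has already been built (in the project it would be a 200 x 128 matrix of k-means centers) and that one image's descriptors are stored one per row; VLFeat's `vl_dsift` returns descriptors one per column, so a transpose would be needed. The tiny 2-D values are made up for illustration. The writeup does not say which norm was used on the histogram; this sketch assumes L1 (sum-to-one) normalization as one reasonable choice.

```matlab
% Toy stand-ins (hypothetical values): a 3-word vocabulary in 2-D instead of
% 200 x 128 SIFT centers, and four descriptors from a single image.
vocab = [0 0; 10 10; 0 10];              % vocab_size x D cluster centers
descriptors = [1 1; 9 9; 11 10; 0 9];    % num_descriptors x D for one image

% Squared Euclidean distance from every descriptor (row) to every
% cluster center (column), using |a|^2 + |b|^2 - 2*a*b'.
d2 = sum(descriptors.^2, 2) + sum(vocab.^2, 2)' - 2 * (descriptors * vocab');

% Assign each descriptor to its nearest visual word.
[~, word] = min(d2, [], 2);

% Count the descriptors that fell into each word, then normalize (L1, an
% assumed choice) so the histogram sums to 1 regardless of descriptor count.
counts = accumarray(word, 1, [size(vocab, 1) 1])';
feat = counts / sum(counts);
```

Here the four descriptors land in words 1, 2, 2 and 3, giving counts of [1 2 1] and a normalized feature of [0.25 0.5 0.25]. With real data this would run once per image, filling one row of the feature matrix.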

Nearest Neighbor

This is the more naive of the two classifiers we created. For this part I implemented single nearest neighbor: I computed the distance from each test feature to every training feature, then assigned each test feature the label of the closest training feature. I also attempted k-nearest neighbor, as can be seen in the commented-out code; however, it made no noticeable difference to the overall accuracy.
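Both variants described above can be sketched with a single distance matrix. This is a minimal sketch on made-up 2-D features (one image per row, with the variable names used elsewhere in this writeup); it assumes squared Euclidean distance, which gives the same nearest neighbors as plain Euclidean distance. The k-NN part uses a simple majority vote, which is one common choice and not necessarily what the commented-out code did.

```matlab
% Toy features and labels (hypothetical values); rows are images.
train_image_feats = [0 0; 0 1; 5 5; 6 5];
train_labels = {'Forest'; 'Forest'; 'Street'; 'Street'};
test_image_feats = [0.2 0.3; 5.2 4.9];

% Squared Euclidean distance from each test feature (row)
% to each training feature (column).
d2 = sum(test_image_feats.^2, 2) + sum(train_image_feats.^2, 2)' ...
     - 2 * (test_image_feats * train_image_feats');

% 1-NN: copy the label of the closest training feature.
[~, nearest] = min(d2, [], 2);
predicted_1nn = train_labels(nearest);

% k-NN variant: majority vote among the k closest training features.
k = 3;
[~, order] = sort(d2, 2);
[cats, ~, label_idx] = unique(train_labels);
votes = label_idx(order(:, 1:k));        % num_test x k category indices
predicted_knn = cats(mode(votes, 2));    % on ties, mode picks the smaller index
```

Both variants label the two test points 'Forest' and 'Street'. Because each point's closest neighbors already agree, the vote does not change anything here, which is consistent with k-NN making little difference in practice.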

Support Vector Machines

This is the more sophisticated of the two classifiers we created. For it I trained a set of 1-vs-all linear SVMs, one per category, using VLFeat's vl_svmtrain.

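```matlab
% Train one linear 1-vs-all SVM per category with VLFeat's vl_svmtrain.
models = cell(num_categories, 1);
biases = cell(num_categories, 1);

for i = 1:num_categories
    % +1 for training images of category i, -1 for all others
    labels = -1 * ones(size(train_image_feats, 1), 1);
    matching = strcmp(categories{i}, train_labels);
    labels(matching) = 1;

    % vl_svmtrain expects one feature per column (D x N); lambda is the
    % regularization weight (0.00001 worked best for me)
    [model, bias] = vl_svmtrain(train_image_feats', labels', lambda);
    models{i} = model;
    biases{i} = bias;
end
```

I tried many values of lambda from 0.00000001 to 1 and found that 0.00001 gave the highest accuracy in every configuration I tested. This is most likely an underfitting/overfitting trade-off: the larger lambda is, the closer the SVM gets to underfitting, and the smaller lambda is, the closer it gets to overfitting. This is because lambda is the regularization parameter: vl_svmtrain minimizes (lambda/2)·||w||^2 plus the average hinge loss over the training data. A large lambda therefore pushes toward a small-norm, overly simple hyperplane that ignores much of the training data, while a small lambda lets the hyperplane fit the training set as tightly as possible, noise included.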
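The training loop shown earlier only produces the per-category classifiers. At test time, one common way to use a set of 1-vs-all SVMs is to score every test feature with every classifier (w' * x + b) and pick the category with the highest score. This sketch assumes `models` and `biases` have the shapes produced by that loop (each w a D x 1 column, each bias a scalar); the category names, weights, and test features below are made up for illustration and stand in for trained values.

```matlab
% Hypothetical 1-vs-all models for 3 categories in a 2-D feature space.
categories = {'Forest'; 'Street'; 'Coast'};
models = {[1; 0]; [0; 1]; [-1; -1]};     % each w is D x 1
biases = {0; -0.5; 0.2};                 % each bias is a scalar
test_image_feats = [2 0; 0 3; -1 -2];    % N x D, one row per test image

W = cat(2, models{:});                   % D x num_categories
b = cat(2, biases{:});                   % 1 x num_categories
scores = test_image_feats * W + b;       % N x num_categories confidences

% Each test image goes to the category whose SVM is most confident.
[~, best] = max(scores, [], 2);
predicted_categories = categories(best);
```

Taking the maximum score, rather than only checking which SVMs give a positive score, guarantees exactly one label per image even when several classifiers (or none) fire.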

Results

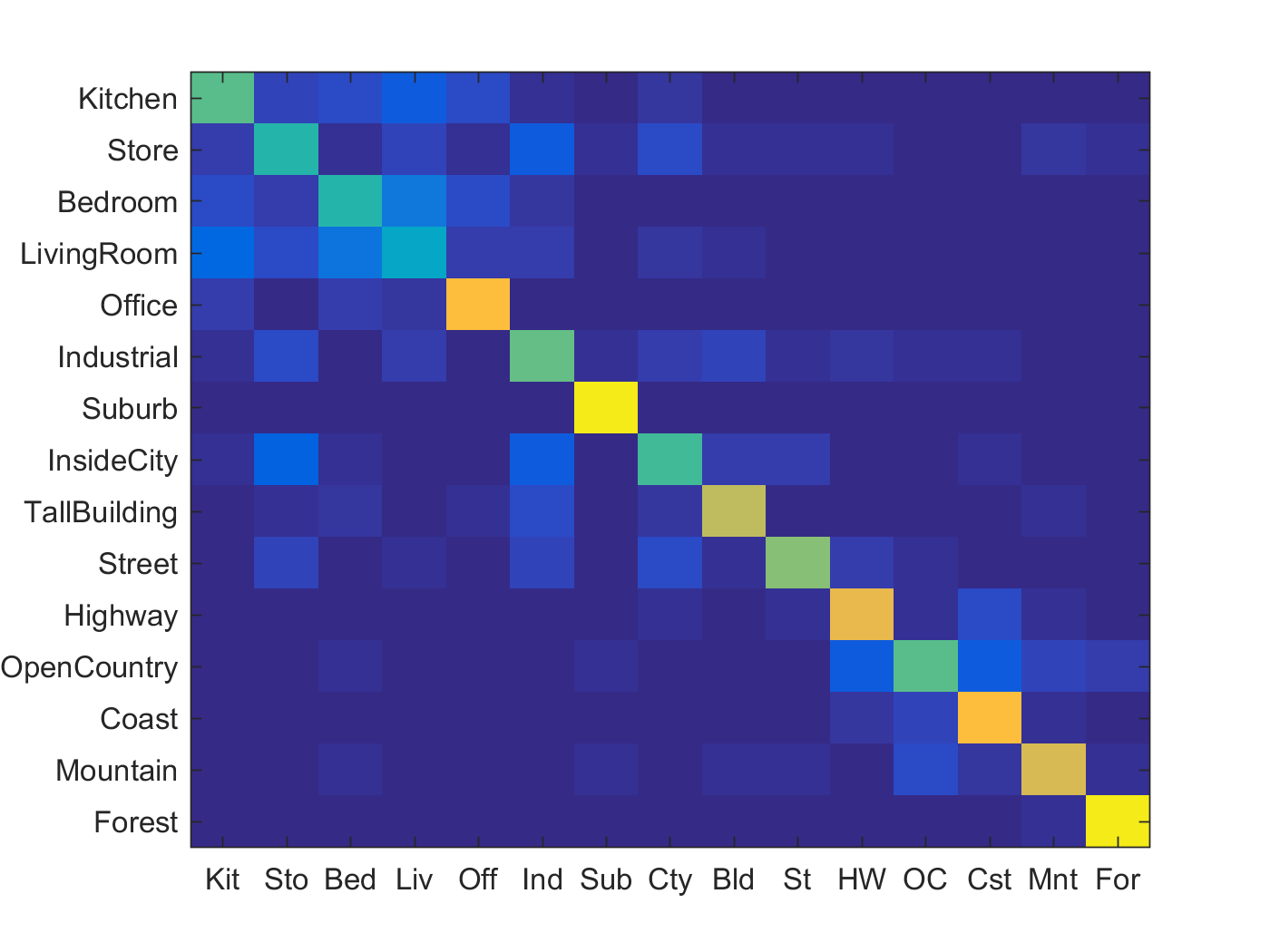

Accuracy is 0.667

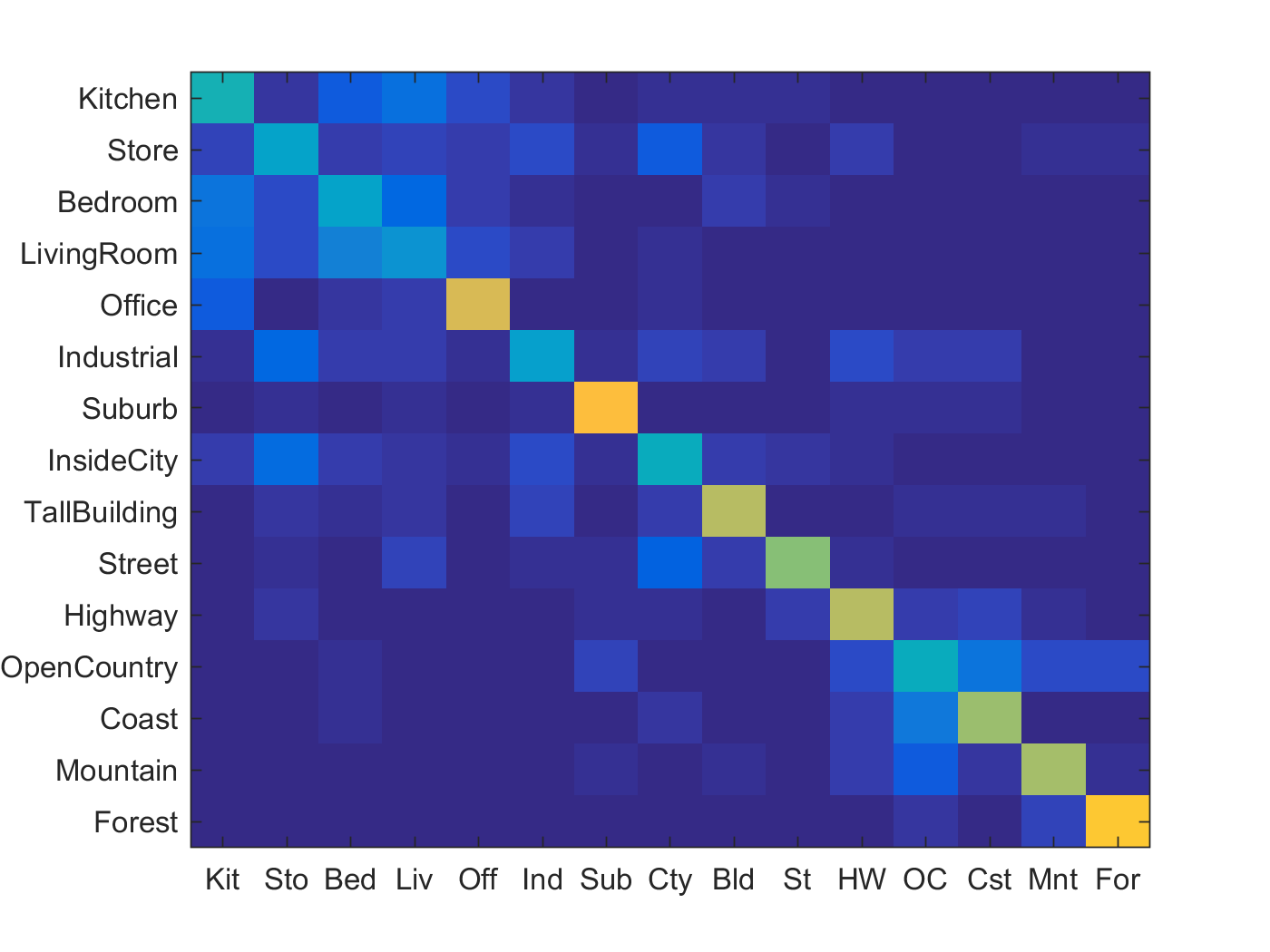

Accuracy is 0.562

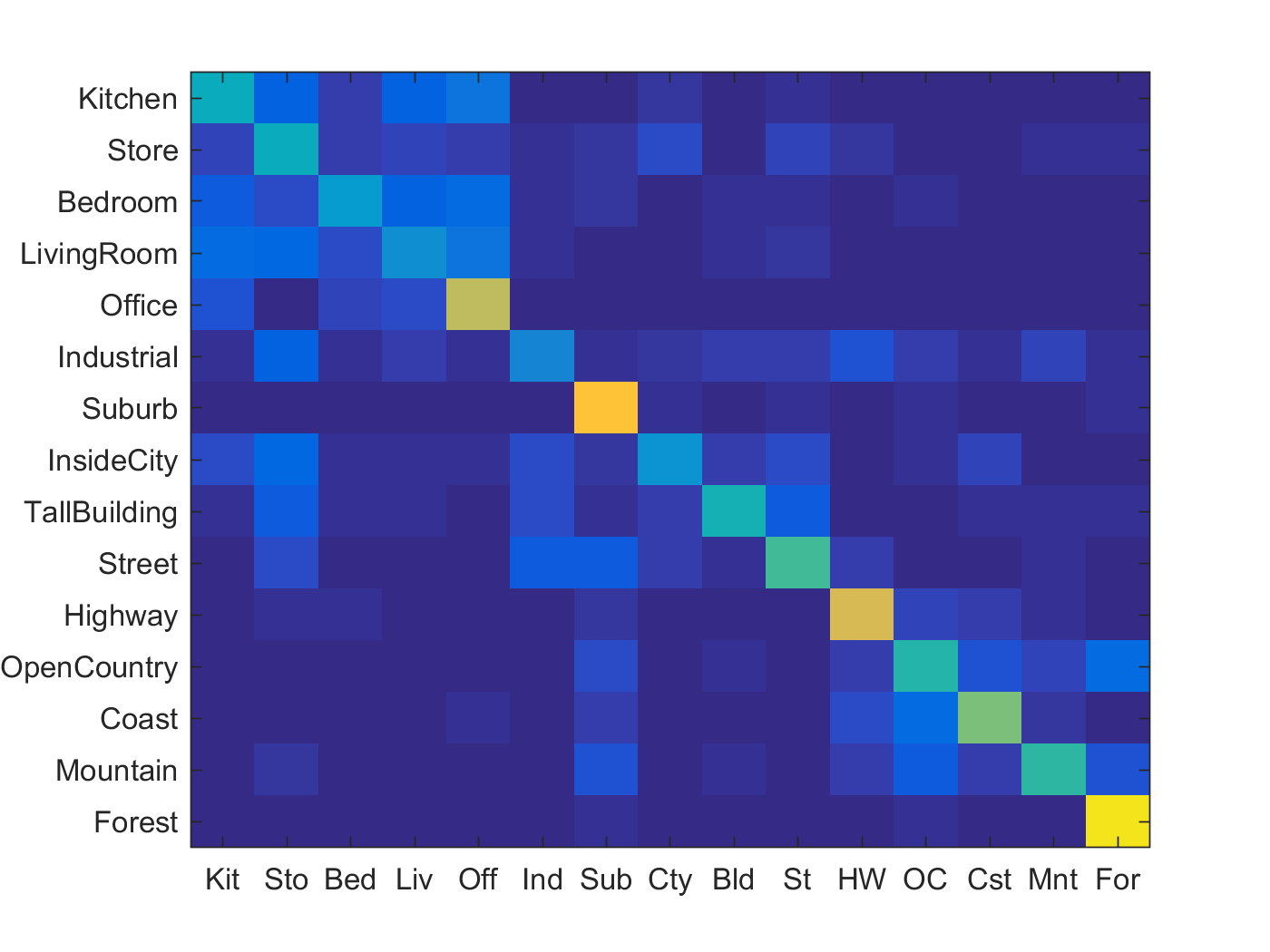

Accuracy is 0.521

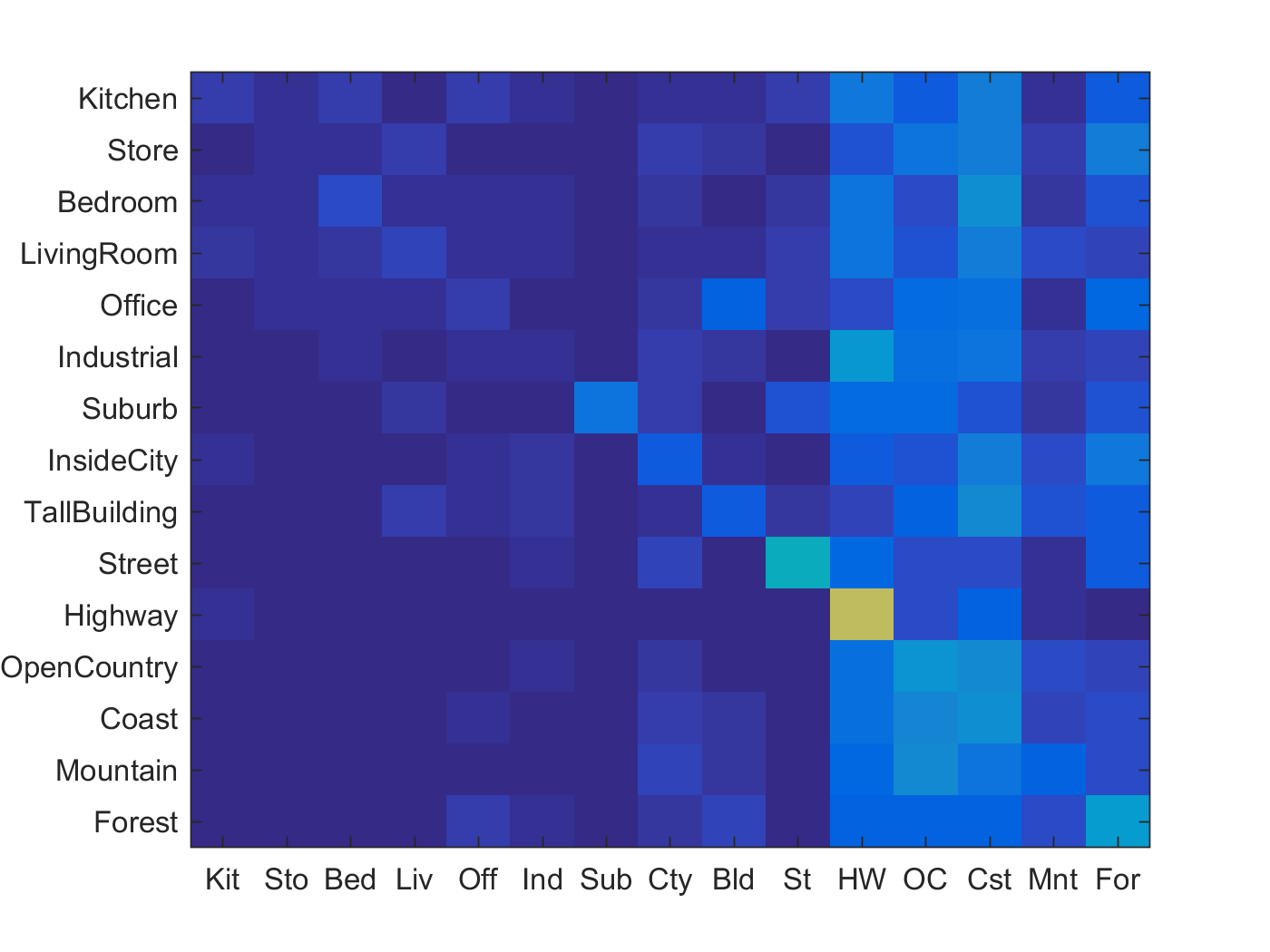

Accuracy is 0.193

| Category name | Accuracy | False positives (true label) | False negatives (wrong predicted label) |
|---|---|---|---|
| Kitchen | 0.080 | Industrial, Forest | Highway, Forest |
| Store | 0.040 | Office, TallBuilding | TallBuilding, Coast |
| Bedroom | 0.200 | InsideCity, Store | OpenCountry, Mountain |
| LivingRoom | 0.100 | Office, Kitchen | Bedroom, Office |
| Office | 0.190 | Mountain, Bedroom | Industrial, InsideCity |
| Industrial | 0.130 | LivingRoom, TallBuilding | InsideCity, Store |
| Suburb | 0.470 | Coast, InsideCity | Kitchen, Street |
| InsideCity | 0.030 | Suburb, Coast | TallBuilding, Store |
| TallBuilding | 0.180 | Store, Forest | LivingRoom, Coast |
| Street | 0.600 | Kitchen, Store | Highway, Suburb |
| Highway | 0.750 | Suburb, Industrial | Coast, Mountain |
| OpenCountry | 0.310 | Forest, Kitchen | Suburb, Coast |
| Coast | 0.400 | Mountain, Mountain | InsideCity, OpenCountry |
| Mountain | 0.290 | Store, Bedroom | Kitchen, Forest |
| Forest | 0.140 | Mountain, Street | Suburb, Kitchen |
