Project 4 / Scene Recognition with Bag of Words

Feature Representation

In this project, two different feature representations were explored: tiny images and bag of sifts. Features succinctly describe the input image in a standardized form, which allows for analysis and machine learning.

The tiny image feature representation was used with the nearest neighbor classifier, while the bag of sifts representation was used with both the nearest neighbor classifier and the support vector machine classifier.

Tiny Images

The tiny images feature representation resizes an input image to a small size, then vectorizes the shrunken 2-D image into a 1-D vector. This resulting 1-D vector is the feature which describes the image.

In practice, the most effective size for the tiny image representation was found to be 16 x 16, which results in a feature vector of size 256.
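The resize-then-flatten step can be sketched as follows. This is a minimal illustration, not the project's code: it assumes a grayscale image stored as a list of rows and uses simple block averaging as a stand-in for whatever resize routine was actually used. The function name `tiny_image` is hypothetical.

```python
def tiny_image(image, size=16):
    """Shrink a grayscale image (list of rows) to size x size by block
    averaging, then flatten it row by row into a 1-D feature vector.

    Assumes the image is at least `size` pixels in each dimension.
    """
    height, width = len(image), len(image[0])
    feature = []
    for i in range(size):
        # Source rows that map onto output row i.
        r0, r1 = i * height // size, (i + 1) * height // size
        for j in range(size):
            # Source columns that map onto output column j.
            c0, c1 = j * width // size, (j + 1) * width // size
            block = [image[r][c] for r in range(r0, r1) for c in range(c0, c1)]
            feature.append(sum(block) / len(block))
    return feature


# Usage: a synthetic 32 x 32 image whose pixel value equals its row index.
image = [[float(r) for _ in range(32)] for r in range(32)]
feature = tiny_image(image)
print(len(feature))  # 256
```

A 16 x 16 target gives the 256-element vector described above, regardless of the input resolution.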

Bag of Sifts

The bag of sifts feature representation is much more complex than the tiny image method. Bag of sifts first builds a vocabulary from a set of training images. This vocabulary is generated by aggregating sift features from all of the training images, then clustering them into k clusters via the k-means algorithm. The vocabulary is then defined to be the k cluster centers returned by k-means.
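The clustering step can be sketched with a plain Lloyd's k-means loop. This is an illustrative sketch under stated assumptions: sift extraction is not shown, descriptors are assumed to arrive as lists of numbers, and the centers are initialized from evenly spaced descriptors to keep the example deterministic (random or k-means++ initialization is more usual). The name `build_vocabulary` is hypothetical.

```python
import math


def build_vocabulary(descriptors, k, iterations=20):
    """Cluster descriptors with Lloyd's k-means and return the k centers."""
    n = len(descriptors)
    # Deterministic initialization (an assumption made for this sketch).
    centers = [list(descriptors[i * n // k]) for i in range(k)]
    for _ in range(iterations):
        # Assignment step: group each descriptor with its closest center.
        clusters = [[] for _ in range(k)]
        for d in descriptors:
            nearest = min(range(k), key=lambda c: math.dist(d, centers[c]))
            clusters[nearest].append(d)
        # Update step: move each center to the mean of its members.
        for c, members in enumerate(clusters):
            if members:  # keep the old center if a cluster ends up empty
                centers[c] = [sum(col) / len(members) for col in zip(*members)]
    return centers


# Usage: two well-separated groups of toy 2-D "descriptors".
descriptors = [[0, 0], [0, 1], [1, 0], [1, 1],
               [10, 10], [10, 11], [11, 10], [11, 11]]
print(build_vocabulary(descriptors, k=2))  # [[0.5, 0.5], [10.5, 10.5]]
```

Real sift descriptors are 128-dimensional, but the loop is the same.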

Bag of sifts then uses this vocabulary to create image features. For each image, sift descriptors are generated then binned into a histogram according to the closest cluster center. This histogram is then L2-normalized and used as the image feature.
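The binning and normalization step might look like the sketch below. It assumes the descriptors for one image and the vocabulary are both given as lists of equal-length numeric vectors; the function name `bag_of_words` is hypothetical.

```python
import math


def bag_of_words(descriptors, vocabulary):
    """Count descriptors per nearest vocabulary word, then L2-normalize."""
    histogram = [0.0] * len(vocabulary)
    for d in descriptors:
        # Index of the cluster center closest to this descriptor (L2 distance).
        nearest = min(range(len(vocabulary)),
                      key=lambda w: math.dist(d, vocabulary[w]))
        histogram[nearest] += 1.0
    norm = math.sqrt(sum(h * h for h in histogram))
    # Guard against an image that produced no descriptors.
    return [h / norm for h in histogram] if norm > 0 else histogram


# Usage: three descriptors fall near the first word and four near the second.
vocabulary = [[0, 0], [10, 10]]
descriptors = [[0, 1], [1, 0], [1, 1], [9, 9], [10, 11], [11, 10], [10, 10]]
print(bag_of_words(descriptors, vocabulary))  # [0.6, 0.8]
```

Normalizing the histogram keeps images with many descriptors from dominating images with few.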

Classification

Classification algorithms take a set of training data, classification labels for the training data, and test data. From these inputs, they assign a predicted label to each item of the test data.

Two different classifiers were used in this project: nearest neighbor and support vector machine (SVM). The nearest neighbor classifier was used with both tiny image and bag of sift feature representations, while the SVM classifier was only used with the bag of sift representation.

Nearest Neighbor

The nearest neighbor algorithm is very simple. For a given test feature, return the majority classification label of the k nearest neighbors, where nearest is defined by some distance metric. In this project, k was chosen to be 1 and the distance metric used was L2 distance.
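For the k = 1 case used here, the majority vote reduces to copying the label of the single closest training feature. A minimal sketch, assuming features are plain numeric lists (the function name is hypothetical):

```python
import math


def nearest_neighbor_classify(train_features, train_labels, test_features):
    """1-NN: each test feature takes the label of its closest training feature."""
    predictions = []
    for x in test_features:
        # Index of the training feature with the smallest L2 distance to x.
        best = min(range(len(train_features)),
                   key=lambda i: math.dist(x, train_features[i]))
        predictions.append(train_labels[best])
    return predictions


# Usage with toy 2-D features.
train_features = [[0, 0], [1, 1], [5, 5]]
train_labels = ["Kitchen", "Kitchen", "Forest"]
print(nearest_neighbor_classify(train_features, train_labels,
                                [[0.2, 0.1], [4, 6]]))  # ['Kitchen', 'Forest']
```

There is no training step: all the work happens at prediction time, which costs one distance computation per training example for every test feature.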

Support Vector Machine

SVMs are a more complicated classifier than nearest neighbor. A linear SVM divides the input feature space in two with a hyperplane: all inputs on one side of the hyperplane are labeled positive, and the inputs on the other side are labeled negative. SVMs can also use kernel functions to map the input space into a more linearly separable space. To accommodate multiple classification labels, a one-versus-all scheme was used: one binary SVM was trained per category, and the most confident label (i.e. the one whose classifier places the input farthest on the positive side of its hyperplane) was reported as the label.
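The one-versus-all decision rule can be sketched as below. Training is not shown: the sketch assumes each category already has a trained linear classifier given as a (weights, bias) pair, and it uses the raw score w · x + b as the confidence, which is an assumption about how confidence is measured. The weights in the usage example are made up for illustration.

```python
def one_vs_all_predict(feature, classifiers):
    """Return the label whose linear classifier scores the feature highest.

    `classifiers` maps each label to a (weights, bias) pair.
    """
    def score(label):
        weights, bias = classifiers[label]
        return sum(w * x for w, x in zip(weights, feature)) + bias

    return max(classifiers, key=score)


# Usage with two hypothetical classifiers over 2-D features.
classifiers = {
    "Coast": ([1.0, 0.0], -0.5),
    "Forest": ([0.0, 1.0], -0.5),
}
print(one_vs_all_predict([0.9, 0.1], classifiers))  # Coast
print(one_vs_all_predict([0.2, 0.7], classifiers))  # Forest
```

Because the maximum is taken over all categories, a label is always returned even when every individual classifier scores the input as negative.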

For this project, the SVM regularizing constant lambda was 0.0001. No kernel function was used to manipulate the input space.
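To show where the regularization constant enters, one common way to write the linear SVM training objective is given below. This formulation is an assumption, since the exact objective depends on the solver used. Here $w$ and $b$ are the hyperplane parameters, $x_i$ are the training features, $y_i \in \{-1, +1\}$ are the binary labels of a single one-versus-all problem, and $n$ is the number of training examples.

$$
\min_{w,\,b}\; \frac{\lambda}{2}\,\lVert w \rVert^2 + \frac{1}{n}\sum_{i=1}^{n} \max\bigl(0,\; 1 - y_i\,(w^\top x_i + b)\bigr), \qquad \lambda = 0.0001
$$

Under this formulation, a small lambda weights the hinge-loss term heavily relative to the margin term, so the classifier fits the training data more closely.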

Results

| Classifier | Feature Rep. | Accuracy |
|---|---|---|
| Nearest Neighbor | Tiny Images | 21.0% |
| Nearest Neighbor | Bag of Sifts | 51.7% |
| Linear 1-v-all SVM | Bag of Sifts | 67.3% |

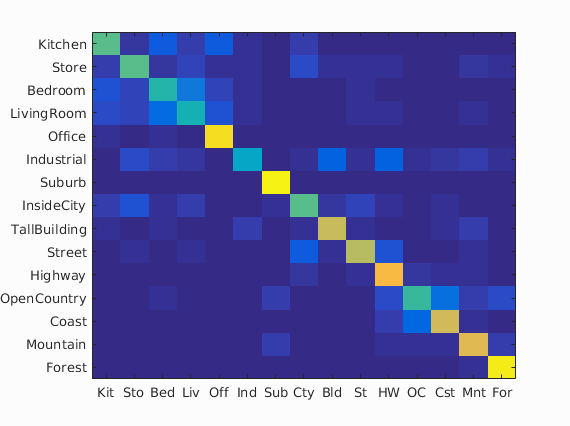

The best performing combination of classifier and feature representation was the linear SVM with a bag of sifts. This combination achieved an accuracy of 67.3%. The confusion matrix and full results for this setup are shown below.

SVM/Bag of Sift scene classification results visualization

Accuracy (mean of diagonal of confusion matrix) is 0.673
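As a quick check of this figure, the per-category accuracies listed in the table that follows can be averaged directly, on the assumption that they are the diagonal entries of a row-normalized confusion matrix. The variable names are illustrative; the values are copied from that table.

```python
# Per-category accuracies for the SVM / bag of sifts setup.
per_category_accuracy = {
    "Kitchen": 0.550, "Store": 0.550, "Bedroom": 0.470,
    "LivingRoom": 0.450, "Office": 0.930, "Industrial": 0.380,
    "Suburb": 0.980, "InsideCity": 0.550, "TallBuilding": 0.730,
    "Street": 0.700, "Highway": 0.820, "OpenCountry": 0.510,
    "Coast": 0.740, "Mountain": 0.780, "Forest": 0.960,
}

# Mean of the diagonal = unweighted mean of the per-category accuracies.
mean_accuracy = sum(per_category_accuracy.values()) / len(per_category_accuracy)
print(round(mean_accuracy, 3))  # 0.673
```

The unweighted mean treats every category equally, which matches the overall accuracy only when each category has the same number of test images.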

| Category name | Accuracy | False positives with true label | False negatives with wrong predicted label |
|---|---|---|---|
| Kitchen | 0.550 | Bedroom, InsideCity | Office, TallBuilding |
| Store | 0.550 | Industrial, InsideCity | Office, InsideCity |
| Bedroom | 0.470 | TallBuilding, OpenCountry | Industrial, LivingRoom |
| LivingRoom | 0.450 | Industrial, Store | Kitchen, Store |
| Office | 0.930 | LivingRoom, Bedroom | Bedroom, Bedroom |
| Industrial | 0.380 | InsideCity, Highway | OpenCountry, Coast |
| Suburb | 0.980 | Mountain, Coast | TallBuilding, LivingRoom |
| InsideCity | 0.550 | Store, Highway | Industrial, Kitchen |
| TallBuilding | 0.730 | Industrial, Bedroom | Bedroom, Street |
| Street | 0.700 | Industrial, InsideCity | Highway, Highway |
| Highway | 0.820 | TallBuilding, Store | OpenCountry, Mountain |
| OpenCountry | 0.510 | Coast, Coast | Coast, Highway |
| Coast | 0.740 | OpenCountry, OpenCountry | OpenCountry, OpenCountry |
| Mountain | 0.780 | Coast, Coast | Highway, Highway |
| Forest | 0.960 | InsideCity, OpenCountry | Industrial, Mountain |