Project 4 / Scene Recognition with Bag of Words

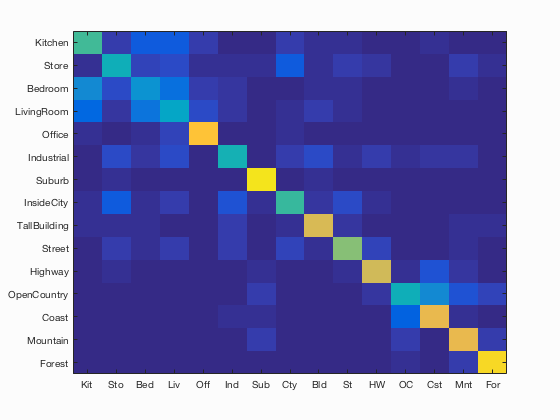

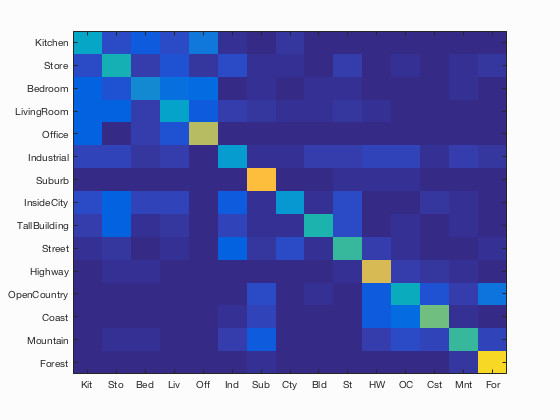

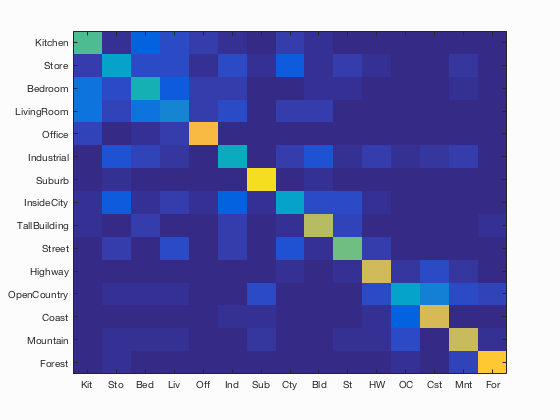

Confusion matrix of the most accurate combination of features and classifier: bag of SIFT features with an SVM.

Project 4 was really inspiring because it combined the technical side of computer vision with machine-learning classification, giving us a very practical use case! One interesting thing I noticed is that we are running several SVMs in parallel to handle multiple categories. This often comes up in discussions of ANNs vs. SVMs, since an ANN can essentially do the job of parallel SVMs across multiple categories. Anyway, that is to be discussed further in later assignments, but it is extremely interesting!

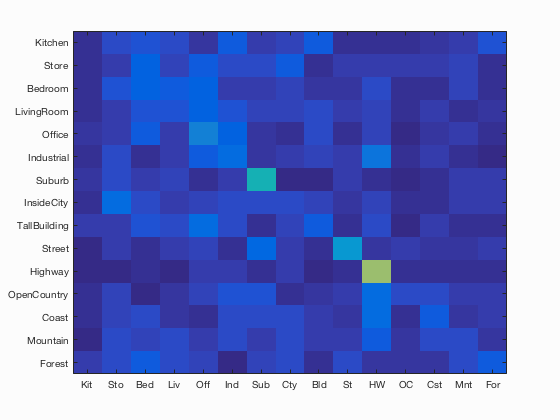

Tiny images don't give us as detailed a representation as a bag of SIFT features, especially one built with a spatial pyramid (not done here, but mentioned for the sake of describing an image), so the results were expected to be weaker than with SIFT. The vocabulary size was kept at its original value.

Accuracy (mean of diagonal of confusion matrix) is 0.068
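
To make the accuracy figure concrete, here is a minimal Python sketch of how "mean of diagonal of confusion matrix" can be computed. The function names and the three-category toy labels are made up for illustration; this is not the project code.

```python
def confusion_matrix(true_labels, predicted_labels, categories):
    """Row-normalized confusion matrix: rows are true categories, columns are predictions."""
    index = {name: i for i, name in enumerate(categories)}
    n = len(categories)
    counts = [[0] * n for _ in range(n)]
    for t, p in zip(true_labels, predicted_labels):
        counts[index[t]][index[p]] += 1
    matrix = []
    for row in counts:
        total = sum(row)
        # Divide each row by the number of test images of that true category.
        matrix.append([c / total if total else 0.0 for c in row])
    return matrix


def mean_diagonal_accuracy(matrix):
    """Average of the per-category accuracies on the diagonal."""
    return sum(matrix[i][i] for i in range(len(matrix))) / len(matrix)


# Toy example (hypothetical labels, not results from this project).
categories = ["Kitchen", "Office", "Forest"]
true_labels = ["Kitchen", "Kitchen", "Office", "Office", "Forest", "Forest"]
predicted = ["Kitchen", "Office", "Office", "Office", "Forest", "Kitchen"]

cm = confusion_matrix(true_labels, predicted, categories)
accuracy = mean_diagonal_accuracy(cm)
print(f"Accuracy (mean of diagonal of confusion matrix) is {accuracy:.3f}")
```

Because each row is normalized by its category's image count, the mean of the diagonal is the average per-category accuracy. With the 15 categories used here, random guessing would land around 1/15 ≈ 0.067.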

Accuracy with tiny images increased slightly over the placeholder values. It improved again after normalizing the features to zero mean and unit length, which was essentially the only design modification in the tiny images code.
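
The normalization step can be sketched roughly as follows. This is only an illustration under my own assumptions: the 16x16 target size, the block-averaging downsample (standing in for a proper image resize), and the name `tiny_image_feature` are all hypothetical and not taken from the project code.

```python
import math


def tiny_image_feature(image, size=16):
    """Shrink a grayscale image (2D list) to size x size, then normalize.

    Assumes the image dimensions are multiples of `size`; the downsample is
    a plain block average, used here as a stand-in for a real resize.
    """
    rows, cols = len(image), len(image[0])
    bh, bw = rows // size, cols // size
    feature = []
    for r in range(size):
        for c in range(size):
            block = [image[r * bh + i][c * bw + j]
                     for i in range(bh) for j in range(bw)]
            feature.append(sum(block) / len(block))

    # Zero mean: removes overall brightness differences between images.
    mean = sum(feature) / len(feature)
    centered = [v - mean for v in feature]

    # Unit length: removes overall contrast differences.
    norm = math.sqrt(sum(v * v for v in centered))
    if norm == 0:
        return centered  # flat image, nothing to scale
    return [v / norm for v in centered]


# Synthetic 32x32 gradient image, just to exercise the function.
image = [[float(r + c) for c in range(32)] for r in range(32)]
feature = tiny_image_feature(image)
print(len(feature))
```

After this step two images that differ only in brightness or contrast map to nearly the same feature, which is one plausible reason the normalization helped.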

Here is the confusion matrix that results when we run the nearest neighbor classifier on the tiny image features. With this combination we achieve about 20% accuracy (0.183 in this case). Given the guideline percentages, however, this accuracy could probably have been improved by using k nearest neighbors instead of a single neighbor.
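
As a rough idea of what the k nearest neighbors variant would look like, here is a small Python sketch. I did not run this in the project; the function names, the toy 2-D features, and the tie-breaking rule (closest neighbor among the tied labels wins) are all my own assumptions.

```python
from collections import Counter


def squared_distance(a, b):
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_predict(train_features, train_labels, query, k=1):
    """Label a query by majority vote among its k closest training features."""
    ranked = sorted(range(len(train_features)),
                    key=lambda i: squared_distance(train_features[i], query))
    nearest = ranked[:k]
    votes = Counter(train_labels[i] for i in nearest)
    best = max(votes.values())
    # Break ties in favor of the closest neighbor among the tied labels.
    for i in nearest:
        if votes[train_labels[i]] == best:
            return train_labels[i]


# Toy data: one stray "Street" sample sitting close to a "Forest" query region.
train_features = [[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.6, 0.6]]
train_labels = ["Forest", "Forest", "Forest", "Street"]
query = [0.55, 0.55]

pred_1nn = knn_predict(train_features, train_labels, query, k=1)
pred_3nn = knn_predict(train_features, train_labels, query, k=3)
print(pred_1nn, pred_3nn)
```

With k = 1 the single stray sample decides the label, while with k = 3 it gets outvoted, which is the kind of noise tolerance I would expect to help with tiny images.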

Accuracy (mean of diagonal of confusion matrix) is 0.189

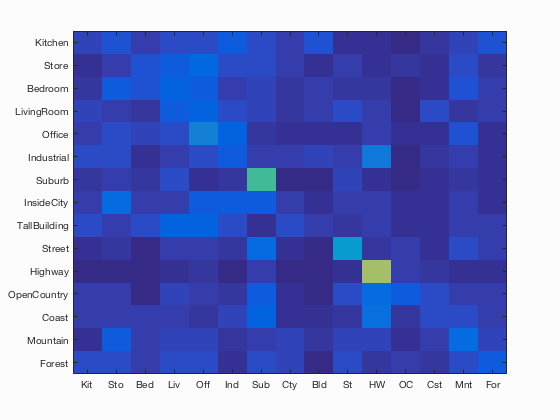

Accuracy with tiny images and an SVM increased slightly over the nearest neighbor classifier. For a useful classifier, however, we need more detailed features to compare images and classify them more accurately.

Classification accuracy increased greatly when using bag of SIFT features, regardless of classifier. Still, the linear SVM comes out on top after tinkering with lambda values. Note that over-regularized versions of the SVM do fall behind the results from NN predictions.

Accuracy (mean of diagonal of confusion matrix) is 0.520. With more than half of the test images classified correctly, we are starting to see a useful classifier!

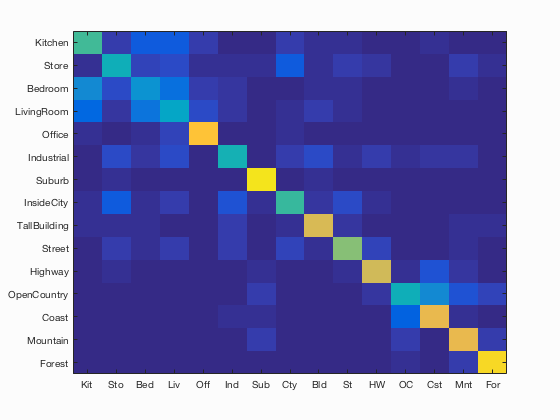

Pictured here is the result of using bag of SIFT features with the single nearest neighbor algorithm. When optimizing the bag of SIFTs, I ended up settling on 15 for the step value of the SIFT extraction. The step was noted as a free parameter that affects performance, and I noticed a general increase in accuracy when lowering it.
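
To illustrate the two ideas in this paragraph, here is a hedged Python sketch: a simplified count of how many dense sampling locations a given step produces, and the histogram that turns an image's descriptors into a bag of words feature. The names `grid_count` and `bag_of_words_histogram` are hypothetical, the grid ignores the border handling a real dense SIFT extractor would do, and the 2-D "descriptors" stand in for real 128-D SIFT descriptors.

```python
def grid_count(width, height, step):
    """Number of sampling locations on a regular grid (simplified, no borders)."""
    return len(range(0, width, step)) * len(range(0, height, step))


def squared_distance(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def bag_of_words_histogram(descriptors, vocabulary):
    """Count how many descriptors fall closest to each visual word, then normalize."""
    histogram = [0.0] * len(vocabulary)
    for d in descriptors:
        nearest = min(range(len(vocabulary)),
                      key=lambda w: squared_distance(d, vocabulary[w]))
        histogram[nearest] += 1
    total = sum(histogram)
    # Normalize so images with different descriptor counts stay comparable.
    return [h / total for h in histogram] if total else histogram


# A smaller step samples the image far more densely.
dense = grid_count(256, 256, 15)
sparse = grid_count(256, 256, 40)
print(dense, sparse)

# Toy vocabulary of three "visual words" and four descriptors.
vocabulary = [[0.0, 0.0], [10.0, 10.0], [0.0, 10.0]]
descriptors = [[1.0, 1.0], [0.0, 2.0], [9.0, 9.0], [1.0, 9.0]]
histogram = bag_of_words_histogram(descriptors, vocabulary)
print(histogram)
```

A lower step gives each image more descriptors to vote into the histogram, which fits the accuracy increase I saw, at the cost of longer feature extraction.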

Now I will outline how altering different parameters leads to different results with the SVM. Pictured here are results for different values of the free parameter, lambda, in the linear SVM classifier. As the results show, there seems to be an optimum for this use case around 0.00005, which gave the best score of the bunch.

Accuracy (mean of diagonal of confusion matrix) is 0.625 with lambda .00005.

Accuracy with lambda 1 is 0.314

Accuracy with lambda 0.0005 is 0.619

Accuracy with lambda 0.00005 is 0.625

Accuracy with lambda 0.00001 is 0.590 (shown)

For some of these results I also changed the step value, ranging from 10 to 40.
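
The lambda sweep can be sketched in Python along these lines. Everything here is an assumption for illustration rather than the project code: the one-vs-all setup, the objective (lambda/2 times the squared weight norm plus the mean hinge loss), the plain batch subgradient descent with a fixed learning rate, and the tiny 2-D toy data. The toy clusters are easy to separate, so this will not reproduce the spread of accuracies listed above; it only shows the mechanics of the sweep.

```python
def train_linear_svm(features, signs, lam, epochs=200, lr=0.1):
    """Binary linear SVM via batch subgradient descent on
    lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b)))."""
    dim, n = len(features[0]), len(features)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w = [lam * wi for wi in w]  # gradient of the regularizer
        grad_b = 0.0
        for x, y in zip(features, signs):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            if margin < 1:  # only margin violators contribute hinge gradient
                for j in range(dim):
                    grad_w[j] -= y * x[j] / n
                grad_b -= y / n
        w = [wi - lr * g for wi, g in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def train_one_vs_all(features, labels, categories, lam):
    """One binary SVM per category: that category (+1) against all others (-1)."""
    return {c: train_linear_svm(features,
                                [1.0 if l == c else -1.0 for l in labels], lam)
            for c in categories}


def predict(models, x):
    """Pick the category whose SVM gives the highest score."""
    return max(models, key=lambda c: sum(wi * xi for wi, xi
                                         in zip(models[c][0], x)) + models[c][1])


# Toy data: three well-separated clusters standing in for histogram features.
centers = {"Coast": (0.0, 4.0), "Forest": (-4.0, -2.0), "Street": (4.0, -2.0)}
offsets = [(0.0, 0.0), (0.5, 0.0), (-0.5, 0.0), (0.0, 0.5), (0.0, -0.5)]
features, labels = [], []
for name, (cx, cy) in centers.items():
    for dx, dy in offsets:
        features.append([cx + dx, cy + dy])
        labels.append(name)

results = {}
for lam in [1, 0.0005, 0.00005, 0.00001]:
    models = train_one_vs_all(features, labels, list(centers), lam)
    correct = sum(predict(models, x) == l for x, l in zip(features, labels))
    results[lam] = correct / len(labels)
    print(f"Accuracy with lambda {lam} is {results[lam]:.3f}")
```

A larger lambda pushes the weights toward zero (more regularization), while a very small one lets the classifier fit the training data more tightly, which is the trade-off behind the optimum I found in the middle of the range.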

Here is a visualization of more detailed results from the SVM with bag of sifts using lambda .00005 (the best run of the bunch).

| Category name | Accuracy | Sample training images | Sample true positives | False positives with true label | False negatives with wrong predicted label |
|---|---|---|---|---|---|
| Kitchen | 0.510 | | | Bedroom, LivingRoom | InsideCity, TallBuilding |
| Store | 0.400 | | | Industrial, InsideCity | InsideCity, InsideCity |
| Bedroom | 0.330 | | | Kitchen, Mountain | LivingRoom, LivingRoom |
| LivingRoom | 0.350 | | | Kitchen, Bedroom | Suburb, Industrial |
| Office | 0.850 | | | Bedroom, Bedroom | Bedroom, LivingRoom |
| Industrial | 0.410 | | | Kitchen, TallBuilding | Highway, Store |
| Suburb | 0.930 | | | OpenCountry, LivingRoom | Store, TallBuilding |
| InsideCity | 0.540 | | | LivingRoom, Kitchen | TallBuilding, LivingRoom |
| TallBuilding | 0.800 | | | Industrial, Street | Kitchen, Street |
| Street | 0.640 | | | TallBuilding, InsideCity | LivingRoom, Forest |
| Highway | 0.720 | | | InsideCity, Store | Coast, Street |
| OpenCountry | 0.440 | | | Coast, Mountain | Coast, Coast |
| Coast | 0.780 | | | OpenCountry, Industrial | Mountain, OpenCountry |
| Mountain | 0.740 | | | Street, Bedroom | Street, Store |
| Forest | 0.930 | | | OpenCountry, Store | Street, Mountain |