Project 5 / Face Detection with a Sliding Window

For this project, we implemented a face detector. Training one means deciding what input we feed the classifier and how we classify it. The main parts of training and running the face detector are as follows:

- Generating Positive/Negative Features

- Training Via the Features

- (Incomplete) Extra Credit: Running the detector on negative training images

- Running the detector (actually this time)

Generating Positive/Negative Features

For our face detector to work, it needs to be trained on data that helps it tell faces from non-faces. Our positive images were already small enough to use directly, but our negative images were not. The process for generating the features was nearly the same for each: use vl_hog to generate HOG features to train on. There was one exception: for the negative features, we cropped random patches out of non-face images. Since things were a bit more variable for negative features, we had a choice of how many to use. I chose to use the default of 10000 negative features because higher numbers did not give an improvement large enough to justify the extra time (in fact, one time I even ran into an out-of-memory error). When we randomly cropped part of a non-face image, we had to make sure the crops were standardized much like the positive images, so each patch was cropped to the size specified by the feature_params argument. Also, unlike the positive images, the negative images were not necessarily grayscale, so we convert them to grayscale before generating the features with vl_hog. The default value for D was used, as it seemed fairly reasonable.
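The negative sampling step described above can be sketched roughly as follows. This is a minimal illustration in plain Python rather than the project's MATLAB code: the function names, the nested-list image representation, and the template size of 36 used in the usage note are all assumptions made for the example, and the HOG step itself (vl_hog) is left out.

```python
import random


def to_grayscale(rgb_image):
    """Convert an H x W image of (r, g, b) tuples to H x W luminance values."""
    # ITU-R BT.601 luma weights, a common choice for RGB-to-gray conversion.
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row]
            for row in rgb_image]


def random_crop(gray_image, template_size, rng):
    """Cut one template_size x template_size patch at a random location."""
    height, width = len(gray_image), len(gray_image[0])
    if height < template_size or width < template_size:
        raise ValueError("image is smaller than the template")
    top = rng.randrange(height - template_size + 1)
    left = rng.randrange(width - template_size + 1)
    return [row[left:left + template_size]
            for row in gray_image[top:top + template_size]]


def sample_negative_patches(rgb_images, num_samples, template_size, seed=0):
    """Draw num_samples random grayscale patches from the non-face images."""
    rng = random.Random(seed)
    grays = [to_grayscale(image) for image in rgb_images]
    return [random_crop(rng.choice(grays), template_size, rng)
            for _ in range(num_samples)]
```

Calling `sample_negative_patches(images, 10000, 36)` would produce 10000 patches of identical size, each of which would then be passed through the HOG feature extractor to become one negative training row.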

Training Via the Features

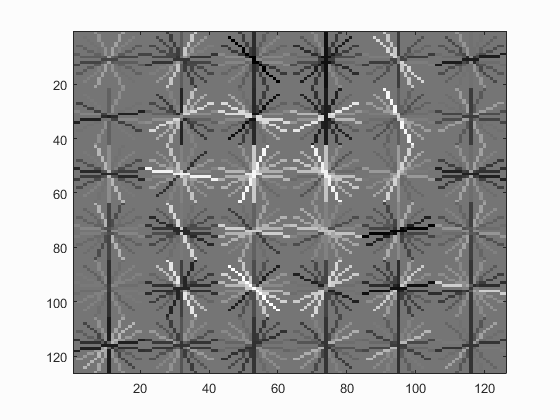

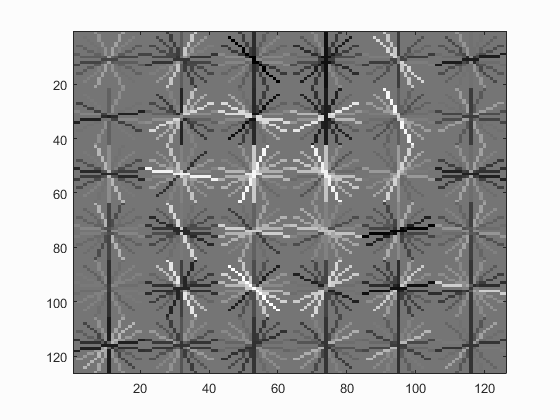

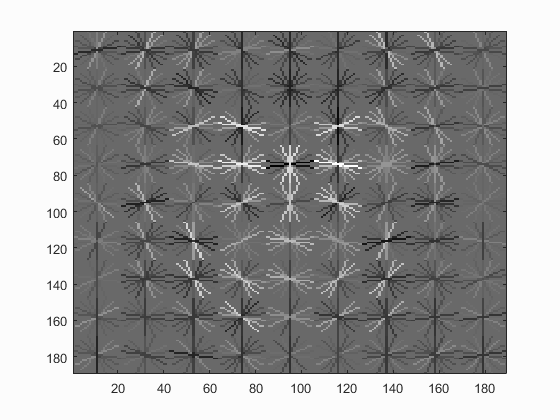

Now that we have our features, we train the classifier with a linear SVM. SVMs were covered in the previous project, so I won't focus too much on them here. I used the default lambda value of 0.0001 because I recalled it working nicely for me in the last project, so I had a good feeling about it this time (I would have picked it even if the comment suggesting it had not been included). Below we can see the resulting HOG template for the face classifier, and we can tell it worked because it resembles a face :D It's a bit harder to see with the pixel size of 4, but the round shape is still there. If anything, perhaps the template accounts for multiple configurations a face could have (I swear the middle looks kinda like a nose).

Face template HoG visualization with pixel size of 6.

Face template HoG visualization with pixel size of 4.
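To make the role of lambda in the training step concrete, here is a small sketch of a linear SVM trained by subgradient descent on the regularized hinge loss. It is not the solver used in the project; the function names, the fixed learning rate, and the epoch count are assumptions chosen for illustration, and only the lambda value of 0.0001 comes from the write-up.

```python
import random


def svm_score(w, b, x):
    """Signed confidence of a linear classifier: w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_linear_svm(features, labels, lam=0.0001, epochs=200,
                     learning_rate=0.1, seed=0):
    """Minimize lam/2 * ||w||^2 + mean hinge loss with per-sample updates.

    labels must be +1 (face) or -1 (non-face).
    """
    rng = random.Random(seed)
    w = [0.0] * len(features[0])
    b = 0.0
    order = list(range(len(features)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            x, y = features[i], labels[i]
            violated = y * svm_score(w, b, x) < 1.0
            # The regularizer always shrinks w slightly; lam sets how hard.
            w = [wi - learning_rate * lam * wi for wi in w]
            if violated:
                # Hinge loss is active: push the sample past the margin.
                w = [wi + learning_rate * y * xi for wi, xi in zip(w, x)]
                b += learning_rate * y
    return w, b
```

With a small lambda such as 0.0001 the shrinkage term is tiny, so the fit is driven almost entirely by the margin violations. The learned `w` has the same length as one HOG feature vector, which is why it can be reshaped and visualized as a template.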

(Incomplete) Extra Credit: Running the detector on negative training images

I attempted the hard negative mining extra credit, but realized at the last minute that I had the wrong idea entirely. I originally interpreted it to mean that if the original classifier is wrong on some of the original negative features, we add those features again and train a new classifier with the extras. It confused me because it sounded like that would just lead to overfitting to the training data, but I went with it because it might make the classifier more certain about the particularly hard non-face examples that were randomly selected. Implementation-wise, I modified run_detector.m to return some more values, namely the x and y positions of the detected features, and made a get_all_negative_features.m file that works like the random negative feature generator except that it uses the x and y values returned from the modified run_detector instead of random ones. However, there's a bug in the script, so this does not actually run properly. Ideally, the average precision would go up, because the classifier would get better at recognizing non-faces and thus produce far fewer false positive bounding boxes. Parameter-wise, I'd cut the number of negative training examples to 5000 to be able to test the difference between the two, but I couldn't get that far.
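For reference, the usual form of hard negative mining can be sketched as below: score windows taken from images known to contain no faces, keep the ones the current classifier wrongly fires on, and add them to the negative set before retraining. This is a generic illustration and not a fixed version of the scripts described above; the function names and the threshold of 0 are assumptions.

```python
def linear_score(w, b, x):
    """Signed confidence of a linear classifier: w . x + b."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def mine_hard_negatives(w, b, candidate_features, threshold=0.0):
    """Return candidates from face-free images that score above threshold.

    Every candidate comes from an image without faces, so any detection
    among them is a false positive by construction.
    """
    return [x for x in candidate_features
            if linear_score(w, b, x) > threshold]


def augment_negatives(negative_features, hard_negatives):
    """Build the negative set for the next training round."""
    return list(negative_features) + list(hard_negatives)
```

After augmenting, the classifier would be retrained on the positives plus the enlarged negative set, and the two average precisions could then be compared.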

Running the detector (actually this time)

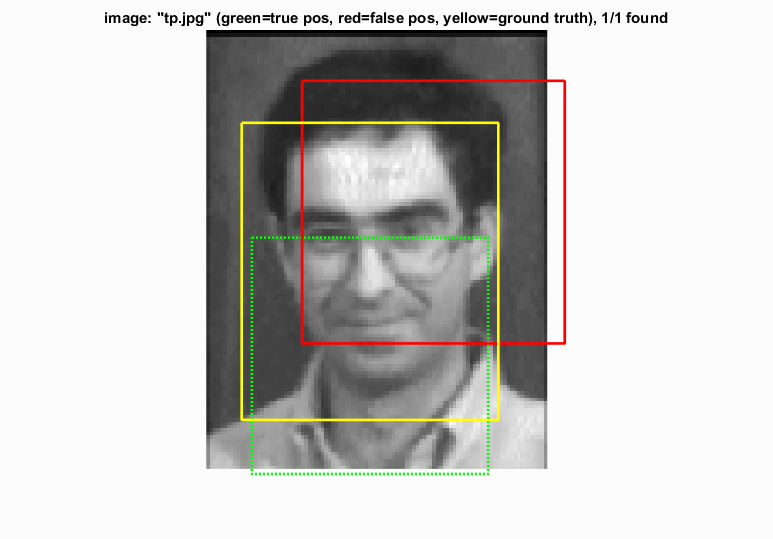

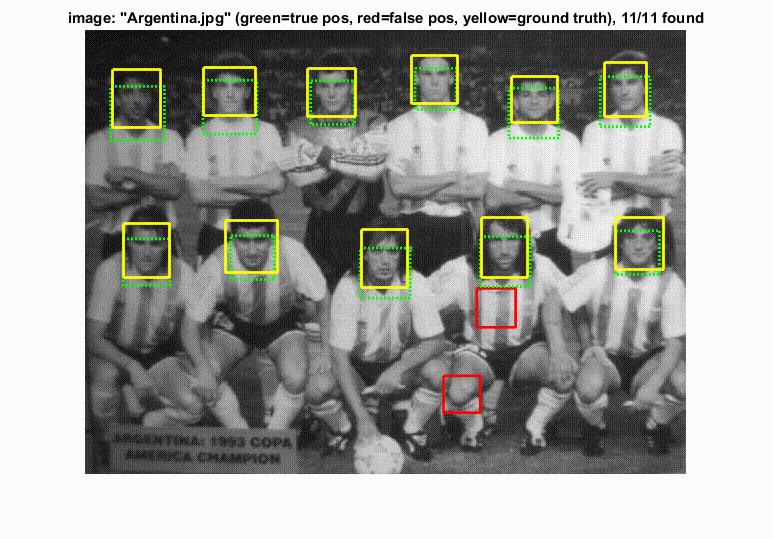

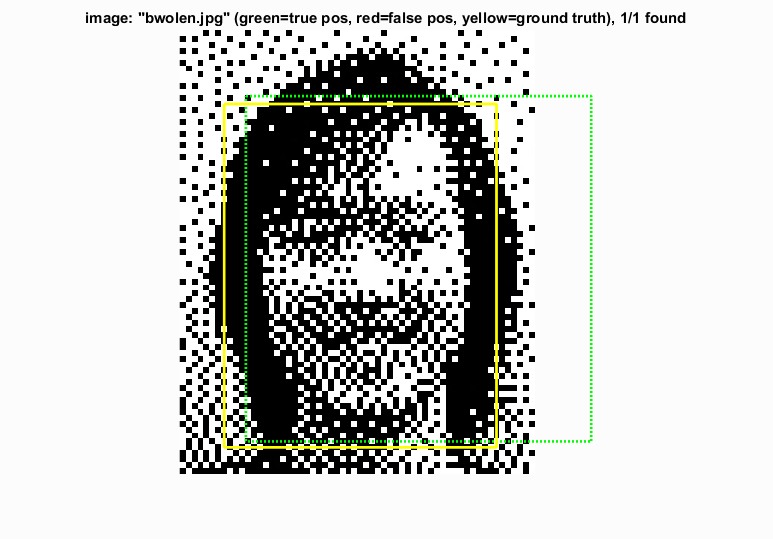

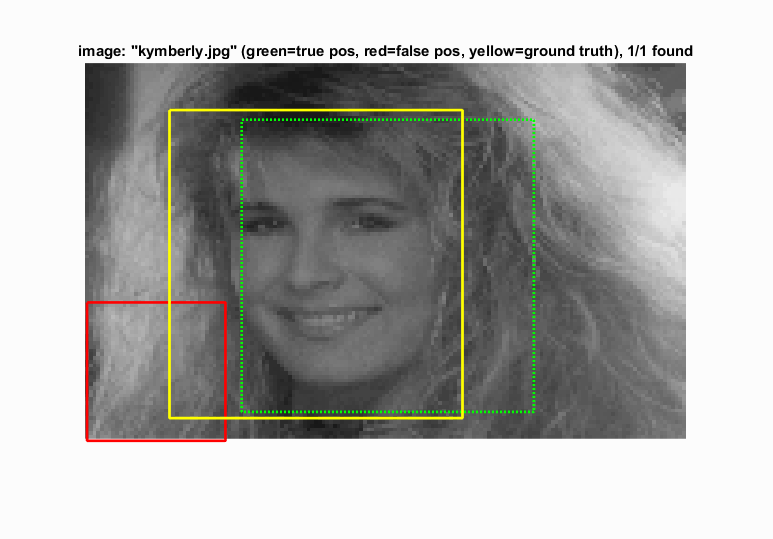

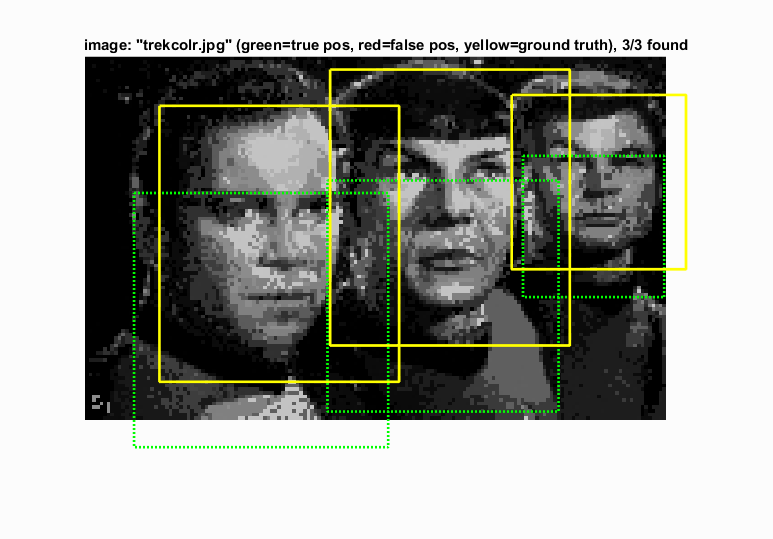

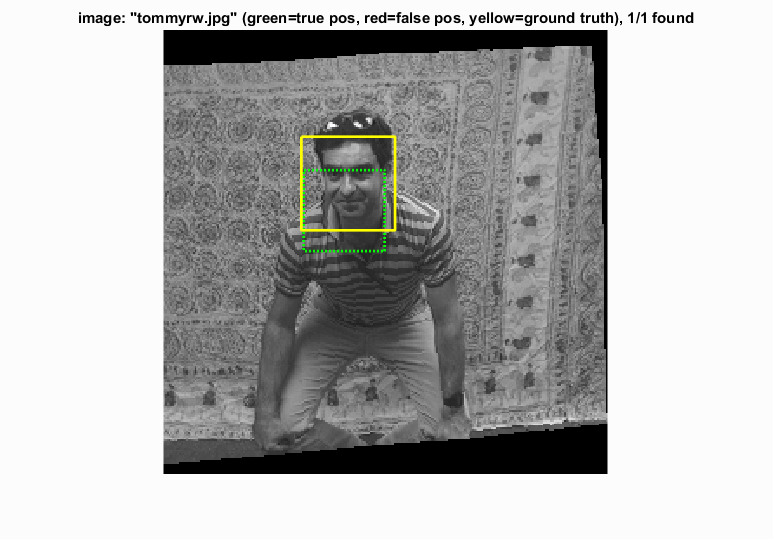

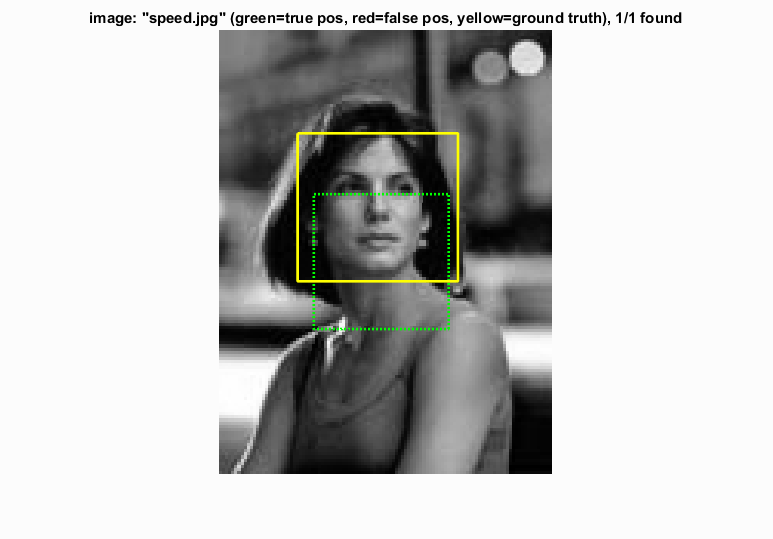

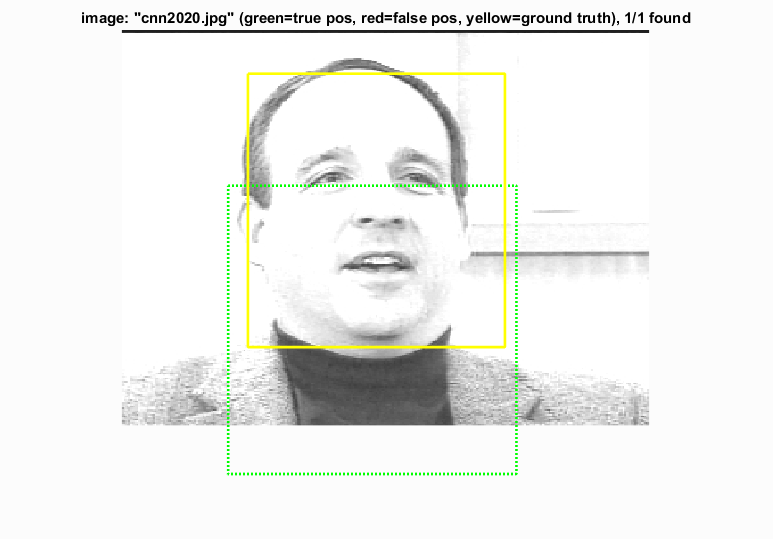

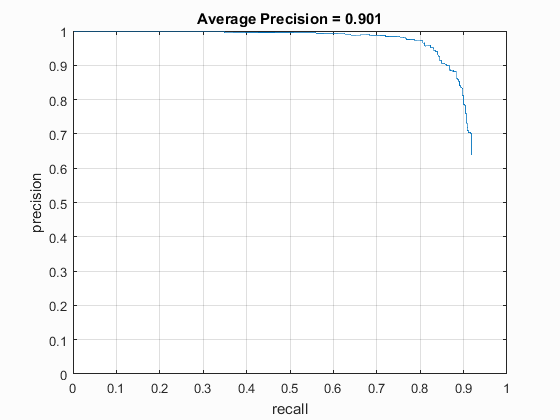

For running the actual detector, we take each test image at varying scales and look through windows of each scaled version for features that our SVM classifier is confident are faces. For the scaling, I chose scales ranging from 1 to 0.1 with increments of 0.5, so that I'd cover a wide variety of potential face sizes, ranging from the original image itself down to very small. Since the test images are already grayscale, we don't need to convert them. At this point, we consider how well our face detector as a whole is doing, and we can see some example results below. In general the detector is able to detect the faces, but it could be tuned so that the bounding boxes fit a bit better. It also generates false positives at times, but that's to be expected because I chose a confidence threshold of 80% (I didn't want to go for the bare minimum with 70% but still wanted to have some leeway there). Interestingly enough, one of the false positives arguably fit the face better than the true positive shown for the first image in the table. Either way, the detector is able to find the faces but definitely could use some tuning to make it better.
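The multi-scale sliding window idea can be sketched as follows. This is a simplified illustration, not the project's run_detector.m: the template size of 36, the stride of 6, the step of 0.1 in the default scale list, and the `score_window` callback (a stand-in for "extract HOG at this window and apply the SVM") are all assumptions made for the example.

```python
def make_scales(start=1.0, stop=0.1, step=0.1):
    """List scales from start down to stop (inclusive when step divides evenly)."""
    count = int((start - stop) / step + 1e-9)
    return [round(start - i * step, 10) for i in range(count + 1)]


def window_boxes(image_height, image_width, scale, template_size=36, stride=6):
    """Boxes (x_min, y_min, x_max, y_max) in original-image coordinates."""
    scaled_h = int(image_height * scale)
    scaled_w = int(image_width * scale)
    boxes = []
    for top in range(0, scaled_h - template_size + 1, stride):
        for left in range(0, scaled_w - template_size + 1, stride):
            # Dividing by the scale maps the window back to the full image.
            boxes.append((left / scale, top / scale,
                          (left + template_size) / scale,
                          (top + template_size) / scale))
    return boxes


def detect(image_height, image_width, scales, score_window, threshold,
           template_size=36, stride=6):
    """Keep every window whose confidence exceeds threshold, at any scale."""
    detections = []
    for scale in scales:
        for box in window_boxes(image_height, image_width, scale,
                                template_size, stride):
            confidence = score_window(scale, box)
            if confidence > threshold:
                detections.append((box, confidence))
    return detections
```

Smaller scales shrink the image, so the fixed-size template covers a larger region of the original and can match larger faces. Once the scaled image is smaller than the template, that scale contributes no windows at all.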

Some example detections


Long story short, we're detecting faces by training a linear classifier on positive and negative examples, with a lot more negative examples because there are far more non-faces than actual faces. With hard negative mining, we could find even more useful negative examples to improve the classifier's accuracy in practice. To detect faces, we scan each image at multiple scales and keep the windows the classifier is confident about to make the final call on which faces, if any, exist in the image. The face detector I implemented achieves the intended results with mostly default values, along with a few decisions of my own, such as using a good range of scales in the final detector.