Project 5 / Face Detection with a Sliding Window

For this project we were tasked with training an SVM to detect faces in pictures using a sliding window, with histogram of oriented gradients (HoG) features as our feature space. There were two major components to this project and three major parameters to tweak.

Training

For this part of the project we needed to train the SVM to recognize what a face looks like. We started by gathering positive features, that is, features we know come from faces. To do this we took a training set of pictures that contain only faces, a set that is used fairly regularly for this purpose. We computed the HoG features of each picture using vl_hog and then flattened the HoG matrix into a vector. We then needed negative features, meaning features that are not faces. To get these we took a series of images that have no faces in them and randomly sampled image patches from them. We calculated the HoG features of these patches and flattened them the same way we did for the positive features. Then we combined the positive and negative features and created labels for our SVM, where the positive features correspond to 1 and the negative features correspond to -1.

The first two parameters we can change are the number of negative examples and lambda, the regularization parameter of the SVM, which also affects how long training runs. After testing I found that a lambda of 0.00001 gave the best trade-off between training time and precision. The more negative examples there are, the better the SVM will be; however, it takes much longer to sample a larger number of negative features. As a result I left it at only 10,000 negative features, which provided more than enough precision while keeping training fast.
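The label assembly and training call described above can be sketched roughly as follows. This is a minimal sketch, not the project's actual code: the feature matrices are random placeholders standing in for flattened HoG vectors, the feature length assumes a 12x12-cell window with the 31-dimensional default vl_hog variant (the write-up does not state the template size), and the call to vl_svmtrain is skipped if VLFeat is not on the path.

```matlab
% Placeholder sizes (assumptions, not values from the write-up).
D       = 12 * 12 * 31;   % assumed flattened HoG length per window
num_pos = 200;            % small stand-in for the face training set
num_neg = 500;            % small stand-in for the 10,000 negatives

% Random stand-ins for the flattened HoG features, one example per row.
features_pos = randn(num_pos, D) + 0.5;
features_neg = randn(num_neg, D) - 0.5;

% vl_svmtrain expects one example per column and a row vector of +1/-1 labels.
X = [features_pos; features_neg]';
Y = [ones(1, num_pos), -ones(1, num_neg)];

lambda = 0.00001;         % regularization value reported in this write-up

if exist('vl_svmtrain', 'file')
    [w, b] = vl_svmtrain(X, Y, lambda);
    % Score the training set with the learned linear model.
    train_conf = w' * X + b;
    fprintf('training accuracy: %.3f\n', mean(sign(train_conf) == Y));
end
```

The resulting w and b are the same quantities the sliding-window detector later applies to each window as w'*curr + b.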

Testing

For this part of the project we had to create the sliding window that actually detects the faces in an image. This was the most complicated and interesting part of the project. The big parameter to tweak here is the confidence threshold for accepting a window as a face. If the threshold is too conservative, we reduce false positives but also throw away real faces, which lowers average precision. If it is too liberal, we let too many false positives through, which lowers our average precision and also makes non-maxima suppression slower. I found that a threshold of -0.5 was enough to filter out obviously bad results while keeping precision high. Another parameter I tuned is the set of scales. I scale the image from 0.1 to 1 with a step size of 0.1, which I found was both fast and gave great precision. Code for the sliding window can be found below:

```matlab
% img, w, b, offset (the window size in HoG cells), the image index i, and
% the cur_* accumulators are defined by the surrounding per-image loop.
for j = 0.1:0.1:1
    % Rescale the image and compute HoG once per scale.
    scaled = imresize(img, j);
    hog = vl_hog(scaled, feature_params.hog_cell_size);
    x_max = fix(size(scaled, 2)/feature_params.hog_cell_size);
    y_max = fix(size(scaled, 1)/feature_params.hog_cell_size);
    % Slide the window over every cell position where it fits.
    for y = 1:y_max - offset + 1
        for x = 1:x_max - offset + 1
            % Flatten the window's HoG cells and score them with the SVM.
            curr = reshape(hog(y:y+offset - 1, x:x+offset - 1, :), [], 1);
            confidence = (w'*curr + b);
            if confidence > -0.5
                cur_confidences = [cur_confidences; confidence];
                % Map cell coordinates back to pixels in the original image.
                cur_x_min = ((x-1)*feature_params.hog_cell_size/j) + 1;
                cur_y_min = ((y-1)*feature_params.hog_cell_size/j) + 1;
                cur_x_max = ((x + offset - 1)*feature_params.hog_cell_size/j);
                cur_y_max = ((y + offset - 1)*feature_params.hog_cell_size/j);
                cur_bbox = [cur_x_min, cur_y_min, cur_x_max, cur_y_max];
                cur_bboxes = [cur_bboxes; cur_bbox];
                cur_image_ids = [cur_image_ids; {test_scenes(i).name}];
            end
        end
    end
end
```
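To make the coordinate mapping in the loop above concrete, here is a small worked example of the same arithmetic for a single window. The window position and the window size of 12 cells are hypothetical values chosen for illustration (the write-up does not state the template size); the cell size of 3 and the scale of 0.5 come from the parameters described in this report.

```matlab
cell_size = 3;     % HoG cell size reported in this write-up
offset    = 12;    % assumed window size in HoG cells
j         = 0.5;   % one of the scales in 0.1:0.1:1
x = 4; y = 2;      % hypothetical window position in cell coordinates

% Same formulas as the detection loop: cells -> pixels at scale j -> original pixels.
x_min = (x - 1) * cell_size / j + 1;
y_min = (y - 1) * cell_size / j + 1;
x_max = (x + offset - 1) * cell_size / j;
y_max = (y + offset - 1) * cell_size / j;

bbox  = [x_min, y_min, x_max, y_max];   % [19 7 90 78]
width = x_max - x_min + 1;              % 72 pixels
disp(bbox);
disp(width);
```

Under these assumptions the window covers 36 pixels in the half-size image, so the box in the original image is 72 pixels wide. Dividing by the scale is what lets small scales pick up large faces.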

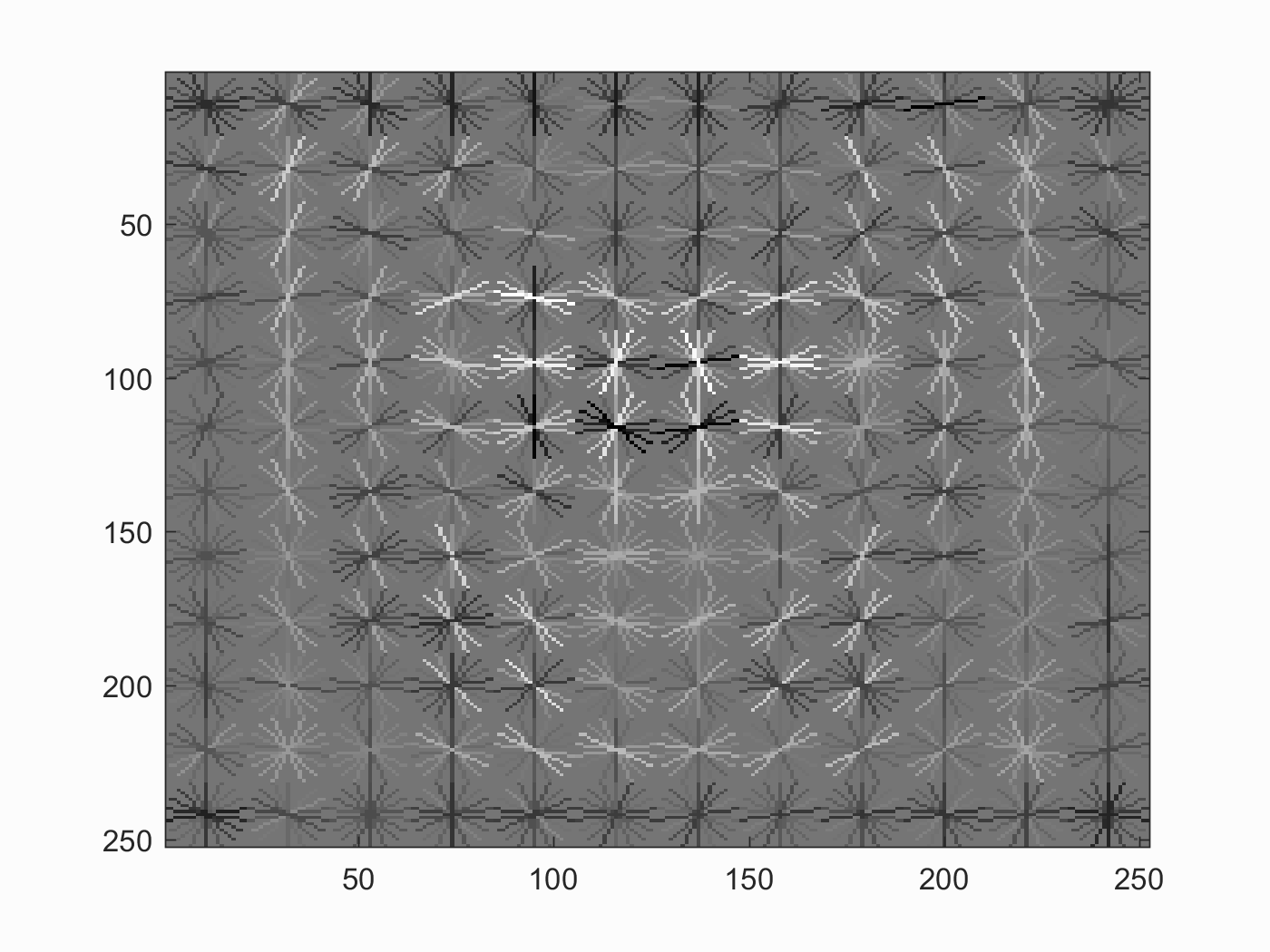

Face template HoG visualization

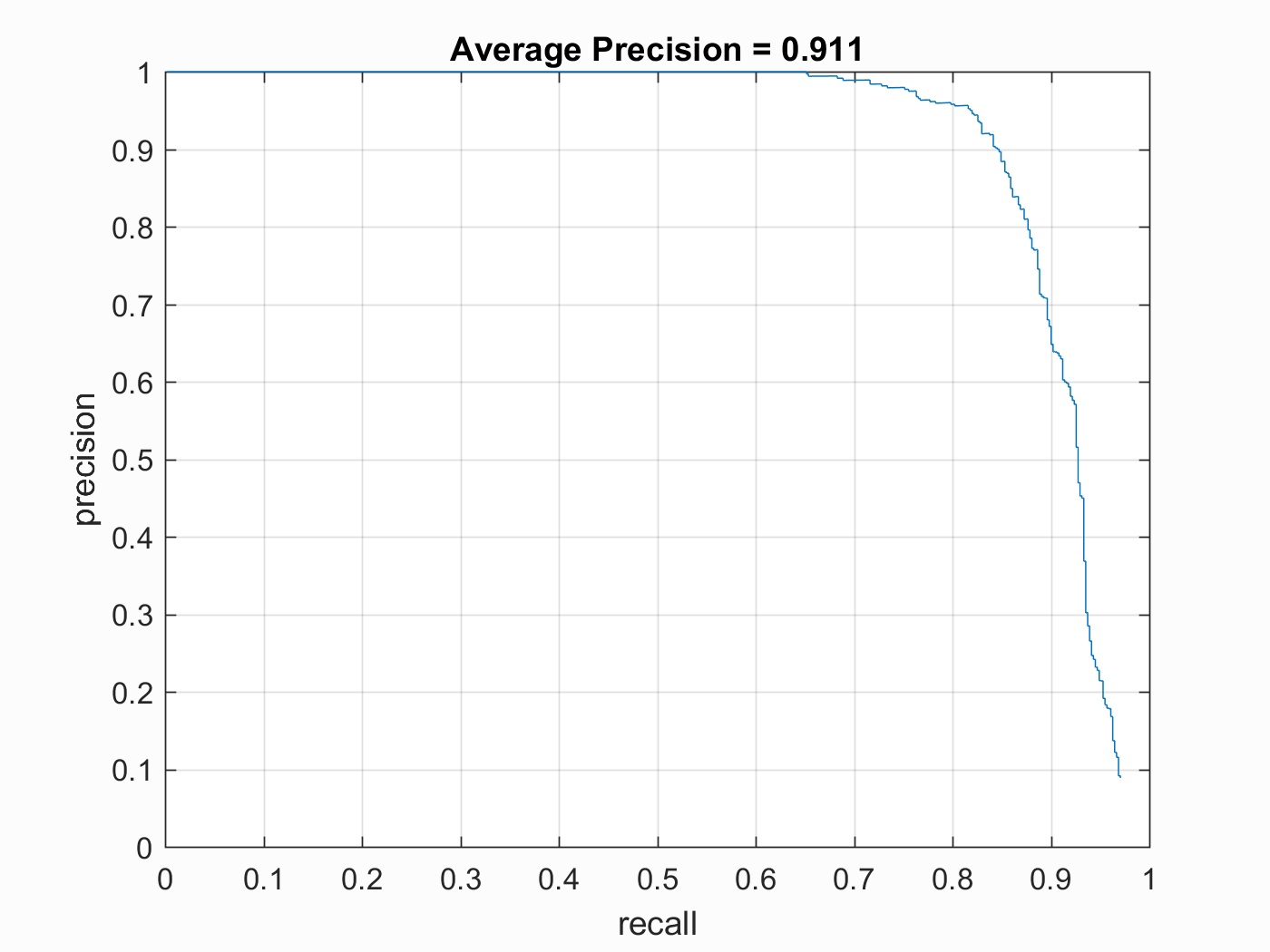

Precision Recall curve

Example of detection on the test set

Overall, my detector had an average precision of 0.911 with the following parameters: HoG cell size = 3, lambda = 0.00001, threshold = -0.5, and number of negative samples = 10,000.
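For readers unfamiliar with the metric, the sketch below shows one common way to compute average precision from a ranked list of detections. It is only an illustration on made-up placeholder values; the evaluation script used for this project may interpolate the precision-recall curve differently, so it should not be read as the exact procedure behind the 0.911 figure.

```matlab
% Placeholder detections (hypothetical values, not results from this project).
confidences = [0.9 0.8 0.7 0.6 0.5 0.4];   % SVM scores of the detections
is_tp       = [1   1   0   1   0   1  ];   % 1 if the detection matched a real face
num_gt      = 5;                           % hypothetical number of ground-truth faces

% Rank detections from most to least confident.
[~, order] = sort(confidences, 'descend');
hits = is_tp(order);

% Running counts of true and false positives down the ranked list.
tp = cumsum(hits);
fp = cumsum(1 - hits);
recall    = tp / num_gt;
precision = tp ./ (tp + fp);

% Average the precision at each true positive over all ground-truth faces,
% so faces that are never detected contribute zero.
ap = sum(precision(hits == 1)) / num_gt;
fprintf('average precision: %.4f\n', ap);
```

Lowering the detection threshold adds more low-confidence entries to the end of this ranked list, which is why it can raise recall without necessarily hurting the precision of the top-ranked detections.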