Project 5 / Face Detection with a Sliding Window

Design Decisions

Get positive features

I added deformations to the faces in the training data. In particular, I found that mirroring every face helped improve the classifier. Things that did not help were:

- Adding noise; presumably this caused confusion with the background.
- Applying a median filter; this gave fewer matches overall.
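Mirroring is cheap to implement. The following is a minimal sketch. It assumes the positive examples are stored as a NumPy array of grayscale crops with shape (N, H, W). The array layout and the function name `augment_with_mirrors` are hypothetical and not taken from the project code:

```python
import numpy as np

def augment_with_mirrors(faces: np.ndarray) -> np.ndarray:
    """Return the original face crops plus a left-right mirrored copy of each.

    Hypothetical layout: `faces` is a stack of grayscale crops, shape (N, H, W).
    """
    # Reverse the width axis (axis 2) so every face is mirrored horizontally.
    mirrored = faces[:, :, ::-1]
    # Originals first, then mirrors: the positive set doubles in size.
    return np.concatenate([faces, mirrored], axis=0)
```

For example, 6,713 crops would become 13,426 positives before HOG extraction.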

Get negative features

I implemented a multi-scale feature extractor that samples each non-face image at 7 different scales. From each scale it picks a random set of features to add to the negative training samples.
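The multi-scale negative sampling could look roughly like the following NumPy sketch. Several details are assumptions for illustration and are not the project's settings: the pyramid factor of 0.8, the 36-pixel window, the 10 patches per scale, and the nearest-neighbour resizing. The sketch returns raw pixel patches, which would still need to go through HOG extraction:

```python
import numpy as np

def sample_negative_windows(image, num_scales=7, scale_factor=0.8,
                            per_scale=10, window=36, rng=None):
    """Randomly sample window-sized patches from an image pyramid.

    Hypothetical assumptions: `image` is a 2-D grayscale array containing no
    faces, each level shrinks the previous one by `scale_factor`, and resizing
    uses nearest-neighbour sampling to keep the sketch dependency-free.
    """
    rng = np.random.default_rng() if rng is None else rng
    patches = []
    for level in range(num_scales):
        scale = scale_factor ** level
        h = int(image.shape[0] * scale)
        w = int(image.shape[1] * scale)
        if h < window or w < window:
            break  # this level and every smaller one cannot hold a window
        # Nearest-neighbour resize: source row/column for each output pixel.
        rows = (np.arange(h) / scale).astype(int)
        cols = (np.arange(w) / scale).astype(int)
        scaled = image[np.ix_(rows, cols)]
        # Random top-left corners of windows that fit inside this level.
        ys = rng.integers(0, h - window + 1, size=per_scale)
        xs = rng.integers(0, w - window + 1, size=per_scale)
        for y, x in zip(ys, xs):
            patches.append(scaled[y:y + window, x:x + window])
    if not patches:
        return np.empty((0, window, window))
    return np.stack(patches)
```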

Run detector

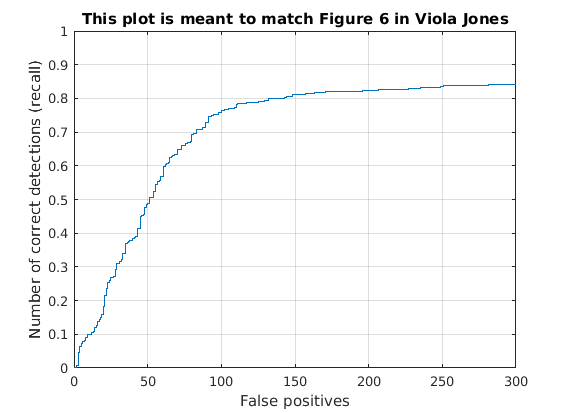

There are two possible detectors: a linear SVM and a feedforward neural network. I wanted to compare the two methods to see whether a non-linear classifier could improve on the SVM.
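Both detectors share the same sliding-window loop and differ only in how a window is scored. The following is a hedged single-scale sketch. `score_fn` is a hypothetical stand-in for HOG extraction followed by either classifier. `linear_svm_score` uses raw pixels as features purely for illustration:

```python
import numpy as np

def sliding_window_detect(image, score_fn, window=36, step=6, threshold=0.0):
    """Score every window position in one pyramid level.

    `score_fn` (hypothetical) maps a (window, window) patch to a confidence.
    Returns (y, x, score) for each window scoring above `threshold`.
    """
    detections = []
    h, w = image.shape
    for y in range(0, h - window + 1, step):
        for x in range(0, w - window + 1, step):
            score = score_fn(image[y:y + window, x:x + window])
            if score > threshold:
                detections.append((y, x, float(score)))
    return detections

def linear_svm_score(w, b):
    """Scorer computing w . x + b on the flattened patch (raw pixels assumed)."""
    return lambda patch: float(patch.ravel() @ w + b)
```

Running the loop once per pyramid level gives a multi-scale detector. Dividing each level's coordinates by that level's scale maps them back to the original image.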

Evaluation

SVM classifier

Objective Performance

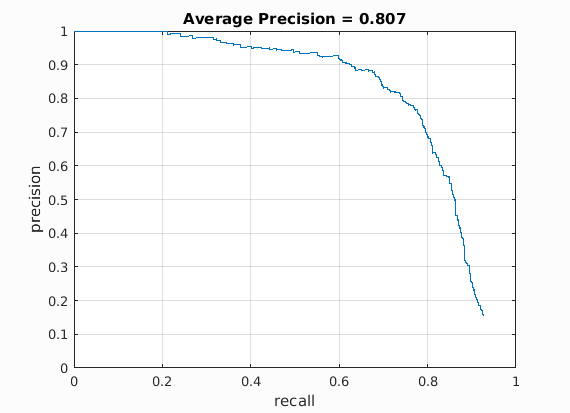

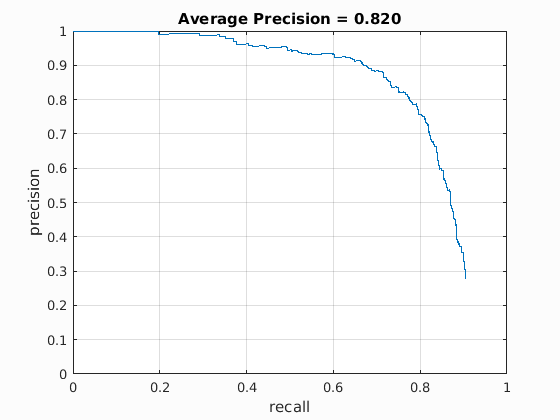

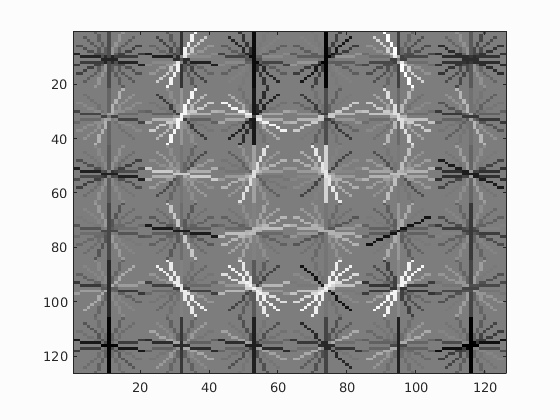

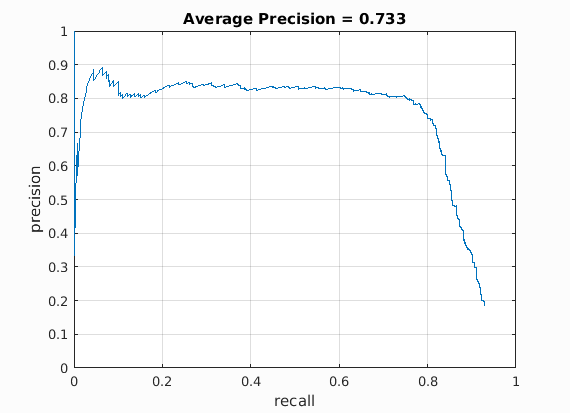

Objectively the classifier does pretty decently. The precision is relatively

high, but probably not good enough to be a useful face detector in real

applications. What's remarkable is that the highly positive

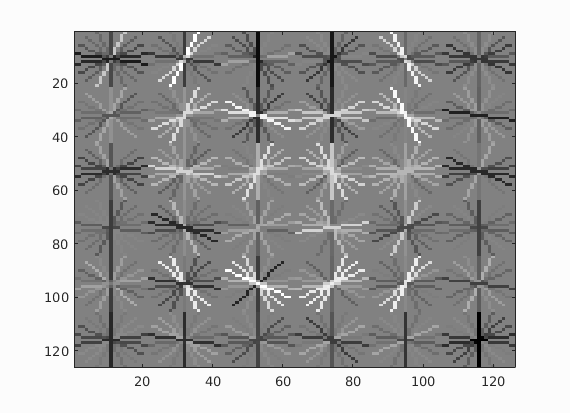

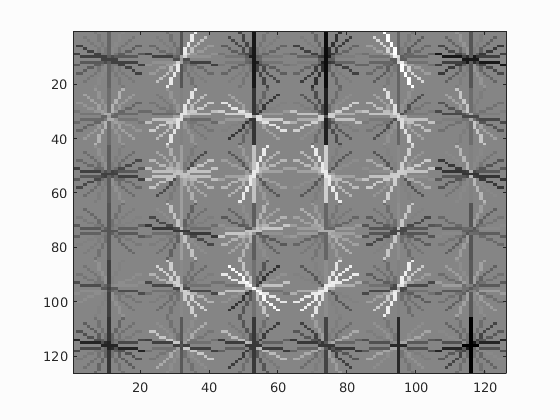

HOG features create a very convincing outline of a face. Edges that are

not at all face-shaped are given very negative weights. Vertical edges in

particular seem to be very unface-like.
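One way to check which edge orientations the SVM penalises is to fold the learned weight vector back onto the HOG cell grid. You can then total the positive and negative weights in each orientation bin. The sketch below assumes a hypothetical (cells, cells, orientations) layout with 6x6 cells and 9 unsigned bins. The real layout depends on the HOG implementation. A vertical edge produces a horizontal gradient, so which bin means "vertical edges" also depends on whether the extractor bins gradient or edge direction:

```python
import numpy as np

def weight_by_orientation(w, cells=6, orientations=9):
    """Total the positive and negative SVM weights per orientation bin.

    Hypothetical layout: the template is (cells, cells, orientations) in
    row-major order. Returns two arrays of length `orientations`.
    """
    grid = np.asarray(w, dtype=float).reshape(cells, cells, orientations)
    positive = np.clip(grid, 0, None).sum(axis=(0, 1))  # face-like evidence
    negative = np.clip(grid, None, 0).sum(axis=(0, 1))  # non-face evidence
    return positive, negative
```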

Subjective Performance

Best Matches

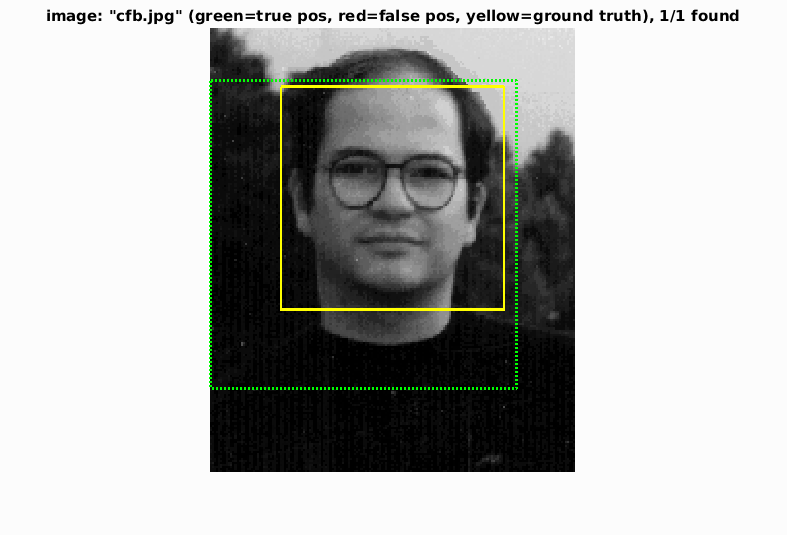

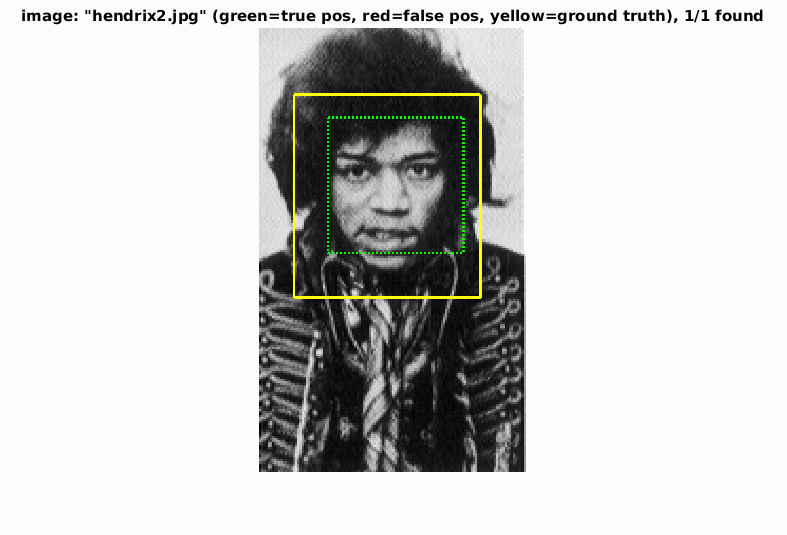

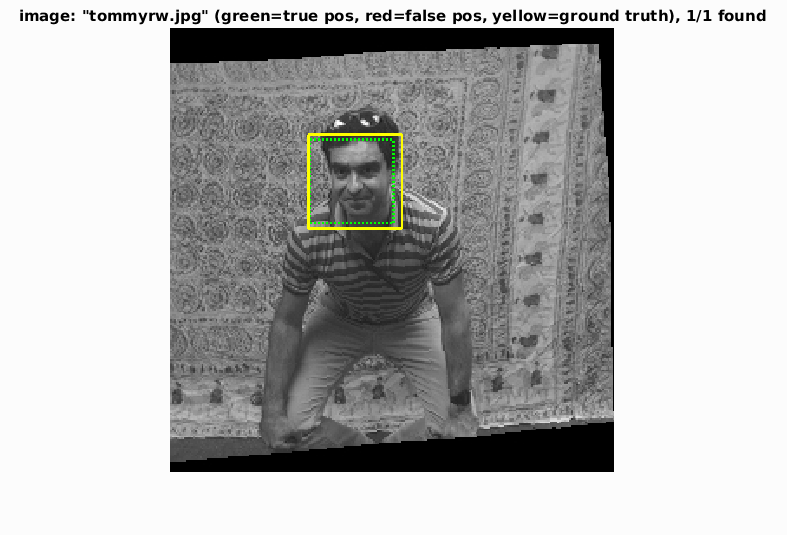

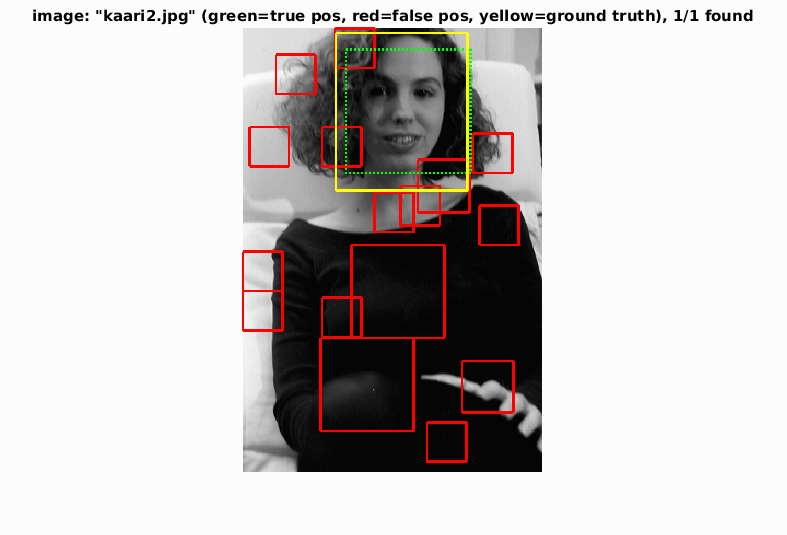

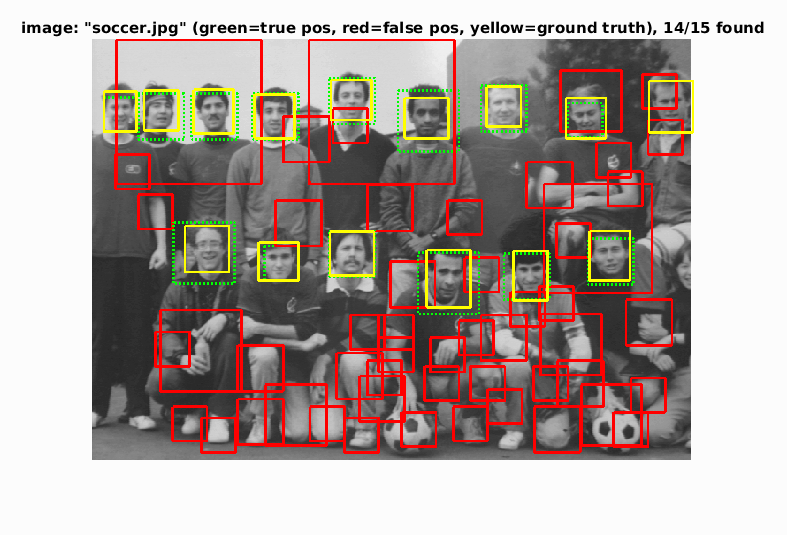

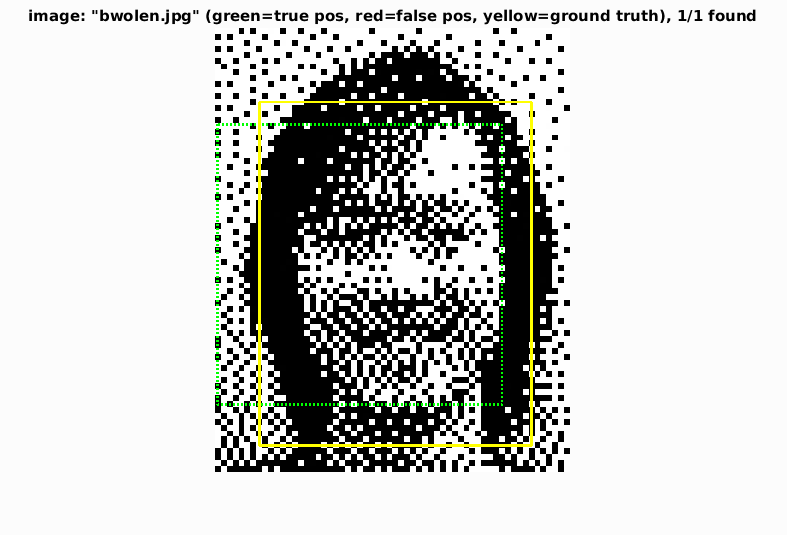

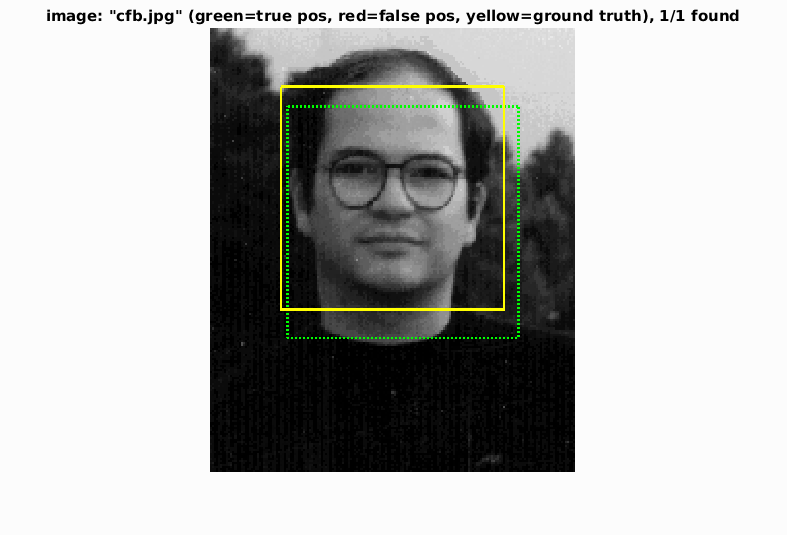

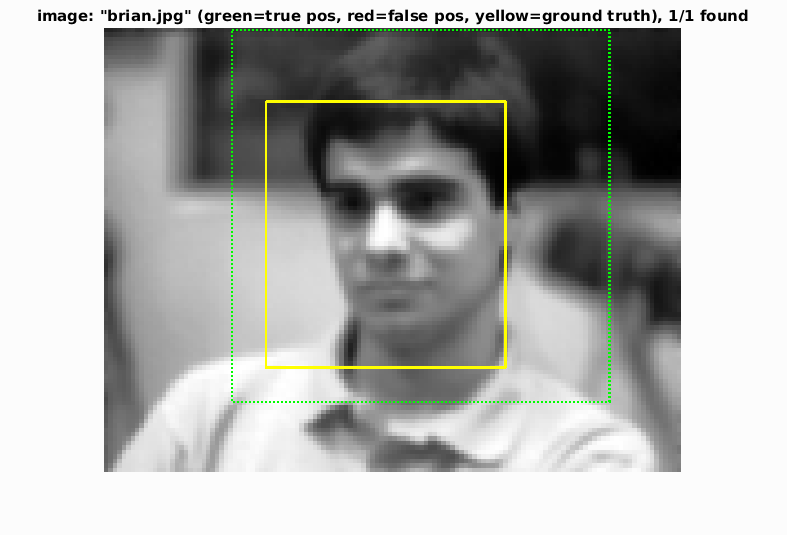

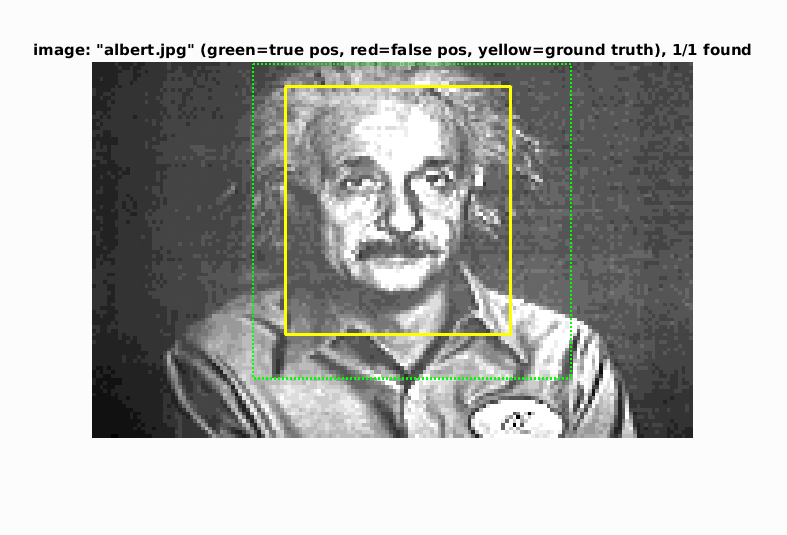

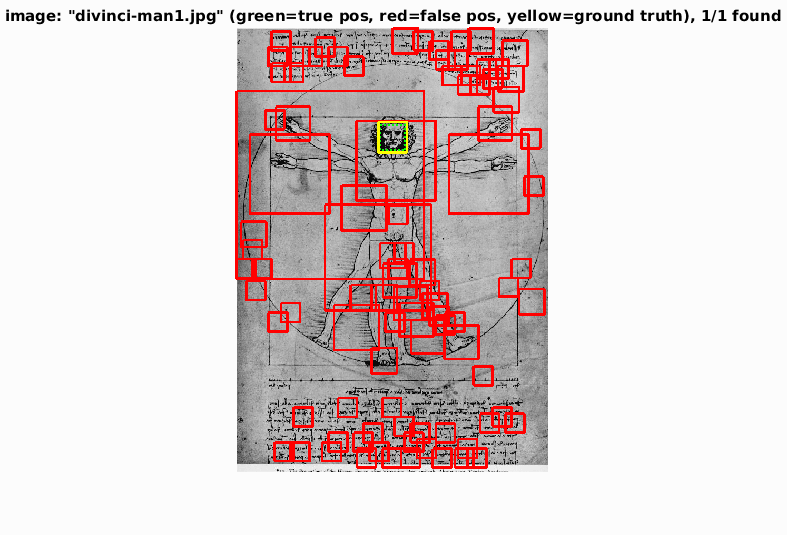

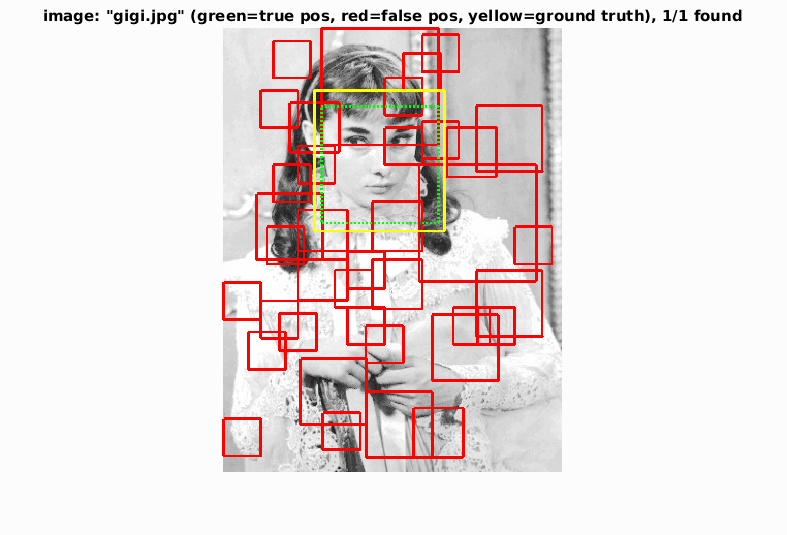

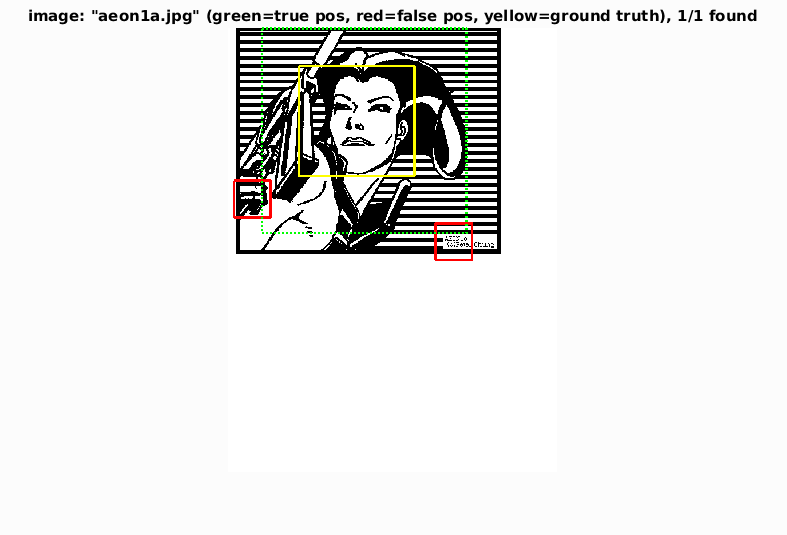

Holistically, some of the best matches were the images with a single face. This makes intuitive sense, since the training images were very similar to these.

Worst Matches

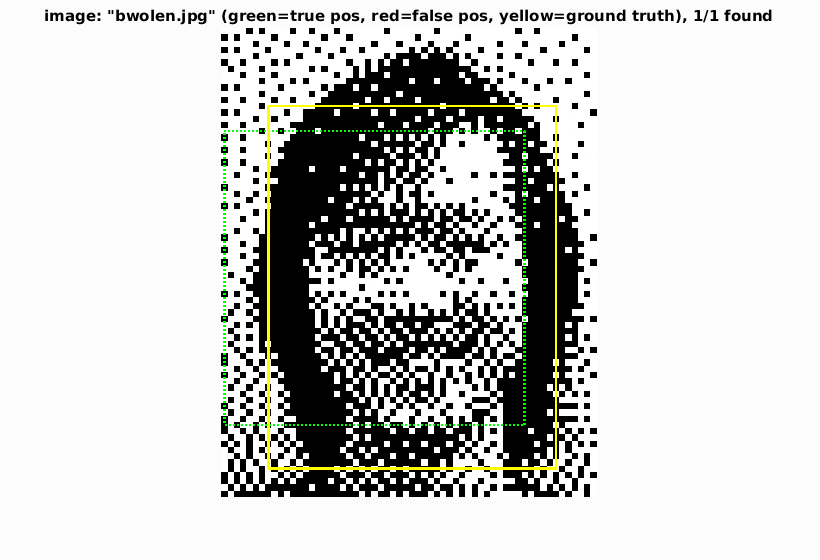

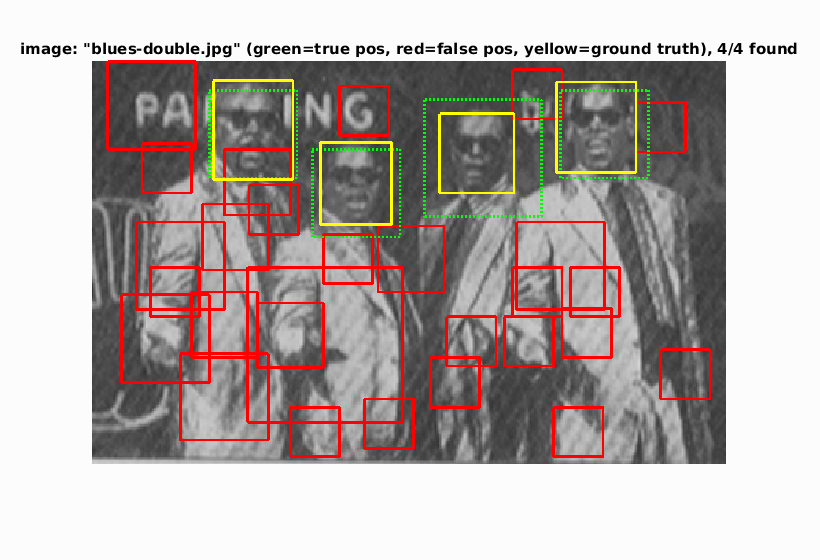

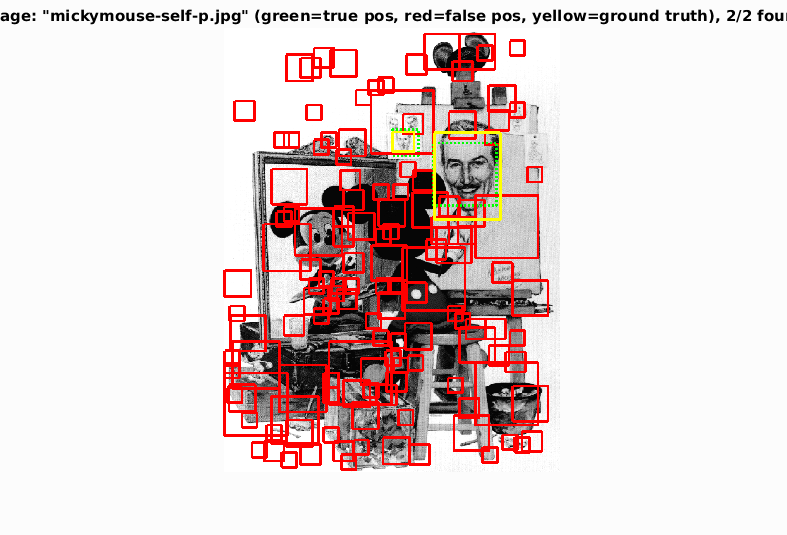

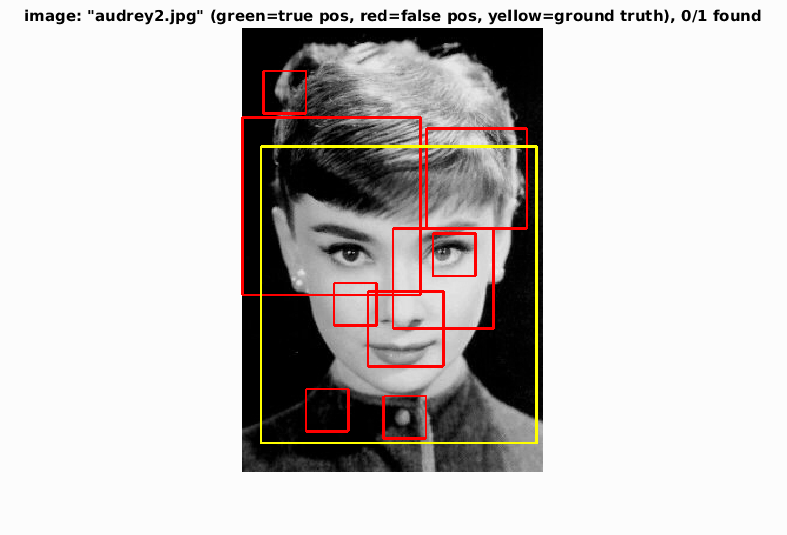

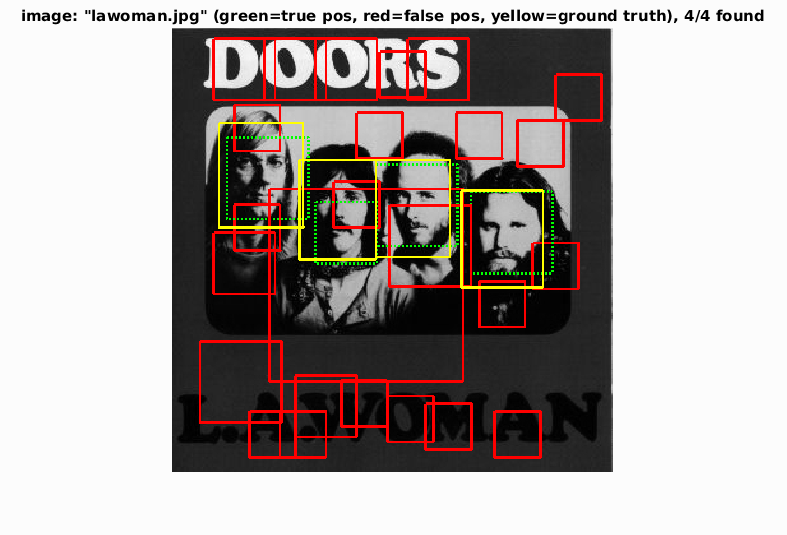

Noisy or busy images had a lot of false-positives. Sharp lines that correspond

to faces can fool the SVM. And unfortunately, despite the

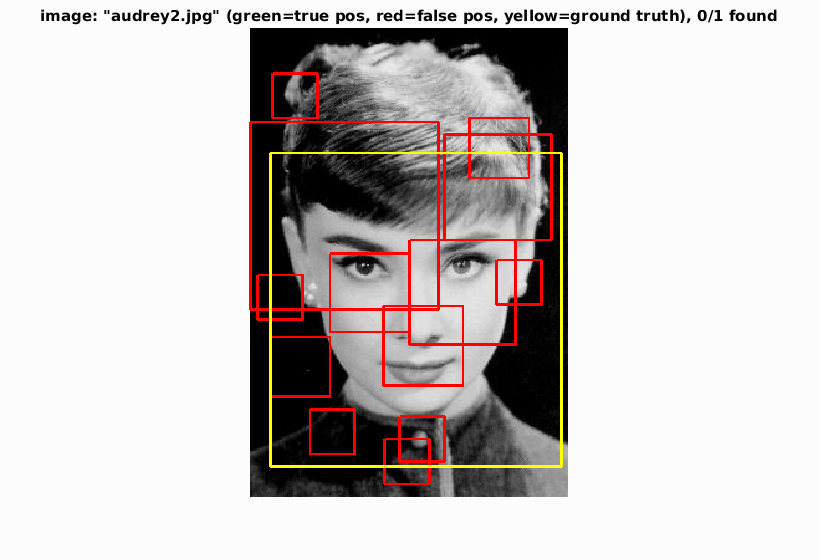

detector working well on single faces it still misses some. The picture of

Aubrey's face has been particularly challenging to find, partly because it is

a large image and requires a large scale detector.
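The scale problem can be made concrete with some arithmetic. At pyramid scale s, an image is shrunk by s. A face H pixels tall therefore appears H·s pixels tall, and it fills a T-pixel template when H = T/s. The smallest scale caps the largest face the detector can match. With a hypothetical 36-pixel template and 7 scales that each shrink by 0.8, the cap is about 137 pixels. Taller faces never shrink down to the template size:

```python
def largest_detectable_face(template=36, num_scales=7, scale_factor=0.8):
    """Tallest face (in pixels) that some pyramid level shrinks to template size.

    At scale s a face of height H appears as H * s pixels, so the smallest
    scale s_min bounds the largest match at template / s_min.
    Default values are hypothetical, not the project's settings.
    """
    smallest_scale = scale_factor ** (num_scales - 1)
    return template / smallest_scale
```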

Interesting Matches

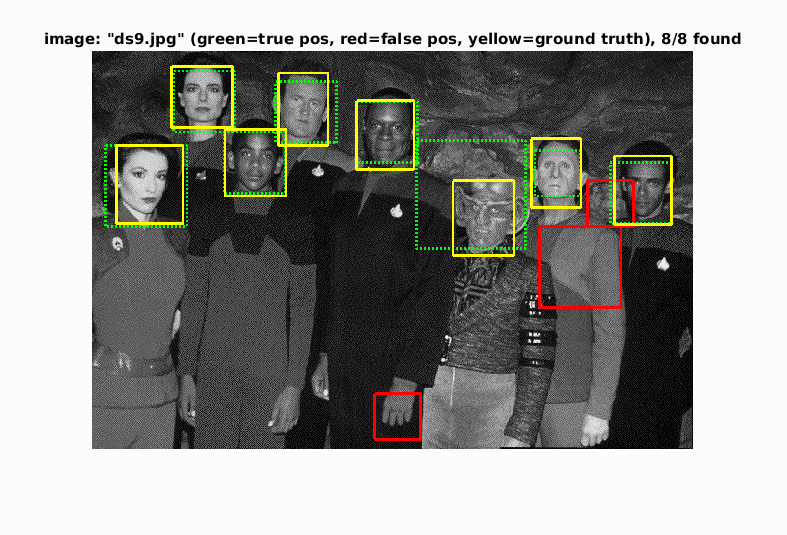

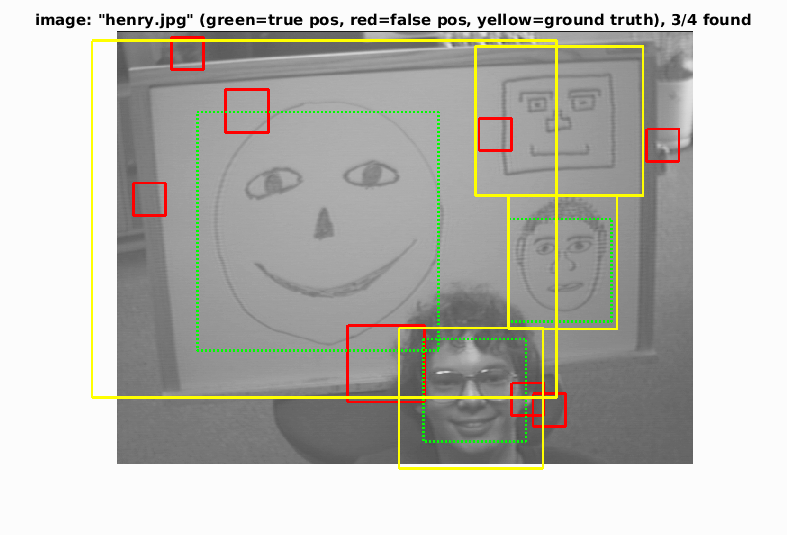

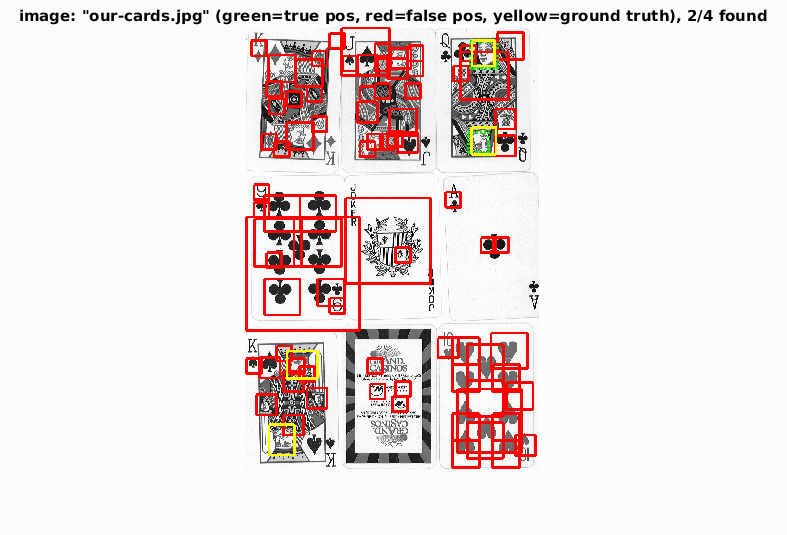

The Deep Space 9 image is interesting, because the face detector successfully

matches the alien's face. It is also good at detecting roundly drawn faces, but

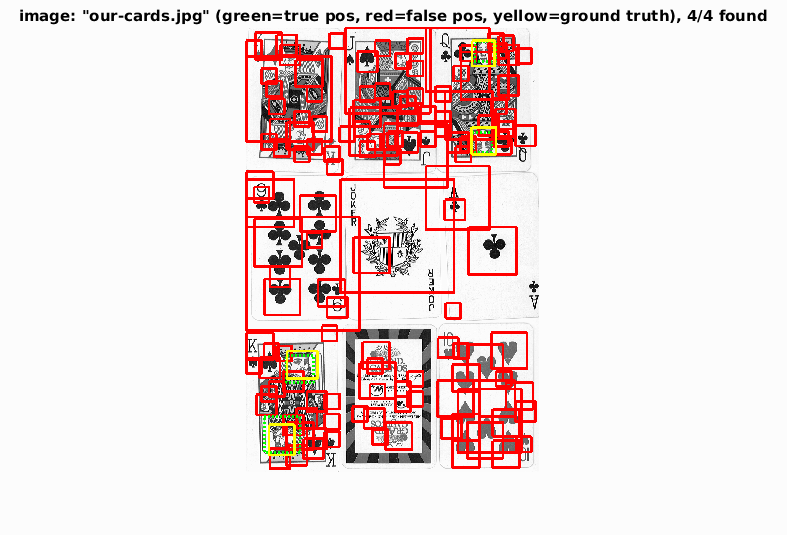

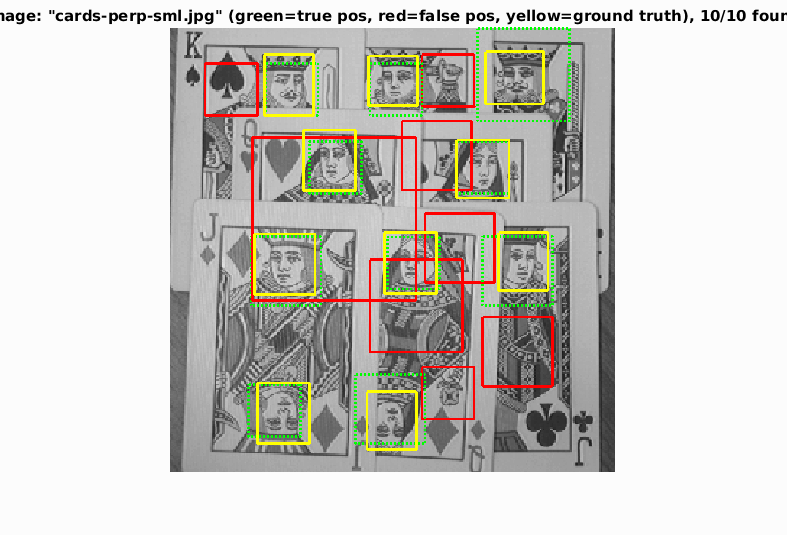

not square robots. In the cards picture it found the Queen's faces, but not

the King's. The detector also seems to almost perfectly detect letters as seen

in the Police album, despite being trained on faces.

Increasing the number of negative samples

By tripling the number of negative samples from 10,000 to 30,000, precision increased slightly.

Without glasses, the HOG features look kind of like a skull with its mouth open.

Decreasing the threshold

Keeping the number of negative training samples the same and decreasing the threshold from 0.0 to -0.2 raises the precision even more. This is likely because some faces score just below the SVM decision boundary. Lowering the threshold keeps those detections instead of discarding them as non-faces.
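The write-up does not say which metric the evaluation code computes. If the reported precision is average precision, the threshold effect can be reproduced with toy numbers. Detections below the threshold are discarded before ranking. A face scoring between -0.2 and 0.0 can therefore only count once the threshold is lowered. The following is a simple non-interpolated AP, not the project's evaluation code:

```python
import numpy as np

def average_precision(scores, is_face, num_faces, threshold):
    """Non-interpolated AP over detections scoring above `threshold`.

    Kept detections are ranked by score. Recall is measured against
    `num_faces`, the total number of ground-truth faces, so faces whose
    windows fall below the threshold never contribute.
    """
    scores = np.asarray(scores, dtype=float)
    is_face = np.asarray(is_face, dtype=bool)
    keep = scores > threshold
    order = np.argsort(-scores[keep])        # highest score first
    hits = is_face[keep][order]              # True where a real face is ranked
    tp = np.cumsum(hits)                     # true positives up to each rank
    precision_at_k = tp / np.arange(1, len(hits) + 1)
    # Average the precision at each rank where a true face is recovered.
    return float(precision_at_k[hits].sum() / num_faces)
```

As an illustration, take scores `[1.2, 0.4, -0.1, -0.15, -0.5]` for three true faces followed by two non-faces. AP is 2/3 at a threshold of 0.0 and 1.0 at -0.2, because the face at -0.1 is only counted once the threshold drops.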

Neural Networks

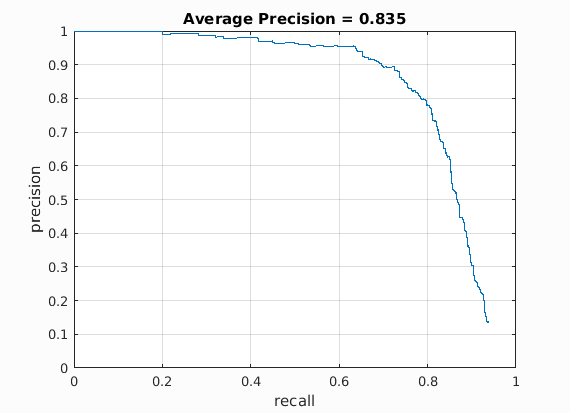

To compare the SVM to a non-linear classifier, I trained a feedforward neural network with two hidden layers of 100 and 10 nodes, respectively.
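A forward pass through the 100-10 architecture might look like the following. Only the hidden-layer sizes come from this write-up. The rest are assumptions: ReLU activations, a sigmoid output, He-style initialisation, and a 324-dimensional input (6x6 cells with 9 orientation bins). Training is omitted:

```python
import numpy as np

def init_mlp(input_dim, hidden=(100, 10), rng=None):
    """Random weights for input -> 100 -> 10 -> 1 (He-style init, assumed)."""
    rng = np.random.default_rng() if rng is None else rng
    sizes = [input_dim, *hidden, 1]
    return [(rng.standard_normal((m, n)) * np.sqrt(2.0 / m), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def mlp_face_probability(params, features):
    """Forward pass returning P(face) for each row of `features`."""
    x = np.asarray(features, dtype=float)
    for weights, bias in params[:-1]:
        x = np.maximum(x @ weights + bias, 0.0)  # ReLU hidden layers (assumed)
    weights, bias = params[-1]
    logits = (x @ weights + bias).ravel()
    return 1.0 / (1.0 + np.exp(-logits))  # sigmoid output (assumed)
```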

Objective Performance

Surprisingly, the neural network performed worse. I believe this could be due to insufficient training data: an SVM performs well on small amounts of data, but a neural network needs more to be effective. The neural network is also more prone to over-fitting, which could lower its accuracy on the testing set.
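Counting parameters helps quantify the data-hunger argument. Assume the same hypothetical 324-dimensional HOG input. The linear SVM then learns 325 numbers, while the 100-10 network learns 33,521. That is roughly a hundred times more, all fitted from the same training set:

```python
def svm_param_count(d):
    """A linear SVM learns one weight per feature plus a bias."""
    return d + 1

def mlp_param_count(d, hidden=(100, 10), outputs=1):
    """Weights plus biases for every fully connected layer."""
    sizes = [d, *hidden, outputs]
    return sum(m * n + n for m, n in zip(sizes[:-1], sizes[1:]))
```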

Subjective Performance

Best Matches

The best matches are still single faces. This is very likely because the testing data closely resembles the positive training data.

Worst Matches

These matches are particularly bad because of the large number of false positives. Once again, the Audrey Hepburn photo goes unrecognized; the scales I use are not large enough to capture it. In some of the pictures the network even identifies totally empty space as a face.

Interesting Matches

Interestingly, the neural network finds all of the faces in the cards picture... but also a lot of non-faces. It also recognizes drawn images, and in the "DOORS" text it finds nearly all of the letters.