Project 5 / Face Detection with a Sliding Window

In Project 5, we were asked to implement a version of the Dalal-Triggs detector, which in essence is a sliding-window object detector, applied here to faces. The implementation has three main steps, listed below.

1. Create positive and negative features.

2. Train an SVM classifier on the combined features.

3. Run test images through the SVM classifier to identify positive face detections.

Parts 1 and 2: Positive and Negative Features, and Training a Linear SVM Classifier

The first step in creating an accurate face detection system is to acquire a large number of training images. The training set needs both positive images (those that depict a face) and negative images (those that do not). From those training images, we need to compile a list of features. Increasing the number of samples can also increase the accuracy of the SVM, which in turn creates a more accurate detector. In this project, we used HoG (Histogram of Oriented Gradients) features. These HoG features summarize the gradient orientations within each cell of an image patch. Once we've compiled a large list of positive and negative HoG features, we can train a linear SVM. This SVM can then score whether a patch is or isn't a face. Below is the code that I used to compile HoG features.

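%Positive and Negative Features

hog = vl_hog(im2single(img), feature_params.hog_cell_size); % HoG tensor for one training image

vectorHog = reshape(hog, [1, dimension]); % flatten to a row vector; dimension must equal numel(hog)

features_pos(i, :) = vectorHog; % row i holds the features of the i-th positive image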
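The training step described above can be sketched roughly as follows. This is a minimal illustration and not the code from my submission: the feature matrices are small random stand-ins, `dimension = 8` and `lambda = 0.0001` are assumed values, and the call to `vl_svmtrain` only runs if VLFeat is on the path.

```matlab
% Synthetic stand-ins for the HoG feature matrices (one row per sample).
% In the project these rows would come from vl_hog, as in the lines above.
dimension = 8;                                  % hypothetical, tiny for illustration
features_pos = randn(20, dimension) + 1;        % fake "face" features
features_neg = randn(30, dimension) - 1;        % fake "non-face" features

% Stack both sets and label them +1 (face) and -1 (non-face).
X = [features_pos; features_neg];
Y = [ones(size(features_pos, 1), 1); -ones(size(features_neg, 1), 1)];

% Train only if VLFeat is available; vl_svmtrain expects one sample per column.
if exist('vl_svmtrain', 'file')
    lambda = 0.0001;                            % assumed regularization strength
    [w, b] = vl_svmtrain(X', Y', lambda);
    confidences = X * w + b;                    % one score per training sample
    fprintf('training accuracy: %.3f\n', mean(sign(confidences) == Y));
end
```

The sign of each confidence is the predicted label, so comparing it against `Y` gives a quick sanity check before moving on to the detector.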

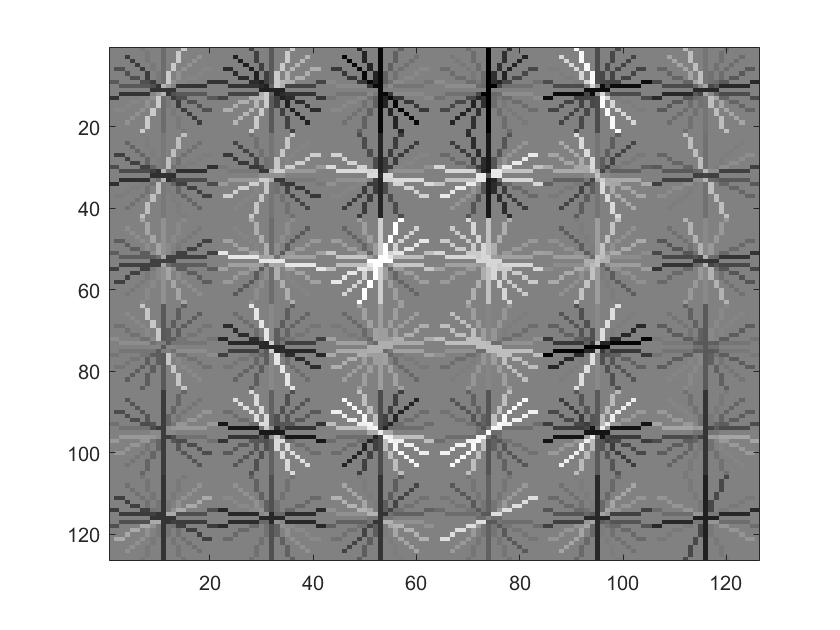

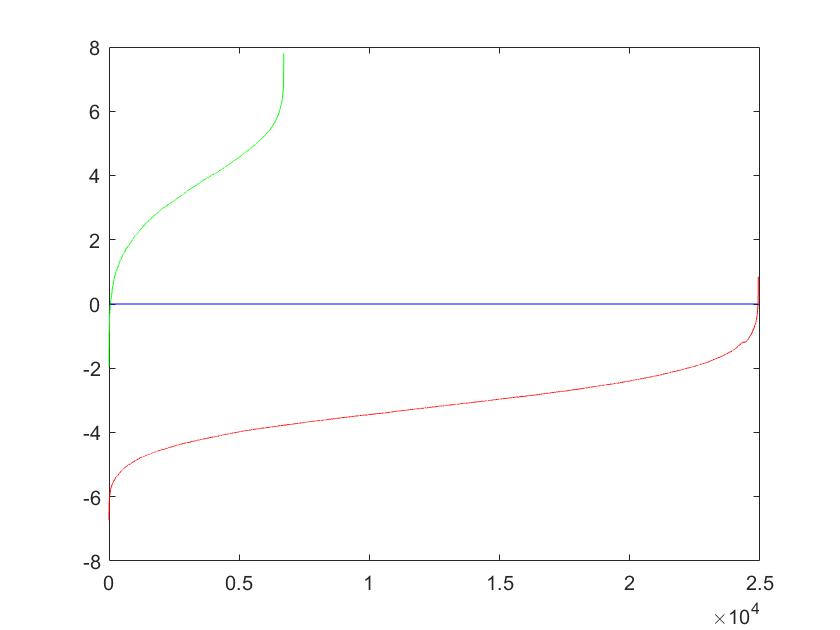

The figures above show my training confidences for both positive and negative features, and my HoG visualization.


Part 3: Run Detector for Test Images

After training the linear SVM classifier, we are ready to run test images through it to identify faces. In this project, we implemented a multiscale detector. The test image is scaled down, then transformed into a HoG tensor. The program then slides a window over the HoG tensor to find patches whose confidence exceeds a certain threshold. High confidences indicate faces, so these confidences and their respective windows are returned. This process repeats scale_count times, with the image scaled down again each time. Precision is directly related to three variables: HoG cellsize, threshold, and scale_count. A smaller cellsize, a lower threshold, and a higher scale_count generally give greater precision. A lower threshold also increases false positives, but this is inconsequential as long as the faces themselves are being accurately detected.

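%Face Detector and Sliding Window

patch = hog(j:j + (feature_params.hog_cell_size - 1), k:k + (feature_params.hog_cell_size - 1), :); % window of HoG cells whose top-left cell is (j, k)

vectorPatch = reshape(patch, [1, dimension]); % flatten in the same order as the training features

confidence = (w' * vectorPatch') + b; % linear SVM score for this window

bbox = ([k, j, k + (feature_params.hog_cell_size - 1), j + (feature_params.hog_cell_size - 1)] .* feature_params.hog_cell_size) ./ scale_factor^scale_count; % [x_min, y_min, x_max, y_max], converted from cells to pixels and mapped back to the original image scale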
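For context, the loop around the lines above might look roughly like the sketch below for a single scale. It is an illustration under assumptions, not my submitted code: the HoG tensor and the weights `w` and `b` are random stand-ins, `cells_per_window`, `hog_dims`, and `scale` are hypothetical names, and the cell-to-pixel mapping is a slightly different, one-based variant of the one shown above.

```matlab
% Stand-in values; in the project these come from feature_params and training.
hog_cell_size    = 6;
cells_per_window = 6;                      % window side length, in HoG cells
hog_dims         = 31;                     % channels per cell in vl_hog's default variant
threshold        = -0.5;
scale            = 0.7;                    % scale of this pass relative to the original image

hog = rand(10, 12, hog_dims, 'single');    % fake HoG tensor for one scaled image
dimension = cells_per_window^2 * hog_dims;
w = randn(dimension, 1);                   % fake SVM weights
b = 0;                                     % fake SVM bias

bboxes = zeros(0, 4);
confidences = zeros(0, 1);
for j = 1:size(hog, 1) - cells_per_window + 1          % rows of cells
    for k = 1:size(hog, 2) - cells_per_window + 1      % columns of cells
        patch = hog(j:j + cells_per_window - 1, k:k + cells_per_window - 1, :);
        vectorPatch = reshape(patch, [1, dimension]);
        confidence = (w' * vectorPatch') + b;
        if confidence > threshold
            % Convert cell indices to pixels, then undo the image scaling.
            x_min = (k - 1) * hog_cell_size + 1;
            y_min = (j - 1) * hog_cell_size + 1;
            x_max = (k - 1 + cells_per_window) * hog_cell_size;
            y_max = (j - 1 + cells_per_window) * hog_cell_size;
            bboxes(end + 1, :) = [x_min, y_min, x_max, y_max] ./ scale;
            confidences(end + 1, 1) = confidence;
        end
    end
end
```

Each kept window contributes one row to `bboxes` and one entry to `confidences`, so the two arrays stay aligned when the results from every scale are concatenated.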

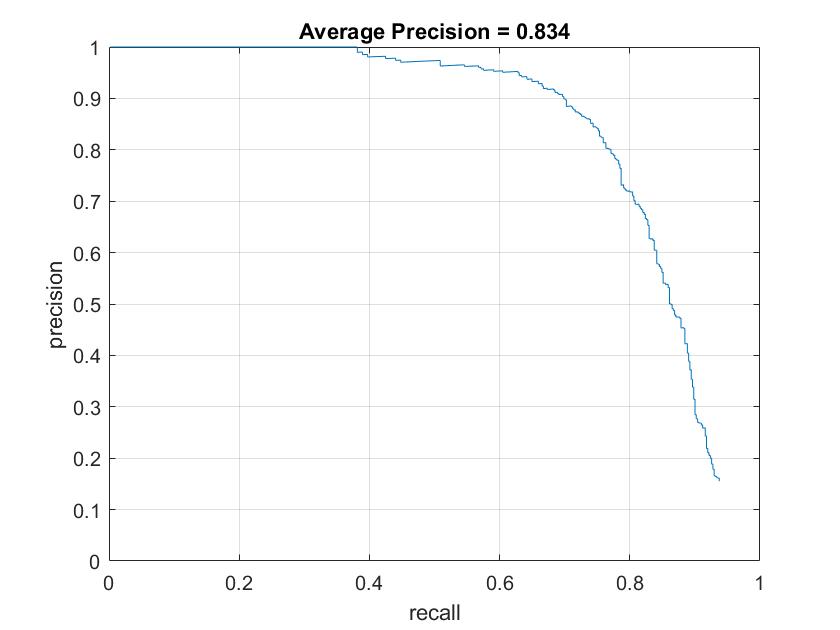

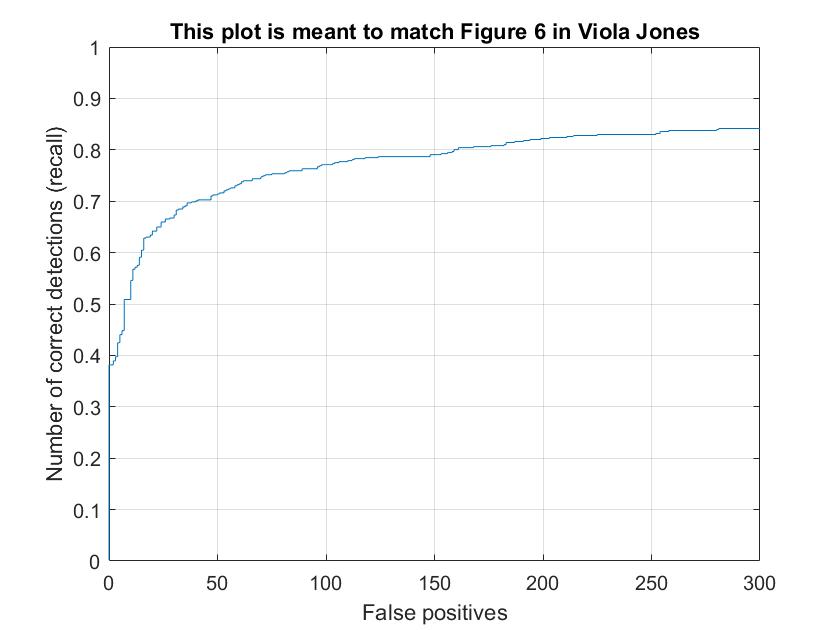

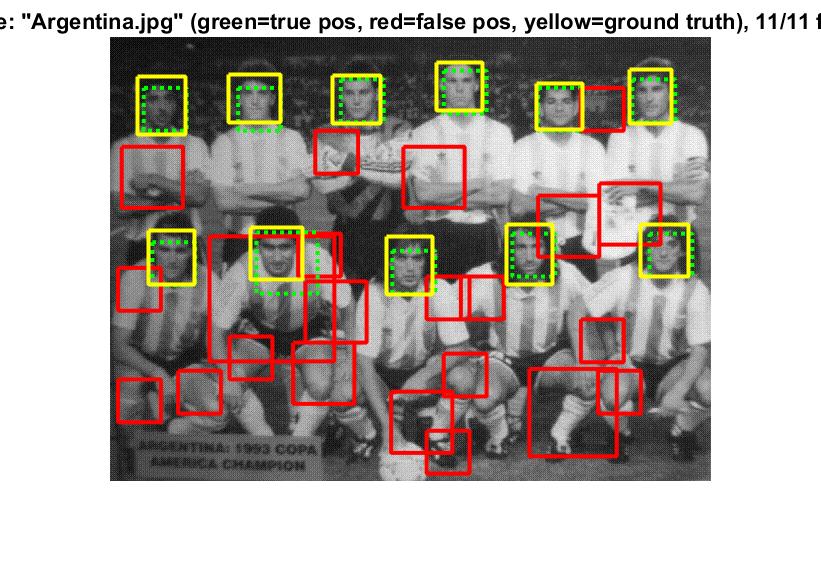

The figures above show the average precision of my multiscale detector, the false positives versus accurate detections, and an example test detection.


After extensive testing, I found that my accuracy stabilized at roughly 83% with the following parameters: cellsize = 6, threshold = -0.5, scale_count = 6, scale_factor = 0.7, and num_negative_samples = 25K.
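To get a feel for what these scale settings mean, the short sketch below works out the scale of each pass and how large the detection window is in the original image. It assumes that the window is 6 cells of 6 pixels (36 pixels) per side, and that the first pass is already scaled down once; both are readings of the description above, not confirmed details.

```matlab
scale_factor  = 0.7;
scale_count   = 6;
template_size = 36;     % assumption: 6 HoG cells x 6 pixels per window side

% Assumed schedule: pass s runs on the image resized by scale_factor^s.
scales = scale_factor .^ (1:scale_count);

% Side length, in original-image pixels, that one window covers at each pass.
window_in_original = template_size ./ scales;

for s = 1:scale_count
    fprintf('pass %d: scale %.4f, window covers about %.0f px\n', ...
            s, scales(s), window_in_original(s));
end
```

Under that reading, the windows cover faces from roughly 51 to 306 pixels on a side, which would explain why a higher scale_count helps on images with large faces.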