Project 5 / Face Detection with a Sliding Window

This project performs face detection with the sliding window approach in four components: getting positive features, getting random negative features, training a classifier, and running the detector (which also removes duplicate detections).

Get Positive Features

To get positive features, I iterated through the training image set and called vl_hog (which produces a SIFT-like feature representation) on each image, passing in the image as type single (32-bit floating point) and the provided cell size. After running HOG, I reshaped the HOG features into a 1 x d vector and stored it as a row of the features_pos matrix. Since I used the default vl_hog parameters, d = (templateSize/cellSize)^2 * 31. Other than this, I made no major design decisions.
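As a quick sanity check on the formula for d, here is a minimal Python sketch. The function name and the example values (a 36-pixel template with 6-pixel cells) are assumptions chosen for illustration, not values stated in this report; the 31 channels per cell come from the default vl_hog setting mentioned above.

```python
def hog_feature_dim(template_size: int, cell_size: int, channels: int = 31) -> int:
    """Length of a flattened HOG descriptor for a square template.

    Assumes the template side is an exact multiple of the cell size,
    so the template covers (template_size / cell_size)^2 whole cells.
    """
    if template_size % cell_size != 0:
        raise ValueError("template_size must be a multiple of cell_size")
    cells_per_side = template_size // cell_size
    return cells_per_side ** 2 * channels


# Hypothetical values: 36-pixel template, 6-pixel cells.
print(hog_feature_dim(36, 6))  # 6 * 6 * 31 = 1116
```

Halving the cell size quadruples the number of cells, and therefore d, which is why smaller cells make both training and detection noticeably slower.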

Get Random Negative Features

The intention of this part is to collect non-face patches from the images so that the classifier learns what is not a face. The algorithm iterates through the non-face image set, randomly picks boxes of dimensions templateSize x templateSize, and runs HOG on each patch. Before running HOG, each image is converted to grayscale. The only design decision I made was to take num_samples/num_images samples from each image. I previously tried taking num_samples from each image, but it was too slow and the patches overlapped.
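The sampling step can be sketched in Python as follows. This only illustrates how the patch locations might be chosen; the function names, the rounding up of num_samples/num_images, and the zero-based coordinates are assumptions, and the HOG computation itself is left out.

```python
import math
import random


def samples_per_image(num_samples: int, num_images: int) -> int:
    """Patches to draw from each image (rounding up is an assumption)."""
    return math.ceil(num_samples / num_images)


def sample_patch_corners(img_h, img_w, template_size, n, rng):
    """Return n random (top, left) corners of template-sized patches.

    Coordinates are zero-based. Images smaller than the template
    yield no patches.
    """
    if img_h < template_size or img_w < template_size:
        return []
    corners = []
    for _ in range(n):
        # randint is inclusive on both ends, so the patch always fits.
        top = rng.randint(0, img_h - template_size)
        left = rng.randint(0, img_w - template_size)
        corners.append((top, left))
    return corners


rng = random.Random(0)  # fixed seed so the sketch is repeatable
n = samples_per_image(10, 4)
corners = sample_patch_corners(100, 120, 36, n, rng)
```

Each corner defines the patch rows top to top + template_size - 1 and columns left to left + template_size - 1, which would then be cropped and passed to HOG.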

Classifier Training

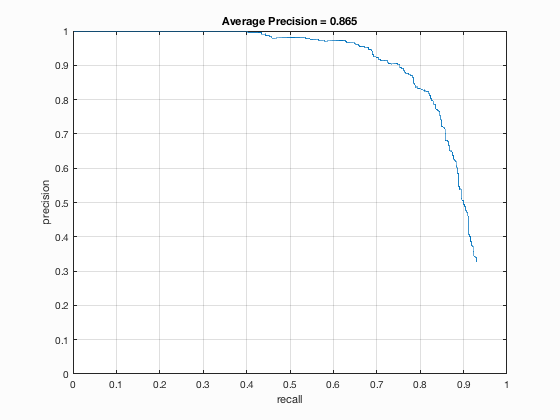

This part of the project uses a linear SVM, which works similarly to the one in the last project. Again, the regularization constant, lambda, has to be tuned to balance the bias-variance tradeoff. I chose lambda = 0.0001 because it gave me the best result: the false positive rate was 0.0003 for lambda = 0.001 and 0.0001 for lambda = 0.0001. Moreover, the precision was 0.864 for lambda = 0.0001, compared with 0.861 for lambda = 0.001.

Run Detector

First I obtained the scales by starting at k = 1 and repeatedly multiplying k by 0.9 until I reached a scale of 0.2. I previously tried multiplying by 0.7, but the results were better with 0.9. For each image in the test set and for each scale, I ran HoG on the rescaled image with the provided cell size. I then constructed a feature matrix of size (templatesX*templatesY, d), where each row holds the HoG features of one template-sized window. Afterwards, I suppressed non-maximum values using a threshold of 0.75 (I also tested 0.6, 0.8, and 0.9 and determined 0.75 to be the best), constructed the bounding box of size templateSize x templateSize for each remaining detection, and appended it to the cur_bboxes array.
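The scale schedule and the mapping from a detection window back to image coordinates can be sketched in Python as below. The function names are hypothetical, indices are zero-based, and treating 0.2 as an inclusive lower bound is an assumption about where the loop stops.

```python
def build_scales(start=1.0, step=0.9, min_scale=0.2):
    """Scales start, start*step, start*step^2, ... that stay >= min_scale."""
    scales = []
    k = start
    while k >= min_scale:
        scales.append(k)
        k *= step
    return scales


def window_to_bbox(row, col, scale, cell_size, template_size):
    """Map a window in the HoG grid of a rescaled image to a box in the
    original image, as (x_min, y_min, x_max, y_max).

    (row, col) is the zero-based HoG cell at the window's top-left corner.
    Dividing by the scale undoes the resizing, so a window found at
    scale 0.5 covers a box twice as large in the original image.
    """
    x_min = col * cell_size / scale
    y_min = row * cell_size / scale
    side = template_size / scale
    return (x_min, y_min, x_min + side, y_min + side)


print(len(build_scales(step=0.9)))  # 16 scales
print(len(build_scales(step=0.7)))  # 5 scales
```

Under these assumptions, a step of 0.9 searches 16 scales while 0.7 searches only 5, so the finer schedule is less likely to miss a face whose size falls between two scales, at the cost of more HoG computations per image.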

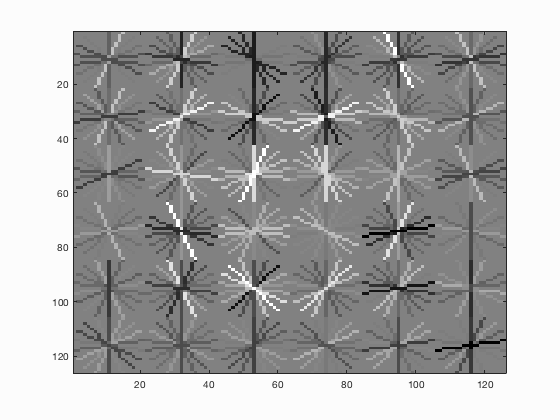

Face template HoG visualization. This looks like a face as seen from the chin and nose.

Precision Recall curve for the starter code.

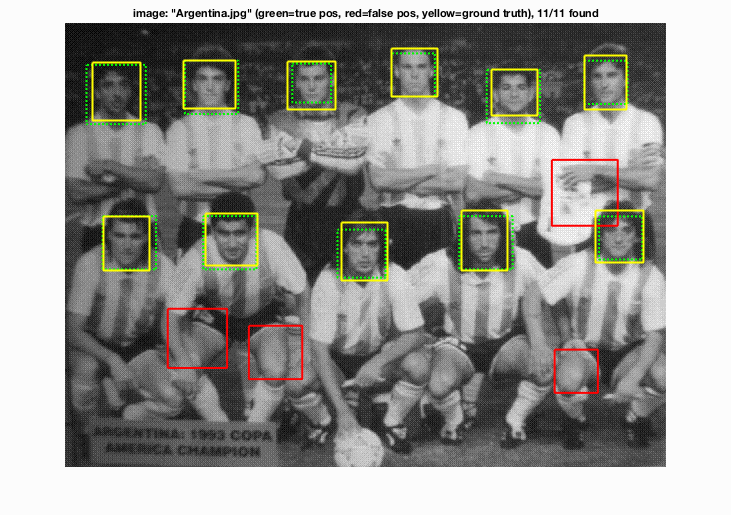

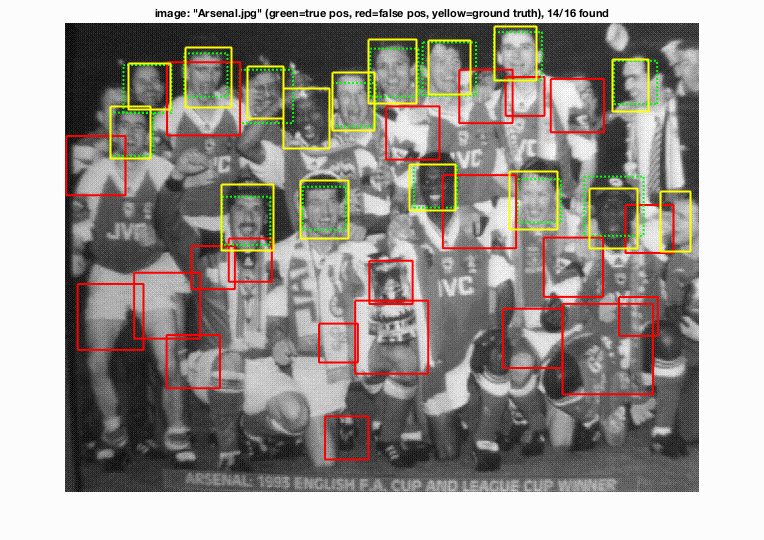

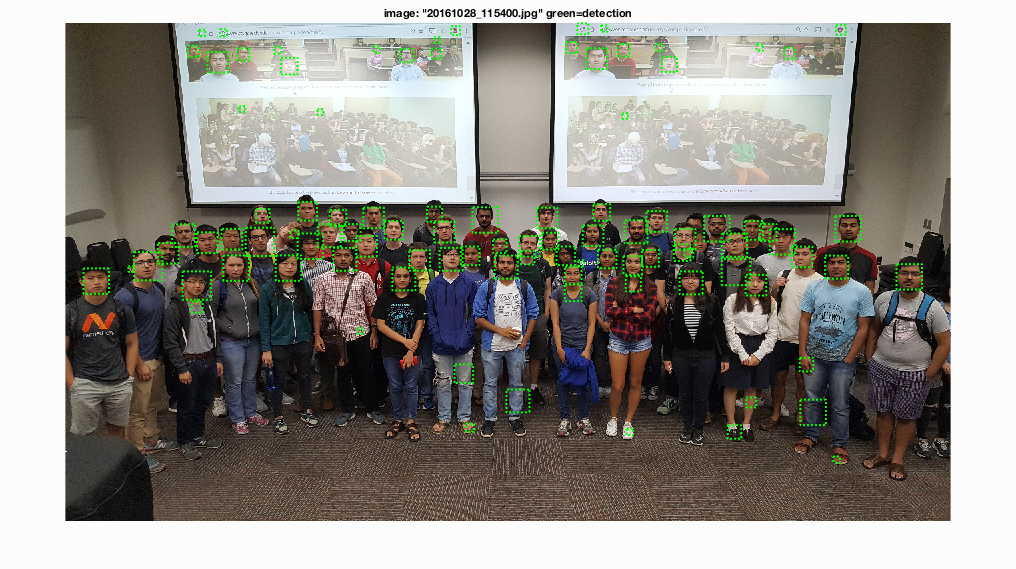

Example of detection on the test set and EXTRA SET from the starter code.