Project 5 / Face Detection with a Sliding Window

1. My implementation

1.1 Basic functions

- positive and random negative features extration

- SVM training

- multi-scale detector

1.2 Extra/graduate functions

- Logistic regression classifer

- hard-negative mining

1.3 Quick performance preview

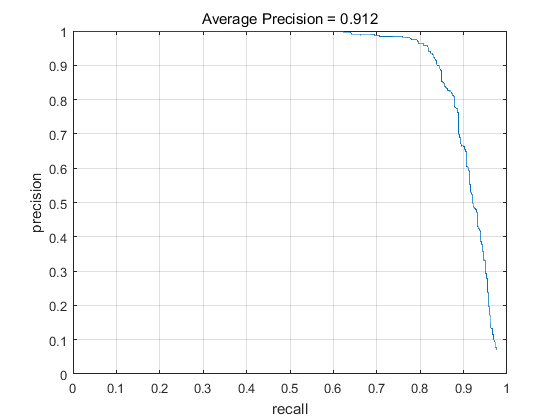

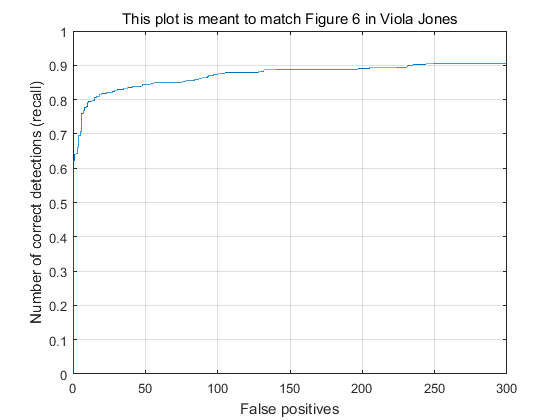

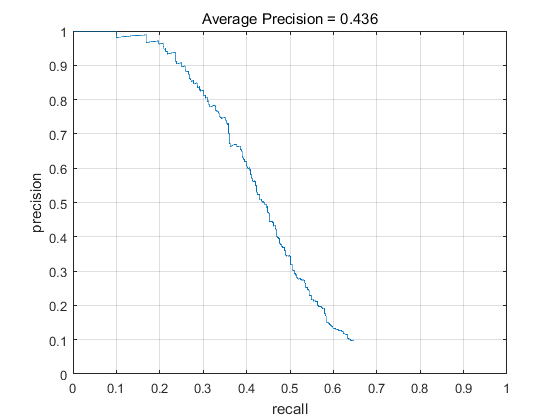

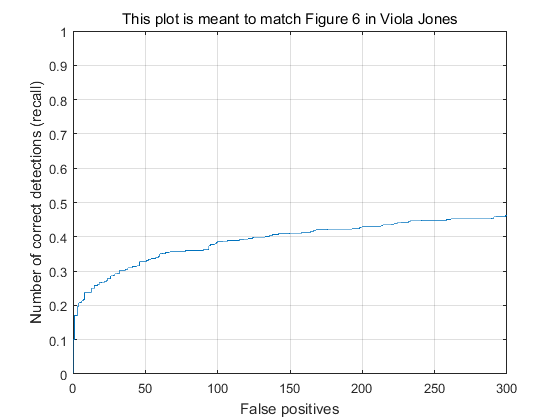

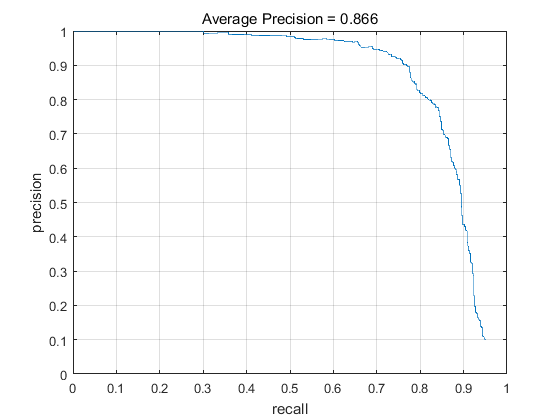

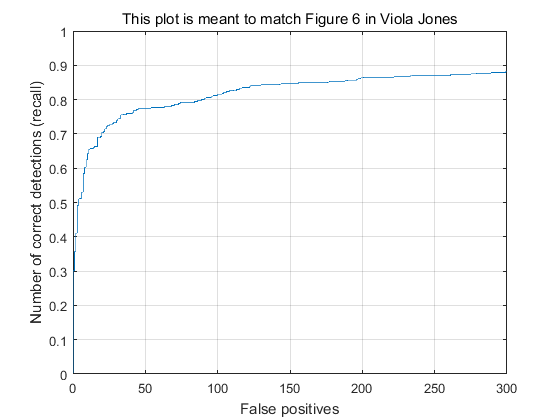

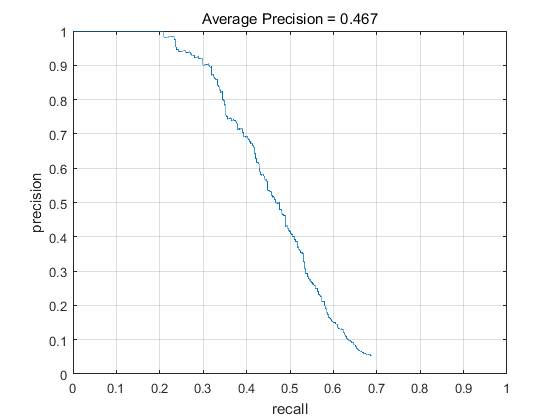

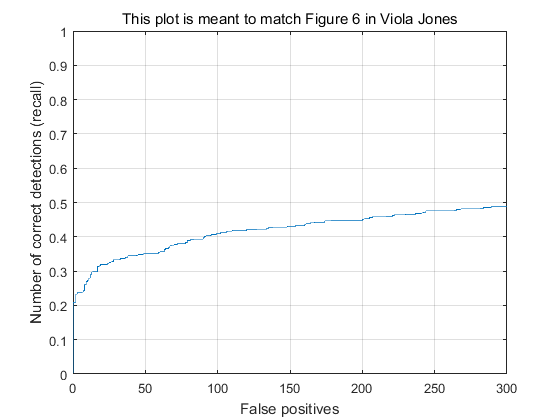

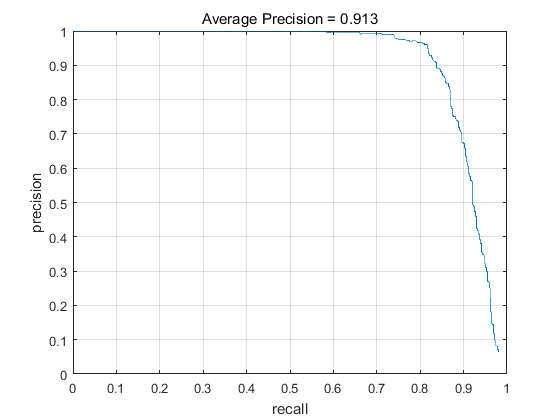

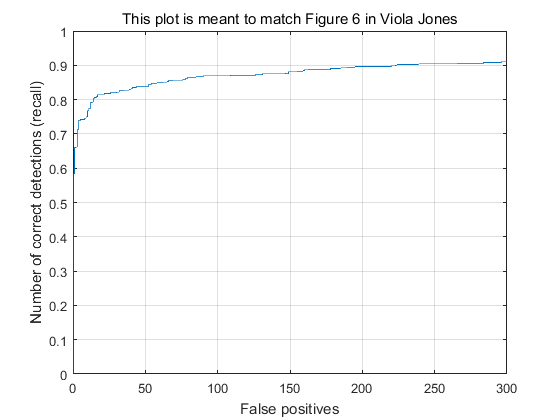

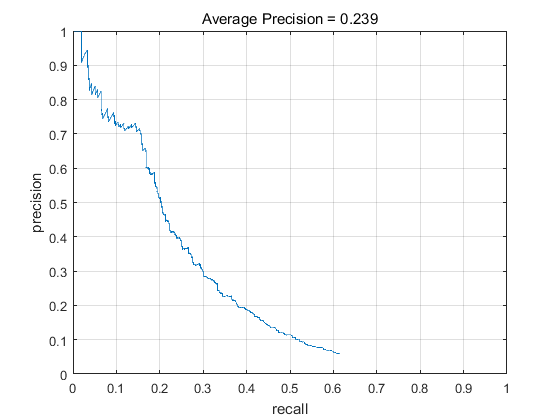

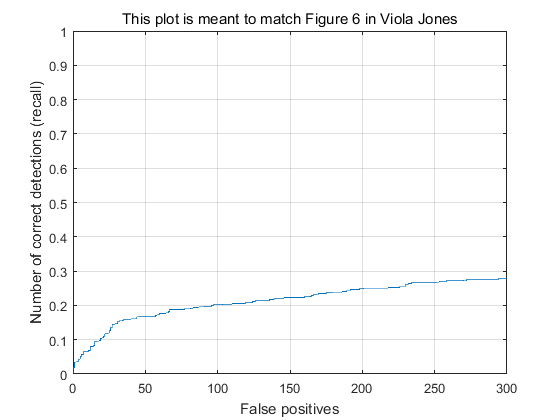

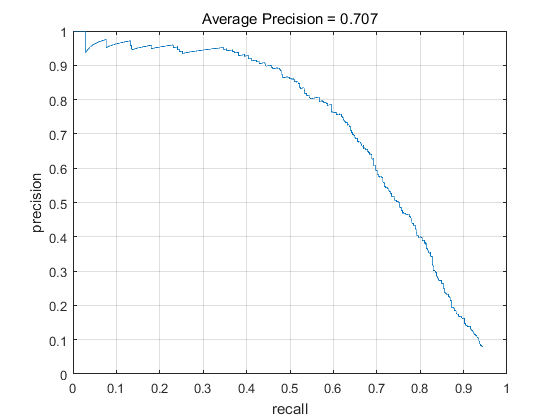

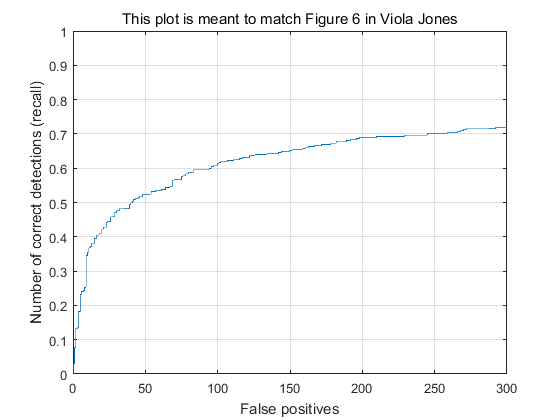

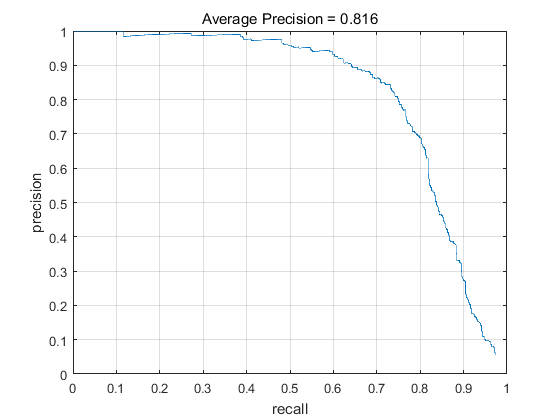

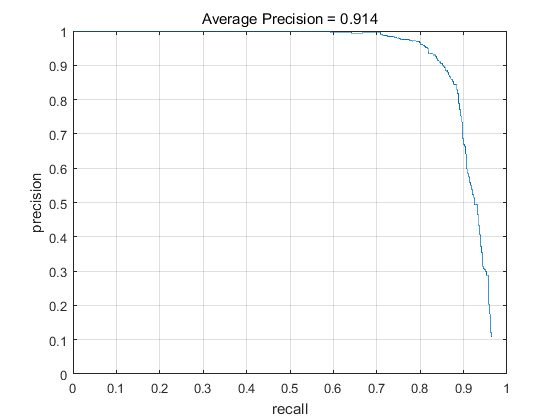

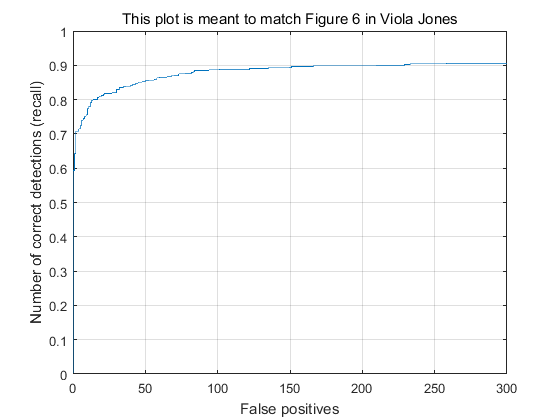

Similar as the expection provided in the bottom of proj5.m, my implementation would have 27-35% accuracy with single layer/cell size 6/SVM classifer. ~85%, with multiscale detector. ~92% with hog cell_size 3. Hard-negative mining might cause complicated effect. Logistic regression would decrease 10% accuracy, which actually make sense.

2. Some implementation details

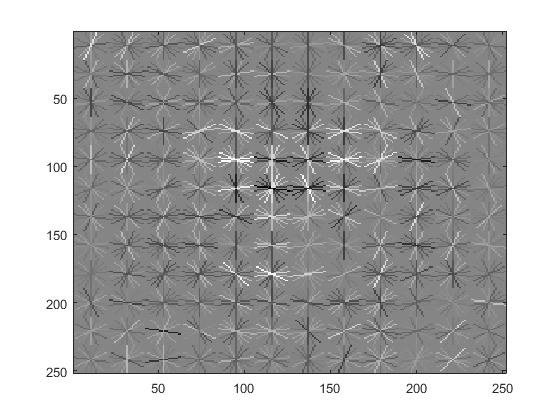

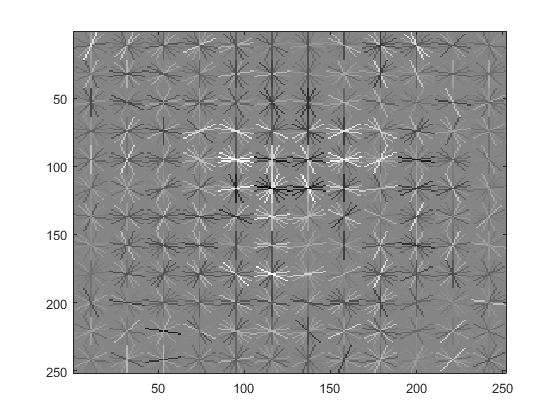

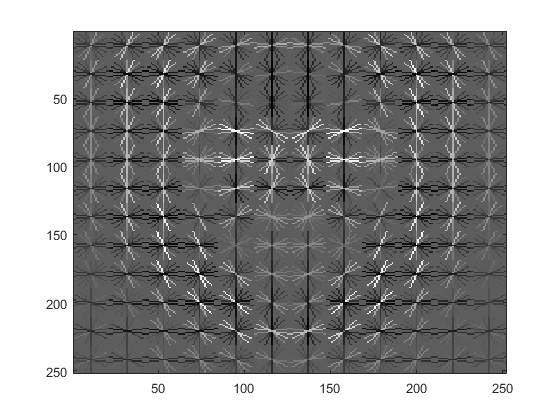

2.1 get_positive_features

The code basically loads the provided images, with RGB/grayscale check, transfers them to single type, and then feeds them to vl_hog with provided cell_size parameter to get features.

2.2 get_random_negative_features

Similar to get_positive_features just with two differences. The first one is the function would first sample the provided negative images(after converted to hog representation) with replacement based on the provided number of negative features needed.The second one is the function would randomly select a hog patch as negagive feature according to the provided template_size parameter.

2.3 SVM

First, pool the positive and negative features together and generate corresponding +1/-1 label vector. Then feed the features and labels to vl_svmtrain function. Different regularizer were tested and choose 0.00001 since it would produce zero false negative and false positive samples in the following classifier testing block.

2.4 Logistic regression

Logistic regression is also one kind of classical classifier. Basically, based on its objective function, my implementation would calculate the gradients based on current parameters and update the parameters according to their gradients. Since the data set is large, I implemented the mini-batch stochastic gradiet descent version, which first divide the samples into small batchs with size 100.Each time, it would updata the gradients based on the current one small batch. After all samples are calculated, the algorithm would iterate from the begining, and finally the algorithm should iterate 100 times of all samples. So the total number of descent steps is about (16000 / 100) * 100.Training time would be ~20 seconds for 16713 samples with 1116 dimension features.

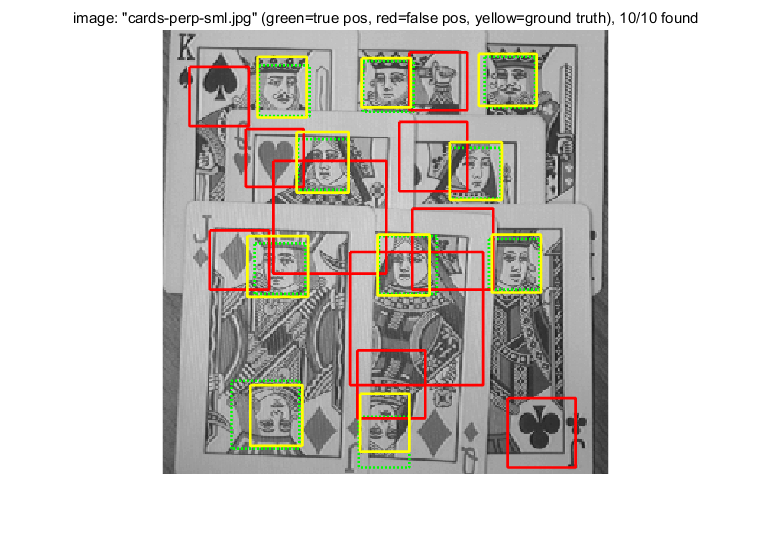

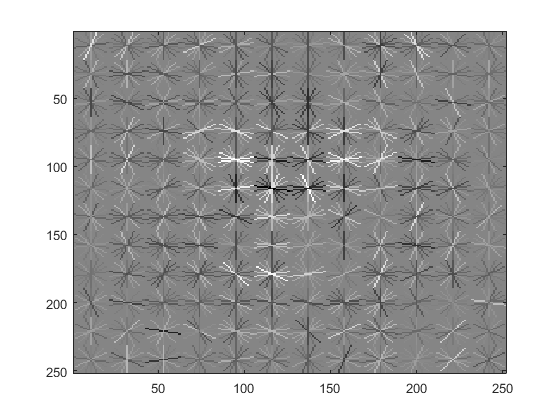

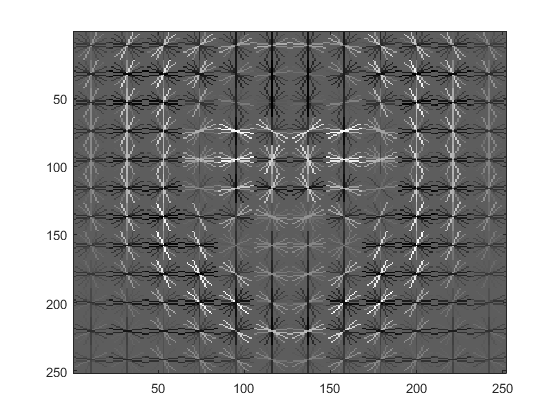

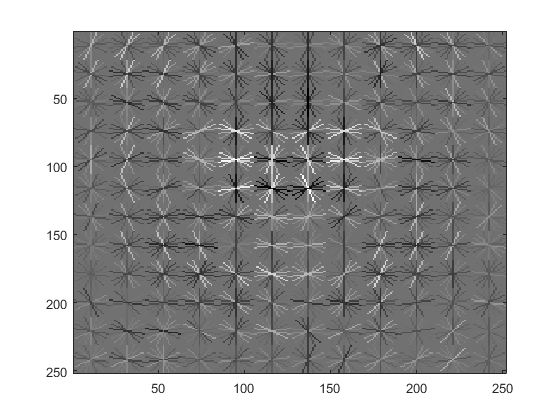

2.5 run_detector

Baseline: for each provided image, the code will convert it to HOG version, then iterate the hog representation with a window size of template_size/cell_size number. For each window, it will calculate the corresponding confidence and compare it to threshold.If the windows is acceptable, the code will calculate the corresponding coordinates of the window in the original image, and add all these information to cur_bboxes, cur_confidences, etc. Multiscale: the code also provide a parameter called num_scale, which is the number of scales the code should should calculate. If it is larger than 1, for each scale, the algorithm would resize the image using imresize with bilinear option. The down-sampling ratio could be specified by parameter down_sampling_ratio; Hard-negative mining: before entering detection of the test image, the implementation would first perform hard-negative mining to check the hard negative samples. The algorith would sample using the above get_random_negative_features to extrat features from a non-face path, then detect whether the predicted labels are positive. For those with positive predicted labels, add them to the previous generated and pooled all features. Considering computational complexity, here I the get_random_negative_features function to extract the negative features rather than using the very fine multi-scale sliding window implementation. Considering that when cell_size is small, feature dimension largely increases which incrase the models VC dimension and would cause over-fitting. So I test a large regularizer 0.01 whereas the previous one is 0.00001. As expected, the performence improved.

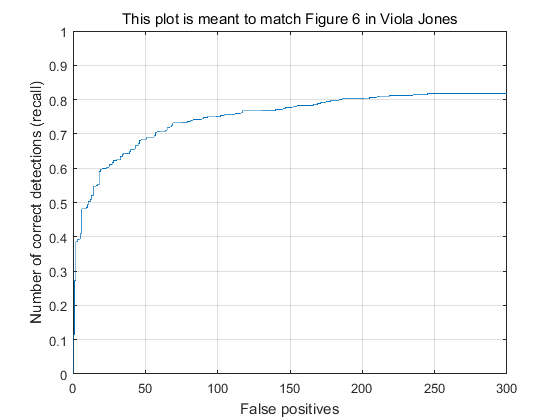

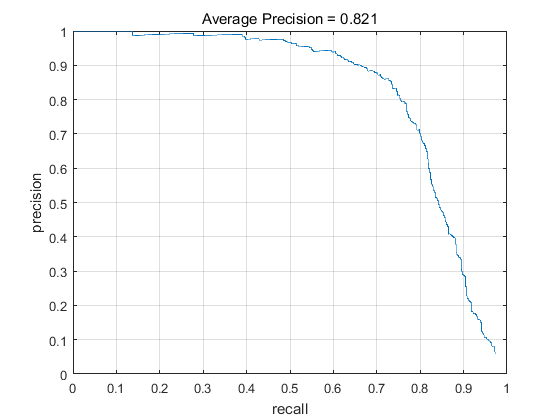

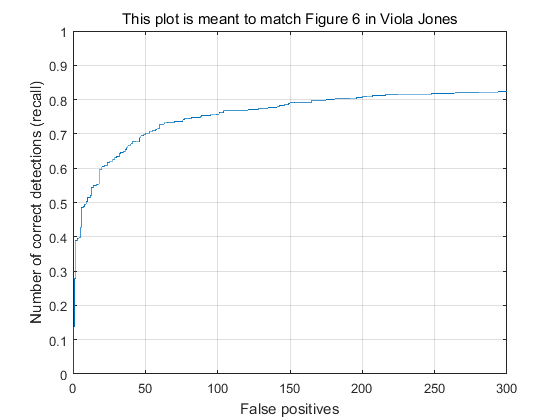

3. Performance evaluation

Here I tested a series of implementations.

|

|

|

|

|

|

|

|

|

|

Discussion: both multiscale and small cell size are crucial to precision. Interestingly, before implementing multiscale, the improvement of fine cell_size is very limited. It suggests that sometimes combining different methods, or runing certain methods on different platforms might get substantial improvement. I am not very superise that the logistic regression implementation does not perform very well. Before the emergence of deep learning, the beautiful SVM large margin classifier and ensemble methods, especially, Boosting are the dominating algortihms that have super high accuracies. Generally, a logistic classifier with 10% accuracy less than SVM is reasonable Hard negative mining caused negative effect for the SVM version but did give some improvement of the Logistic regression version. This also is another example of the importance of platform when testing a certain algorithm.