Project 5 / Face Detection with a Sliding Window

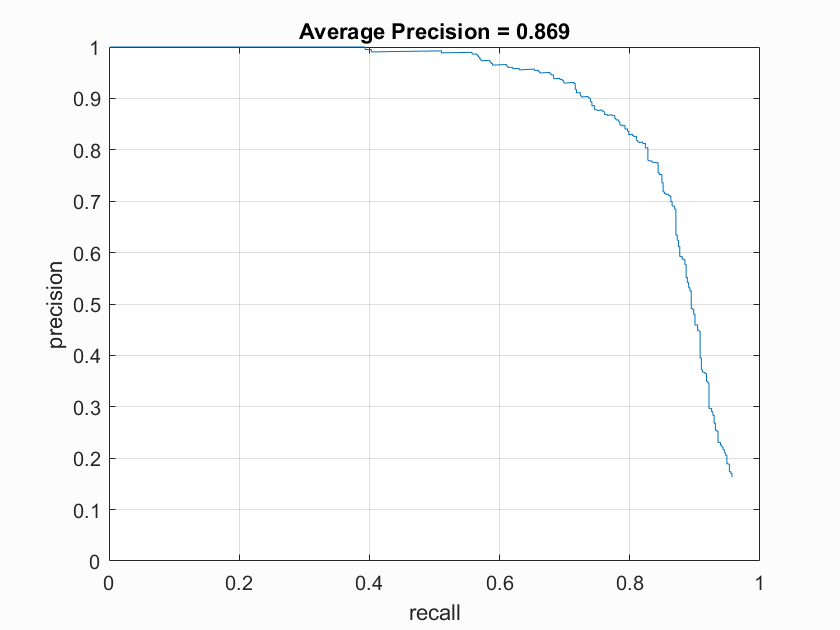

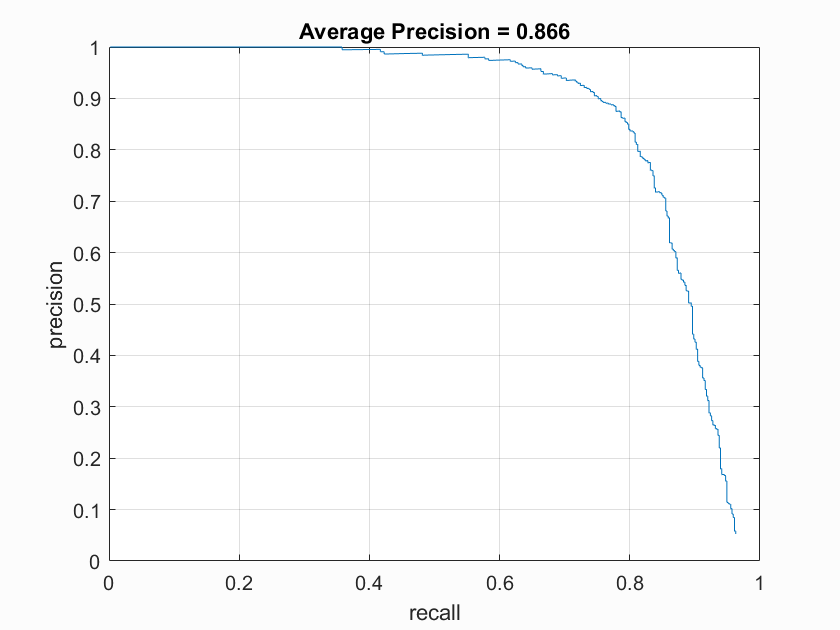

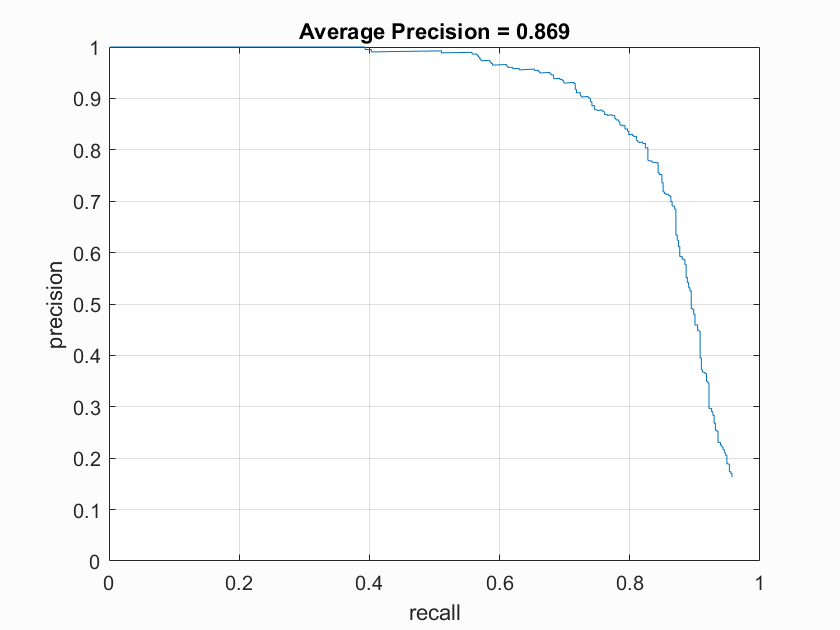

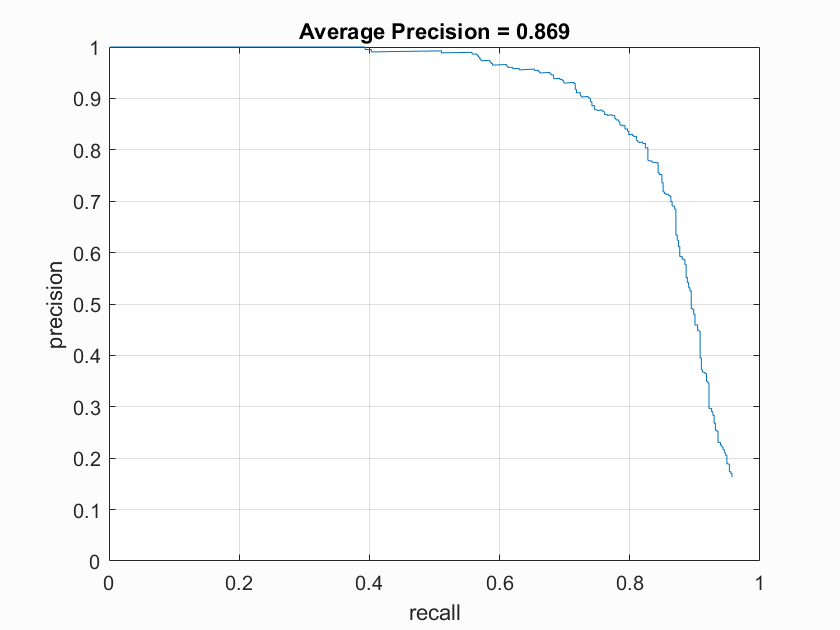

Final average precision.

For my face detection project I implemented HOG feature generation using vl_hog, trained a linear face classifier with vl_svmtrain, built a sliding window detector that works at multiple scales, and added hard negative mining to reduce the number of false positives. This write-up is organized as follows:

- Feature generation and classifier training

- Sliding window face detector

- Hard negative mining

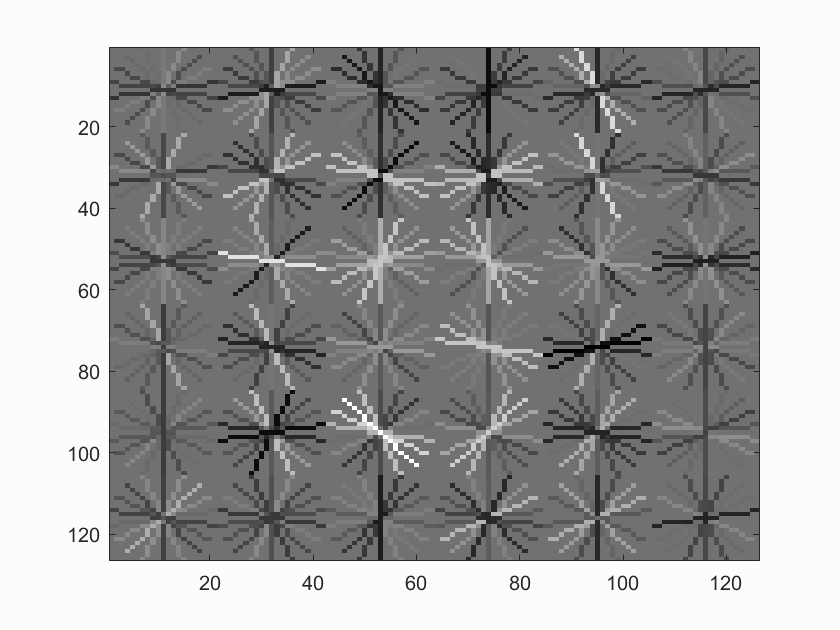

Feature generation and classifier training

For the positive features I simply called vl_hog on each face image and returned the vectorized result. Since these images were already the proper size, no other processing was needed. For the negative features I used randsample() to pick random x and y coordinates in each negative training image and cut out squares of size feature_params.template_size at those positions. Given an overall limit of num_samples negatives, I took num_samples / num_images samples from each image. Finally, to train the linear classifier I called vl_svmtrain. The training data was the positive and negative features concatenated together, with label 1 for the positive features and -1 for the negative features.
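The negative sampling step can be sketched in plain Python as below. This is an illustration, not the project's code: `sample_negative_crops` and `training_labels` are hypothetical names, each image is described only by its `(height, width)`, and `random.Random.sample` stands in for MATLAB's `randsample` (both draw without replacement along each axis). Computing HOG on each crop is left out, since that step is `vl_hog` in the project.

```python
import random


def sample_negative_crops(image_sizes, num_samples, template_size, seed=0):
    """Return (image_index, top, left) positions, 0-based, of
    template_size x template_size crops, taking num_samples // num_images
    crops per image.

    image_sizes is a hypothetical list of (height, width) pairs, one per
    negative training image.
    """
    rng = random.Random(seed)
    per_image = num_samples // len(image_sizes)  # per-image quota, rounded down
    crops = []
    for idx, (height, width) in enumerate(image_sizes):
        n_left = width - template_size + 1  # number of valid left edges
        n_top = height - template_size + 1  # number of valid top edges
        if n_left <= 0 or n_top <= 0:
            continue  # image is smaller than one template
        # sampling without replacement cannot ask for more than the population
        k = min(per_image, n_left, n_top)
        lefts = rng.sample(range(n_left), k)
        tops = rng.sample(range(n_top), k)
        crops.extend((idx, top, left) for top, left in zip(tops, lefts))
    return crops


def training_labels(num_pos, num_neg):
    """Labels matching features stacked as [positives; negatives]."""
    return [1] * num_pos + [-1] * num_neg


sizes = [(100, 80), (36, 36), (20, 50)]
crops = sample_negative_crops(sizes, num_samples=9, template_size=36)
print(len(crops))             # 3 + 1 + 0 = 4
print(training_labels(2, 3))  # [1, 1, -1, -1, -1]
```

Images smaller than one template are skipped, and the per-image quota is capped so that `sample` never requests more positions than exist.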

Sliding window face detector

For the detector I used vl_hog to convert the entire image into a HOG feature map. Because HOG cell k (1-based) covers image pixels (k - 1) * hog_cell_size + 1 through k * hog_cell_size, I could iterate over the much smaller HOG map while still returning bounding boxes in image coordinates. To perform detections at multiple scales I resized the entire image with imresize before converting it into a HOG map, then divided the box coordinates by the scale factor to map them back to the original image. The main loop for this is shown below.

Sliding window main loop

```matlab
% img, i, test_scenes, w, b and neg_mining are set by the enclosing per-image code
cell_size = feature_params.hog_cell_size;
% number of HOG cells along each side of the detection template
block_size = feature_params.template_size / cell_size;

% detections for the current image, accumulated over all scales
cur_bboxes = zeros(0, 4);
cur_confidences = zeros(0, 1);
cur_image_ids = cell(0, 1);
cur_features = [];

for scale = [.1, .2, .3, .4, .5, .6, .7, .8, .9, 1]
    % scale the image
    scaled = imresize(img, scale);

    % convert the scaled image to HOG space
    hog = vl_hog(single(scaled), cell_size);
    dim = size(hog);

    % slide the template over every valid HOG cell position (inclusive upper bound)
    for y = 1 : dim(1) - block_size + 1
        for x = 1 : dim(2) - block_size + 1
            hog_cell = hog(y : (y + block_size - 1), x : (x + block_size - 1), :);
            confidence = hog_cell(:)' * w + b;

            % cell k spans pixels (k-1)*cell_size+1 .. k*cell_size of the scaled
            % image; dividing by scale maps them back to the original image
            img_x_min = ((x - 1) * cell_size + 1) / scale;
            img_y_min = ((y - 1) * cell_size + 1) / scale;
            img_x_max = (x + block_size - 1) * cell_size / scale;
            img_y_max = (y + block_size - 1) * cell_size / scale;

            % detection keeps confidence > -1; negative mining keeps -1 < confidence < 0
            if (~neg_mining && confidence > -1) || (neg_mining && confidence < 0 && confidence > -1)
                cur_bboxes = [cur_bboxes; img_x_min, img_y_min, img_x_max, img_y_max];
                cur_confidences = [cur_confidences; confidence];
                cur_image_ids = [cur_image_ids; {test_scenes(i).name}];
                cur_features = [cur_features; hog_cell(:)'];
            end
        end
    end
end
```
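The coordinate mapping used in the loop can be checked with a small Python sketch. It assumes, as in the usual HOG layout, that cell k (1-based, as in MATLAB) covers pixels `(k - 1) * cell_size + 1` through `k * cell_size` of the resized image, and that dividing by `scale` maps a pixel back to the original image; `window_to_image_box` is a hypothetical helper name.

```python
def window_to_image_box(x, y, block_size, cell_size, scale):
    """Map a window whose top-left HOG cell is (x, y), 1-based, to
    [x_min, y_min, x_max, y_max] in original-image pixels (1-based)."""
    x_min = ((x - 1) * cell_size + 1) / scale  # first pixel of cell x
    y_min = ((y - 1) * cell_size + 1) / scale  # first pixel of cell y
    x_max = (x + block_size - 1) * cell_size / scale  # last pixel of the last cell
    y_max = (y + block_size - 1) * cell_size / scale
    return [x_min, y_min, x_max, y_max]


# A 6x6-cell window, i.e. 36x36 pixels with 6-pixel cells
print(window_to_image_box(1, 1, 6, 6, 1.0))  # [1.0, 1.0, 36.0, 36.0]
print(window_to_image_box(2, 1, 6, 6, 0.5))  # [14.0, 2.0, 84.0, 72.0]
```

At scale 0.5 the same 36-pixel template spans roughly twice that extent in the original image, which is how the smaller scales pick up larger faces.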

I chose a confidence threshold of -1 for finding likely faces because this value gave me the best balance between a high average precision (about 0.85 to 0.86) and a small number of false positives.
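To make the threshold trade-off concrete, here is a minimal Python sketch of non-interpolated average precision over detections ranked by confidence. It is a simplification: the project's evaluation code may use an interpolated (VOC-style) variant, and matching detections to ground-truth faces is assumed to have been done already.

```python
def average_precision(is_true_positive, num_ground_truth):
    """Non-interpolated AP.

    is_true_positive: detections sorted by descending confidence, True where
    the detection matched a not-yet-matched ground-truth face.
    """
    if num_ground_truth == 0:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, is_tp in enumerate(is_true_positive, start=1):
        if is_tp:
            hits += 1
            precision_sum += hits / rank  # precision at this recall step
    return precision_sum / num_ground_truth


print(average_precision([True, False, True], 2))         # (1/1 + 2/3) / 2, about 0.833
print(average_precision([True, False, True, False], 2))  # same value
print(average_precision([True, False], 2))               # 0.5
```

Appending lower-confidence detections only adds non-negative terms to the sum, so under this definition lowering the threshold cannot reduce AP; it can only add false positives. That is why the threshold is a trade-off between AP and visible false detections rather than a pure AP maximization.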

Hard negative mining

I implemented hard negative mining in two stages. First, I re-ran the detector method to collect likely false positives. Second, I wrote a method that converts the detected bounding boxes back into HOG-space features.
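One way such a conversion could work is to invert the cell-to-pixel mapping and slice the HOG map of the resized image. The Python sketch below assumes boxes were produced with `x_min = ((x - 1) * cell_size + 1) / scale` and `x_max = (x + block_size - 1) * cell_size / scale` (and likewise for y), and represents the HOG map as a nested list `hog[row][col][channel]`; both function names are hypothetical. The flattening order mirrors MATLAB's column-major `hog_cell(:)`, so the feature layout matches the weight vector `w`.

```python
def image_box_to_window(box, cell_size, scale):
    """Invert the cell-to-pixel mapping: return (x, y, block_size), 1-based."""
    x_min, y_min, x_max, _ = box
    x = round((x_min * scale - 1) / cell_size) + 1
    y = round((y_min * scale - 1) / cell_size) + 1
    block_size = round(x_max * scale / cell_size) - x + 1  # windows are square
    return x, y, block_size


def window_features(hog, x, y, block_size):
    """Flatten the window at 1-based (x, y) of hog[row][col][channel] in
    column-major order: row varies fastest, then column, then channel."""
    num_channels = len(hog[0][0])
    return [hog[r][c][ch]
            for ch in range(num_channels)
            for c in range(x - 1, x - 1 + block_size)
            for r in range(y - 1, y - 1 + block_size)]


print(image_box_to_window([14.0, 2.0, 84.0, 72.0], cell_size=6, scale=0.5))  # (2, 1, 6)

# Toy 3x3 HOG map with 2 channels; each value encodes (row, col, channel)
hog = [[[100 * r + 10 * c + ch for ch in range(2)] for c in range(3)] for r in range(3)]
print(window_features(hog, x=2, y=1, block_size=2))
# [10, 110, 20, 120, 11, 111, 21, 121]
```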

To do this, I modified the detector to keep only windows with a confidence between -1 and 0. I chose this range because in testing most of the false positives came from it: with a detection threshold of -1, these are windows the classifier scores as slightly negative but that are still reported as detections. I also disabled non-maximum suppression during negative mining, as suggested in the instructions. Then, to get the negative training data needed to retrain the SVM classifier, I modified the detector method to save and return the HOG cells used to make each bounding box. This let me simply reuse the classifier training code, this time replacing the random negative features with real negative features that were likely false positives.
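The mining filter and the retraining set can be sketched in Python as follows. `mine_hard_negatives` and `build_training_set` are hypothetical names, and each mined window is assumed to arrive as a `(features, confidence)` pair, mirroring the HOG cells and confidences the modified detector returns. The `(-1, 0)` range is the one described above.

```python
def mine_hard_negatives(windows, low=-1.0, high=0.0):
    """Keep the features of windows whose confidence lies strictly between low and high.

    windows: hypothetical list of (features, confidence) pairs collected with
    non-maximum suppression disabled, so overlapping windows all count.
    """
    return [features for features, confidence in windows if low < confidence < high]


def build_training_set(pos_features, neg_features):
    """Stack positives then negatives, with labels 1 and -1, for an SVM solver."""
    X = pos_features + neg_features
    y = [1] * len(pos_features) + [-1] * len(neg_features)
    return X, y


windows = [([0.1], 0.5), ([0.2], -0.5), ([0.3], -1.5), ([0.4], -0.01)]
hard = mine_hard_negatives(windows)
print(hard)  # [[0.2], [0.4]]
X, y = build_training_set([[1.0], [0.9]], hard)
print(y)     # [1, 1, -1, -1]
```

The window scoring 0.5 in the example is dropped even though, coming from a face-free image, it would be a confident false positive; the range above keeps only windows just inside the -1 detection threshold, and raising `high` is one possible variation.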

Results in a table


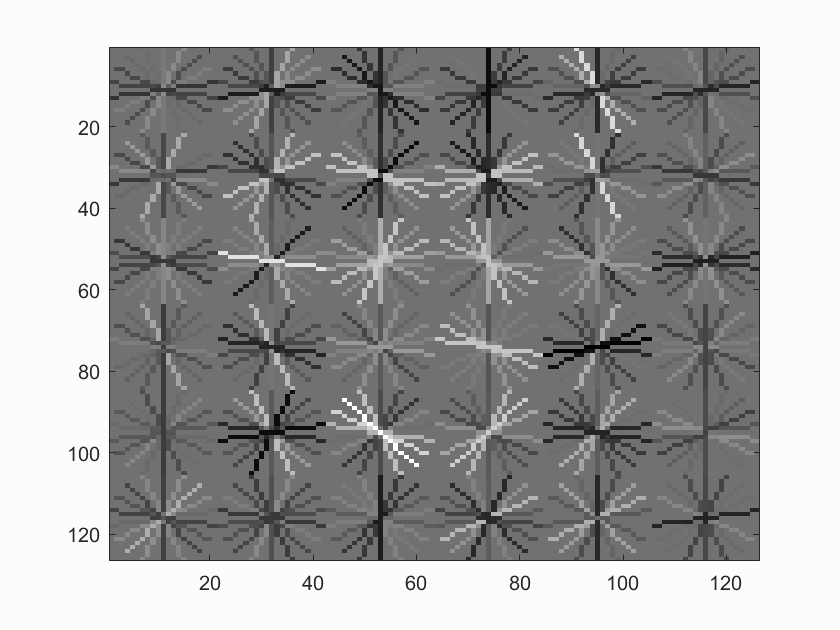

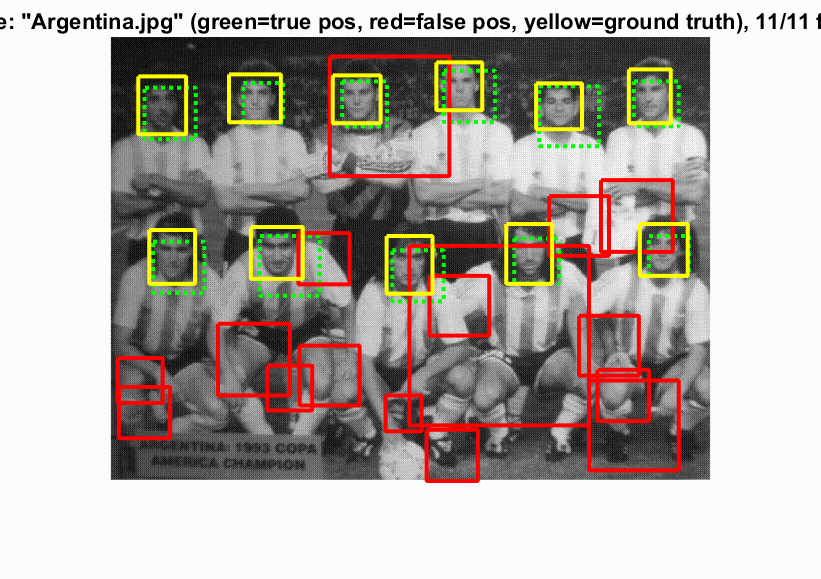

As shown in the table above, although hard negative mining did not improve the overall average precision much, it greatly reduced the number of false positives in the actual face detections.

Precision-recall curve for the starter code.

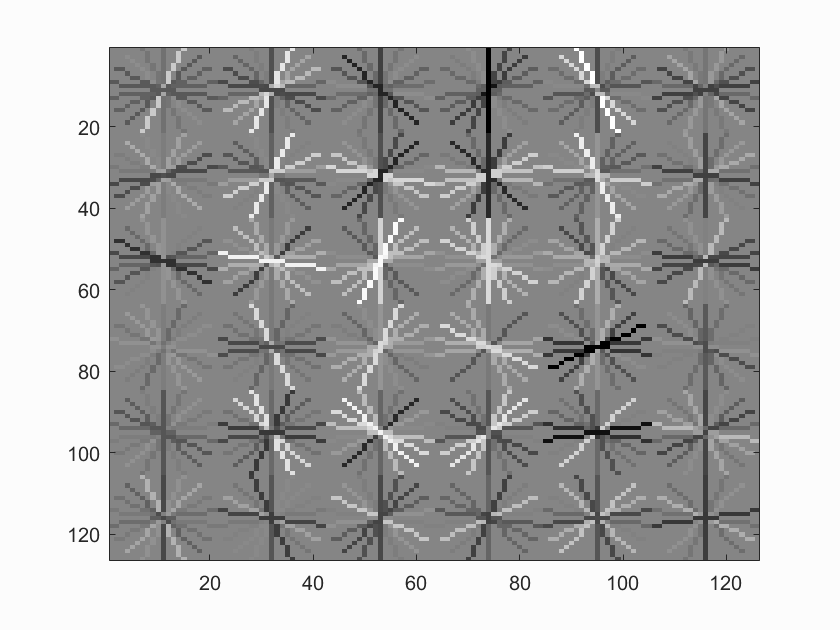

Example of detection on the test set from the starter code.

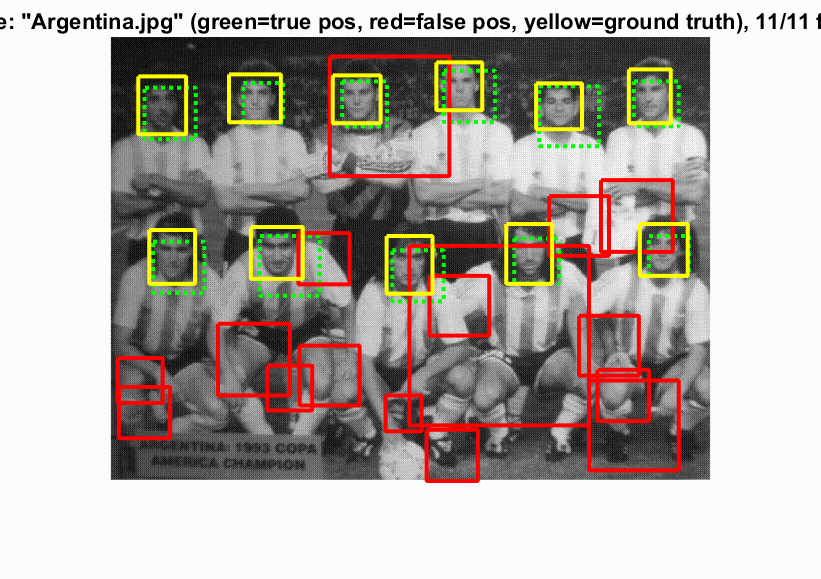

Shown above are full-size versions of the results from the face detector with hard negative mining.