Project 5 / Face Detection with a Sliding Window

In this project I implemented a sliding-window face detector following Dalal and Triggs. I have

- implemented the baseline pipeline,

- and augmented the positive training examples.

First, note that I sample negative training examples from randomly selected images at random scales: each image is resized by a factor drawn uniformly between 0 and 1, rather than from a list of predefined scaling factors, before a 36x36 patch is cropped from it. Furthermore, I initially used 100,000 negative training examples, which

- used up a lot of memory at a HOG cell size of 3 pixels

- and actually decreased performance.
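The random-scale negative sampling described above can be sketched as follows. This is a minimal Python/NumPy sketch under stated assumptions, not the project's MATLAB code: the images are 2-D grayscale arrays, `resize_nearest` is a hypothetical nearest-neighbor stand-in for `imresize`, and the lower end of the uniform scale range is clamped (my assumption) to the smallest factor at which a 36x36 patch still fits, since a factor near 0 would leave nothing to crop.

```python
import numpy as np

TEMPLATE_SIZE = 36  # template side length in pixels


def resize_nearest(img, scale):
    """Nearest-neighbor resize of a 2-D grayscale image (hypothetical stand-in for imresize)."""
    h, w = img.shape
    new_h = max(1, int(round(h * scale)))
    new_w = max(1, int(round(w * scale)))
    rows = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    return img[np.ix_(rows, cols)]


def sample_negative_patches(images, num_samples, rng=None):
    """Crop num_samples random 36x36 patches from random images at random scales."""
    rng = np.random.default_rng() if rng is None else rng
    # Only images at least as large as the template can yield a patch.
    usable = [img for img in images if min(img.shape) >= TEMPLATE_SIZE]
    if not usable:
        raise ValueError("no image is large enough for a 36x36 patch")
    patches = []
    while len(patches) < num_samples:
        img = usable[rng.integers(len(usable))]
        # Assumption: clamp the lower end of the uniform range so the template still fits.
        min_scale = TEMPLATE_SIZE / min(img.shape)
        scaled = resize_nearest(img, rng.uniform(min_scale, 1.0))
        h, w = scaled.shape
        if h < TEMPLATE_SIZE or w < TEMPLATE_SIZE:
            continue  # rounding made the image a pixel too small; draw again
        y = rng.integers(h - TEMPLATE_SIZE + 1)
        x = rng.integers(w - TEMPLATE_SIZE + 1)
        patches.append(scaled[y:y + TEMPLATE_SIZE, x:x + TEMPLATE_SIZE])
    return np.stack(patches)
```

Drawing a continuous scale per patch means no two negatives need to share a scale, which is the point of avoiding a fixed list of factors.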

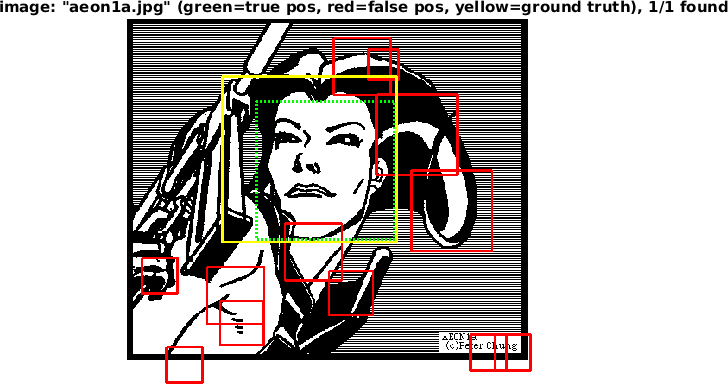

Face that is detected with 50,000 negative examples but not with 100,000.
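To put the memory cost in numbers, here is a back-of-the-envelope estimate for the HOG features alone. It assumes 31 dimensions per HOG cell (the default UoCTTI variant of VLFeat's `vl_hog`) and single-precision storage at 4 bytes per value; neither detail is stated in the text, and the SVM solver may need additional copies of the data.

$$
\begin{aligned}
\text{cells per example} &= \left(\tfrac{36}{3}\right)^2 = 144 \\
\text{features per example} &= 144 \times 31 = 4464 \\
100{,}000 \times 4464 \times 4\ \text{bytes} &\approx 1.79\ \text{GB} \\
50{,}000 \times 4464 \times 4\ \text{bytes} &\approx 0.89\ \text{GB}
\end{aligned}
$$

For comparison, a 6-pixel cell gives $(36/6)^2 = 36$ cells per example, a quarter of the features.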

Next, I train a linear SVM classifier with vl_svmtrain using lambda = 0.001. This is the same value I used in Project 4, and it worked right off the bat, so I did not experiment with it.
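For readers without VLFeat, the objective that `vl_svmtrain` minimizes, lambda/2 times the squared norm of w plus the mean hinge loss, can be sketched with plain full-batch subgradient descent. This is a stand-in under stated assumptions, not VLFeat's solver: the learning rate and epoch count are arbitrary choices of mine, and this sketch leaves the bias unregularized, which may differ slightly from how `vl_svmtrain` treats it.

```python
import numpy as np


def train_linear_svm(X, y, lam=1e-3, epochs=500, lr=0.1):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (X @ w + b))) by subgradient descent.

    X: (N, D) feature matrix (e.g. flattened HOG templates); y: (N,) labels in {-1, +1}.
    The bias b is not regularized in this sketch.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        margins = y * (X @ w + b)
        active = margins < 1  # examples inside the margin or misclassified
        grad_w = lam * w - (y[active][:, None] * X[active]).sum(axis=0) / n
        grad_b = -y[active].sum() / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def svm_objective(X, y, w, b, lam=1e-3):
    """Regularized hinge-loss objective; confidences are X @ w + b."""
    hinge = np.maximum(0.0, 1.0 - y * (X @ w + b))
    return 0.5 * lam * w @ w + hinge.mean()
```

The detector's confidence for a window is then simply its feature vector dotted with `w`, plus `b`.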

Finally, the detector is run on all test images. I search each image at 7 uniformly spaced scaling factors: the image is resized to 100%, 85%, and so forth, until it has shrunk to the size of the template. At that smallest scale, the detector can find a single close-up face that fills the whole image.
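The multi-scale search can be sketched in two parts. First, one reading of "uniformly spaced" is a linear spacing from 1.0 down to the factor at which the shorter image side equals the 36-pixel template; with that reading, the 100%, 85%, ... sequence corresponds exactly to an image whose shorter side is 360 pixels. Second, each resized image's HOG cell grid is scored window by window with the linear classifier. Both functions are hypothetical sketches: the HOG feature map is assumed to be precomputed (e.g. by `vl_hog` in the original pipeline), and the weight vector is assumed to be reshaped to the template's 12x12 cell layout.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

TEMPLATE_SIZE = 36  # pixels
CELL_SIZE = 3       # pixels per HOG cell
NUM_SCALES = 7


def pyramid_scales(image_shape, num_scales=NUM_SCALES, template_size=TEMPLATE_SIZE):
    """Linearly spaced scales from 1.0 down to where the shorter side equals the template."""
    smallest = template_size / min(image_shape[:2])
    return np.linspace(1.0, smallest, num_scales)


def score_windows(feature_map, w, b, scale, cell_size=CELL_SIZE, threshold=0.0):
    """Score every template-sized window of a HOG cell grid with a linear classifier.

    feature_map: (Hc, Wc, D) HOG cells of the image resized by `scale`.
    w: (T, T, D) classifier weights in the template's cell layout; b: bias.
    Returns boxes (x_min, y_min, x_max, y_max) in original-image pixels and confidences.
    """
    t = w.shape[0]
    if feature_map.shape[0] < t or feature_map.shape[1] < t:
        return np.empty((0, 4)), np.empty(0)
    # windows has shape (Hc - t + 1, Wc - t + 1, D, t, t)
    windows = sliding_window_view(feature_map, (t, t), axis=(0, 1))
    scores = np.einsum("ijdrc,rcd->ij", windows, w) + b
    rows, cols = np.nonzero(scores > threshold)
    # Map cell indices back to pixel coordinates in the unscaled image.
    px = cell_size / scale
    boxes = np.stack([cols * px, rows * px, (cols + t) * px, (rows + t) * px], axis=1)
    return boxes, scores[rows, cols]
```

Running `score_windows` once per entry of `pyramid_scales` and concatenating the results gives the detections for one image.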

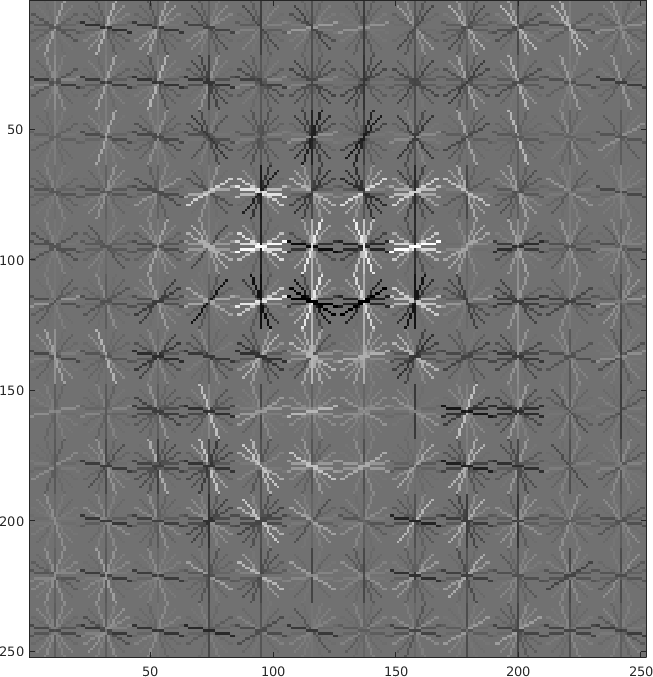

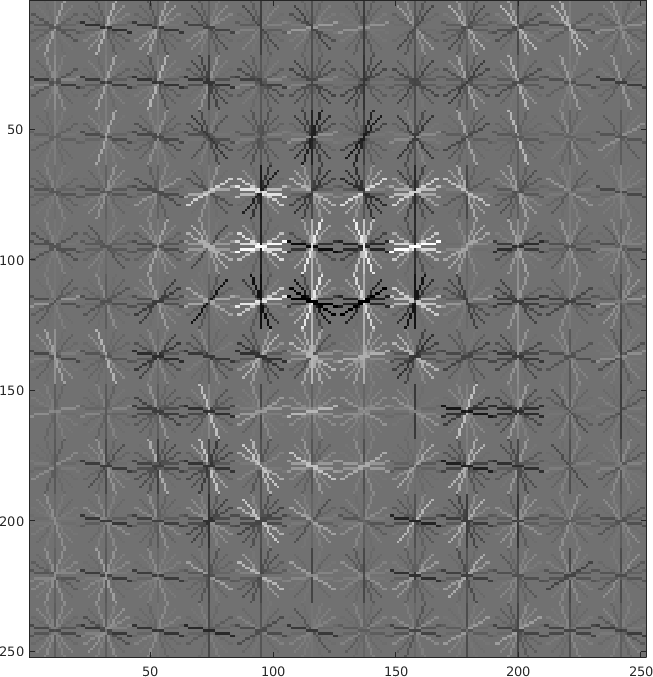

Visualization of the learned linear classifier in HOG space. I think I can see a face in there but that might just be my human vision hallucinating things.

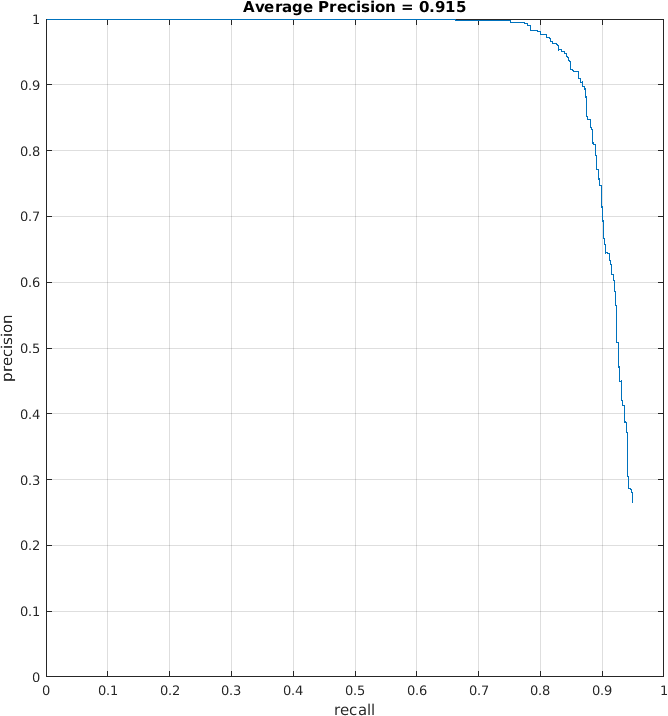

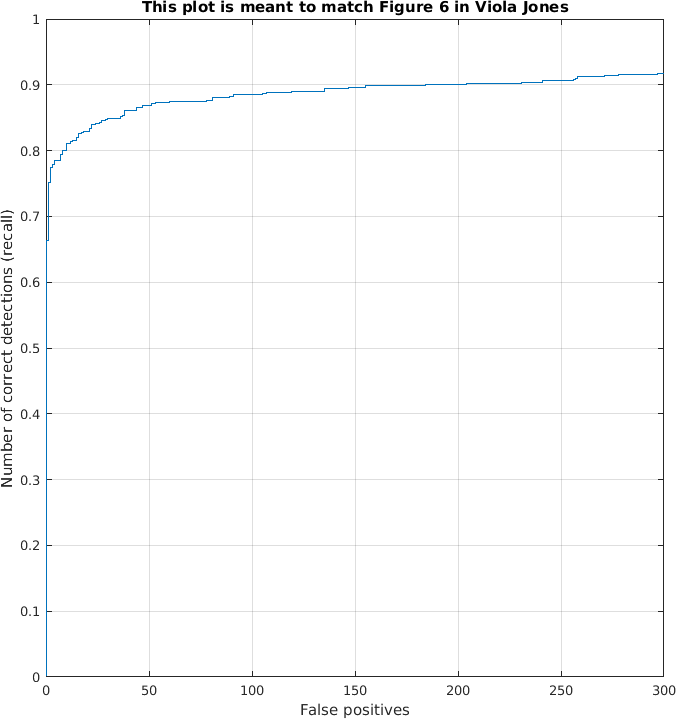

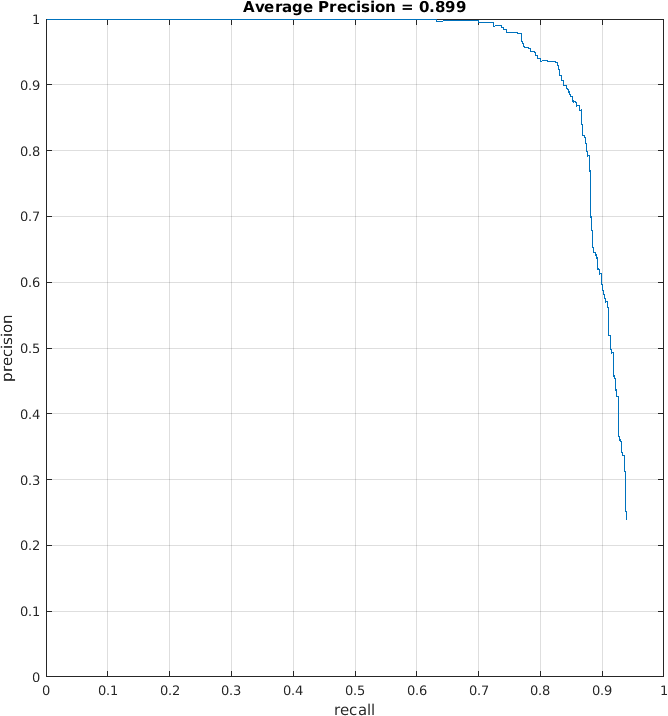

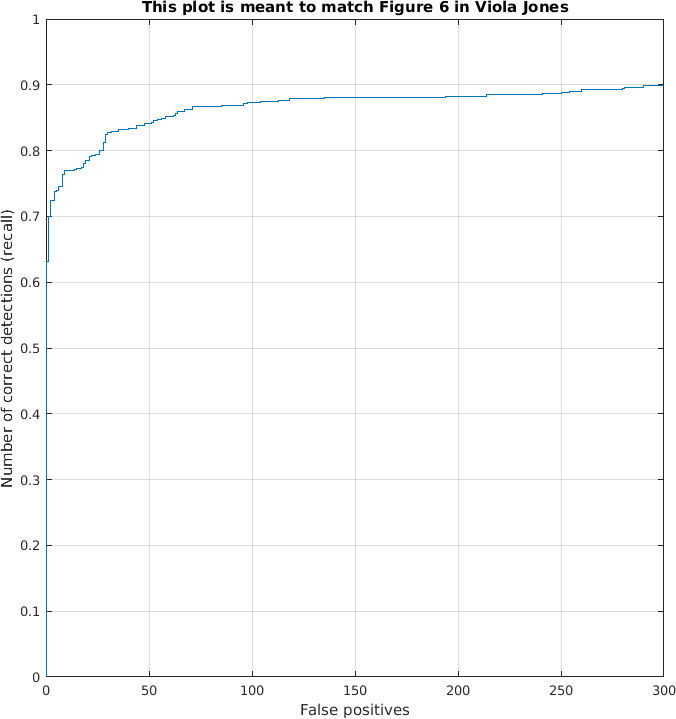

The precision-recall curve shows that 75% of the faces can be detected without a single false positive, i.e., precision stays at 100% up to a recall of 75%.
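A precision-recall curve like this one comes from ranking all detections by confidence and accumulating true and false positives. The sketch below assumes each detection has already been matched against the ground truth (the boolean `is_true_positive`); it computes non-interpolated average precision, which is one common definition and may differ from the project's evaluation code, plus the recall reached before the first false positive.

```python
import numpy as np


def summarize_detections(confidences, is_true_positive, num_ground_truth):
    """Return (precision, recall, average_precision, recall_at_full_precision)."""
    order = np.argsort(-np.asarray(confidences, dtype=float), kind="stable")
    tp = np.asarray(is_true_positive, dtype=bool)[order]
    if tp.size == 0:
        return np.empty(0), np.empty(0), 0.0, 0.0
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(~tp)
    precision = cum_tp / (cum_tp + cum_fp)
    recall = cum_tp / num_ground_truth
    # Non-interpolated AP: precision summed at each true positive, divided by
    # the total number of faces (faces never detected contribute 0).
    ap = precision[tp].sum() / num_ground_truth
    # Recall reached while precision is still 100%, i.e. before the first false positive.
    fp_idx = np.flatnonzero(~tp)
    first_fp = fp_idx[0] if fp_idx.size else tp.size
    recall_full = cum_tp[first_fp - 1] / num_ground_truth if first_fp > 0 else 0.0
    return precision, recall, ap, recall_full
```

For example, five detections ranked TP, TP, TP, FP, TP against 4 faces give a recall of 0.75 at full precision and an AP of (1 + 1 + 1 + 0.8) / 4 = 0.95.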

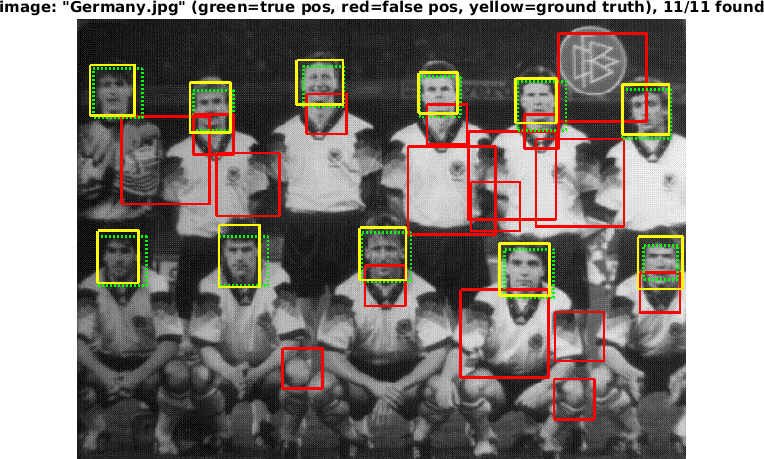

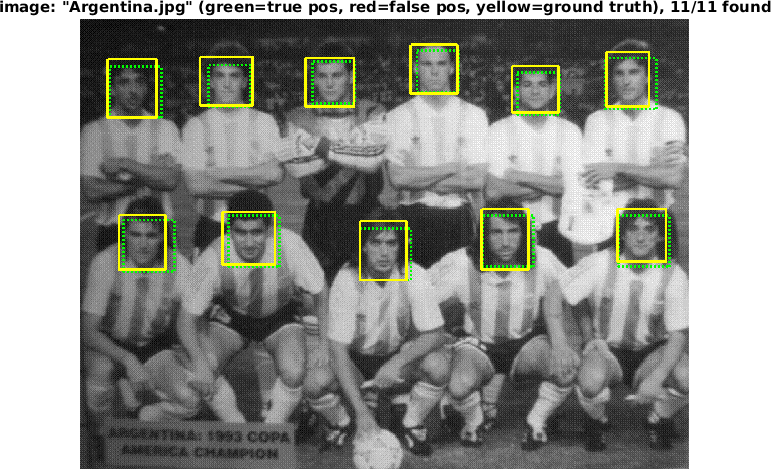

The detector finds all 11 faces in this image.

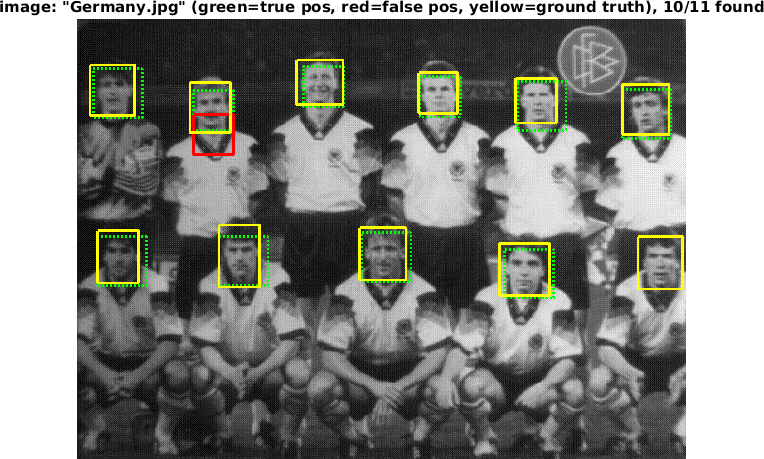

The shape of the precision-recall curve suggests that many of the false positives in the photo of the German soccer team should be easy to filter out by thresholding more aggressively. Indeed, most non-faces produce slightly positive confidences, while true faces have confidences above 1.0. The following pictures show the detections at a threshold of +0.9. All false positives but one are eliminated, at the cost of one true positive (Germany, lower right).

Thresholding with +0.9 eliminates almost all false positives.
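Raising the threshold amounts to discarding every detection whose confidence does not exceed +0.9. A minimal sketch, assuming detections are stored as an (N, 4) box array with a matching confidence vector; whether the cutoff is strict (`>`) or inclusive (`>=`) is my assumption.

```python
import numpy as np


def threshold_detections(boxes, confidences, threshold=0.9):
    """Keep only detections whose confidence exceeds the threshold."""
    confidences = np.asarray(confidences, dtype=float)
    keep = confidences > threshold
    return np.asarray(boxes)[keep], confidences[keep], keep
```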

Extra Credit: Augmenting the positive examples

I tried to improve the performance of the face detector by augmenting the positive training examples. To this end, I mirrored the training examples and rotated them by 5 degrees in either direction. The idea is to extract more information from the images by teaching the detector that a face is still a face when it is mirrored or slightly rotated. However, this actually hurt performance, as the following precision-recall curve shows. First, the average precision dropped from 91.5% to 89.9%, and second, the curve became less smooth. So the extra training data actually made it harder to tell faces and non-faces apart.
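The augmentation can be sketched as below, under several assumptions of mine: each face crop is a 2-D grayscale array, only the original (not the mirrored copy) is rotated, and rotation uses nearest-neighbor sampling about the crop center with edge pixels replicated. The fill mode matters: rotating fills the corners of the crop with pixels that were not part of the original, and a constant fill would add straight edges along the border of every rotated example.

```python
import numpy as np


def rotate_nearest(img, angle_deg):
    """Rotate a 2-D image about its center (nearest neighbor, edge pixels replicated)."""
    h, w = img.shape
    theta = np.deg2rad(angle_deg)
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0
    yy, xx = np.mgrid[0:h, 0:w]
    y0, x0 = yy - cy, xx - cx
    # Inverse mapping: for each output pixel, look up its source pixel.
    src_x = np.cos(theta) * x0 + np.sin(theta) * y0 + cx
    src_y = -np.sin(theta) * x0 + np.cos(theta) * y0 + cy
    src_x = np.clip(np.rint(src_x), 0, w - 1).astype(int)
    src_y = np.clip(np.rint(src_y), 0, h - 1).astype(int)
    return img[src_y, src_x]


def augment_positives(faces, angles=(-5.0, 5.0)):
    """Return each face, its horizontal mirror, and copies rotated by each angle."""
    out = []
    for face in faces:
        out.append(face)
        out.append(face[:, ::-1])  # horizontal mirror
        for angle in angles:
            out.append(rotate_nearest(face, angle))
    return np.stack(out)
```

With these choices, the positive set grows fourfold; rotating the mirrored copies as well would make it sixfold.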

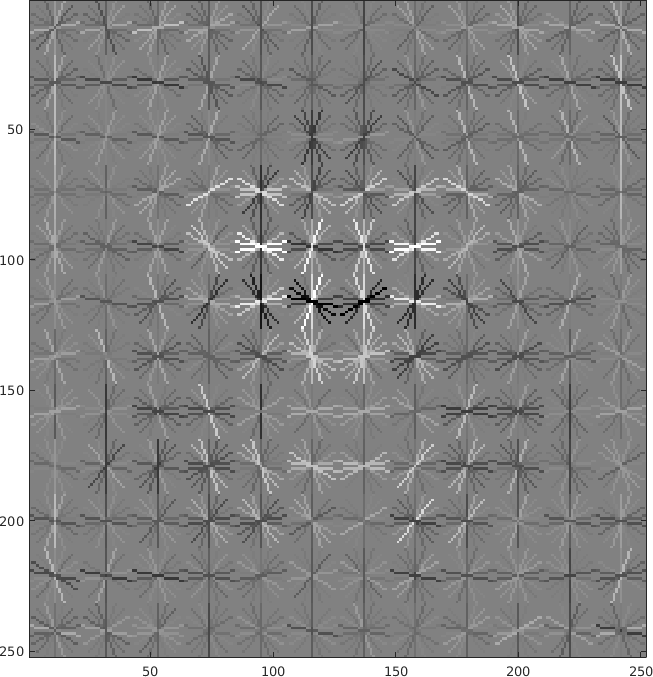

The following figure compares the detector learned from the augmented training data with the baseline detector. Overall, the weights away from the center of the face have smaller magnitudes, and the detector has learned unexpected straight-edge features at the borders. I suspect these are artifacts of the rotation. However, I also trained a model with only mirrored training examples, and its average precision was even worse (87.7%).

Visualizations of the baseline detector (left) and the detector with augmented training data (right).

The class photo

For the class photo I ran the baseline pipeline with a threshold of +0.9. In the easy case it detects all but 5 of the faces in the foreground, and even some faces from last year's class photo shown on the screen. The number of false positives is similarly small. The hard case was, well, harder: the detector even missed a full-frontal face (the same person it had already missed in the easy case).