Project 5 / Face Detection with a Sliding Window

In this project we aim to detect faces in images by implementing the pipeline described in the paper "Histograms of Oriented Gradients for Human Detection" by Navneet Dalal and Bill Triggs. We train a linear SVM classifier on HoG features extracted from positive examples (faces from the Caltech Web Faces dataset) and from negative examples cropped from pictures that contain no humans. We have done the following work for this project:

- Implemented a module to extract HoG features from positive examples.

- Implemented a module to extract HoG features from negative examples.

- Trained a linear SVM classifier using our extracted feature vectors.

- Created a multi-scale, sliding window object detector.

- Found, pre-processed and used new positive training data from the Essex faces dataset.
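Every step in the list above relies on HoG features. The write-up does not show how HoG itself is computed, so here is a rough illustration only: a simplified Python sketch of the core idea, which builds per-cell histograms of gradient orientation weighted by gradient magnitude. The function name `hog_cell_map`, the 6-pixel cell size and the 9 orientation bins are assumptions for the example. It also skips the overlapping block normalization and the bin/cell interpolation of the full Dalal-Triggs descriptor and uses a simple per-cell L2 normalization instead.

```python
import math

def hog_cell_map(img, cell_size=6, num_bins=9):
    """Simplified HoG: per-cell histograms of unsigned gradient orientation.

    img: 2-D list of grayscale intensities (H x W).
    Returns an (H // cell_size) x (W // cell_size) x num_bins nested list.
    Assumed simplification: no block normalization, no bin/cell interpolation.
    """
    h, w = len(img), len(img[0])
    rows, cols = h // cell_size, w // cell_size
    hist = [[[0.0] * num_bins for _ in range(cols)] for _ in range(rows)]
    bin_width = 180.0 / num_bins
    for y in range(rows * cell_size):
        for x in range(cols * cell_size):
            # Centered [-1, 0, 1] derivatives, clamped at the image border
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            mag = math.hypot(gx, gy)
            if mag == 0:
                continue
            # Unsigned orientation in [0, 180) degrees, then its histogram bin
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = int(angle // bin_width) % num_bins
            hist[y // cell_size][x // cell_size][b] += mag
    # L2-normalize each cell (a stand-in for Dalal-Triggs block normalization)
    for row in hist:
        for cell in row:
            norm = math.sqrt(sum(v * v for v in cell)) + 1e-6
            for k in range(num_bins):
                cell[k] /= norm
    return hist

# Usage: a 12x12 image with a vertical edge gives horizontal gradients (bin 0)
edge = [[0 if x < 6 else 100 for x in range(12)] for y in range(12)]
vmap = hog_cell_map(edge)
```

With these assumed parameters, a 36x36 face crop produces a 6x6x9 map, that is, a 324-dimensional feature vector once flattened.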

To make it easier to test the different modules of the pipeline, I saved the extracted features to disk and added a way to load them, so that I did not have to recompute them every time I wanted to test the later steps of the algorithm. This saved a lot of time, since bugs are inevitable when building a CV system.
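The original caching code (written in MATLAB) is not shown. Below is a minimal Python sketch of the same idea, assuming the features can be pickled. `load_or_compute`, `extract_positive_features` and the cache file name are hypothetical.

```python
import os
import pickle
import tempfile

def load_or_compute(cache_path, compute_fn):
    """Load features from cache_path if it exists; otherwise compute, save and return them."""
    if os.path.exists(cache_path):
        with open(cache_path, "rb") as f:
            return pickle.load(f)
    features = compute_fn()
    with open(cache_path, "wb") as f:
        pickle.dump(features, f)
    return features

# Usage: the (hypothetical) extractor only runs on the first call
calls = []
def extract_positive_features():
    calls.append(1)                      # count how often extraction actually runs
    return [[0.1, 0.2], [0.3, 0.4]]      # placeholder feature vectors

cache = os.path.join(tempfile.mkdtemp(), "pos_features.pkl")
first = load_or_compute(cache, extract_positive_features)
second = load_or_compute(cache, extract_positive_features)  # served from the cache
```

Deleting the cache file forces recomputation. This is necessary whenever the feature parameters (for example the HoG cell size) change.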

One problem I had while implementing the algorithm was that, because I had not read an explanation in the detector script, I was computing HoG features on individual image patches instead of computing the HoG features of the entire image once and extracting patches from the resulting HoG map. This gave poor average precision, especially at different scales. One likely reason is that computing HoG on a cropped patch loses the influence that the surrounding image has on the gradients at the patch border. As expected, the pipeline also ran very slowly.
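The corrected approach computes the HoG map once per image and reads each window directly out of that map. The sketch below illustrates this in Python under several assumptions: the map is an H x W x D nested list with one descriptor per cell, the SVM weights `w` are flattened row-major over the window's cells, and a window is kept when its score exceeds `threshold`. The function name is hypothetical. For the 36x36 template used here, an assumed 6-pixel cell size would give `win_cells = 6`.

```python
def sliding_window_scores(hog_map, w, b, win_cells, threshold):
    """Score every win_cells x win_cells window of a precomputed HoG cell map.

    hog_map: H x W x D nested lists (one D-dim descriptor per cell).
    w: flattened linear-SVM weights of length win_cells * win_cells * D; b: bias.
    Returns (row, col, score) for windows scoring above threshold, where
    (row, col) is the window's top-left cell.
    """
    rows, cols = len(hog_map), len(hog_map[0])
    detections = []
    for r in range(rows - win_cells + 1):
        for c in range(cols - win_cells + 1):
            # Flatten the window's cells in row-major order to match w's layout
            feat = [v for rr in range(r, r + win_cells)
                    for cc in range(c, c + win_cells)
                    for v in hog_map[rr][cc]]
            score = sum(wi * fi for wi, fi in zip(w, feat)) + b
            if score > threshold:
                detections.append((r, c, score))
    return detections

# Usage: a 4x4-cell map with 1-D "descriptors" and a single strong cell at (2, 2)
toy_map = [[[1.0] if (r, c) == (2, 2) else [0.0] for c in range(4)] for r in range(4)]
dets = sliding_window_scores(toy_map, w=[1.0] * 4, b=0.0, win_cells=2, threshold=0.5)
```

Because neighbouring windows share cells, computing HoG once per image avoids recomputing gradients for every overlapping patch. It also means that cells on a window's border still see their true neighbourhood.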

To detect faces at different scales, for each scale I resized the image, computed the HoG feature map of the resized image, and then slid the detection window over that map.
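The multi-scale loop can be sketched as follows. By default the sketch uses the 12 scales and the 0.9 scale factor used for the reported results. The 6-pixel cell size, the `multiscale_detect` name and the `detect_at_scale` callback (assumed to resize the image, compute its HoG map once and run the sliding window) are hypothetical. The main point is the coordinate mapping: a window found at scale `s` is divided by `s` to obtain its box in the original image.

```python
def multiscale_detect(image_h, image_w, detect_at_scale, cell_size=6, win_cells=6,
                      num_scales=12, scale_factor=0.9):
    """Run a single-scale detector on progressively smaller copies of an image.

    detect_at_scale(scale) is assumed to return (row, col, score) tuples in
    HoG-cell coordinates of the image resized by `scale`.
    Returns (x_min, y_min, x_max, y_max, score) boxes in original-image pixels.
    """
    win_px = cell_size * win_cells          # e.g. 36 px with the assumed values
    boxes = []
    scale = 1.0
    for _ in range(num_scales):
        # Stop once the resized image can no longer hold a single window
        if image_h * scale < win_px or image_w * scale < win_px:
            break
        for row, col, score in detect_at_scale(scale):
            # Cell coords -> pixels in the resized image -> pixels in the original
            x_min = col * cell_size / scale
            y_min = row * cell_size / scale
            boxes.append((x_min, y_min, x_min + win_px / scale,
                          y_min + win_px / scale, score))
        scale *= scale_factor
    return boxes

# Usage with a stand-in detector that fires once, at full resolution only
seen_scales = []
def fake_detect_at_scale(scale):
    seen_scales.append(scale)
    return [(1, 2, 0.5)] if scale == 1.0 else []

boxes = multiscale_detect(400, 400, fake_detect_at_scale)
```

With a factor of 0.9, the twelfth scale is 0.9^11 ≈ 0.31. A 36-pixel window at that scale therefore covers roughly a 115-pixel face in the original image.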

Accuracy for different values of the SVM regularization parameter lambda:

| lambda | accuracy |
|---|---|
| 1 | 0.489 |
| 0.1 | 0.708 |
| 0.01 | 0.770 |
| 0.001 | 0.778 |
| 0.0001 | 0.769 |
| 0.00001 | 0.760 |
| 0.000001 | 0.760 |
| 0.0000001 | 0.754 |
| 0.00000001 | 0.755 |
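The table shows that heavy regularization (lambda = 1) hurts badly. To make the role of lambda concrete, here is a sketch that assumes lambda weights the ||w||^2 term of the standard hinge-loss SVM objective. The write-up does not say which solver was used, so this sketch swaps in the Pegasos stochastic sub-gradient method. It appends a constant feature as a bias, which means the bias is also regularized (a simplification). `train_linear_svm` and `predict` are hypothetical names, and the toy data is invented for illustration.

```python
import random

def train_linear_svm(X, y, lam, num_iters=2000, seed=0):
    """Pegasos-style SGD for a linear SVM.

    Minimizes lam/2 * ||w||^2 + mean_i max(0, 1 - y_i * (w . x_i)),
    with a constant 1 appended to each x so the last weight acts as a bias.
    """
    rng = random.Random(seed)
    data = [list(x) + [1.0] for x in X]
    w = [0.0] * len(data[0])
    for t in range(1, num_iters + 1):
        i = rng.randrange(len(data))
        eta = 1.0 / (lam * t)                        # Pegasos step size
        margin = y[i] * sum(wj * xj for wj, xj in zip(w, data[i]))
        # Gradient step on the regularizer: a larger lam shrinks w harder
        w = [(1 - eta * lam) * wj for wj in w]
        # Hinge-loss sub-gradient only when the margin is violated
        if margin < 1:
            w = [wj + eta * y[i] * xj for wj, xj in zip(w, data[i])]
    return w[:-1], w[-1]

def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b > 0 else -1

# Usage on a tiny separable toy set, for two regularization strengths
X = [(2, 2), (3, 1), (2, 3), (-2, -2), (-3, -1), (-1, -3)]
y = [1, 1, 1, -1, -1, -1]
models = {lam: train_linear_svm(X, y, lam) for lam in (1.0, 0.01)}
```

On easy toy data, both settings separate the points. On real HoG features, too large a lambda forces a small-norm w that underfits, which is consistent with the low accuracy at lambda = 1 in the table.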

I compared the accuracy obtained with different positive training sets: Caltech Web Faces, the Essex Faces dataset, and the two combined. The Essex faces were downloaded from http://cswww.essex.ac.uk/mv/allfaces/index.html. The subjects are mainly first-year undergraduate students aged 18 to 20, although some older individuals are also present, and both male and female subjects are included. The dataset contains 7900 images in total, stored as 24-bit color JPEGs. The images are rectangular (180x200 pixels), so they had to be cropped and resized before use.

I used the following bash script to copy the images into a single folder, crop them around the center and resize them. The conversion to grayscale is done in the MATLAB training script. I also had to manually delete some corrupted files and discard the files that were not in JPEG format. The deleted files were some of 9540636.*.jpg and some of 9540741.*.jpg, where * can be any number from 1 to 20. I ended up with 7450 images in a 5.7 MB folder, which is included in this submission under "/data/essex_faces".

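```bash
# Copy every JPEG into one flat folder (skipping the target folder itself)
mkdir -p essex_faces
find . -path ./essex_faces -prune -o -iname '*.jpg' -print0 | xargs -0 cp -t essex_faces/
cd essex_faces
# Center-crop the 180x200 images to 180x180, then force-resize to 36x36
mogrify -gravity center -crop 180x180+0+0 +repage *.jpg
mogrify -resize 36x36\! *.jpg
```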

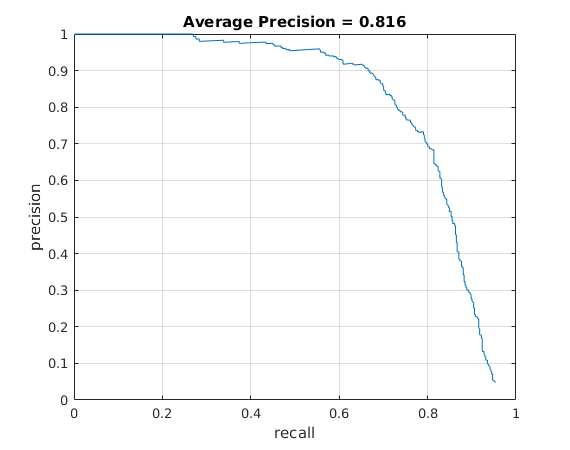

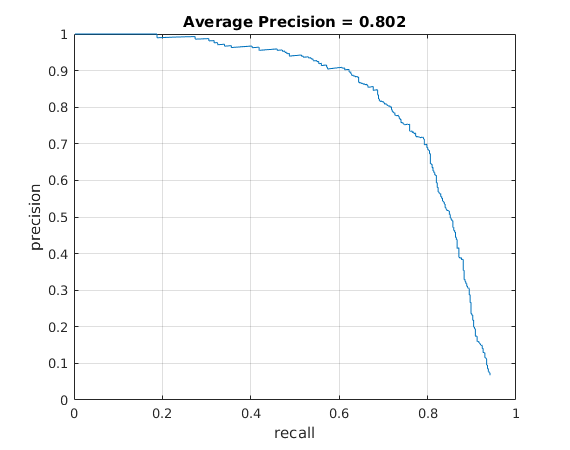

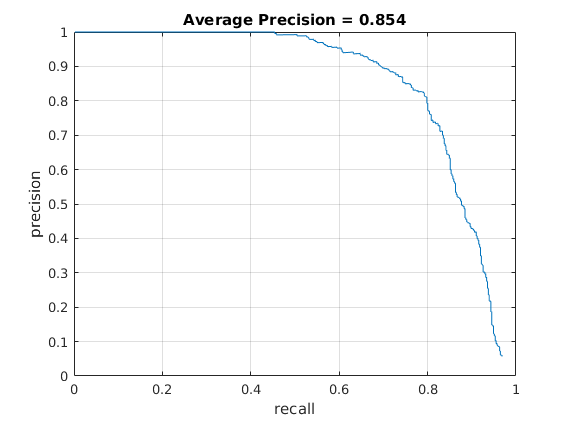

The table below lists the accuracy obtained with each positive training set, using lambda = 0.0001, threshold = -0.7, 12 scales and a scale factor of 0.9. Performance is comparable between Caltech and Essex, with the Caltech data giving slightly better results. Combining the two datasets performs worse than using the Caltech data alone. More data is not always better, especially when there is already enough high-quality data to begin with, as appears to be the case here.

| dataset | accuracy |
|---|---|
| Caltech | 0.816 |
| Essex | 0.799 |
| Combined | 0.802 |
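The detectors are also compared through precision-recall curves. The sketch below shows how such a curve and a single average-precision number can be computed from scored detections. It assumes that each detection has already been labelled as a true or false positive (for example, by an overlap test against the ground-truth faces). It uses the simple non-interpolated definition of AP (precision summed over each increase in recall). The evaluation code behind the reported numbers may interpolate precision differently. The function name is hypothetical.

```python
def precision_recall_ap(scores, is_true_positive, num_ground_truth):
    """Precision/recall curve and non-interpolated average precision."""
    if num_ground_truth <= 0:
        raise ValueError("need at least one ground-truth face")
    # Walk through detections from most to least confident
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    tp = fp = 0
    precision, recall = [], []
    for i in order:
        if is_true_positive[i]:
            tp += 1
        else:
            fp += 1
        precision.append(tp / (tp + fp))
        recall.append(tp / num_ground_truth)
    # AP: precision weighted by each step in recall (area under the PR curve)
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precision, recall):
        ap += p * (r - prev_recall)
        prev_recall = r
    return precision, recall, ap

# Usage: four ranked detections, two real faces in the image
prec, rec, ap = precision_recall_ap(
    scores=[0.9, 0.8, 0.7, 0.6],
    is_true_positive=[True, False, True, False],
    num_ground_truth=2,
)
```

Here, AP = 1.0 × 0.5 + (2/3) × 0.5 ≈ 0.83. Faces that are never detected keep recall below 1 and so cap the achievable AP.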

Caltech precision-recall curve.

Essex precision-recall curve.

Combined dataset precision-recall curve.

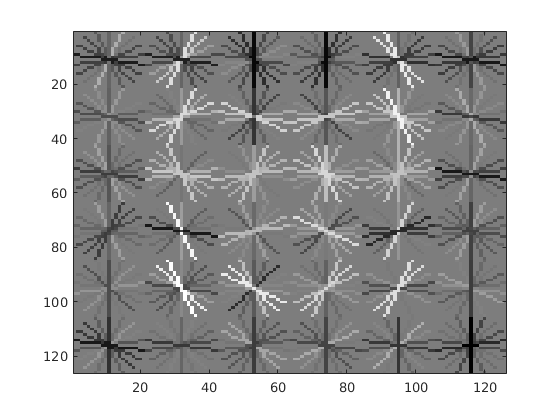

Face template HoG visualization for the best-performing detector.

Precision-recall curve for the best-performing detector.

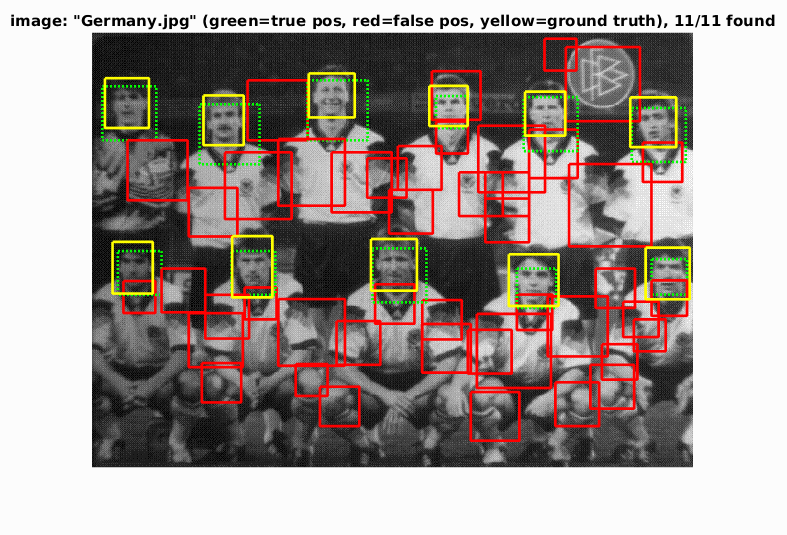

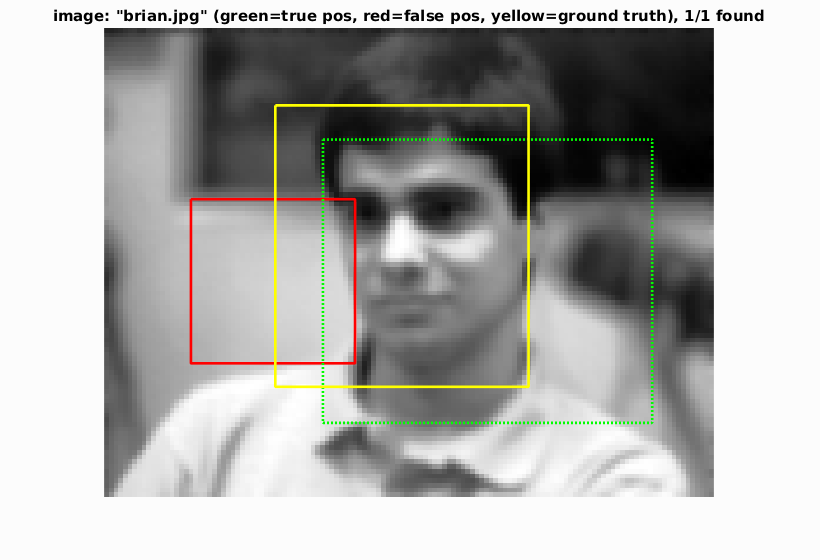

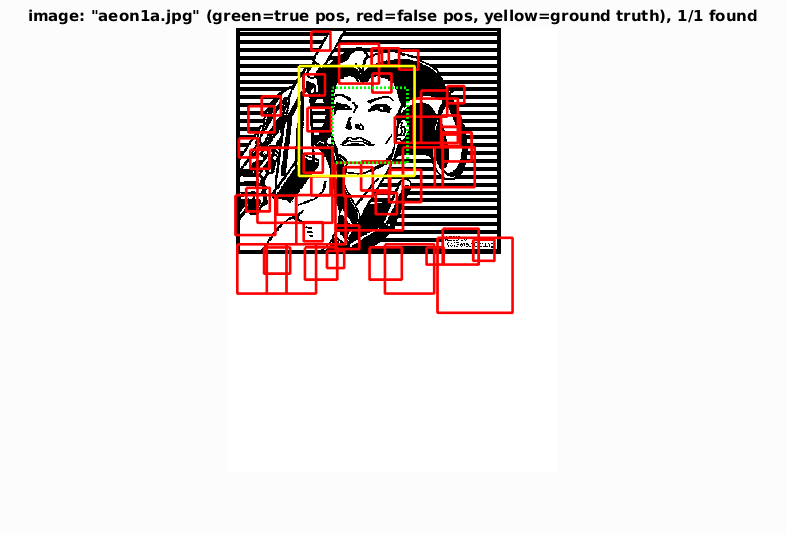

Example of detection on the test set from the starter code.

As you can see, the detector finds not only real human faces but also drawings of human faces, and it does a very good job of finding faces at different scales. It also detects faces that are very blurry. All in all, it is a fairly accurate detector, and this method shows the power of using good features for the task at hand. HoG is an excellent non-deep-learning approach to face detection and can run in real time.