Project 5 / Face Detection with a Sliding Window

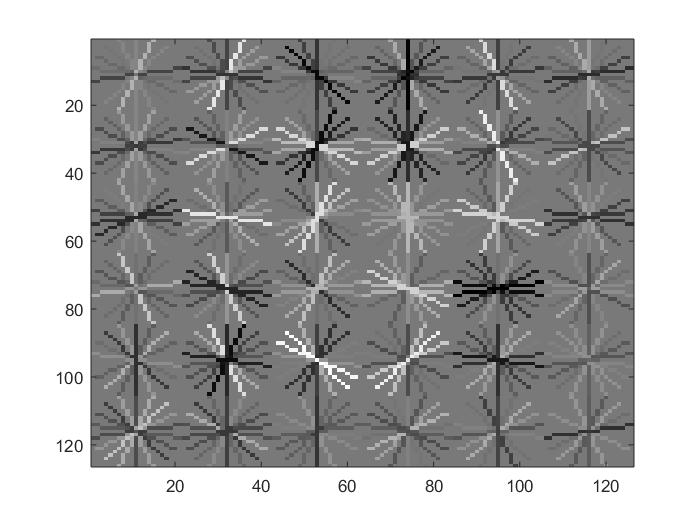

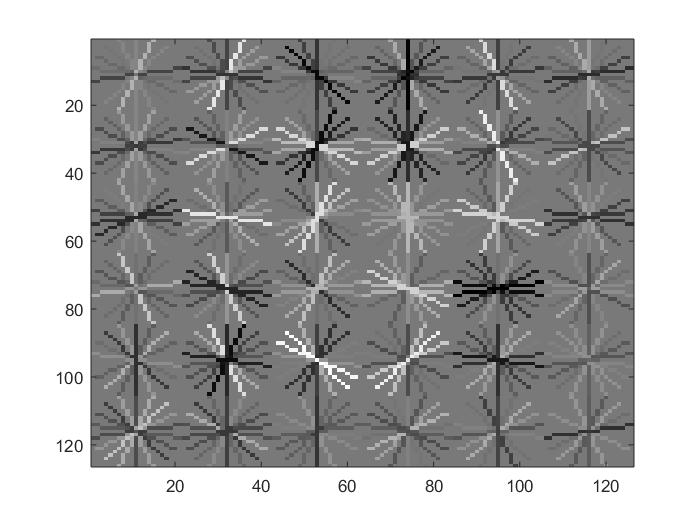

The created HoG template

The goal of this project was to build a face detector. I implemented it with a sliding window over SIFT-like features represented as a Histogram of Oriented Gradients (HoG). The project involved several pieces, including extracting positive and negative training features from a set of photos and training a linear classifier to decide whether a given region of a photo is a face. I implemented the following parts of the face detection pipeline:

- get_positive_features.m

- get_random_negative_features.m

- The section of proj5.m that trains the classifier

- run_detector.m

get_positive_features.m

This function extracts the positive training features, that is, examples of what a face looks like, which teach the linear classifier what to look for. I extracted HoG features from the face photos with a call to vl_hog(). The function returns a matrix of positive features, which is later used to train the linear classifier.
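To make the feature extraction more concrete, here is a simplified HoG-style descriptor written in plain Python. It is a sketch, not vl_hog: vl_hog computes a more elaborate, block-normalized descriptor, while this version only builds a 9-bin histogram of unsigned gradient orientations per cell, weighted by gradient magnitude, and normalizes each cell on its own. The 6-pixel cell size, the 36x36 crop size used in the usage note, and the function names are assumptions for illustration.

```python
import math

def cell_histograms(img, cell_size=6, n_bins=9):
    """Simplified HoG-style descriptor (NOT vl_hog). img is a list of rows of
    grayscale floats. Returns one flat list: n_bins values per cell, row-major."""
    h, w = len(img), len(img[0])
    rows, cols = h // cell_size, w // cell_size  # leftover border pixels are ignored
    feats = []
    for cr in range(rows):
        for cc in range(cols):
            hist = [0.0] * n_bins
            for y in range(cr * cell_size, (cr + 1) * cell_size):
                for x in range(cc * cell_size, (cc + 1) * cell_size):
                    # central-difference gradients, clamped at the image border
                    gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
                    gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
                    mag = math.hypot(gx, gy)
                    angle = math.atan2(gy, gx) % math.pi  # unsigned angle in [0, pi)
                    b = min(int(angle / math.pi * n_bins), n_bins - 1)
                    hist[b] += mag  # vote weighted by gradient magnitude
            # per-cell L2 normalization (real HoG normalizes over blocks of cells)
            norm = math.sqrt(sum(v * v for v in hist)) or 1.0
            feats.extend(v / norm for v in hist)
    return feats

def positive_feature_matrix(face_crops, cell_size=6):
    """Hypothetical helper: one feature row per face crop."""
    return [cell_histograms(crop, cell_size) for crop in face_crops]
```

For a 36x36 crop with 6-pixel cells this yields 6 x 6 cells x 9 bins = 324 values per face, and stacking one row per crop gives the positive feature matrix.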

get_random_negative_features.m

This function samples random photos that contain no faces in order to give the linear classifier negative examples, that is, what not to look for when trying to identify faces. Images were chosen at random by calling rand(1) and multiplying the result by the number of candidate photos. I extracted HoG features from these photos and returned them in a feature matrix that was then passed to the linear classifier.
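The random sampling step can be sketched as follows. Multiplying a uniform draw in [0, 1) by the number of images and flooring it gives a valid 0-based index; in MATLAB's 1-based indexing an equivalent would be something like ceil(rand(1) * n) or randi(n). The 36-pixel patch size, the seeded generator, and the function name are assumptions, and each returned patch would then go through the same HoG extraction as the positive examples.

```python
import random

def sample_negative_patches(images, num_samples, patch_size=36, seed=0):
    """Hypothetical helper: draw num_samples random patch_size x patch_size
    crops from face-free images (each image is a list of rows of floats)."""
    usable = [img for img in images
              if len(img) >= patch_size and len(img[0]) >= patch_size]
    if not usable:
        raise ValueError("no image is large enough for a patch")
    rng = random.Random(seed)
    patches = []
    while len(patches) < num_samples:
        # uniform draw in [0, 1) times the image count, floored -> index 0..n-1
        img = usable[int(rng.random() * len(usable))]
        h, w = len(img), len(img[0])
        top = rng.randrange(h - patch_size + 1)
        left = rng.randrange(w - patch_size + 1)
        patches.append([row[left:left + patch_size]
                        for row in img[top:top + patch_size]])
    return patches
```

Filtering out images smaller than the template first keeps the loop from spinning forever on images that can never yield a patch.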

Training Classifier

To train the classifier, I called vl_svmtrain with the positive and negative features returned by get_positive_features.m and get_random_negative_features.m. Training requires a label for each feature marking it as positive or negative, so I built a vector of 1's and -1's whose lengths matched the numbers of positive and negative features, respectively. A regularization parameter (lambda) of 0.0001 worked best in my experiments.
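The labeling and training step can be illustrated with a small stand-in for vl_svmtrain. This sketch swaps in plain full-batch subgradient descent on an L2-regularized hinge loss, lam/2 * ||w||^2 plus the average hinge loss, which is similar in form to what a linear SVM solver minimizes; it is not VLFeat's solver, and the learning rate, iteration count, and function names are assumptions.

```python
def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def train_linear_svm(pos_feats, neg_feats, lam=1e-4, lr=0.1, iters=500):
    """Minimize lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.x + b))) by
    full-batch subgradient descent (a stand-in for vl_svmtrain)."""
    X = pos_feats + neg_feats
    # label vector: +1 for each positive row, -1 for each negative row
    y = [1.0] * len(pos_feats) + [-1.0] * len(neg_feats)
    n, d = len(X), len(X[0])
    w, b = [0.0] * d, 0.0
    for _ in range(iters):
        gw = [lam * wj for wj in w]  # gradient of the regularizer
        gb = 0.0                     # the bias is left unregularized here
        for xi, yi in zip(X, y):
            if yi * (dot(w, xi) + b) < 1:  # hinge is active for this example
                for j in range(d):
                    gw[j] -= yi * xi[j] / n
                gb -= yi / n
        w = [wj - lr * g for wj, g in zip(w, gw)]
        b -= lr * gb
    return w, b

# Toy 2-D features, only to show the call shape.
pos = [[2.0, 2.0], [3.0, 1.5]]
neg = [[-2.0, -1.0], [-1.5, -2.5]]
w, b = train_linear_svm(pos, neg)
```

A small lambda such as 0.0001 puts little weight on the regularizer, so the solution is driven mostly by fitting the training labels.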

run_detector.m

This function tests how well the trained linear SVM performs by searching for faces in a set of test images that have ground-truth annotations. I looped over each test image and processed it at 20 different scales. At each scale, I extracted HoG features cell by cell using the defined cell size and evaluated the classifier on each template-sized window. I then computed a confidence for each window using the SVM weights and discarded any detection with a confidence below 0.75.
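The multi-scale sliding window can be sketched as below. It assumes the HoG cells for each resized image are already available as a grid of per-cell feature vectors (the callable cell_grid_at_scale is hypothetical), scores each window as w.x + b, keeps scores of at least 0.75, and maps boxes back to the original image by dividing by the scale factor. The 6-cell template, 6-pixel cells, and any particular list of 20 scales (for example 0.9 ** k for k in range(20)) are assumptions.

```python
def detect_at_scale(cell_grid, w, b, template_cells, cell_size, scale, threshold=0.75):
    """Slide a template_cells x template_cells window over a grid of per-cell
    feature vectors. Returns (x_min, y_min, x_max, y_max, confidence) boxes in
    ORIGINAL-image pixel coordinates for windows scoring >= threshold."""
    rows, cols = len(cell_grid), len(cell_grid[0])
    detections = []
    for r in range(rows - template_cells + 1):
        for c in range(cols - template_cells + 1):
            # concatenate the cell descriptors covered by the window (row-major)
            x = [v for rr in range(r, r + template_cells)
                   for cc in range(c, c + template_cells)
                   for v in cell_grid[rr][cc]]
            conf = sum(wi * xi for wi, xi in zip(w, x)) + b
            if conf >= threshold:
                # undo the resize so boxes refer to the original image
                x_min = c * cell_size / scale
                y_min = r * cell_size / scale
                size = template_cells * cell_size / scale
                detections.append((x_min, y_min, x_min + size, y_min + size, conf))
    return detections

def multiscale_detect(cell_grid_at_scale, scales, w, b,
                      template_cells=6, cell_size=6, threshold=0.75):
    detections = []
    for scale in scales:
        grid = cell_grid_at_scale(scale)  # hypothetical: HoG cells of the resized image
        if len(grid) < template_cells or len(grid[0]) < template_cells:
            continue  # the resized image is smaller than the template
        detections.extend(detect_at_scale(grid, w, b, template_cells,
                                          cell_size, scale, threshold))
    return detections
```

Shrinking the image (scale below 1) lets a fixed-size template match larger faces, which is why boxes found at small scales come out large after being mapped back.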

Results


Face template HoG visualization.

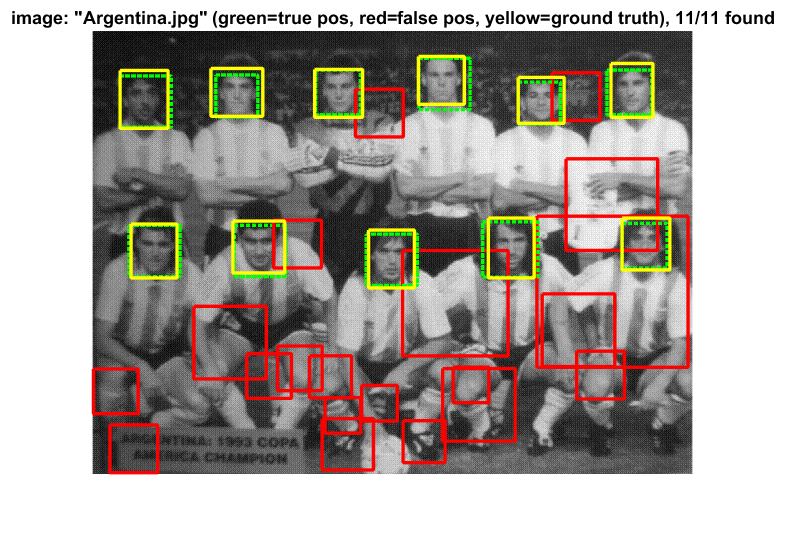

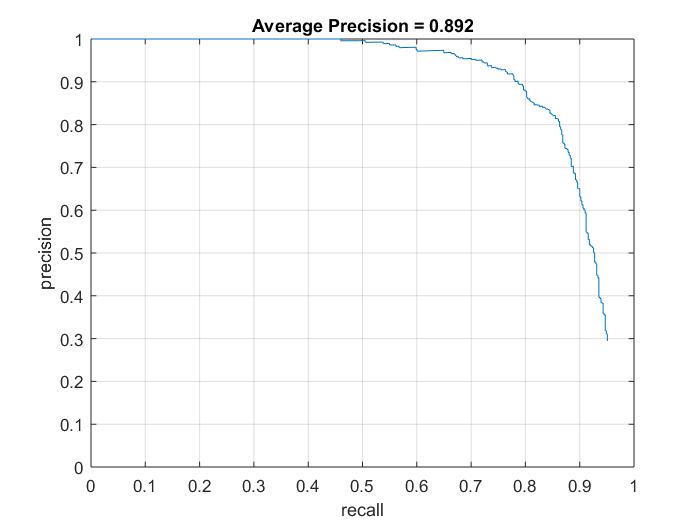

Precision-recall curve.

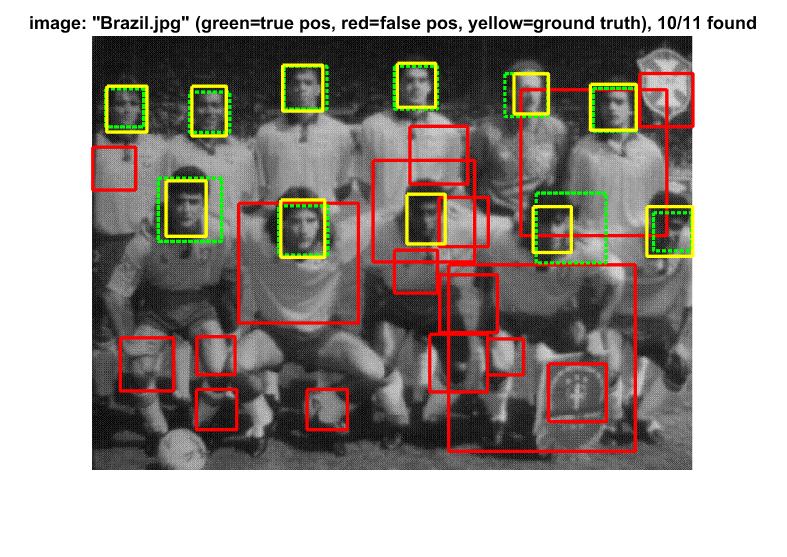

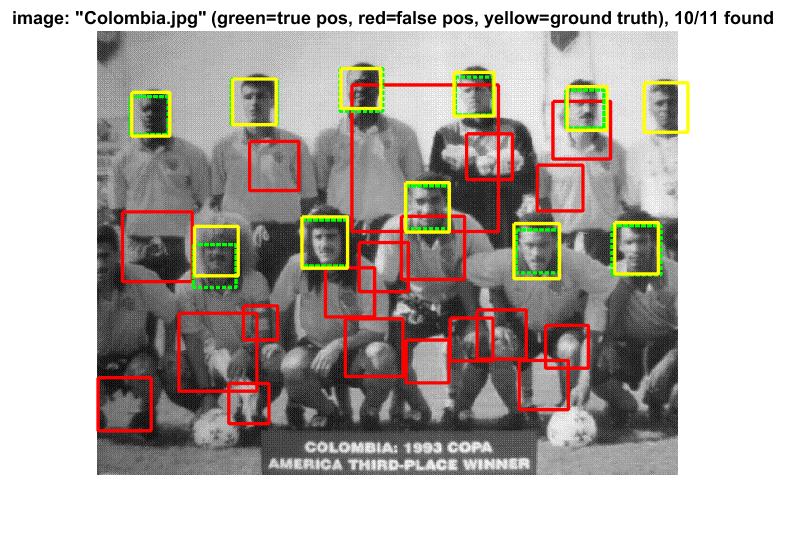

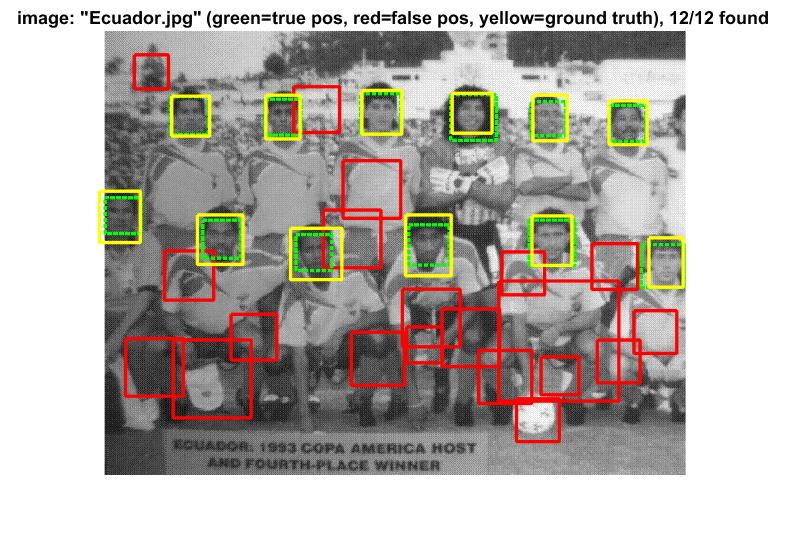

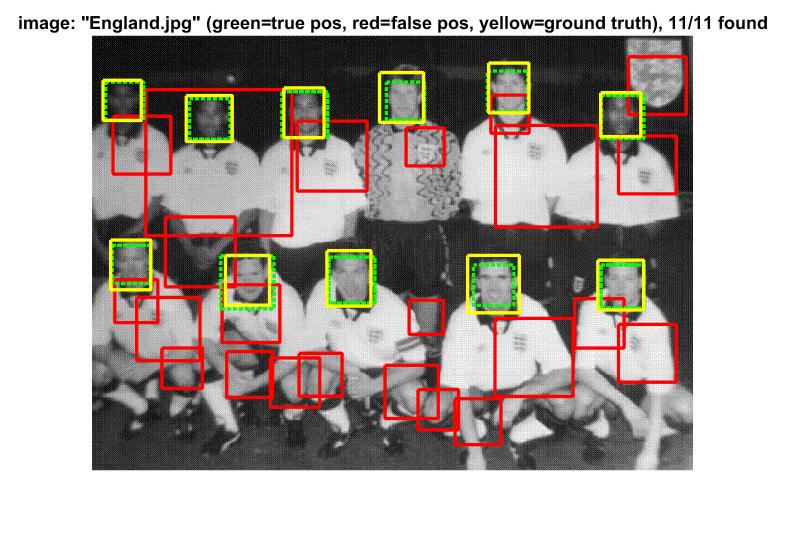

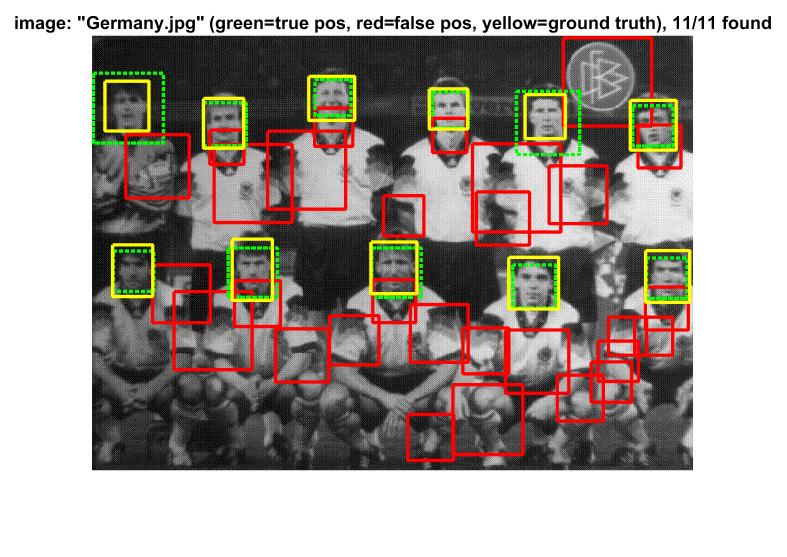

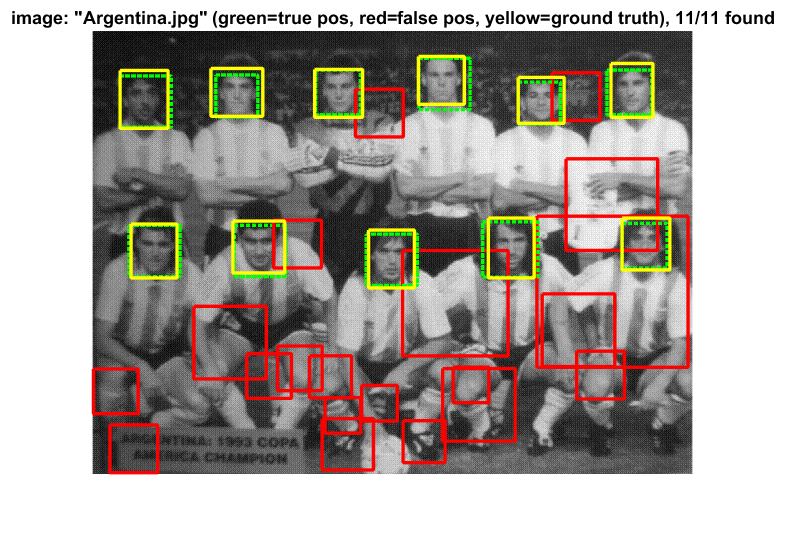

Face detection

My detector achieved an average precision of about 89.2%. In the team photographs above, it found all of the faces, or all but one, in each picture. However, it also produced a large number of false positives. I was working on correcting this but ran out of time. With more time, I would have experimented with the confidence threshold to reduce the number of false positives.
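The effect of the confidence threshold on false positives can be illustrated with a small sketch. It assumes each detection has already been matched against the ground truth, so it is just a (confidence, is_true_positive) pair; the function name is hypothetical, and the toy detections below are made up, not results from this project.

```python
def precision_recall_at(detections, num_ground_truth, threshold):
    """detections: (confidence, is_true_positive) pairs, where is_true_positive
    says whether the box was matched to a ground-truth face."""
    kept = [is_tp for conf, is_tp in detections if conf >= threshold]
    tp = sum(kept)                                # True counts as 1
    fp = len(kept) - tp
    precision = tp / len(kept) if kept else 1.0   # convention when nothing is kept
    recall = tp / num_ground_truth if num_ground_truth else 0.0
    return precision, recall, fp

# Made-up toy detections, not results from this project.
toy = [(2.0, True), (1.5, True), (1.0, False), (0.8, False), (0.5, True)]
for t in (0.75, 1.2):
    print(t, precision_recall_at(toy, num_ground_truth=3, threshold=t))
```

In this toy list, raising the threshold from 0.75 to 1.2 removes both false positives without losing a true positive, while raising it above 1.5 would start to drop true faces, which is the precision-recall trade-off a threshold sweep explores.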