Project 5 / Face Detection with a Sliding Window

Example of a right floating element.

For this project, I immplemented face detection using a multiscale sliding window driven by linear SVM classification of HoG grid features.

The system first collects positive training examples from images of a fixed size. As each training image is of the same size as the proposed classifier window, each image is translated into a single feature by finding a histogram of gradients for each spatial bin of a fixed size within the image. Then, negative examples are produced from the negative training set. As with the positive example set, example features are created from image patches of the same size as the proposed classifier window. In the case of the negative training image set, however, appropriately sized image patches are selected at random, evenly distributed among all available negative training images up to the desired training sample size. These training features are then appropriately labeled and a single linear SVM is trained on them. In the process of tuning my detector, I found that a HoG cell size of 3 and a lambda value of 5E-7 for training the SVM were ideal. All available positive example images were used for generating positive example features, as were 100,000 randomly-selected negative example image patches. Though increasing the number of negative image patches used from my first tested value of 10,000 to 100,000 showed some minor improvement in the resulting classifier's average precision, further image patches did not show much improvement while increasing the training time of the SVM.

The system then tests this SVM on new images, attempting to identify faces in training images. A sliding window of the same size as the classifier window used to build the original training features is iterated over each image, and a new HoG grid feature is generated for the window at each location. This feature is then classified by the trained SVM. If the computed confidence of a face classification is greater than some threshold, then the bounding box for the sliding window and the image patch's corresponding face match confidence are stored. Once the window is evaluated at all positions over an image, the image is scaled down by a fixed scale step constant and the process is completed with the same sliding window on the new scaled image. This process is continued until the image is no longer larger than the sliding window, and at all image scales image patches of high match confidence are recorded to account for the location of their bounding boxes at the image's original scale. With high-confidence bounding boxes of suspected faces collected from all scales of an image, the system then uses the provided nonmaximal suppression code to prune overlapping bounding boxes of lower confidence. In the process of tuning the detector, I found that a confidence threshold of 0.7 or 0.8 was most effective at maximizing the detector's precision by screening false positives while also minimizing time spent in the removal of nonmaximal bounding boxes, depending on the negative training samples selected on any particular run of the pipeline. A scale step factor of 0.9 gave better precision then lower values; increasing (or "refining") the scale step constant further gave minimal improvements to performance while increasing runtime to the point of making the detector unwieldy due to the sheer number of matches being evaluated. Of further note was the effectiveness of including some small amount of antialiasing into the scaling process; early versions of the pipeline used simple downsampling when scaling the image, and adding some amount of averaging with nearby neighboring pixels to the downscaling process immediately improved precision by roughly 15%.

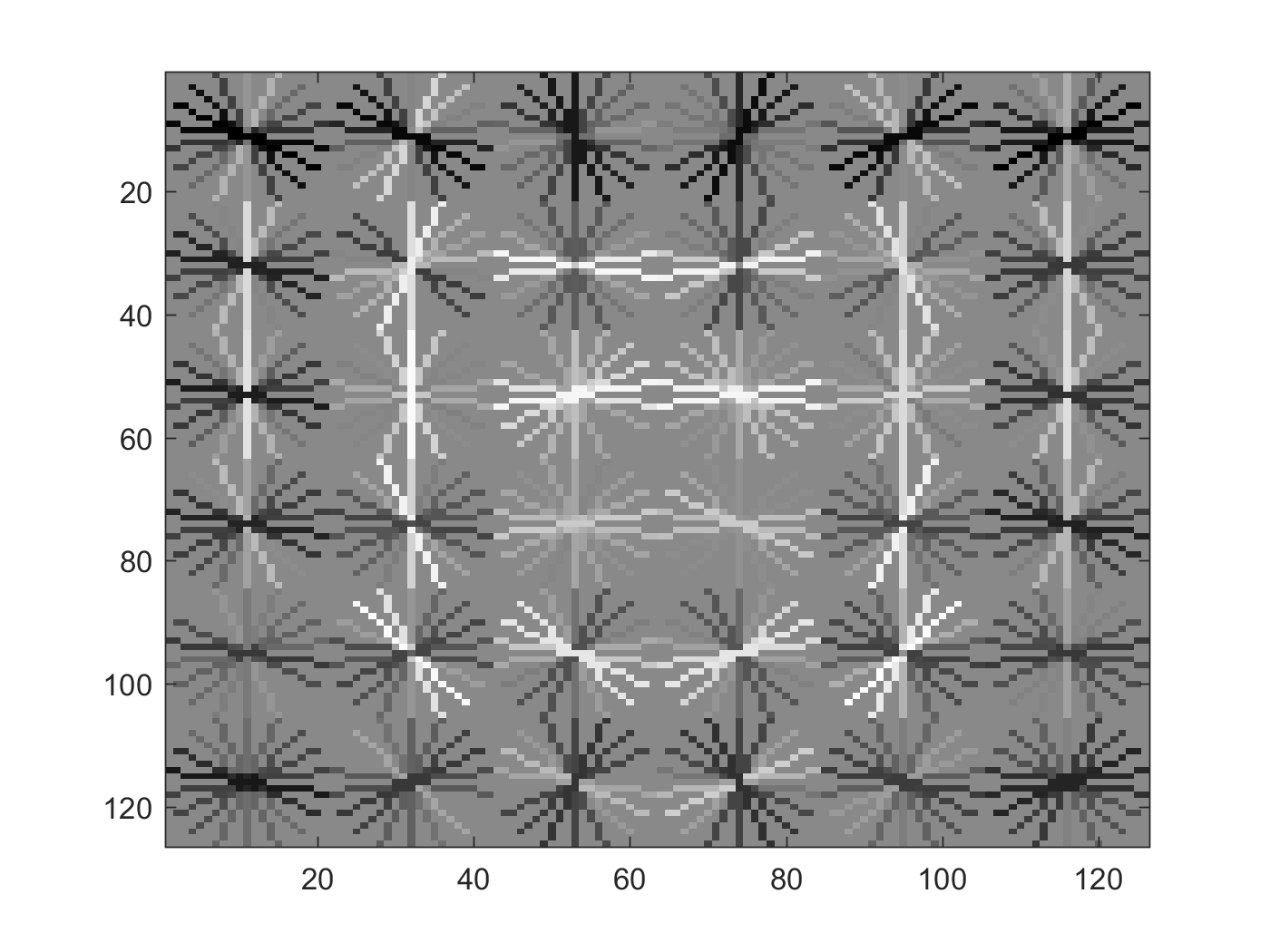

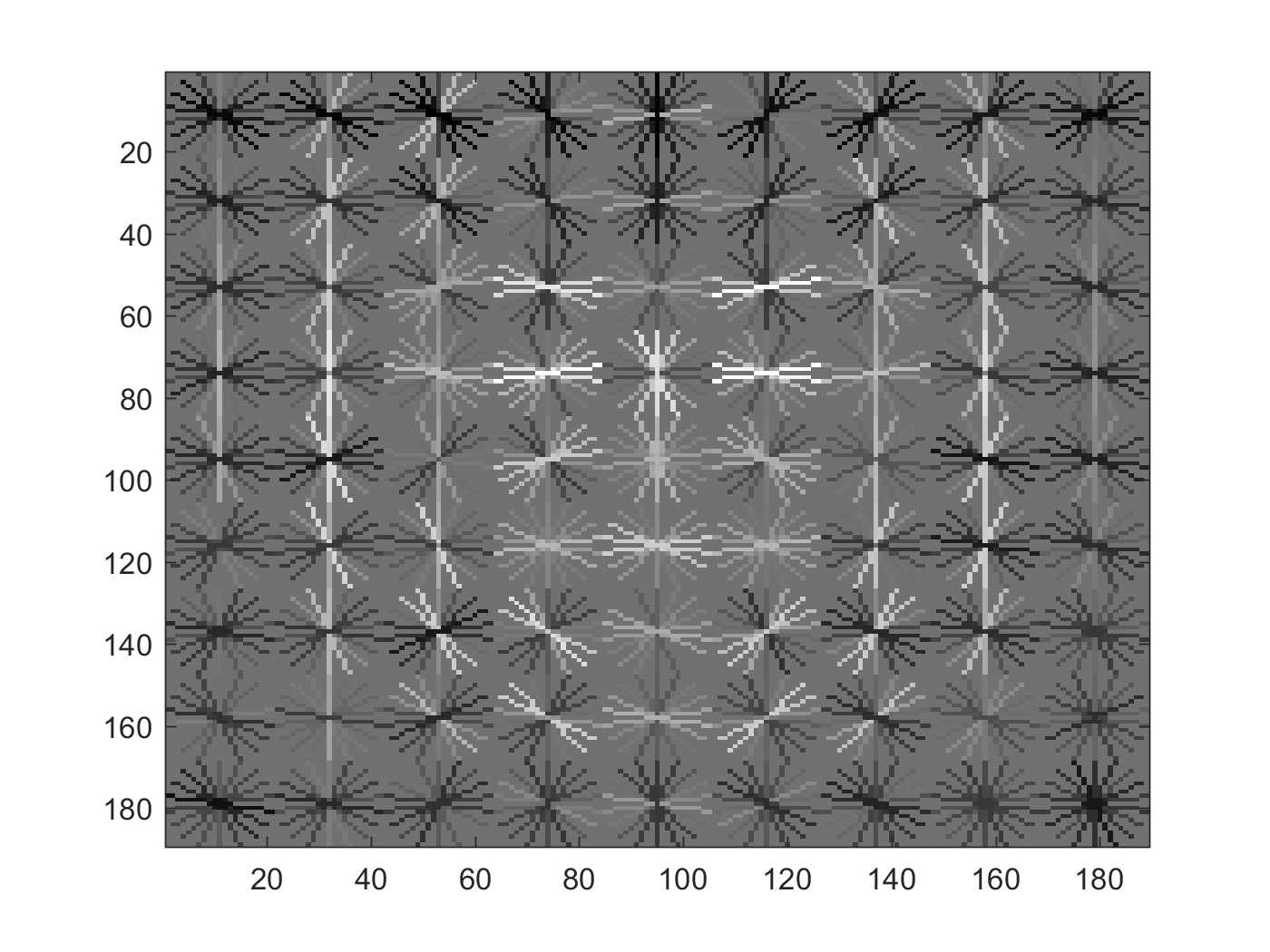

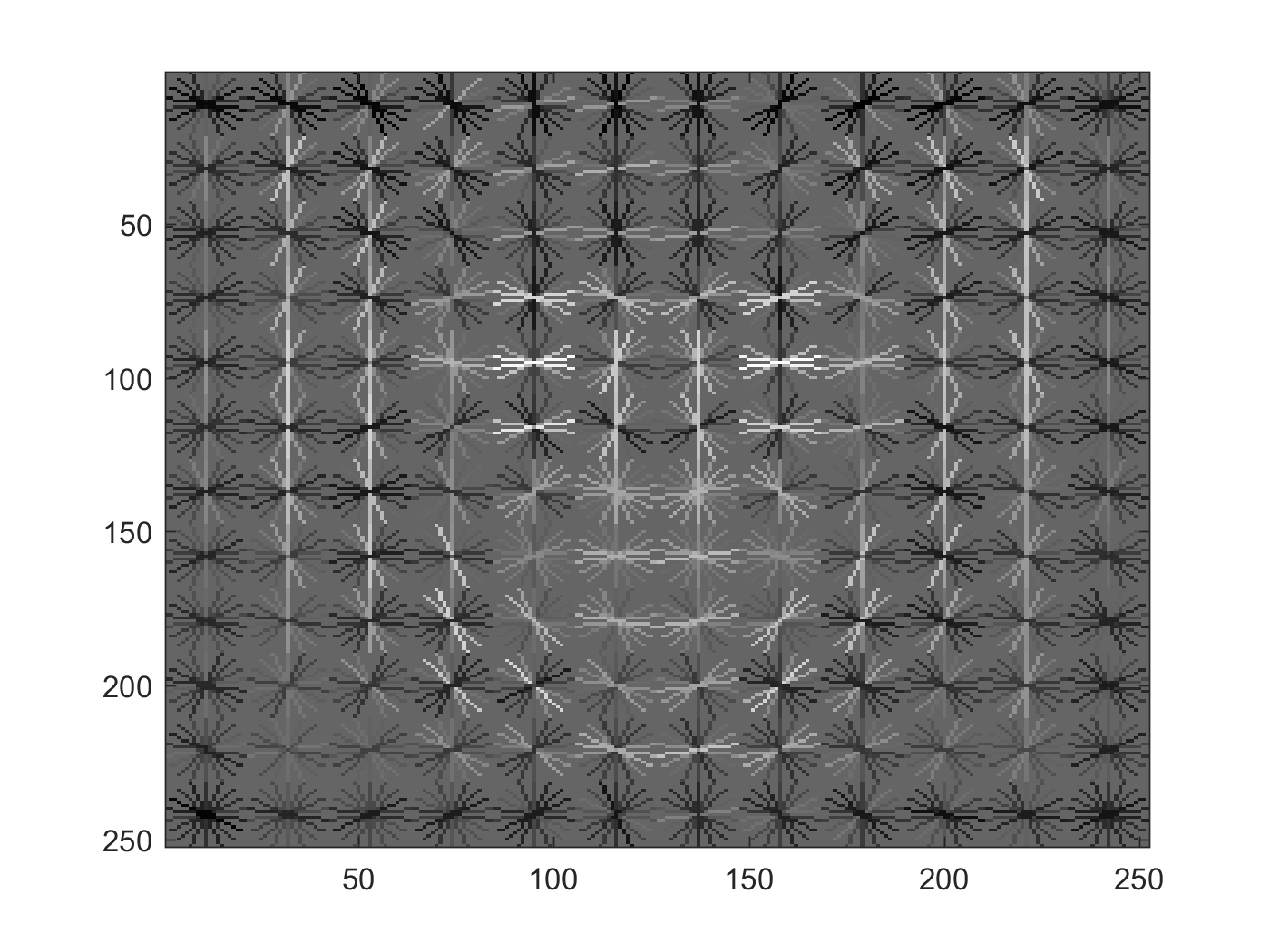

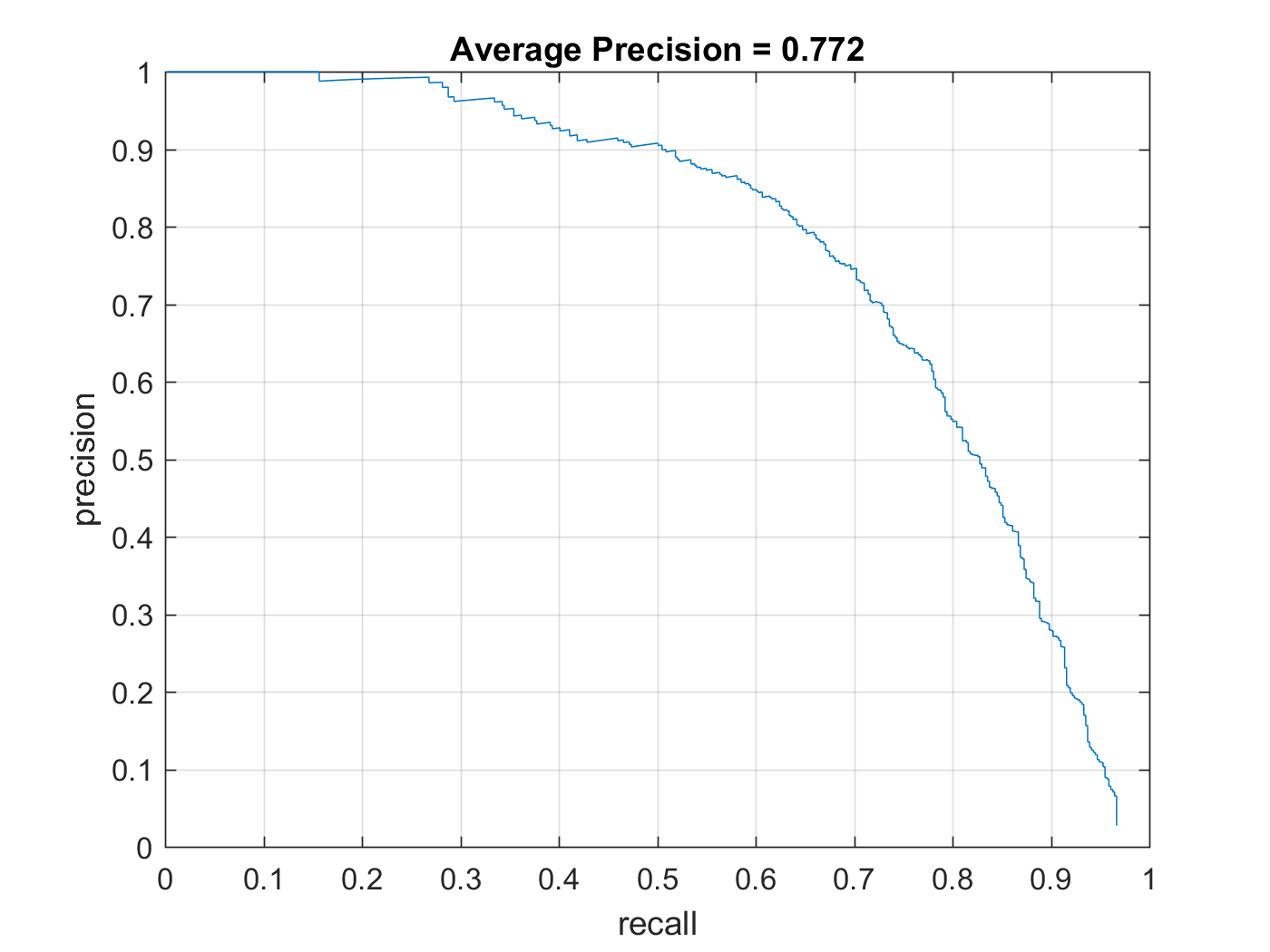

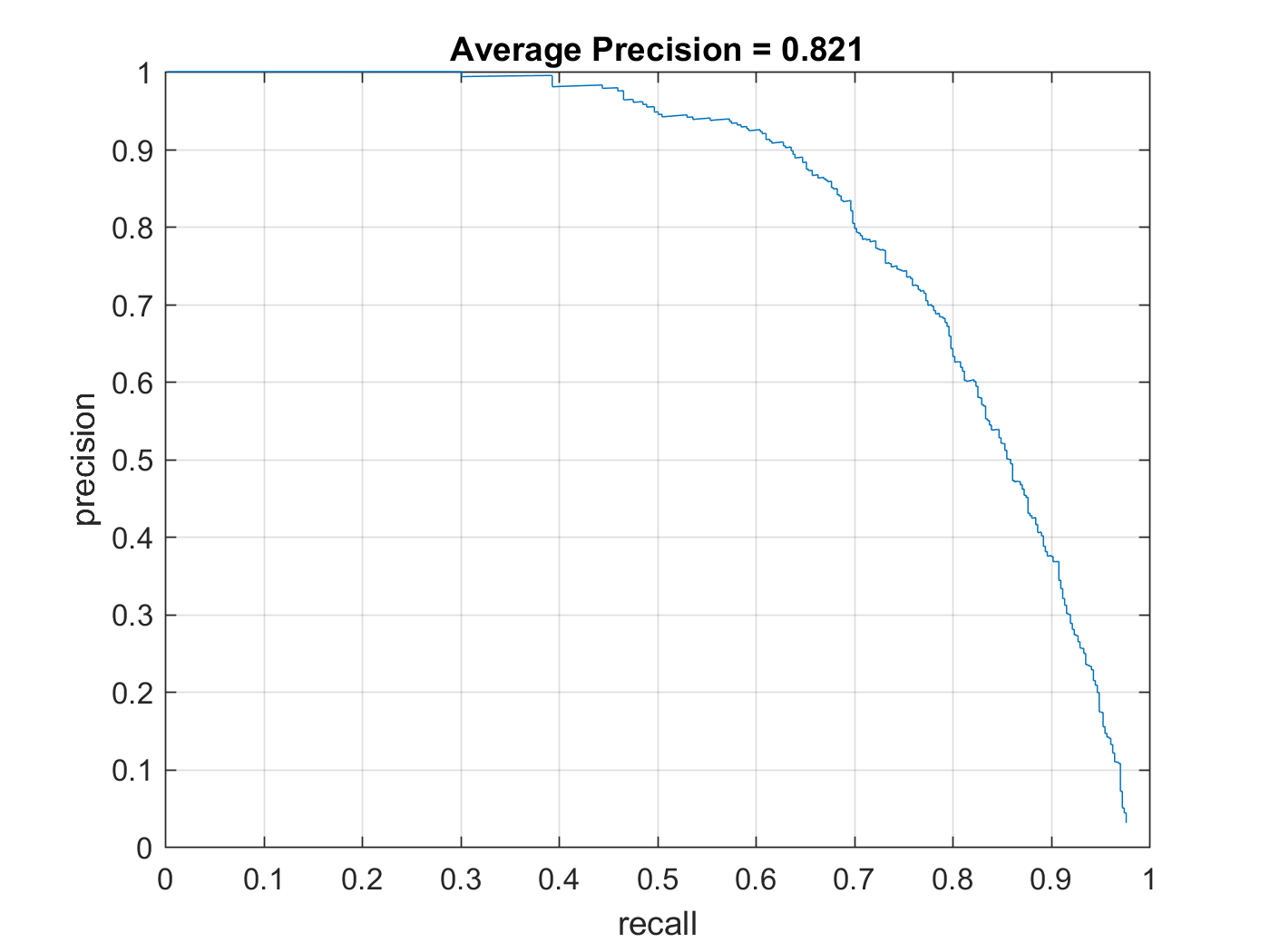

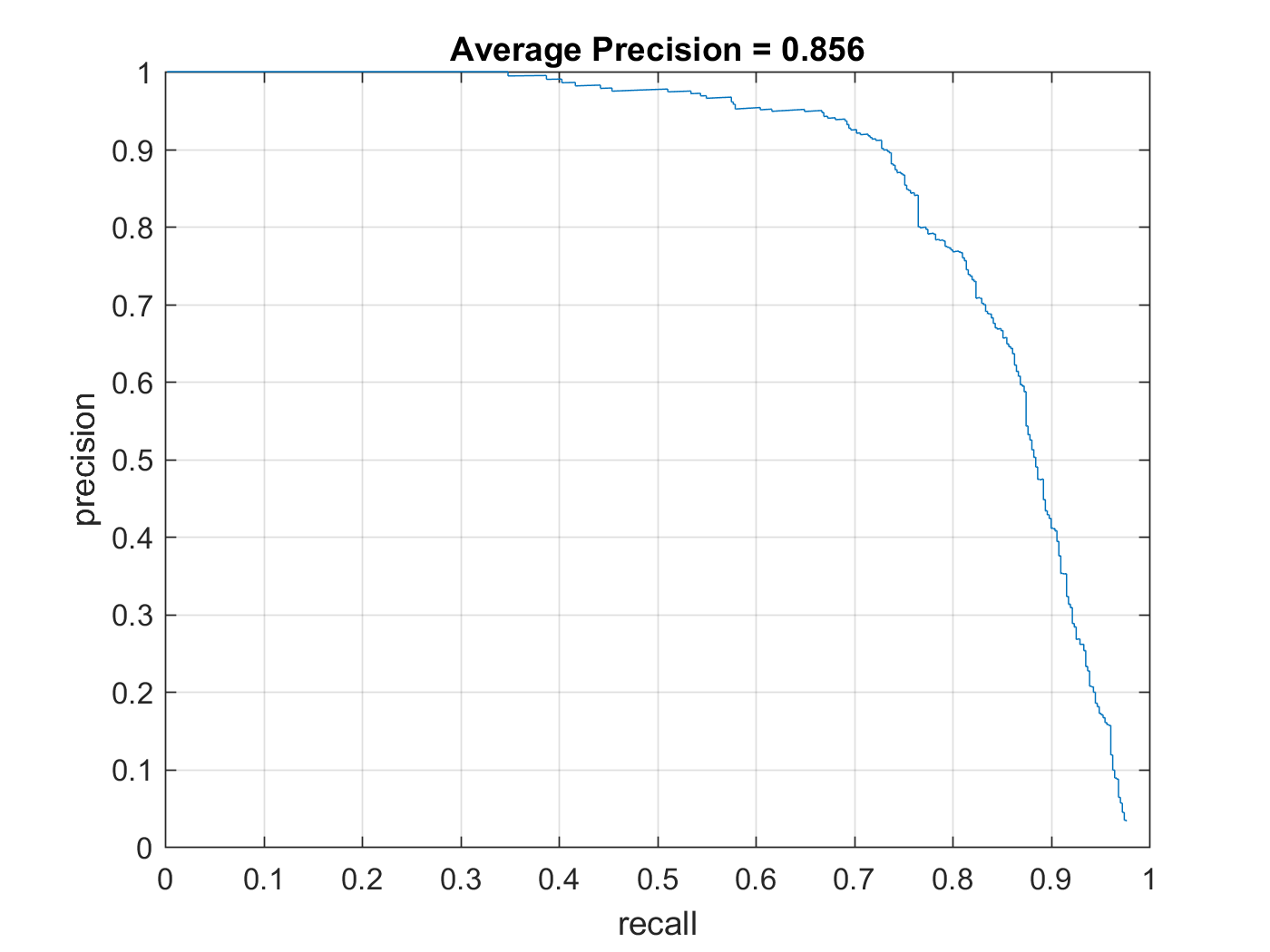

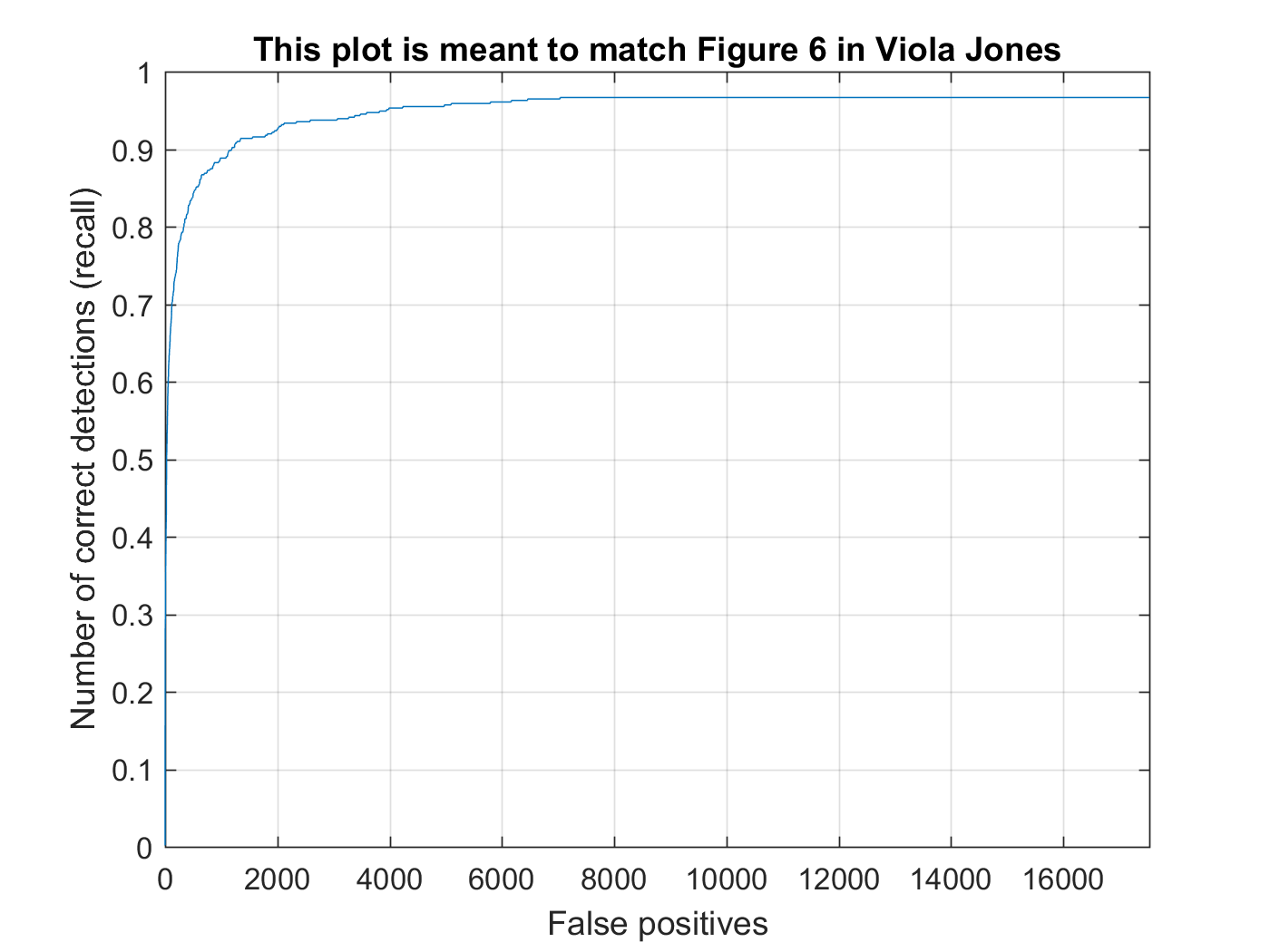

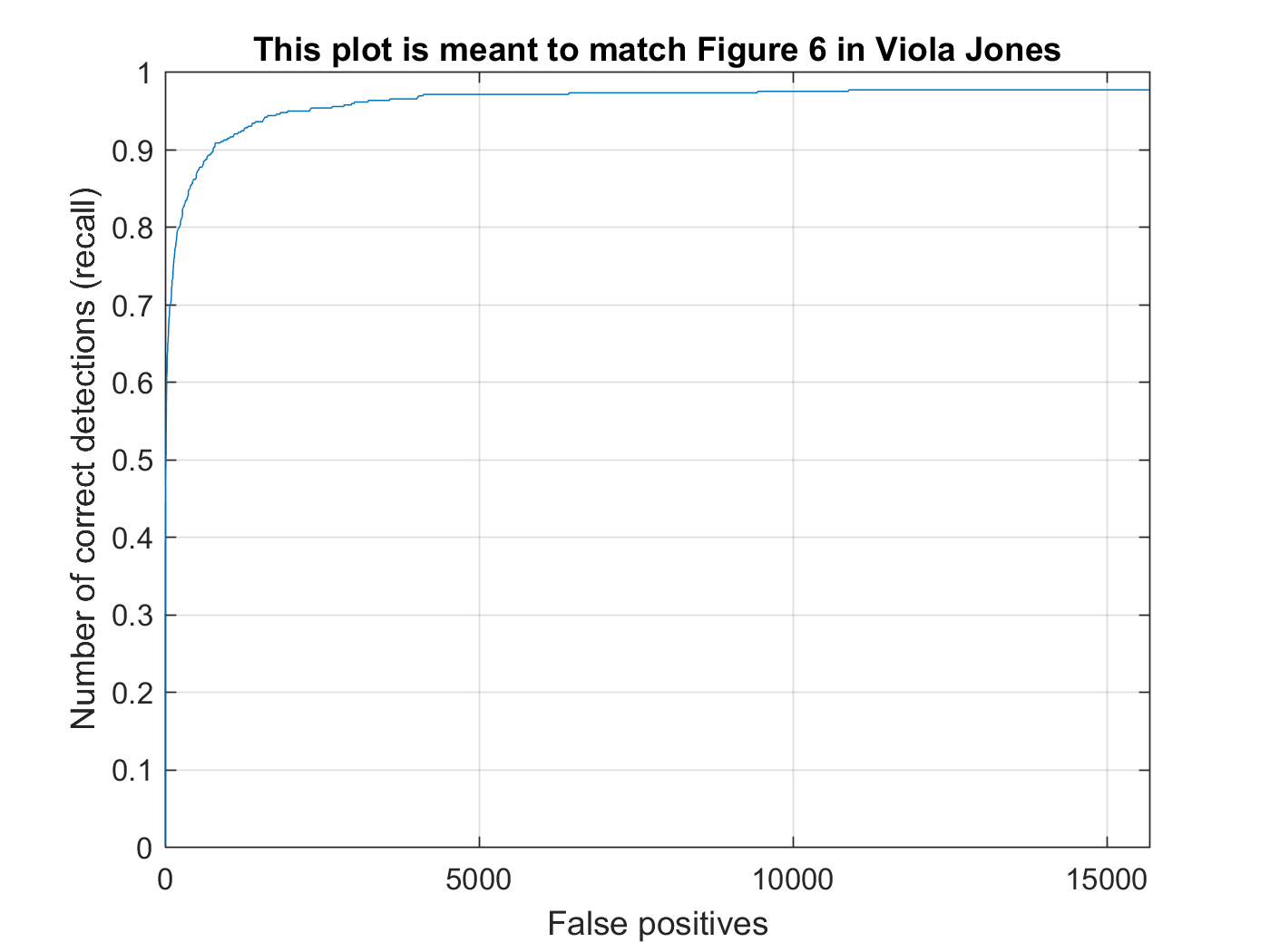

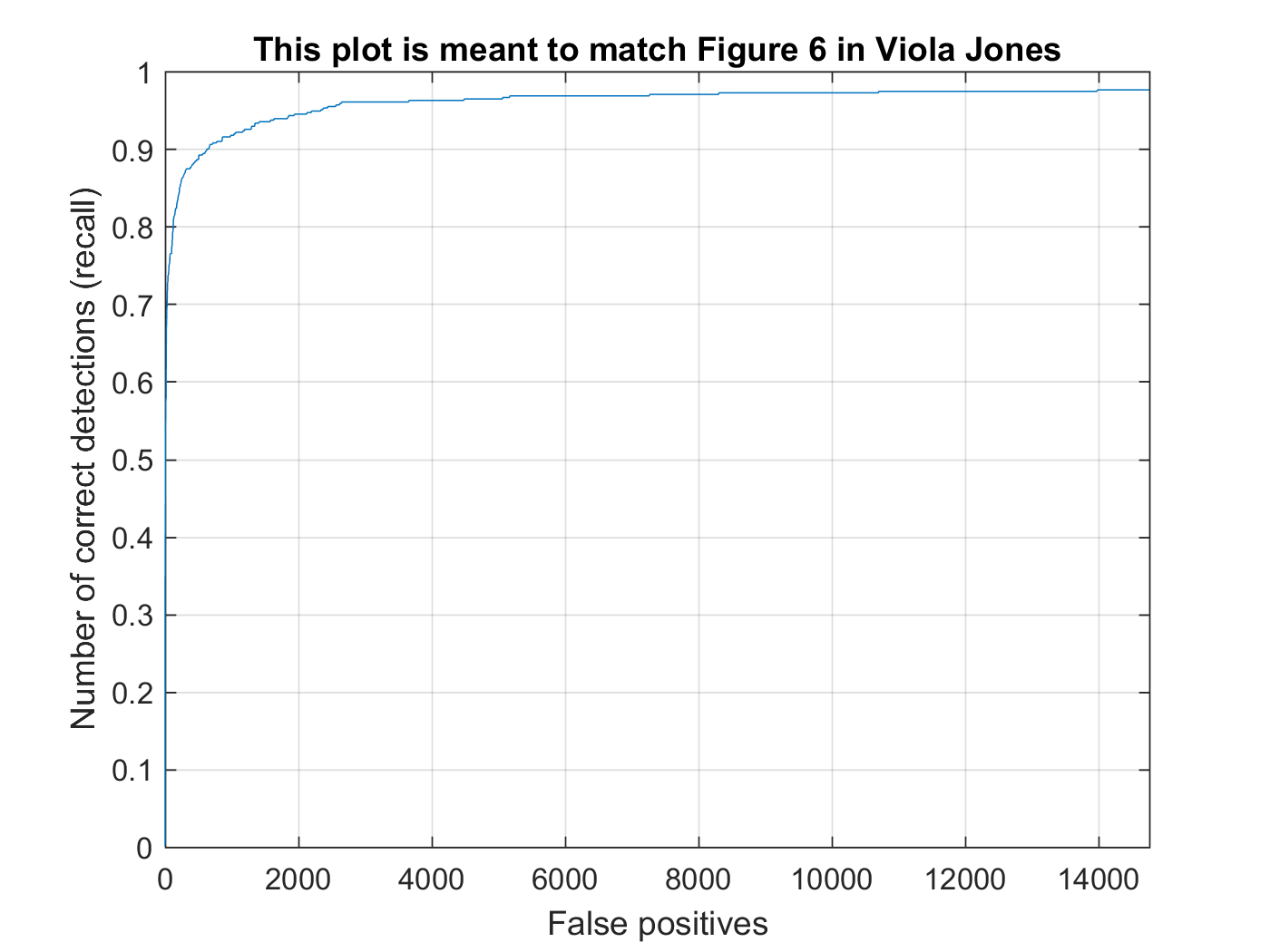

Below are some examples of classifiers, recall curves, and average precisions produced by my system at three different HoG cell sizes using what I found to be the ideal parameter values discussed above.

Classifiers trained for 6-, 4- and 3-pixel HoG cell sizes.

Precision curves for 6-, 4- and 3-pixel cell sizes.

Recall for 6-, 4- and 3-pixel HoG cell sizes.