Project 5 / Face Detection with a Sliding Window

In this project, we had to create a multi-scale, sliding window face detector. The detector uses HoG features to train a linear SVM. My code includes the following:

- Multi-scale sliding window detection

- Hard negative mining with the sliding window

- A self-implemented HoG descriptor

- Alternative positive training data (UFI database)

- SIFT descriptors with the sliding window

Implementation Details

Features are represented with Histograms of Oriented Gradients (HoG) computed by vl_hog from the VLFeat library. One important parameter is the cell size of the histograms. I tried cell sizes of 3, 4, and 6 pixels. Smaller cells give better performance because images are described in finer detail, but they slow down the algorithm significantly.
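To make the speed trade-off concrete, the arithmetic below computes the length of the HoG template vector the SVM works with. It assumes a 36 x 36 pixel template and vl_hog's default UoCTTI variant, which stores 31 values per cell. The helper name `hog_feature_length` is hypothetical.

```python
# Length of the HoG template vector for a square template, assuming
# vl_hog's default UoCTTI variant (31 values per cell).
DIMS_PER_CELL = 31  # 18 signed + 9 unsigned orientation bins + 4 texture terms


def hog_feature_length(cell_size, template_size=36):
    """Number of HoG values in a template_size x template_size window."""
    cells_per_side = template_size // cell_size
    return cells_per_side * cells_per_side * DIMS_PER_CELL


for cell in (3, 4, 6):
    print(f"cell size {cell}: {hog_feature_length(cell)} values")
```

Going from 6-pixel to 3-pixel cells makes each window's feature four times longer (1116 to 4464 values). Because windows step one cell at a time, there are also roughly four times as many window positions per image, so both training and detection slow down.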

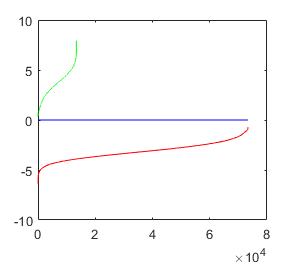

SVM training curve

Positive and negative data are processed separately. Handling the positive data is straightforward: extract features for each image and return the results. Negative data are sampled randomly at different scales. Parameters including the cell size, the number of negative features, and the number of scales were tuned in the experiments.
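Below is a minimal sketch of random multi-scale negative sampling, written in Python rather than the project's MATLAB. The function name, the 36-pixel template, and the uniform choice among valid (image, scale) pairs are all assumptions. The function only returns window positions. The caller would crop each window from the correspondingly rescaled image and compute its HoG features.

```python
import random


def sample_negative_windows(image_sizes, num_samples, scales, template=36, seed=0):
    """Return (image_index, scale, top, left) tuples for random negative crops.

    image_sizes: (height, width) of each non-face image.
    Each tuple describes a template x template window inside the image
    after it has been rescaled by `scale`.
    """
    rng = random.Random(seed)
    # Keep only (image, scale) pairs whose rescaled image can hold a window.
    valid = [(i, s) for i, (h, w) in enumerate(image_sizes) for s in scales
             if int(h * s) >= template and int(w * s) >= template]
    if not valid:
        raise ValueError("no image is large enough at any scale")
    samples = []
    for _ in range(num_samples):
        idx, scale = rng.choice(valid)
        h, w = image_sizes[idx]
        top = rng.randint(0, int(h * scale) - template)
        left = rng.randint(0, int(w * scale) - template)
        samples.append((idx, scale, top, left))
    return samples


sizes = [(240, 320), (100, 80)]  # hypothetical non-face image sizes
windows = sample_negative_windows(sizes, num_samples=5, scales=[1.0, 0.5, 0.25])
print(windows)
```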

A linear SVM is trained as the classifier. Performance on the training set is almost perfect (accuracy between 0.98 and 1.00). A linear kernel requires fewer computational resources than other kernels and, for this task, gives similar performance. As reported by Dalal and Triggs, a Gaussian kernel improves performance by 3% to 4%, but at the cost of a much higher run time.
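The linear SVM objective can be illustrated with a Pegasos-style stochastic subgradient solver. This technique is swapped in for illustration; it is neither VLFeat's solver nor the project's code. The regularization weight `lam`, the epoch count, and absorbing the bias as a constant feature (which also regularizes the bias) are assumptions.

```python
import random


def train_linear_svm(X, y, lam=0.1, epochs=50, seed=0):
    """Pegasos-style stochastic subgradient descent for a linear SVM.

    Approximately minimizes lam/2 * ||w||^2 + mean_i max(0, 1 - y_i * w.x_i),
    where each x_i gets a constant 1 appended so that w also carries the bias.
    X: list of feature vectors; y: labels in {+1, -1}. Returns (weights, bias).
    """
    rng = random.Random(seed)
    Xa = [list(x) + [1.0] for x in X]  # absorb the bias term
    w = [0.0] * len(Xa[0])
    order = list(range(len(Xa)))
    t = 0
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            t += 1
            eta = 1.0 / (lam * t)  # Pegasos step size
            margin = y[i] * sum(wj * xj for wj, xj in zip(w, Xa[i]))
            w = [(1.0 - eta * lam) * wj for wj in w]  # regularizer step
            if margin < 1.0:  # hinge loss is active for this example
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, Xa[i])]
    return w[:-1], w[-1]


def svm_score(w, b, x):
    """Signed SVM confidence w.x + b; positive means 'face'."""
    return sum(wj * xj for wj, xj in zip(w, x)) + b


X = [[2, 2], [3, 1], [2, 3], [-2, -2], [-1, -3], [-3, -1]]
y = [1, 1, 1, -1, -1, -1]
w, b = train_linear_svm(X, y)
print([1 if svm_score(w, b, x) > 0 else -1 for x in X])
```

The training-set accuracy check at the end mirrors the near-perfect training accuracy reported above. It says nothing about test performance.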

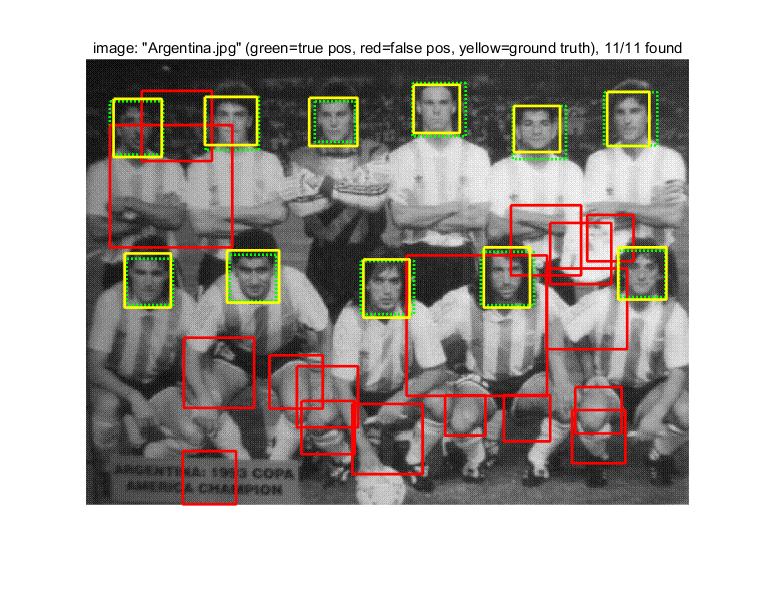

Face detection uses the sliding window approach in HoG space. Each test image is rescaled to multiple scales, and HoG features are computed for each rescaled image. The SVM then scores every 12 x 12-cell window of the resulting HoG feature map, which is 36 x 36 pixels at a 3-pixel cell size. Finally, windows that score above a threshold of 0 go through non-maximum suppression to produce the final detections.
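The sketch below scores every 12 x 12-cell window of one HoG map, maps hits back to original-image pixels, and then applies greedy non-maximum suppression. It rests on several assumptions: the template weights are flattened in (row, column, dimension) order, cells are 3 pixels wide, and suppression uses an IoU limit of 0.5. The function names are hypothetical. The project's own suppression routine may use a different overlap criterion.

```python
def detect_at_scale(hog_map, w, b, scale, cell_size=3, win_cells=12, threshold=0.0):
    """Score every win_cells x win_cells window of one HoG map.

    hog_map: H x W x D nested lists computed from the image rescaled by `scale`.
    w: template weights of length win_cells * win_cells * D, (row, col, dim) order.
    Returns (x_min, y_min, x_max, y_max, score) boxes in original-image pixels.
    """
    H, W = len(hog_map), len(hog_map[0])
    boxes = []
    for r in range(H - win_cells + 1):
        for c in range(W - win_cells + 1):
            feat = [v for rr in range(r, r + win_cells)
                    for cc in range(c, c + win_cells)
                    for v in hog_map[rr][cc]]
            score = sum(wi * fi for wi, fi in zip(w, feat)) + b
            if score > threshold:
                # Cell coordinates -> rescaled pixels -> original pixels.
                x0 = c * cell_size / scale
                y0 = r * cell_size / scale
                size = win_cells * cell_size / scale
                boxes.append((x0, y0, x0 + size, y0 + size, score))
    return boxes


def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max, ...) boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, overlap=0.5):
    """Greedily keep the highest-scoring boxes, dropping heavy overlaps."""
    kept = []
    for box in sorted(boxes, key=lambda bx: bx[4], reverse=True):
        if all(iou(box, k) <= overlap for k in kept):
            kept.append(box)
    return kept
```

For a full image, one would call `detect_at_scale` once per rescaled copy and concatenate the boxes from all scales. Running `non_max_suppression` once on that combined list keeps a single box per face.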

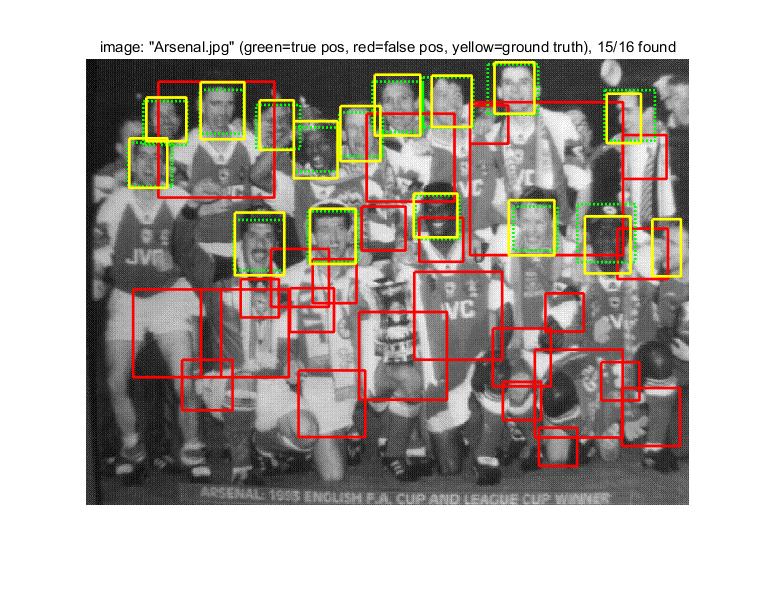

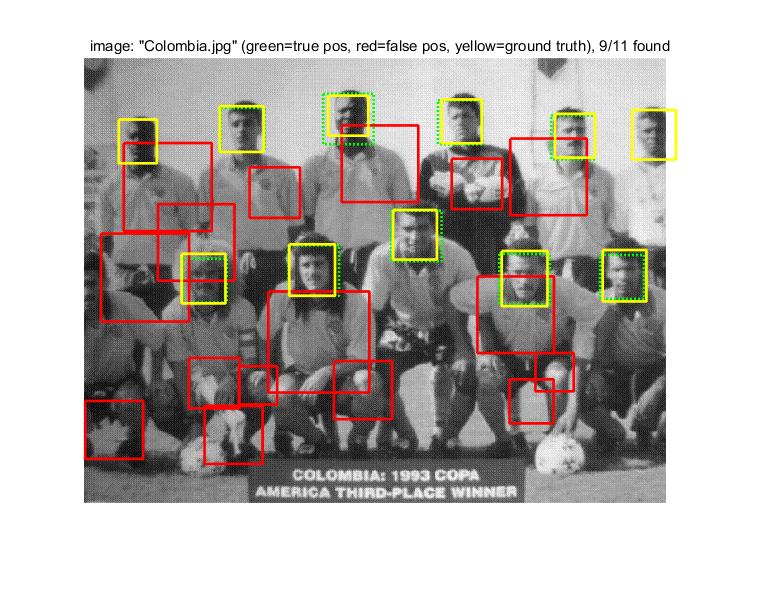

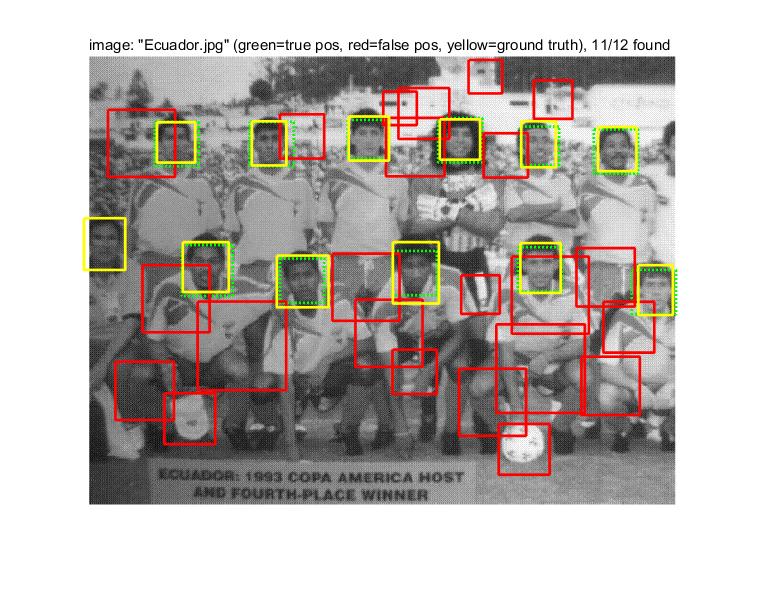

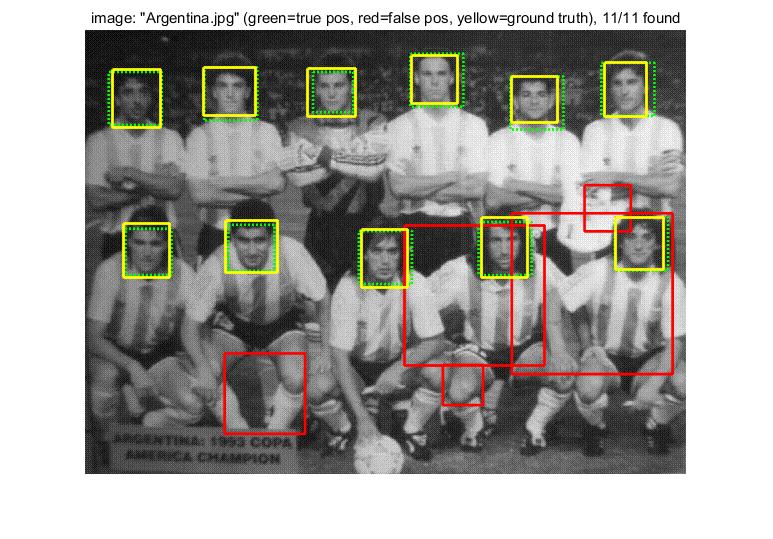

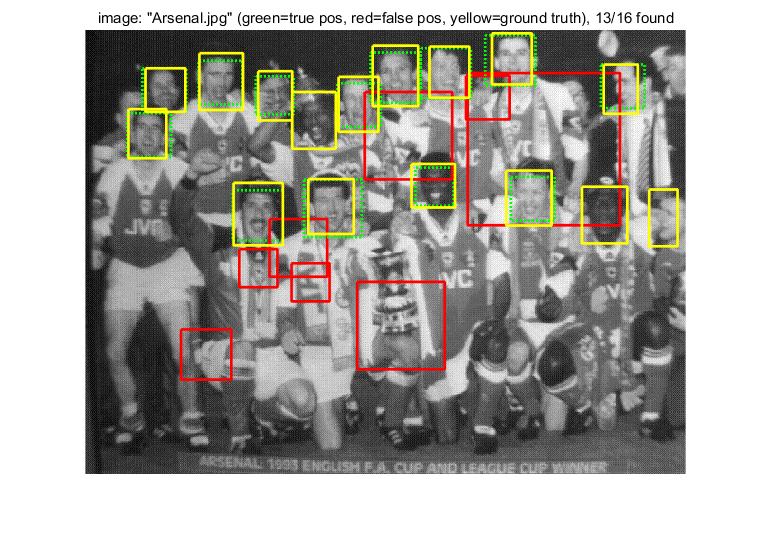

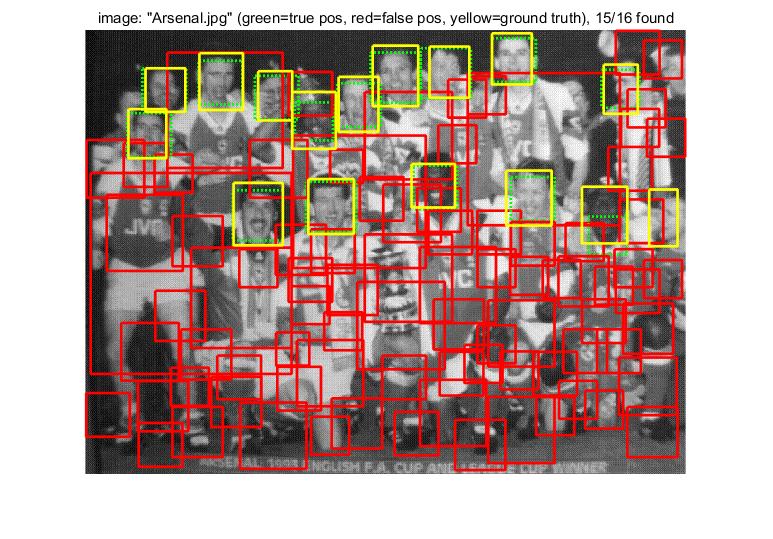

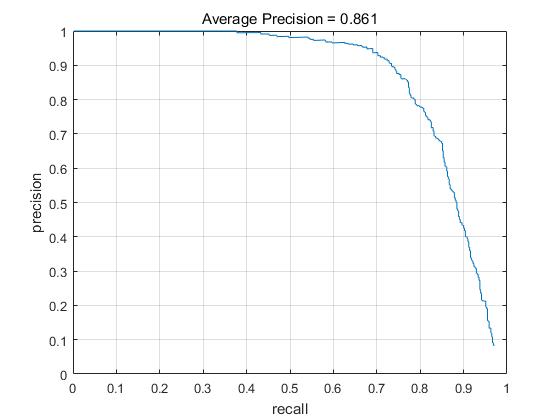

Results with the multi-scale sliding window

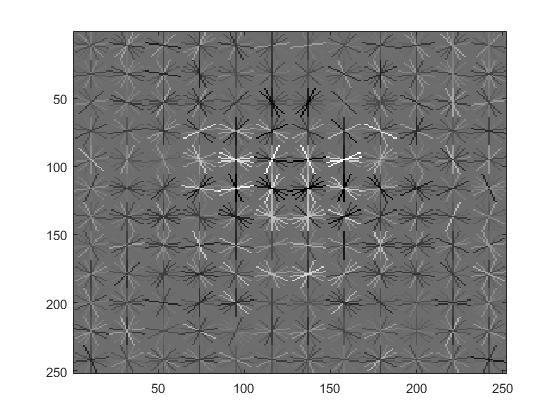

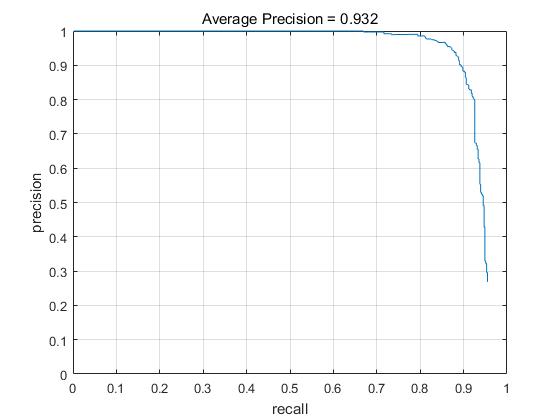

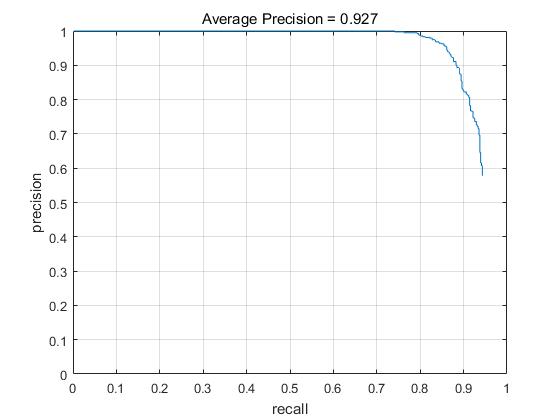

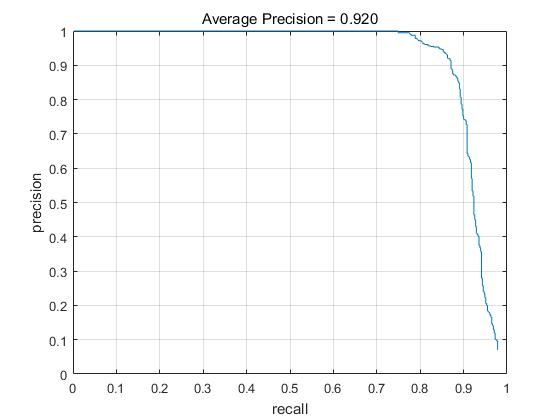

In the baseline implementation, the positive training data are augmented with mirrored images. The negative data are sampled at multiple scales, with 100,000 samples and a 3-pixel cell size. Sliding window detection uses 21 scales (2.^(0:-0.2:-4), i.e. from 1 down to 1/16), and the classifier threshold is 0. This implementation gives an average precision of 0.932, as shown below along with the HoG template. The template coarsely resembles the shape of a human face.

Precision-recall curve for the multi-scale sliding window

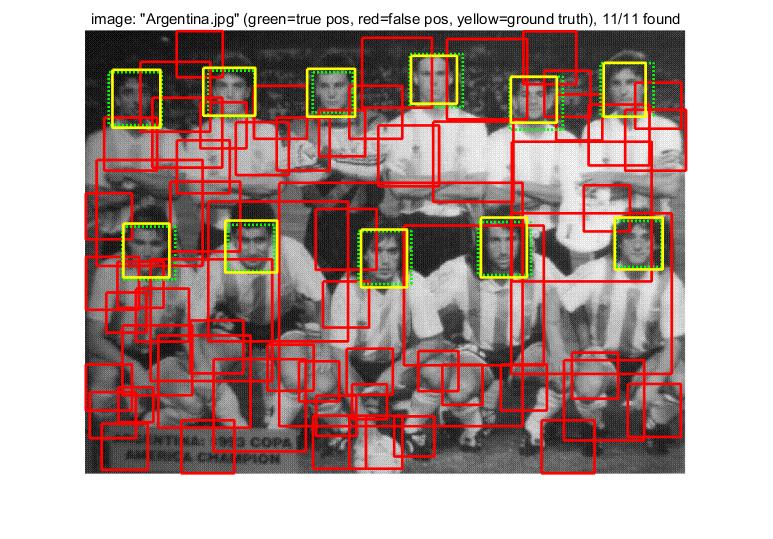
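The baseline's scale list and mirror augmentation take only a few lines of Python. The MATLAB expression 2.^(0:-0.2:-4) has 21 elements, running from 1 down to 1/16. `mirror` and `augment_with_mirrors` are hypothetical helpers that flip images, stored as lists of pixel rows, before HoG extraction.

```python
# Detection scales for 2.^(0:-0.2:-4): exponents 0, -0.2, ..., -4.
scales = [2 ** (-0.2 * k) for k in range(21)]


def mirror(image):
    """Left-right flip of an image stored as a list of pixel rows."""
    return [row[::-1] for row in image]


def augment_with_mirrors(positives):
    """Return the original positive images followed by their mirrored copies."""
    return positives + [mirror(img) for img in positives]


print(len(scales), scales[0], scales[-1])  # 21 1.0 0.0625
```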

Hard negative mining with the sliding window

In hard negative mining, I run the detector on the images in 'non_face_scn_path', keep all of the features whose confidence exceeds a threshold, and add them to the negative training features. Because these scenes contain no faces, every such detection is a false positive. This process is repeated more than 10 times. The benefit of hard negative mining is hard to observe when thousands of multi-scale negative samples are already available. Here I therefore demonstrate its effect by comparing against the case where the negative training set is fixed at 5,000 examples.
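The mining loop can be sketched generically. `train` and `detect` are hypothetical callbacks standing in for the SVM training and the sliding window detector. The 20-iteration default and the threshold of 0 are assumptions. Because the scenes contain no faces, every window scoring above the threshold is treated as a false positive.

```python
def hard_negative_mining(train, detect, pos_feats, neg_feats, scenes,
                         iterations=20, threshold=0.0):
    """Generic hard negative mining loop.

    train(pos, neg) -> model
    detect(model, scene) -> list of (feature, score), one per window
    Returns the final model and the grown negative feature list.
    """
    neg = list(neg_feats)
    model = train(pos_feats, neg)
    for _ in range(iterations):
        # Every confident window on a face-free scene is a hard negative.
        hard = [f for scene in scenes
                for f, score in detect(model, scene) if score > threshold]
        if not hard:
            break  # the model no longer fires on any non-face window
        neg.extend(hard)
        model = train(pos_feats, neg)
    return model, neg
```

Windows that stay above the threshold across iterations would be appended again, so a real implementation might deduplicate them.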

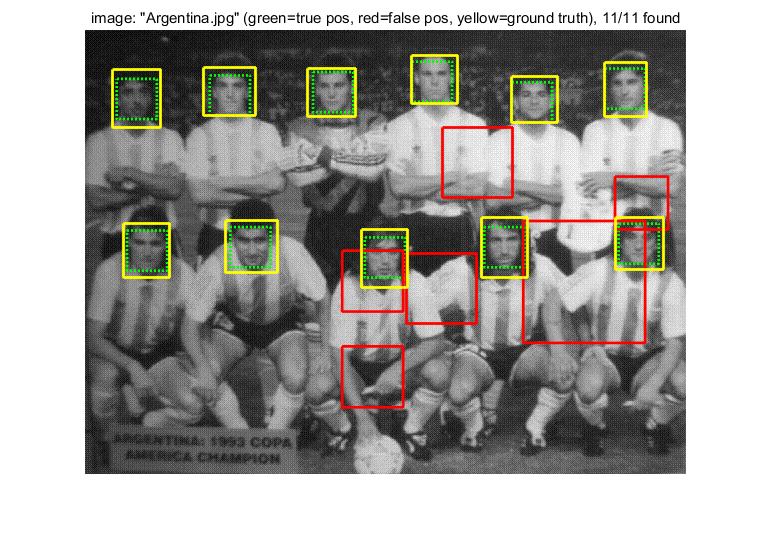

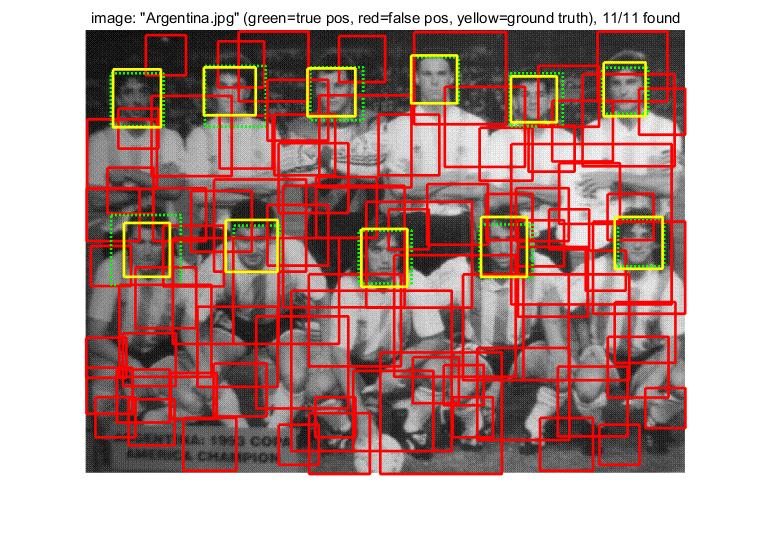

Hard negative mining with 20 iterations (left) compared with the baseline using 5,000 negative training examples (right):

Compared with the case where the negative training data is fixed at 5,000 examples, hard negative mining greatly reduces the number of false positive boxes proposed by the sliding window, while the average precision remains relatively high. This demonstrates the effectiveness of hard negative mining when the amount of negative training data is limited.

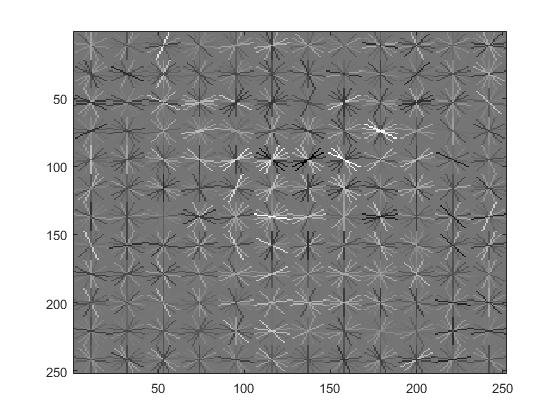

Self-implemented HoG descriptor

I implemented the HoG feature myself in MATLAB. It produces an 11x11x36 descriptor for a 36x36 patch. The MATLAB version is slower than vl_hog, whose core is implemented in compiled C. To keep the running time manageable, I tuned the parameters to trade some detection performance for speed, and lowered the number of negative training samples to 10,000.

The self-implemented HoG performs reasonably, although not as well as vl_hog. The gap may come from implementation details of the descriptor, such as gamma normalization.
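The project's MATLAB code is not shown, so the sketch below is a Python reconstruction of a Dalal-Triggs style descriptor under assumed settings. It uses 3-pixel cells, 2 x 2-cell blocks with a one-cell stride, and 9 unsigned orientation bins, which is one layout that yields 11x11x36 for a 36x36 patch. The sketch simplifies in two ways: it hard-votes each pixel into a single bin instead of interpolating, and it uses plain L2 block normalization instead of L2-Hys. The optional `sqrt_gamma` flag applies square-root gamma compression to the input, one form of the gamma normalization discussed above.

```python
import math


def hog_descriptor(img, cell=3, bins=9, block=2, sqrt_gamma=False, eps=1e-6):
    """Dalal-Triggs style HoG for a grayscale image given as a list of rows.

    Unsigned gradient orientations (0-180 degrees) are hard-voted into `bins`
    bins per cell, weighted by gradient magnitude. Every block of
    block x block cells (stride: one cell) is L2-normalized. With a 36 x 36
    image and the defaults this gives 11 x 11 blocks of 2 * 2 * 9 = 36 values.
    """
    if sqrt_gamma:
        # Square-root gamma compression of the input intensities.
        img = [[math.sqrt(max(v, 0.0)) for v in row] for row in img]
    h, w = len(img), len(img[0])
    ch, cw = h // cell, w // cell
    hist = [[[0.0] * bins for _ in range(cw)] for _ in range(ch)]
    for y in range(ch * cell):
        for x in range(cw * cell):
            # Centered [-1, 0, 1] differences, clamped at the image border.
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            mag = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            b = int(angle / (180.0 / bins)) % bins
            hist[y // cell][x // cell][b] += mag
    out = []
    for by in range(ch - block + 1):
        row = []
        for bx in range(cw - block + 1):
            v = [val for cy in range(by, by + block)
                 for cx in range(bx, bx + block)
                 for val in hist[cy][cx]]
            norm = math.sqrt(sum(val * val for val in v) + eps * eps)
            row.append([val / norm for val in v])
        out.append(row)
    return out
```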

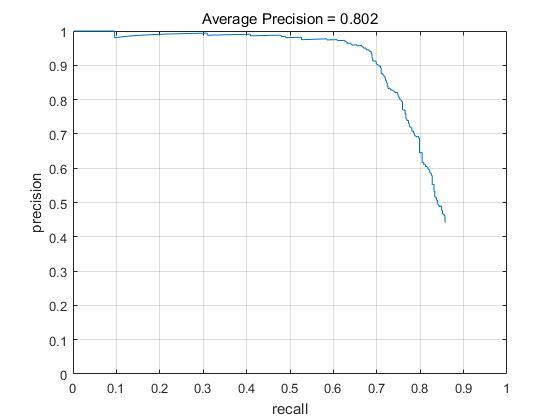

Alternative positive training data (UFI database)

I explored additional training data and tested its performance with the algorithm. The Unconstrained Facial Images (UFI) database is a novel real-world database containing images extracted from real photographs acquired by reporters of the Czech News Agency (CTK). It is mainly intended for benchmarking face identification methods. Here I use the cropped faces, which were automatically extracted from the photographs with the Viola-Jones algorithm. The face size is therefore almost uniform, and the images contain only a small portion of background. In the algorithm, each image is resized to 32x32 before its HoG descriptor is computed.
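The project does not say which interpolation was used to bring the UFI crops to 32x32. This sketch assumes bilinear interpolation with corner-aligned sampling, and `resize_bilinear` is a hypothetical helper. The resized image would then go through the same HoG extraction as the other training faces.

```python
def resize_bilinear(img, out_h, out_w):
    """Resize a grayscale image (list of rows) with bilinear interpolation.

    Corner-aligned sampling: output corners map exactly onto input corners.
    """
    in_h, in_w = len(img), len(img[0])
    out = []
    for i in range(out_h):
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        fy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            fx = x - x0
            # Interpolate along x on the two neighbouring rows, then along y.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


face = [[float(x + y) for x in range(64)] for y in range(48)]  # toy "crop"
small = resize_bilinear(face, 32, 32)
print(len(small), len(small[0]))
```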

The UFI crops contain almost no background: each image includes only the central part of the face. As a result, the learned classifier parameters differ from those learned previously, and the boxes proposed by the detector are relatively smaller. With this training data, the algorithm scores 80.2% on the given test set.

SIFT descriptors with the sliding window

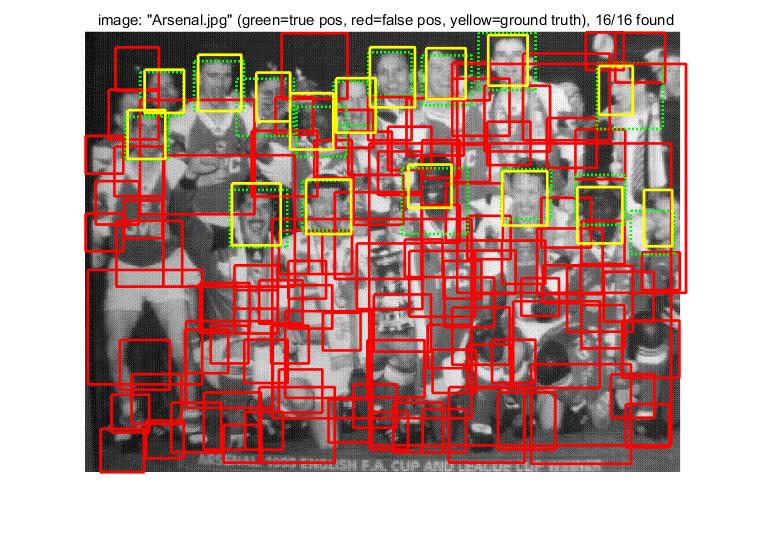

In this part, each candidate window is described with a SIFT descriptor. The spatial arrangement of SIFT space does not match that of the image the way the HoG cell grid does. I therefore crop each window from the image first and then extract a single SIFT descriptor with vl_dsift. The step parameter is set very large so that only one descriptor is computed, at the center of the crop, which yields a 128-dimensional feature. Other parameters, such as the scales and the number of training samples, are the same as in the previous experiments.
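The idea of one descriptor per crop can be sketched without VLFeat. The function below is a simplified, hypothetical SIFT-style descriptor, not vl_dsift. It splits a square crop into a 4 x 4 grid and builds an 8-bin gradient orientation histogram per cell, giving 4 x 4 x 8 = 128 values. It then normalizes, clips at 0.2, and renormalizes as in Lowe's SIFT, but it omits Gaussian weighting, interpolation between bins, and orientation assignment.

```python
import math


def sift_like_descriptor(patch, grid=4, bins=8):
    """One simplified SIFT-style descriptor for a whole square patch."""
    n = len(patch)
    hist = [0.0] * (grid * grid * bins)
    for y in range(n):
        for x in range(n):
            # Centered differences, clamped at the border.
            gx = patch[y][min(x + 1, n - 1)] - patch[y][max(x - 1, 0)]
            gy = patch[min(y + 1, n - 1)][x] - patch[max(y - 1, 0)][x]
            mag = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 360.0  # signed orientation
            b = int(angle / (360.0 / bins)) % bins
            cy, cx = y * grid // n, x * grid // n  # spatial cell of this pixel
            hist[(cy * grid + cx) * bins + b] += mag

    def normalize(v):
        norm = math.sqrt(sum(a * a for a in v)) or 1.0  # avoid dividing by 0
        return [a / norm for a in v]

    desc = normalize(hist)
    # Clip large values to reduce the influence of strong edges, then renormalize.
    return normalize([min(a, 0.2) for a in desc])


crop = [[float((3 * x + 5 * y) % 17) for x in range(36)] for y in range(36)]
print(len(sift_like_descriptor(crop)))
```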

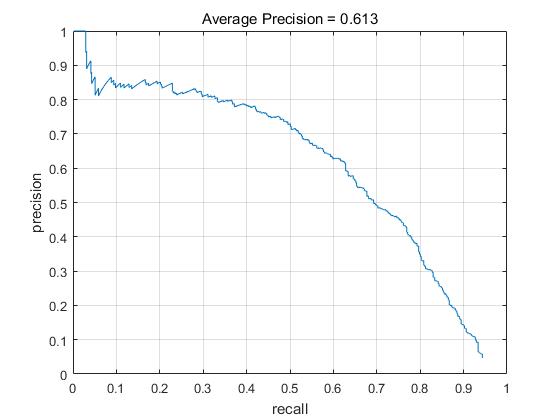

With the SIFT descriptor, the algorithm performs worse than with HoG: it obtains 61.3% on the test set and produces many incorrect bounding boxes.