Project 5 / Face Detection with a Sliding Window

In this project, I implemented the Dalal-Triggs detector (HOG features with a linear SVM) using a sliding window. Below are the details of the implementation.

Get positive features and negative features

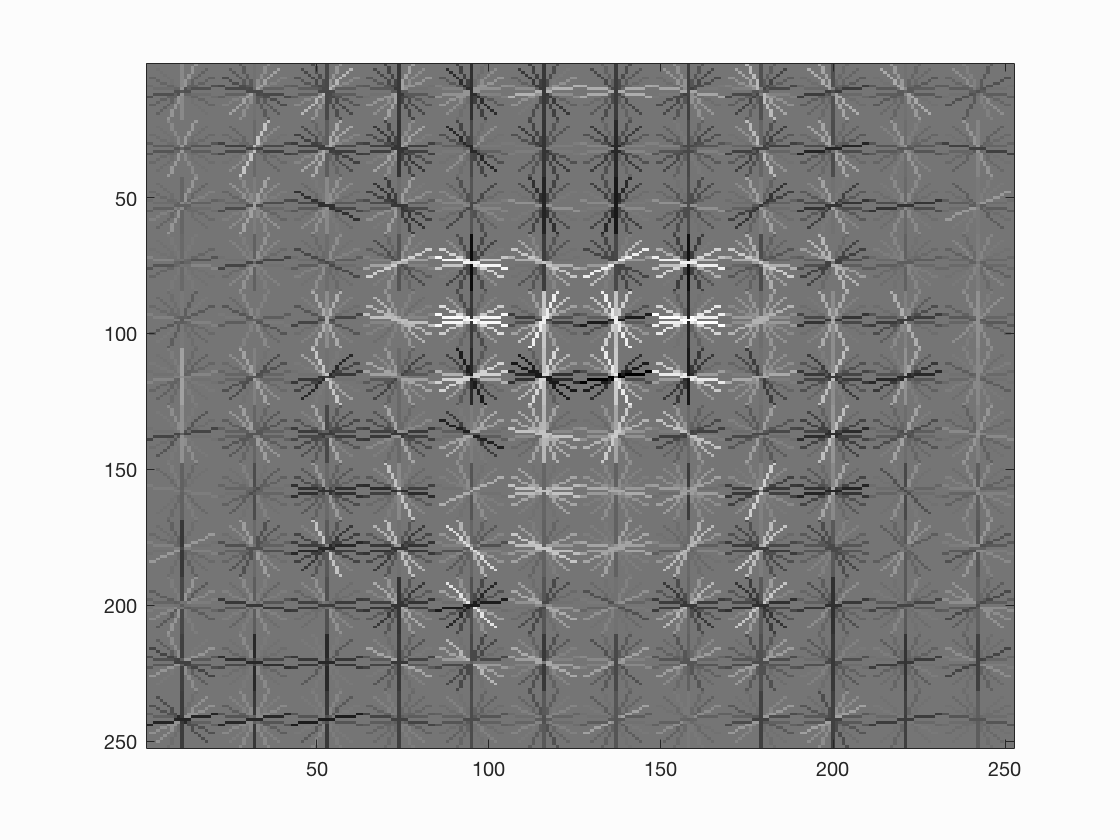

In get_positive_features.m and get_negative_features.m, I collected HOG features for both the positive examples (images with faces) and the negative examples (pictures without faces). I converted each picture to single precision and called vl_hog to compute its HOG features.
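The following is a minimal sketch of how one positive example becomes a feature row: the template's HOG array is flattened into a single vector. The 36-pixel template size is an assumption, because the write-up only fixes the cell size at 6. Random values stand in for vl_hog's output so the snippet runs without VLFeat.

```matlab
% Sketch: flatten one template's HOG array into a single feature row.
% Assumption (hypothetical): a 36x36 template; the cell size of 6 and the
% 31 values per HOG cell match the run_detector excerpt.
template_size = 36;                      % assumed template size in pixels
hog_cell_size = 6;                       % cell size used in this submission
cells = template_size / hog_cell_size;   % 6 cells per template side

% Stand-in for hog = vl_hog(single(img), hog_cell_size); random values keep
% the sketch runnable without VLFeat.
hog = single(rand(cells, cells, 31));

% One row per example: 6 * 6 * 31 = 1116 features. Stacking these rows for
% all positive examples gives the positive feature matrix.
feature_row = reshape(hog, 1, []);
fprintf('feature length: %d\n', numel(feature_row));
```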

One thing to note about get_negative_features.m: because run_detector classifies windows at multiple scales, I tried sampling negative features at multiple scales as well. However, the average accuracy dropped by about 3% with the other parameters (such as cell size and threshold) unchanged. So I decided to randomly sample negative features at a single, fixed scale.
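As a rough illustration of fixed-scale negative mining, the sketch below draws random template-sized crops from one face-free image at its original size. The image is synthetic. The template size and the number of crops per image are hypothetical. In the project, each crop would then go through vl_hog and be flattened like a positive example.

```matlab
% Sketch: sample random template-sized crops from one face-free image at a
% single (original) scale. All sizes here are hypothetical.
template_size = 36;                 % assumed template size
num_samples = 5;                    % hypothetical number of crops per image
img = single(rand(120, 160));       % stand-in grayscale scene without faces

[h, w] = size(img);
% Top-left corners are chosen so that every crop fits inside the image.
rows = randi(h - template_size + 1, num_samples, 1);
cols = randi(w - template_size + 1, num_samples, 1);

crops = zeros(template_size, template_size, num_samples, 'single');
for i = 1:num_samples
    crops(:, :, i) = img(rows(i):rows(i)+template_size-1, ...
                         cols(i):cols(i)+template_size-1);
end
```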

Train classifier

This step is relatively straightforward: I created labels for the positive and negative examples and trained the linear classifier. I tried several small values of the regularization parameter lambda, but they did not noticeably affect the accuracy, so I kept lambda at 0.0001.
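The training step presumably calls VLFeat's `[w, b] = vl_svmtrain(X', Y, lambda)`. To keep the sketch below self-contained, it swaps in plain full-batch subgradient descent on the same kind of objective: an L2-regularized hinge loss with lambda = 0.0001. It runs on a toy 2-D data set instead of HOG features. The step size and iteration count are assumptions.

```matlab
% Sketch of the training step (swapped-in solver, not vl_svmtrain):
% minimize lambda/2*||w||^2 + mean(max(0, 1 - y .* (X*w + b)))
% by full-batch subgradient descent on toy 2-D features.
features_pos = [2 2; 3 2; 2 3];          % toy "face" features
features_neg = -features_pos;            % toy "non-face" features
X = [features_pos; features_neg];
% Labels: +1 for positive examples, -1 for negative examples.
Y = [ones(size(features_pos, 1), 1); -ones(size(features_neg, 1), 1)];

lambda = 0.0001;                         % value kept in the write-up
lr = 0.1;                                % assumed step size
w = zeros(size(X, 2), 1);
b = 0;
N = size(X, 1);
for iter = 1:200
    margins = Y .* (X * w + b);
    viol = margins < 1;                  % examples inside the margin
    grad_w = lambda * w - (X(viol, :)' * Y(viol)) / N;
    grad_b = -sum(Y(viol)) / N;
    w = w - lr * grad_w;
    b = b - lr * grad_b;
end

% Fraction of training examples on the correct side of the hyperplane.
train_acc = mean(sign(X * w + b) == Y);
```

A small lambda barely shrinks w once every example clears the margin, which is consistent with the observation that small lambda values had little effect.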

Run detector

The tricky part of this function is mapping the windows in the rescaled images that are likely to be faces back to their corresponding positions in the original test image.

Example code from run_detector

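% Excerpt from run_detector: scan one test image at multiple scales.
% Assumes img is already a single-precision grayscale image.
range = feature_params.template_size / feature_params.hog_cell_size; % template width in HOG cells
% Use only the first two dimensions so a color channel cannot become the minimum.
scale_min = feature_params.template_size / min(size(img, 1), size(img, 2));
for d = scale_min : 0.05 : 1
    scale_img = imresize(img, d);
    hog_space = vl_hog(scale_img, feature_params.hog_cell_size);
    % Read the grid size from vl_hog's output so the loop bounds always match hog_space.
    height = size(hog_space, 1);
    width = size(hog_space, 2);
    % One row of window_hog per window position, in row-major order.
    window_hog = zeros((height-range+1)*(width-range+1), range^2*31);
    for k = 1 : height-range+1
        for j = 1 : width-range+1
            patch = hog_space(k:k+range-1, j:j+range-1, :);
            window_hog((k-1)*(width-range+1)+j, :) = reshape(patch, 1, range^2*31);
        end
    end
    % Linear SVM score for every window; keep the windows above the threshold.
    scores = window_hog * w + b;
    indices = find(scores > 0.5);
    scale_confidences = scores(indices);
    % Transform each window index back to its window position:
    % row_num is 0-based, col_num is 1-based.
    row_num = floor(indices / (width - range + 1));
    col_num = mod(indices, width - range + 1);
    last_col = (col_num == 0);
    row_num(last_col) = row_num(last_col) - 1;
    col_num(last_col) = width - range + 1;
    % ... (conversion to bounding boxes omitted)
end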
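The snippet stops once each window index has been decoded into a row and a column. Below is a minimal sketch of the remaining step: converting those cell positions into pixel boxes in the original image by undoing the scale factor d. It assumes the `[x_min, y_min, x_max, y_max]` box convention. The grid width, scale, and indices are hypothetical values chosen so the result is easy to check by hand.

```matlab
% Sketch: decode window indices and map them to boxes in the original image.
% Hypothetical values: 6-pixel cells, 6-cell (36-pixel) templates, 10 window
% positions per row of the HOG grid, and a current scale of d = 0.5.
hog_cell_size = 6;
range = 6;                         % template size / cell size, in cells
windows_per_row = 10;              % width - range + 1 in the snippet
d = 0.5;                           % current scale factor
indices = [1; 10; 11; 23];         % example rows of window_hog

% Same decoding as the snippet: row_num is 0-based, col_num is 1-based.
row_num = floor(indices / windows_per_row);
col_num = mod(indices, windows_per_row);
last_col = (col_num == 0);
row_num(last_col) = row_num(last_col) - 1;
col_num(last_col) = windows_per_row;

% Cell offsets -> pixels in the scaled image -> pixels in the original.
x_min = (col_num - 1) * hog_cell_size / d + 1;
y_min = row_num * hog_cell_size / d + 1;
x_max = (col_num - 1 + range) * hog_cell_size / d;
y_max = (row_num + range) * hog_cell_size / d;
bboxes = [x_min, y_min, x_max, y_max];
```

At d = 0.5, each box is 72 pixels wide in the original image, twice the 36-pixel template, because the image was shrunk by half before scanning.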

I would like to highlight several decisions and parameter values in this part:

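- The smallest scale shrinks the test image until its shorter side matches the template size (scale_min = template_size / shorter side). The scale then increases in steps of 0.05 up to 1, the original size.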

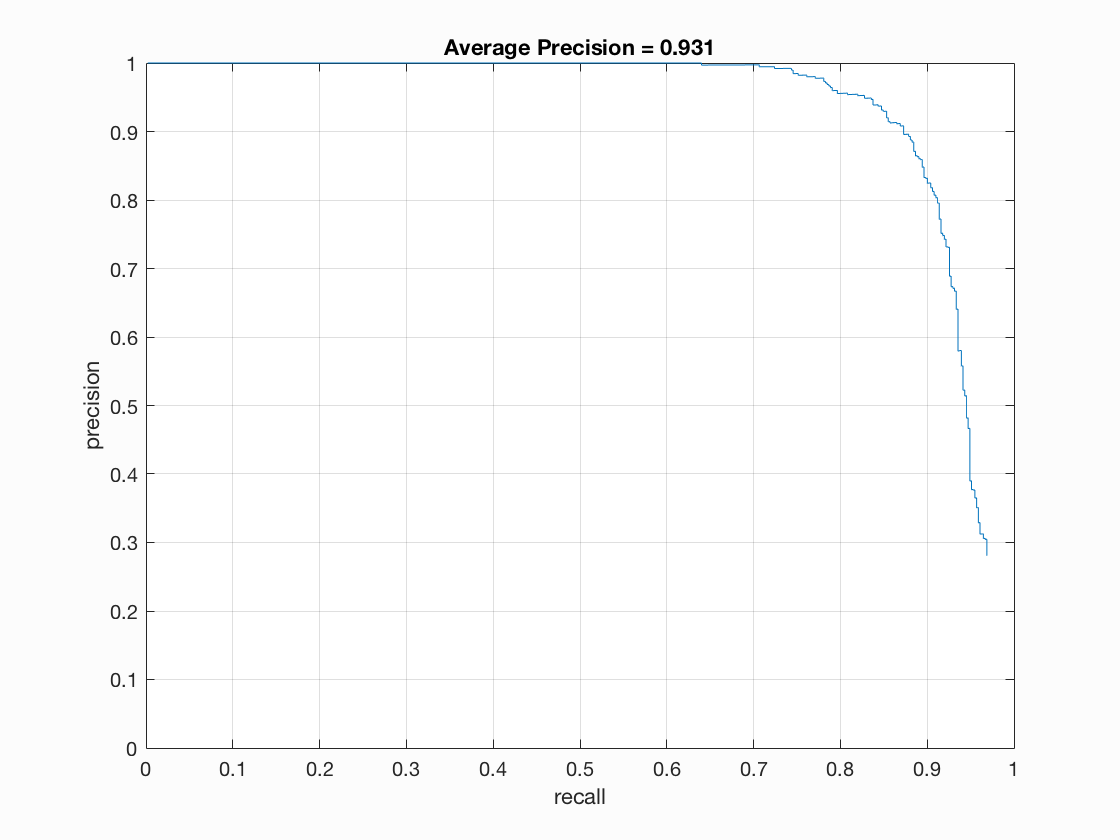

- For the threshold, I tested several values. A higher threshold lowers the false positive rate, but it also lowers the average accuracy. The average accuracy is 87.9% when the threshold is 0.75 or above; lowering the threshold to 0.5 improves it slightly, to 88.1%. For thresholds between 0.3 and 0.5 the average accuracy stays the same, and below 0.3 it starts decreasing again.

- A smaller cell size gives finer-grained features and should therefore give higher accuracy. With a cell size of 6, the accuracy is around 88%; with a cell size of 4, it rises to 92.4%; and with a cell size of 3, it reaches 93.1%. A smaller cell size also produces fewer false detections. However, the computation grows quickly as the cell size shrinks. Halving the cell size quadruples the number of HOG cells per image, and each window's feature vector grows by the same factor. In real applications, a tradeoff between accuracy and speed has to be made. For this submission, I kept the cell size at 6.
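To make the scale-pyramid and cell-size points above concrete, the sketch below estimates the scanning cost for the three cell sizes tried. It counts the multiply-adds needed to score every window at every scale. The 480x640 image size and 36-pixel template are hypothetical. The HOG grid size is only approximated from the resized image, so treat the numbers as relative, not exact.

```matlab
% Sketch: rough sliding-window cost for each cell size tried.
% Assumptions (hypothetical): a 480x640 grayscale image, a 36-pixel
% template, the pyramid scale_min : 0.05 : 1, and HOG grids approximated
% as floor(resized size / cell size).
img_size = [480 640];
template_size = 36;
scale_min = template_size / min(img_size);   % shorter side -> template size
scales = scale_min : 0.05 : 1;

cell_sizes = [6 4 3];
mults = zeros(size(cell_sizes));    % multiply-adds for all window scores
for i = 1:numel(cell_sizes)
    c = cell_sizes(i);
    range = template_size / c;      % template width in cells
    dim = range^2 * 31;             % feature length per window
    for d = scales
        hog_h = floor(ceil(img_size(1) * d) / c);
        hog_w = floor(ceil(img_size(2) * d) / c);
        n_win = max(hog_h - range + 1, 0) * max(hog_w - range + 1, 0);
        mults(i) = mults(i) + n_win * dim;
    end
end
ratio_to_cell6 = mults / mults(1);  % cost relative to cell size 6
```

Both the number of windows and the feature length per window grow as the cell size shrinks, so their product grows much faster than either one alone.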

Results in a table

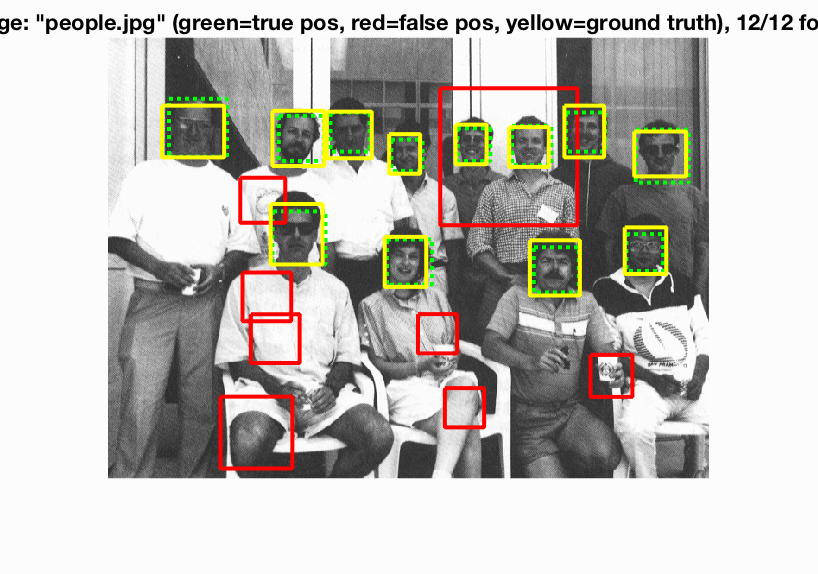

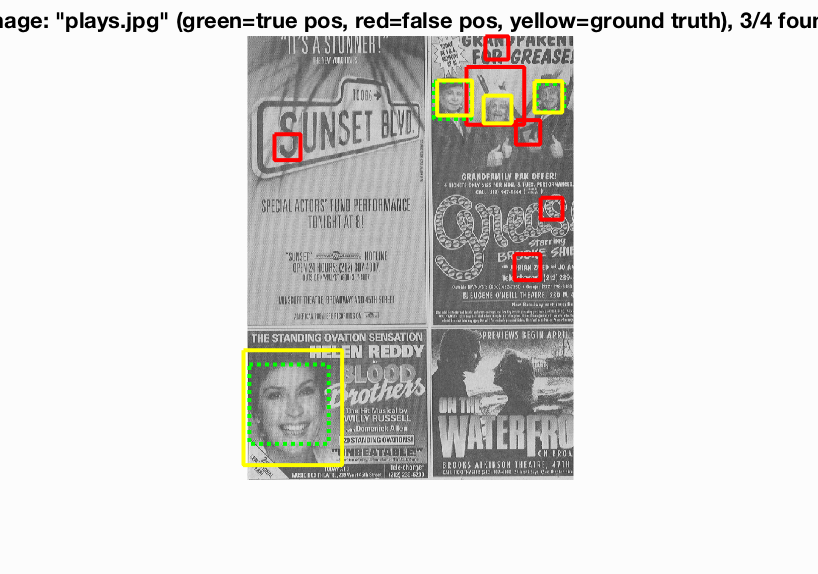

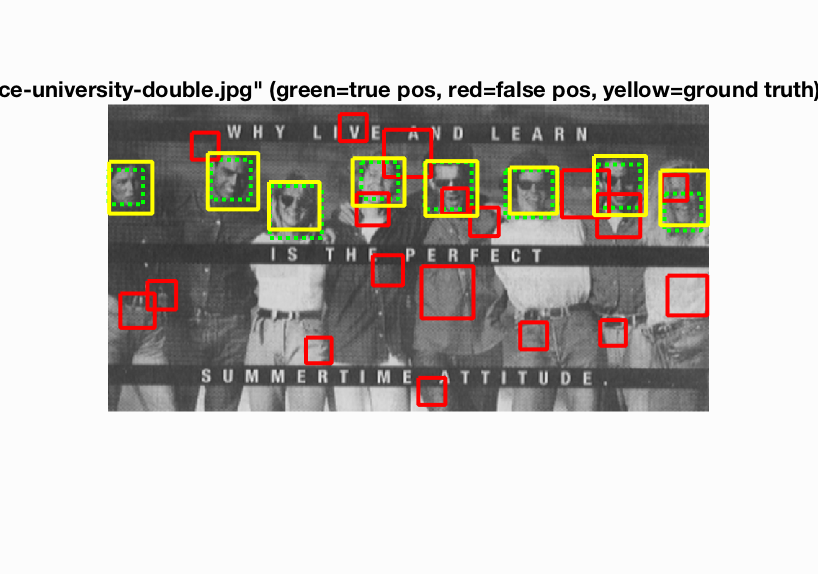

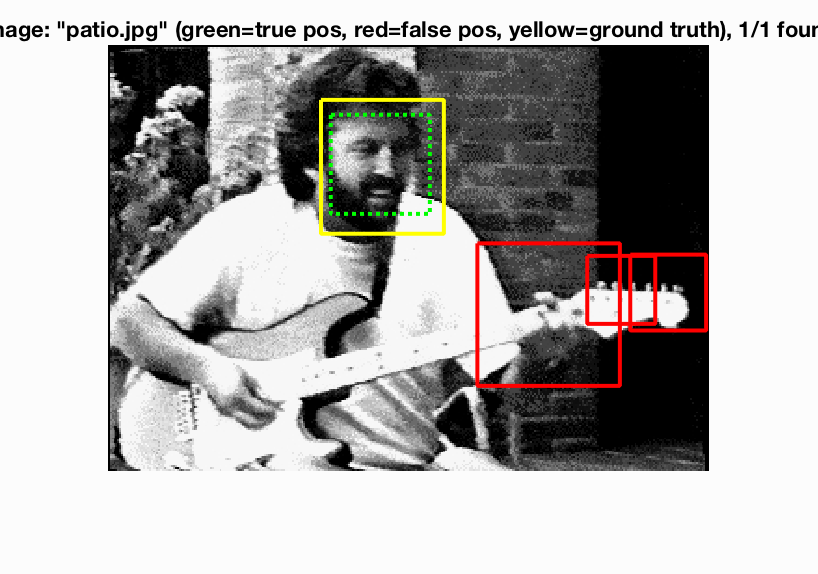

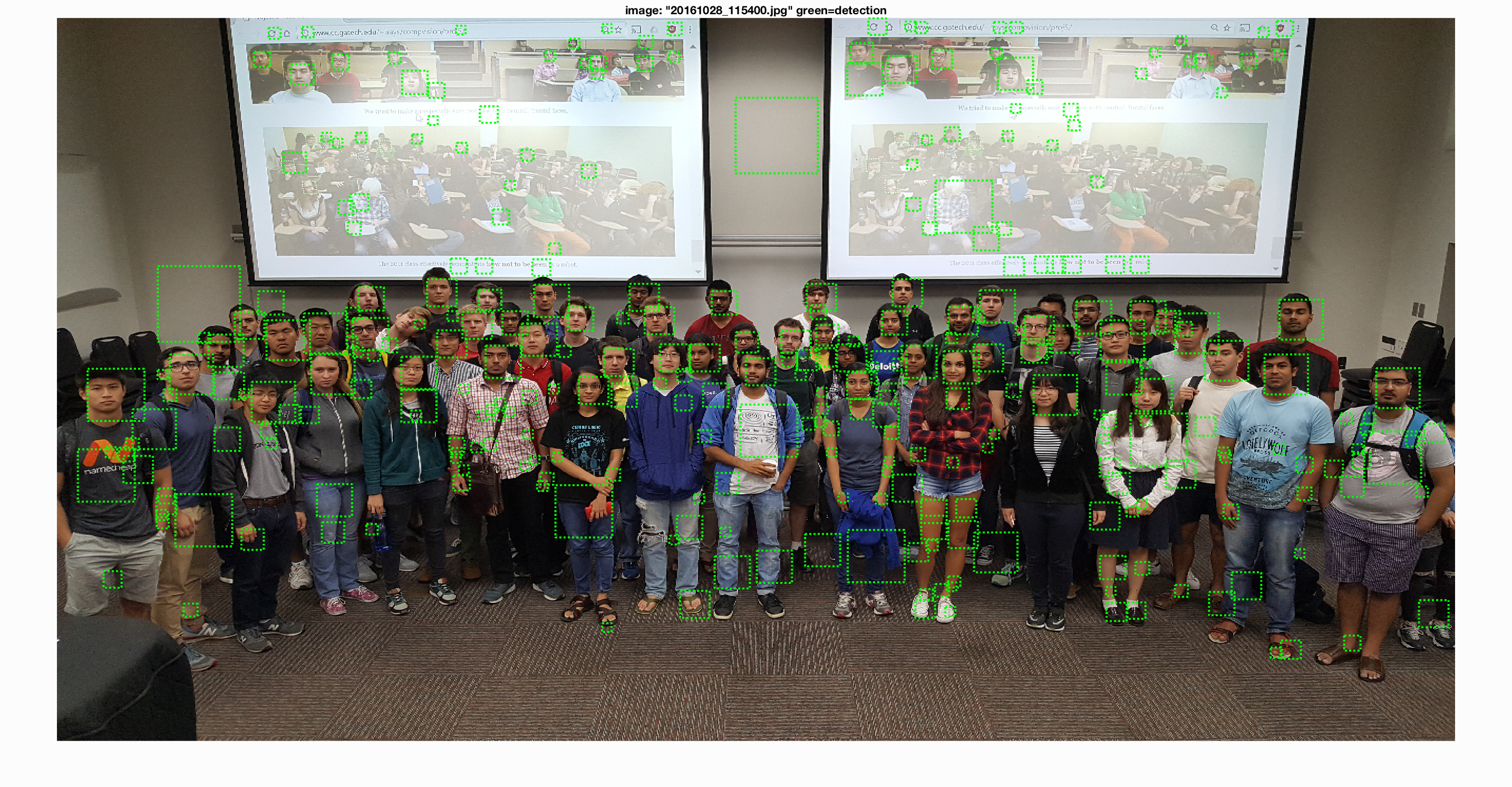

Example of detection on the test set.


The two visualizations above show detections on the picture taken in class: the left one uses a threshold of 0.5 and the right one a threshold of 0.75. The comparison shows that the higher threshold produces fewer false-positive boxes, while most faces are still correctly detected.
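As a toy illustration of this trade-off, the sketch below counts true and false positives that survive each threshold. The confidences and match flags are made-up values, not results from this project. `is_face` is a hypothetical flag marking detections that overlap a ground-truth face.

```matlab
% Sketch: raising the confidence threshold removes false positives but can
% also drop true detections. All values below are made-up toy data.
confidences = [0.95 0.90 0.80 0.70 0.60 0.55 0.40];
is_face     = [true true true true false false false];

thresholds = [0.5 0.75];
tp = zeros(size(thresholds));
fp = zeros(size(thresholds));
for i = 1:numel(thresholds)
    keep = confidences > thresholds(i);   % detections shown in the output
    tp(i) = sum(keep & is_face);
    fp(i) = sum(keep & ~is_face);
end
```

In this toy case, moving from 0.5 to 0.75 removes both false positives but also one true face, mirroring the qualitative difference between the two visualizations.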