Project 5 / Face Detection with a Sliding Window

In this project, we implement a sliding-window face detector. We use VLFeat to compute HOG descriptors of face and non-face training images and train a linear classifier on them. The classifier then scores sliding windows over each test image at multiple scales. The implementation follows these steps:

- Get positive features: compute HOG descriptors of images with faces.

- Get random negative features: compute HOG descriptors of images without faces.

- Train a linear classifier.

- Use the classifier to classify the test data set.

Algorithm

- Get positive features: Use the cropped face images and compute their HOG descriptors.

- Get random negative features: Compute the HOG descriptors of small patches of non-face images. The images differ in size, so each image may provide a different number of negative features. I counted the negative features as they were collected and stopped once the count reached the required number.

- Train a linear classifier: Use both positive and negative features to train a linear SVM. I set the training labels of positive features to 1 and those of negative features to -1.

- Use the classifier to classify the test data set: Use a sliding window to crop a patch from the HOG descriptors of each test image, and compare the classifier score against a threshold to decide whether the patch is a face.
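The quota logic in the negative-feature step can be sketched as follows. This is a minimal, self-contained illustration rather than the project code: the synthetic `images` and the `step` and `num_required` values are assumptions, and raw pixels stand in for the `vl_hog` descriptor so that the snippet runs without VLFeat.

```matlab
% Sketch: collect negative patches until a quota is reached.
% Synthetic grayscale "images" of different sizes stand in for the non-face
% set, and raw pixels stand in for the HOG descriptor (placeholder feature).
rng(0);                                   % reproducible synthetic data
images = {rand(60, 80), rand(48, 48), rand(100, 72)};
template_size = 36;                       % window side length in pixels
step = 12;                                % stride between windows (assumed)
num_required = 20;                        % quota of negative features (assumed)

features_neg = zeros(num_required, template_size ^ 2);
count = 0;
for k = 1 : numel(images)
    img = images{k};
    [row, col] = size(img);
    for r = 1 : step : row - template_size + 1
        for c = 1 : step : col - template_size + 1
            if count >= num_required
                break;                    % quota reached: skip the rest of this row
            end
            patch = img(r : r + template_size - 1, c : c + template_size - 1);
            count = count + 1;
            features_neg(count, :) = reshape(patch, 1, []);
        end
    end
    if count >= num_required
        break;                            % quota reached: skip remaining images
    end
end
features_neg = features_neg(1 : count, :);    % trim if the images ran out first
```

With these sizes the first image yields 12 patches and the second only 4, which is why the count has to be tracked across images instead of taking a fixed number per image.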

Code

The core part of my code is shown below:

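    % positive features: flatten the HOG descriptor of one cropped face into a row
    % (template_size here is the length of the flattened descriptor)
    hog = reshape(vl_hog(img, feature_params.hog_cell_size), [1, template_size]);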

    % negative features
    for r = 1 : step : row - feature_params.template_size + 1
        for c = 1 : step : col - feature_params.template_size + 1
            hog = reshape(vl_hog(img(r : r + feature_params.template_size - 1, ...
                                     c : c + feature_params.template_size - 1), ...
                                 feature_params.hog_cell_size), [1, template_size]);
        end
    end

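    % train classifier
    % vl_svmtrain expects one example per column, so the feature matrices are transposed
    X = horzcat(features_pos', features_neg');
    % labels: +1 for the positives (first), -1 for the negatives (after them)
    Y = ones(size(features_pos, 1) + size(features_neg, 1), 1);
    Y(size(features_pos, 1) + 1 : end) = -1;
    lambda = 0.0001;
    [w, b] = vl_svmtrain(X, Y, lambda);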

    % run detector: score one num_cells x num_cells window of the HOG map
    feature = hog(y : y + num_cells - 1, x : x + num_cells - 1, :);
    score = reshape(feature, 1, []) * w + b;
    if score > threshold
        % map the window from HOG cells back to pixels of the original image
        cur_bboxes = [cur_bboxes; ...
            round(((x - 1) * feature_params.hog_cell_size + 1) / (scale ^ times)), ...
            round(((y - 1) * feature_params.hog_cell_size + 1) / (scale ^ times)), ...
            round((x + num_cells - 1) * feature_params.hog_cell_size / (scale ^ times)), ...
            round((y + num_cells - 1) * feature_params.hog_cell_size / (scale ^ times))];
        cur_confidences = [cur_confidences; score];
        cur_image_ids = [cur_image_ids; {test_scenes(i).name}];
    end
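As a worked example of the bounding-box arithmetic in the detector snippet above, the sketch below plugs in concrete numbers. The window position (`x = 3`, `y = 1`) and `times = 2` are made-up values for illustration, and the sketch assumes, as the division by `scale ^ times` implies, that the image at this pass has been resized by `scale ^ times`.

```matlab
% Worked example: map a window of HOG cells back to original-image pixels.
hog_cell_size = 6;
num_cells = 6;          % 36-pixel template / 6-pixel cells
scale = 0.9;
times = 2;              % image resized by scale^times = 0.81 (assumed pass)
x = 3; y = 1;           % top-left cell of the window, 1-based (made-up values)

factor = scale ^ times;
x_min = round(((x - 1) * hog_cell_size + 1) / factor);      % 13 / 0.81
y_min = round(((y - 1) * hog_cell_size + 1) / factor);      %  1 / 0.81
x_max = round((x + num_cells - 1) * hog_cell_size / factor); % 48 / 0.81
y_max = round((y + num_cells - 1) * hog_cell_size / factor); % 36 / 0.81
bbox = [x_min, y_min, x_max, y_max];
disp(bbox);             % 16 1 59 44
```

In the resized image the window covers pixels 13 to 48 horizontally and 1 to 36 vertically; dividing by 0.81 stretches it to a box of about 44 pixels per side in the original image.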

    % hard negative mining for extra credit
    for iter = 0 : 10
        num = size(features_neg, 1);
        for i = 1 : num
            score = features_neg(i, :) * w + b;
            % negatives scoring above -1 lie inside the margin or are misclassified
            if (score > -1)
                features_neg = [features_neg; features_neg(i, :)];
            end
        end
        % retrain on the enlarged negative set
        X = horzcat(features_pos', features_neg');
        Y = ones(size(X, 2), 1);
        Y(size(features_pos, 1) + 1 : end) = -1;
        [w, b] = vl_svmtrain(X, Y, lambda);
    end

Results in a table

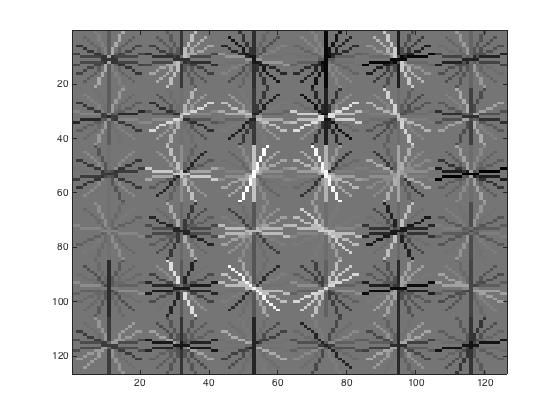

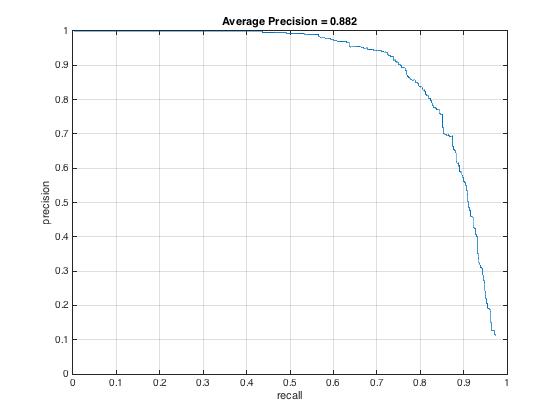

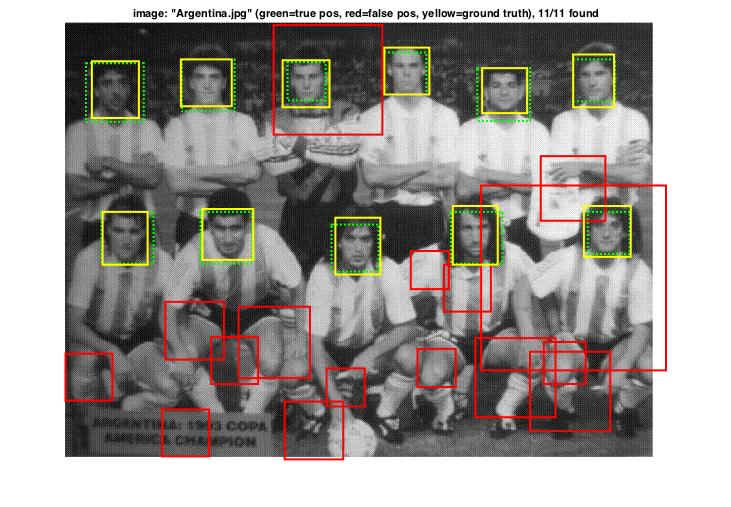

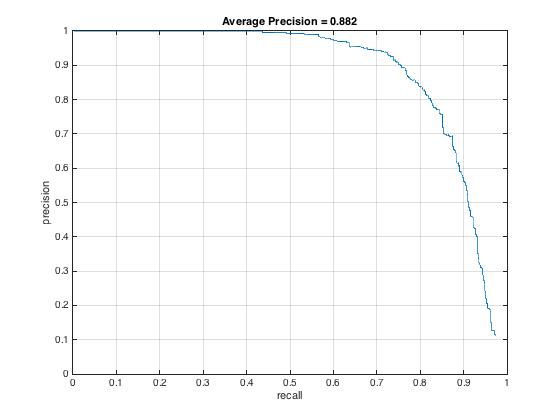

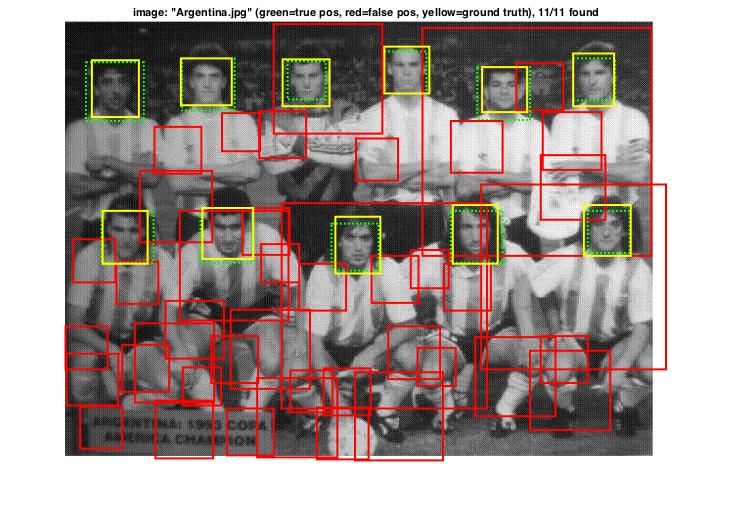

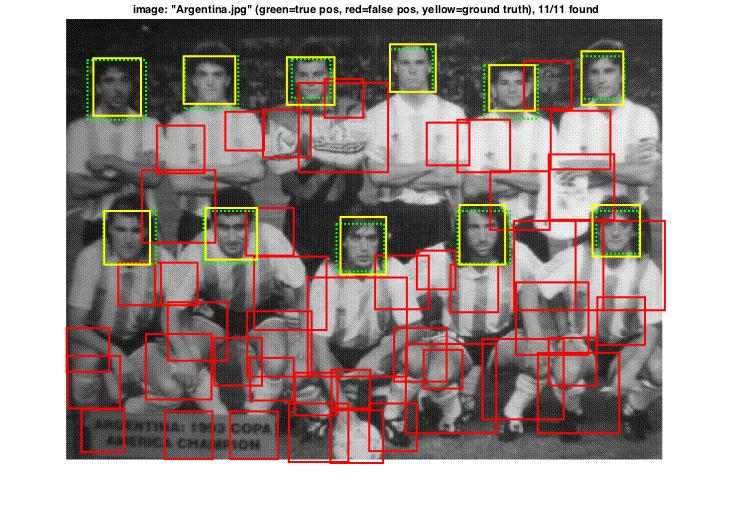

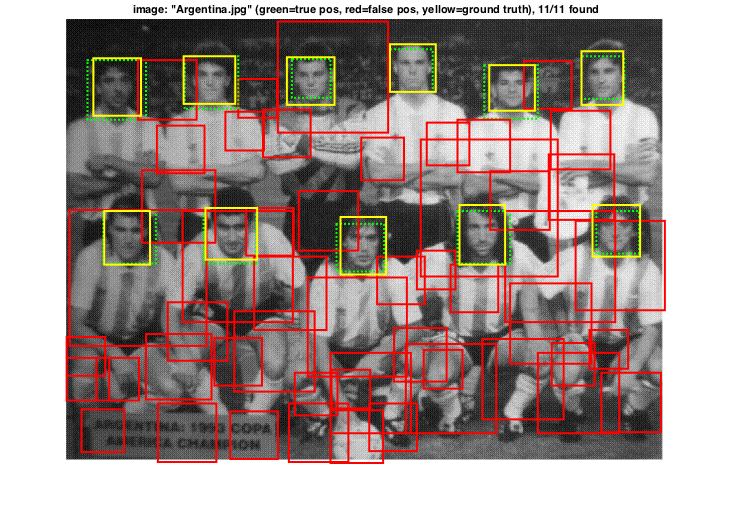

The first group of figures shows the results for the following parameter settings:

template_size = 36, hog_cell_size = 6, num_negative_examples = 10000, scale_size = 0.9, scale times = 30, threshold = -0.1

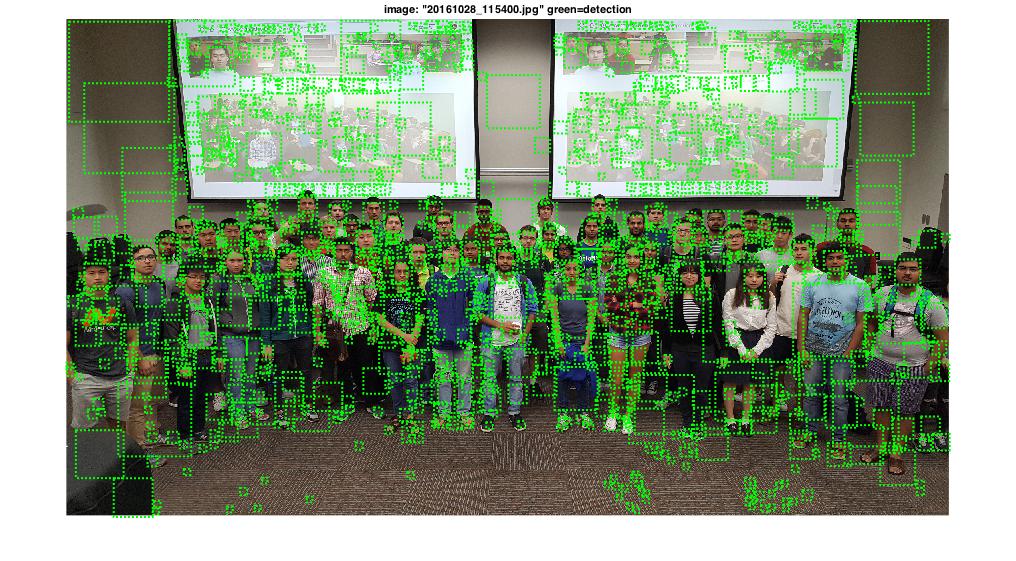

The results were good: I got an accuracy of 0.882. Most faces in the images were detected; however, the false positive rate was also high.

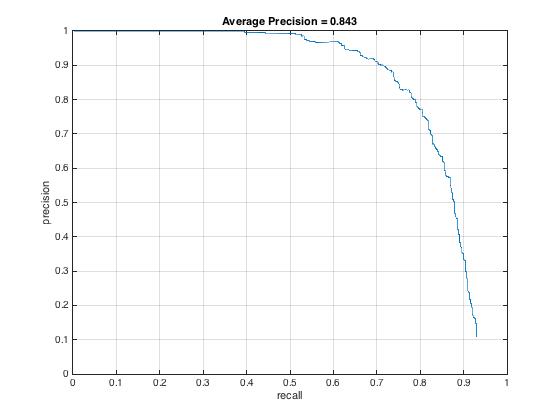

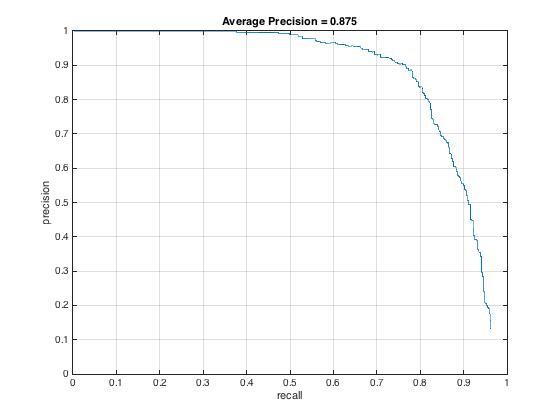

Besides the settings above, I also tried setting the number of scale iterations in the detector to 10 and 20. The results are shown below. As we can see, the accuracy increased slightly, from 0.843 to 0.875.
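One plausible reason the number of scale iterations matters is that each extra pass lets the fixed 36-pixel template cover a larger face in the original image. The sketch below works this out for 10, 20, and 30 passes. It assumes that the k-th pass resizes the image by `scale_size ^ (k - 1)`, so that the first pass runs at full resolution; the report does not state the exact exponent convention.

```matlab
% Largest face (in original-image pixels) that a 36-pixel template can cover
% after a given number of scale passes.
% Assumption: pass k resizes the image by scale_size^(k - 1).
template_size = 36;
scale_size = 0.9;
scale_times = [10, 20, 30];

smallest = scale_size .^ (scale_times - 1);    % smallest resize factor per setting
largest_face = template_size ./ smallest;      % template size mapped back to the original

% One row per setting: number of passes, smallest factor, largest face in pixels
disp([scale_times; smallest; largest_face]');
```

Under this assumption the largest detectable face grows from roughly 93 pixels (10 passes) to roughly 266 pixels (20 passes) and roughly 764 pixels (30 passes), so faces larger than the earlier limits can only be found with more passes.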

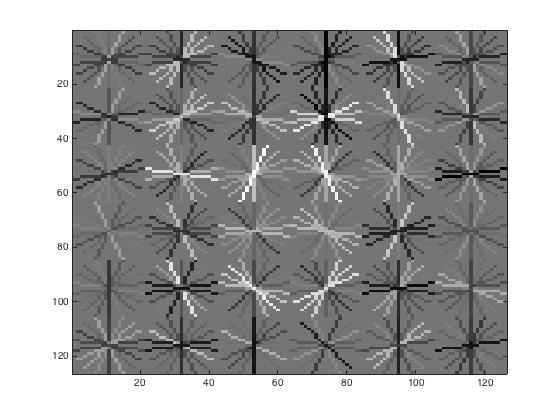

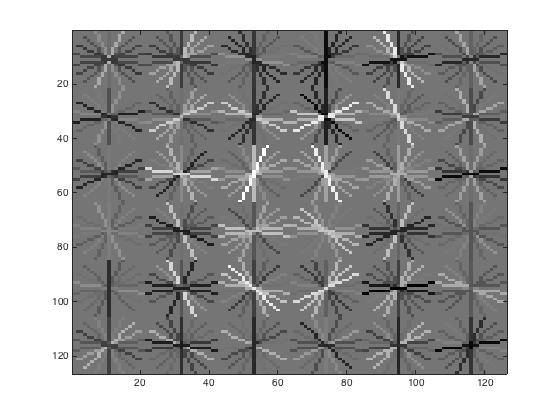

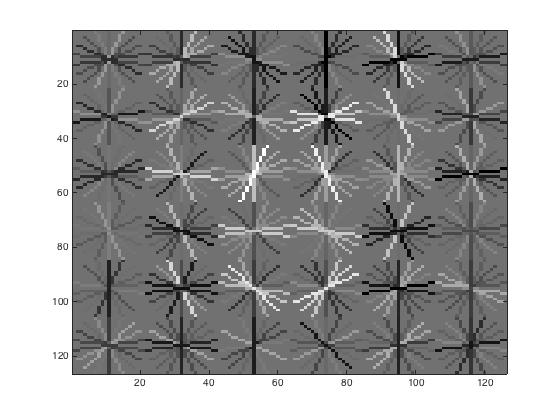

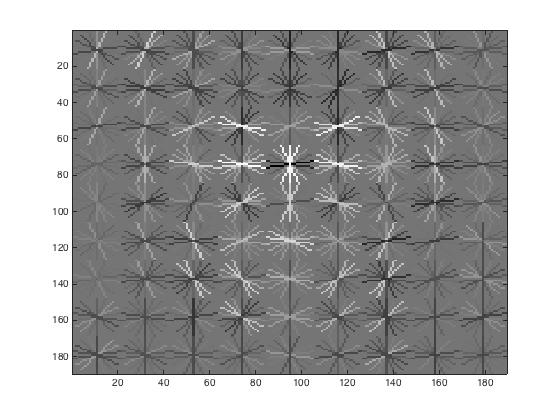

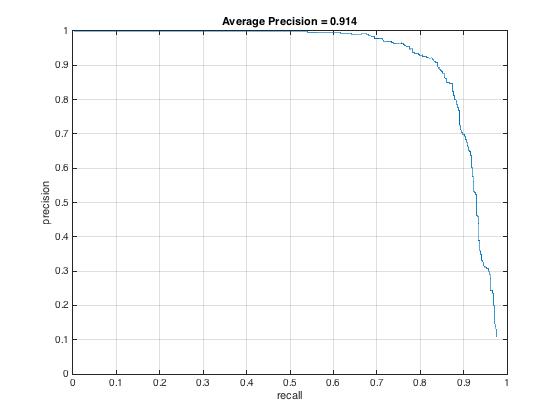

I also tried hog_cell_size = 4. The results are shown below:

We can see that the HOG template becomes more precise and vivid. The accuracy reached 0.914, which was pretty good, but the false positives also seem to have increased a little.
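The finer template is consistent with the descriptor arithmetic: a smaller cell size puts more cells inside the same 36-pixel window. The sketch below compares the two settings, assuming VLFeat's default HOG variant (UoCTTI), which produces 31 values per cell.

```matlab
% Descriptor size of a 36x36 template for the two cell sizes tried.
template_size = 36;
dims_per_cell = 31;                             % VLFeat default (UoCTTI) HOG variant
hog_cell_size = [6, 4];

num_cells = template_size ./ hog_cell_size;     % cells per template side
feature_dim = num_cells .^ 2 * dims_per_cell;   % length of the flattened descriptor

disp([hog_cell_size; num_cells; feature_dim]'); % rows: 6 6 1116 and 4 9 2511
```

Going from 6x6 to 9x9 cells more than doubles the descriptor length (1116 to 2511), which gives the classifier a finer spatial layout to work with at the price of more computation per window.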

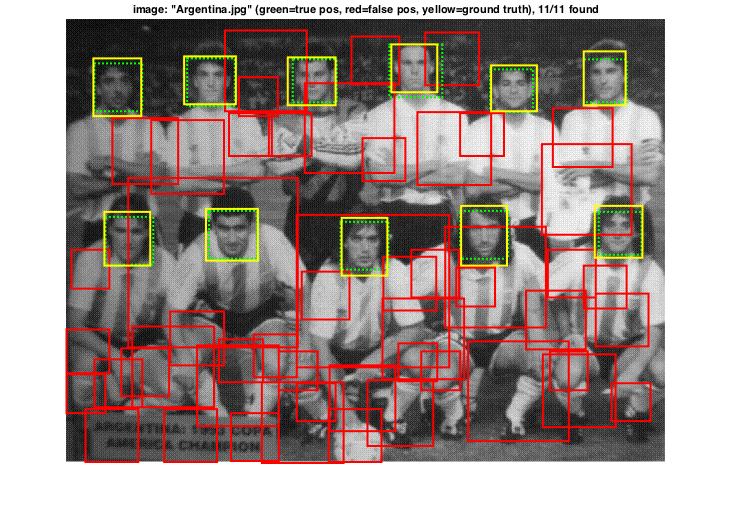

Extra credit

For the extra credit part, I added hard negative mining: after training the linear SVM on the negative training set, I added hard negative features to the training set and retrained. From the result below, we can see that the accuracy didn't increase much. However, compared to the previous result, the false positives decreased significantly, so hard negative mining improved the performance a lot. With more iterations, the performance can be improved further, but the running time becomes long.
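The selection step of each mining round can also be written without an explicit loop. The sketch below is an illustration on made-up data, not the project code: the four `features_neg` rows and the `w` and `b` values are hypothetical, and the rule is the same as in the code listing, where a negative counts as hard when its score exceeds -1.

```matlab
% Sketch: pick hard negatives with logical indexing and append copies of them.
features_neg = [-2 0 0;                  % made-up negative features, one per row
                 0 0 0;
                 1 1 1;
                -1 2 0];
w = [1; -0.5; 0.25];                     % hypothetical trained weights
b = -0.5;                                % hypothetical trained bias

scores = features_neg * w + b;           % -2.5, -0.5, 0.25, -2.5
is_hard = scores > -1;                   % inside the margin or misclassified
features_neg_aug = [features_neg; features_neg(is_hard, :)];

disp(size(features_neg_aug, 1));         % 6: the original 4 rows plus 2 hard ones
```

Duplicating the hard examples increases their weight in the next training round, which matches the observed drop in false positives; the enlarged matrix would then be passed to the SVM training call again.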