View alignment between widely spaced cameras for a general scene is a hard problem in computer vision. In a meeting room scenario, however, certain assumptions can be made that render the problem more tractable. In this project, a system has been designed that enhances the view of an omnidirectional camera placed at the center of the room with views obtained from other cameras in the room. Important features are detected and matched, and a warping function is computed in real time.

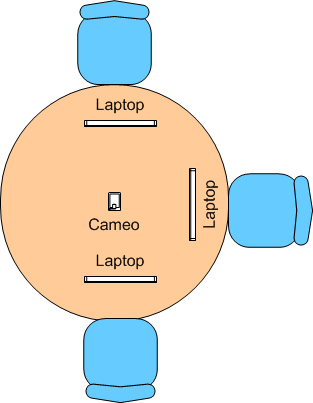

The meeting room contains a table and 3 chairs for the people attending the meeting. An omnidirectional camera is placed at the center of the table. This camera, called CAMEO, is a product from CMU that provides global sensing of activities in a meeting room. It consists of 4 Firewire cameras connected via a daisy chain. Only 3 of these cameras are used in the experiment, one for each of the 3 people seated in front of them.

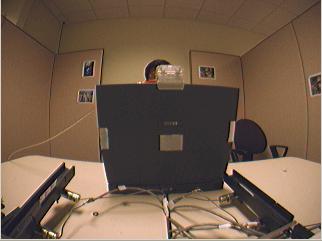

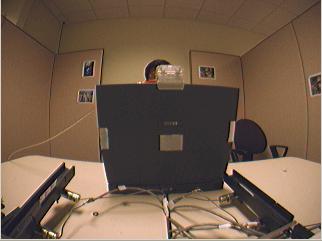

Global sensing of activities becomes difficult if the people in the meeting bring laptops, because the laptop screens block CAMEO's view of them. To recover this blocked information, a camera is fitted on each laptop. These cameras are also Firewire cameras.

The main problem in this setup is that information is retrieved from 4 sensors (CAMEO and the 3 laptop cameras) as separate video streams, even though they all capture the same event. We therefore want to combine these separate streams into one.

The additional sensors (laptop cameras) are needed to fill in the blocked portions of the scene. The system must detect the blocked regions in the CAMEO view and replace them with the images retrieved from the laptop cameras. The task therefore reduces to image registration between widely spaced cameras.

| Background Image | Current Image | Foreground Pixels |

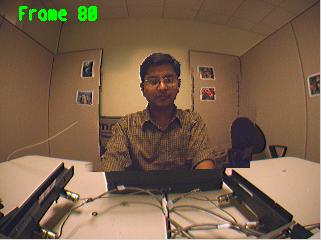

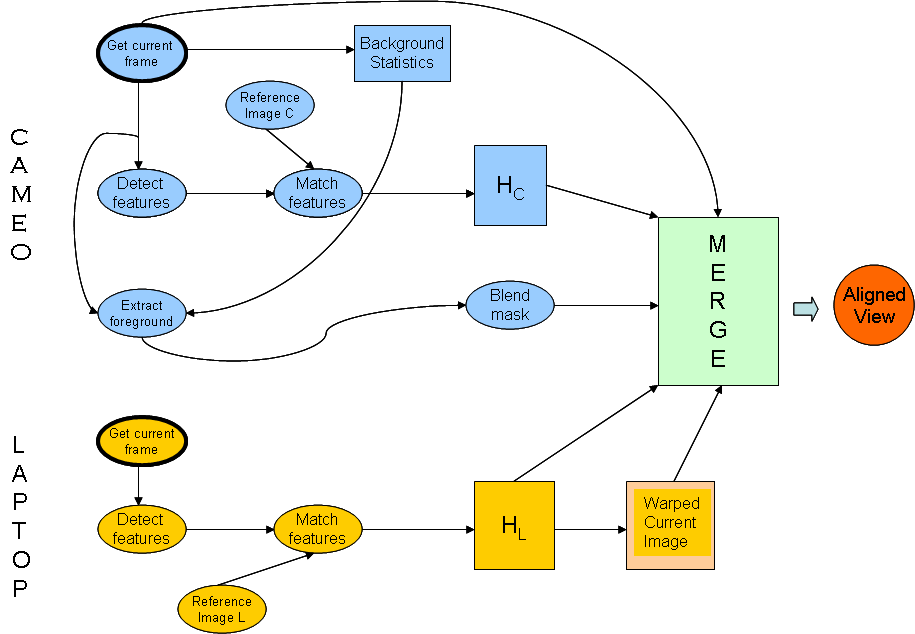

The background statistics are learned over a couple of hundred frames. These statistics comprise the mean intensity and the standard deviation of each pixel. We assume that the pixel colors vary according to a Gaussian distribution. When the user arrives with the laptop and places it in front of the camera, the Background Subtraction module clusters the foreground pixels into a single foreground blob. Pixels that have not been classified as foreground are copied to the final image as is.
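The background model described above can be sketched roughly as follows. This is a minimal illustration using NumPy, not the project's actual code: the function names, the threshold `k` (in standard deviations), and the `min_std` floor are all assumptions made for the example, and the clustering of foreground pixels into a single blob is left out.

```python
import numpy as np

def learn_background(frames):
    """Per-pixel mean and standard deviation over a stack of background frames."""
    stack = np.asarray(frames, dtype=np.float64)  # shape (N, H, W) or (N, H, W, C)
    return stack.mean(axis=0), stack.std(axis=0)

def foreground_mask(frame, mean, std, k=3.0, min_std=1.0):
    """Mark pixels that deviate from the mean by more than k standard deviations.

    k and min_std are assumed values, not taken from the project.
    """
    sigma = np.maximum(std, min_std)  # floor keeps near-constant pixels from firing on tiny noise
    deviates = np.abs(np.asarray(frame, dtype=np.float64) - mean) > k * sigma
    # For color images, a pixel counts as foreground if any channel deviates.
    return deviates.any(axis=-1) if deviates.ndim == 3 else deviates

def composite(frame, replacement, mask):
    """Copy non-foreground pixels as is; fill foreground pixels from `replacement`."""
    out = np.array(frame, copy=True)
    out[mask] = replacement[mask]
    return out
```

In this sketch, `replacement` stands in for the laptop camera image after it has been warped into the CAMEO view, so that `composite` only overwrites the pixels flagged as blocked.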

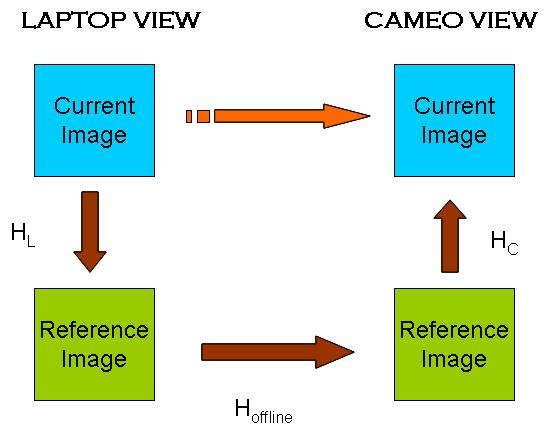

Reference images are taken from both the CAMEO camera and the laptop cameras, and features are pre-computed and stored for these reference views. At runtime, new features are detected in the current frames from both cameras. The newly detected features are matched against the pre-computed features of the reference images, and a warping function (homography) is computed. The current laptop frames are then warped and pasted onto the foreground regions of the CAMEO view to give the final result.
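To make the warping step concrete, here is a minimal sketch of estimating a homography from matched point pairs with the direct linear transform (DLT). It assumes the feature matches are already available as coordinate pairs. The page does not say which estimator the project uses, so the plain DLT below (no coordinate normalization, no outlier rejection) and the function names are assumptions for illustration only.

```python
import numpy as np

def estimate_homography(src, dst):
    """Estimate a 3x3 homography H with dst ~ H @ src from at least 4 matches."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        # Each match contributes two linear constraints on the 9 entries of H.
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]  # assumes h[2, 2] is nonzero (non-degenerate configuration)

def apply_homography(h, pts):
    """Map 2D points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]
```

With real feature matches, some correspondences will be wrong, so a robust wrapper such as RANSAC around this estimator would normally be needed; that is omitted here.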

| | Camera 1 | Camera 2 | Camera 3 |
| --- | --- | --- | --- |
| Raw Video Streams | video | video | video |
| Processed Video Streams | video | video | video |

A detailed description of the project can be found in the Project Report.