| Arno Schödl | Irfan Essa |

| College of Computing - GVU Center, Georgia Institute of Technology, Atlanta, GA 30332-0280 |

The video clips on this web page show the results described in our NIPS 2000 paper. They appear in the same order as in the paper. Your browser needs an MPEG file association to play them. Click on the images and the links in the text to play the videos.

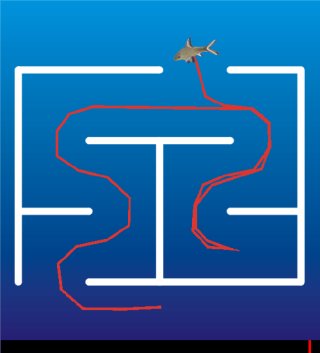

Maze. This example shows the fish swimming through a simple maze. The motion path was scripted in advance. It contains a lot of vertical motion, for which far fewer samples are available than for horizontal motion. The computation time is approximately 100x real time on an Intel Pentium II 450 MHz processor. The motion is smooth and looks mostly natural. The fish deviates from the scripted path by up to half of its length.

Interactive fish. Here the fish is made to follow the red dot, which is controlled interactively with the mouse. We use precomputed cost functions for the eight compass directions and insert five motion-constraint-free frame transitions whenever the cost function is changed.
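The switching logic described above can be sketched roughly as follows. This is only an illustration, not the code used for the videos. It assumes that the active cost function is the one whose compass direction points most closely from the fish toward the dot, and that "motion-constraint-free" means the directional constraint is simply ignored for the next five frame transitions. The names `nearest_compass`, `DirectionSwitcher`, and `FREE_TRANSITIONS` are hypothetical.

```python
import math

# Eight compass directions, counterclockwise from east (assumed ordering).
COMPASS = ["E", "NE", "N", "NW", "W", "SW", "S", "SE"]

# From the text: five constraint-free transitions after each switch.
FREE_TRANSITIONS = 5


def nearest_compass(fish_xy, dot_xy):
    """Return the compass direction pointing most closely from fish to dot.

    Assumes y grows upward; flip the sign of dy for screen coordinates.
    """
    dx = dot_xy[0] - fish_xy[0]
    dy = dot_xy[1] - fish_xy[1]
    angle = math.atan2(dy, dx)                  # radians in [-pi, pi]
    sector = round(angle / (math.pi / 4)) % 8   # 45-degree sectors
    return COMPASS[sector]


class DirectionSwitcher:
    """Tracks the active direction and the remaining free transitions."""

    def __init__(self):
        self.direction = None
        self.free_left = 0

    def update(self, fish_xy, dot_xy):
        """Call once per frame transition.

        Returns (direction, constrained). While constrained is False,
        the motion constraint would be skipped for that transition.
        """
        new_direction = nearest_compass(fish_xy, dot_xy)
        if new_direction != self.direction:
            # The cost function changes: start a run of free transitions.
            self.direction = new_direction
            self.free_left = FREE_TRANSITIONS
        constrained = self.free_left == 0
        if self.free_left > 0:
            self.free_left -= 1
        return self.direction, constrained
```

In this sketch, `direction` would index into the eight precomputed cost functions, and the `constrained` flag tells the frame selection step whether to apply the motion constraint for the current transition.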

Fish tank. The final animation combines multiple video textures to generate a fish tank. The fish are controlled interactively to follow the number outlines, but the control points are not visible in this animation. Animations like this one are easier to create using the scripting technique presented in the paper, because interactive control requires the user to adapt to the delays in the sprite response.

If you want to learn more about video textures in general, look at the results of our SIGGRAPH 2000 paper.